Unit Testing Best Practices: A Complete Guide to Writing Better Tests

Why Unit Testing Still Matters (And How to Do It Right)

When developers write solid unit tests, they create a foundation for reliable code. Good testing practices help teams catch bugs early and work more efficiently. But many teams struggle to implement effective testing that provides real value rather than just checking boxes. Let's explore how to make unit testing truly worthwhile.

The Power of Preventing Problems

Unit testing shines brightest when it helps teams catch issues before they reach production. By testing individual components in isolation, developers can spot and fix problems at the earliest stages. For instance, teams that run automated unit tests as part of a Jenkins or other CI/CD pipeline often see fewer defects reach later stages; reductions of around 20% are sometimes reported. Finding bugs this early saves significant time and money compared to fixing them after release, and it keeps small issues from growing into major headaches down the road.

Achieving Meaningful Code Coverage

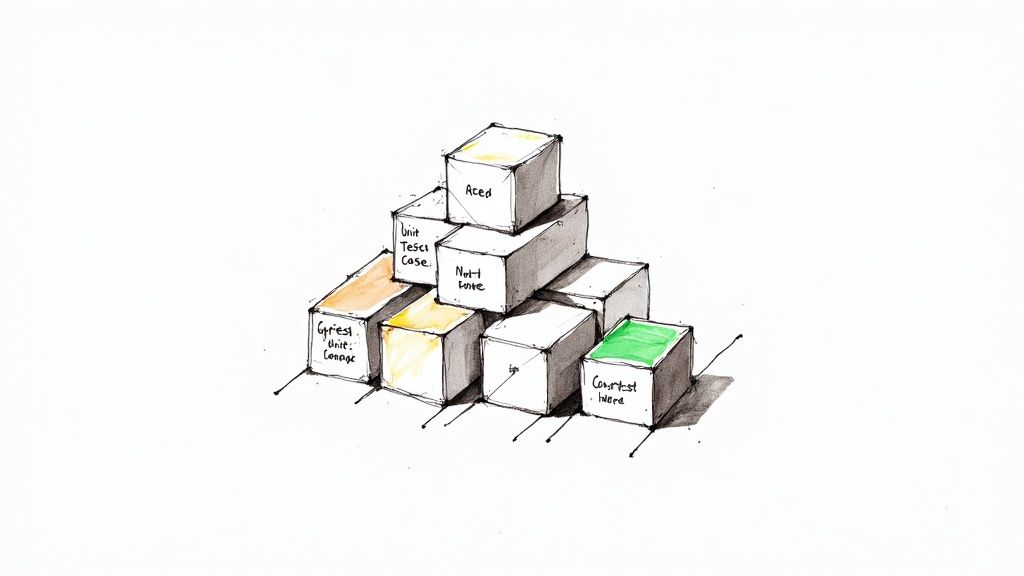

Writing effective unit tests means focusing on the right things, not just hitting arbitrary coverage numbers. A high coverage percentage (teams often target 80% or more) shows that most of the code is exercised by tests, but it says nothing about whether those tests check the right behavior, so the quality of the tests matters more than their quantity. Smart teams prioritize testing critical code paths and edge cases that could cause real problems. Tools like JaCoCo for Java, Istanbul for JavaScript, and Coverage.py for Python show which parts of the code need more testing attention, so effort goes where it delivers the most value.

Writing Tests That Help You Debug

The best unit tests do more than just verify code works - they help developers quickly identify what's wrong when things break. Clear, focused tests that follow the Arrange-Act-Assert pattern make debugging much faster and easier.

| Step | Description |
|---|---|
| Arrange | Set up the necessary preconditions and inputs for the test. |
| Act | Execute the unit under test with the arranged inputs. |
| Assert | Verify that the actual outcome matches the expected outcome. |

By keeping each test small and focused on a single assertion, developers create tests that clearly point to problems when they fail. This approach makes tests easier to understand and maintain over time. When unit tests provide clear, specific feedback about what went wrong, debugging becomes much more straightforward. This helps the whole team work more efficiently and keeps the codebase stable as it grows.

Making the Arrange-Act-Assert Pattern Work for You

Writing clear and maintainable unit tests is essential for any software project. One of the most effective approaches is the Arrange-Act-Assert (AAA) pattern, which provides a simple but powerful structure for organizing test code. Let's explore how you can use this pattern to write better tests.

Understanding the Core Components of AAA

The AAA pattern divides each test into three clear phases:

- Arrange: This is where you set up everything needed for the test. Just like setting up a stage before a performance, you create objects, set up variables, and prepare any mock dependencies. For example, when testing a rectangle's area calculation, you would create a `Rectangle` object with specific width and height values.
- Act: Here's where you run the actual code being tested. It's the main event: executing the method or function under test. In our rectangle example, this would be calling the `calculateArea()` method to get the result.
- Assert: Finally, you check if everything worked as expected. This phase compares the actual results with what you expected to see. For the rectangle test, you'd verify that the calculated area matches width times height.
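To make the three phases concrete, here is a minimal sketch of the rectangle test described above. The `Rectangle` class and the test method name are hypothetical, and to keep the example runnable without a test framework, the assertion is a plain check that throws `AssertionError`; in a real project the method would carry JUnit's `@Test` annotation and use `assertEquals`.

```java
// Hypothetical class under test; names follow the prose above.
final class Rectangle {
    private final double width;
    private final double height;

    Rectangle(double width, double height) {
        this.width = width;
        this.height = height;
    }

    double calculateArea() {
        return width * height;
    }
}

final class RectangleTest {
    // Plain-Java stand-in for a JUnit @Test method, so the sketch runs without a framework.
    static void calculateArea_withPositiveSides_returnsWidthTimesHeight() {
        // Arrange: create the object with known, easy-to-check dimensions
        Rectangle rectangle = new Rectangle(3.0, 4.0);

        // Act: call the single method under test
        double area = rectangle.calculateArea();

        // Assert: compare the actual result with the expected width * height
        if (area != 12.0) {
            throw new AssertionError("expected area 12.0 but was " + area);
        }
    }

    public static void main(String[] args) {
        calculateArea_withPositiveSides_returnsWidthTimesHeight();
        System.out.println("RectangleTest passed");
    }
}
```

The blank lines and phase comments are what make the structure visible at a glance: anyone reading the test can see what was set up, what was called, and what was checked.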

Practical Applications of the AAA Pattern

Let's look at a real example of how AAA makes tests clearer and more focused. Here's a test for an email validation function:

```java
public boolean isValidEmail(String email) {
    // ... validation logic ...
}
```

Using AAA, the test becomes straightforward:

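```java
// Assumes JUnit 5 (Jupiter); the method belongs inside a test class that can call isValidEmail.
import static org.junit.jupiter.api.Assertions.assertTrue;

import org.junit.jupiter.api.Test;

@Test
public void isValidEmail_withValidEmail_returnsTrue() {
    // Arrange
    String email = "test@example.com";

    // Act
    boolean isValid = isValidEmail(email);

    // Assert
    assertTrue(isValid);
}
```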

This structure clearly shows what's being tested and how, making it easy for anyone to understand the test's purpose.

Benefits of Using the AAA Pattern

The AAA pattern offers real advantages for development teams. When tests fail, the clear structure helps you quickly pinpoint the problem - is it in the setup, the execution, or the verification? This focused approach saves valuable debugging time.

Using AAA consistently also helps teams work better together, since everyone follows the same testing approach. Some reports suggest that good testing practices, especially when automated in continuous integration, can reduce failures by up to 20%.

By keeping tests organized and clear, the AAA pattern helps teams maintain high code quality and catch issues before they reach production. This structured approach to testing proves particularly valuable as projects grow larger and more complex.

Beyond the Numbers: Meaningful Code Coverage

After exploring the Arrange-Act-Assert pattern, let's discuss what actually matters for code coverage. While many teams focus on hitting high coverage percentages, the real value lies in testing what's most important. Let's examine how successful teams approach coverage in a way that improves code quality rather than just chasing numbers.

Identifying Critical Paths and Edge Cases

Think of your codebase like a city's road network. Some roads, like major highways, handle most of the traffic - these are your critical paths. Other areas see much less activity, like quiet side streets. The same applies to your code - certain functions and modules handle core business logic and are used constantly, while others play supporting roles. Smart testing means focusing your energy on these high-traffic areas first. Beyond the common cases, you'll want to test edge scenarios that could cause problems. For example, when testing a function that validates user input, don't just verify it works with normal text - also check how it handles empty strings, special characters, or extremely long inputs. These unusual cases often reveal bugs that basic testing misses.
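As an illustration of edge-case testing, the sketch below pairs a hypothetical, deliberately simple email validator with checks for the unusual inputs mentioned above: empty strings, special characters, and extremely long values. The validation rules and the 254-character limit are assumptions made for this example, not a complete implementation of email address syntax.

```java
// Hypothetical validator with deliberately simple rules, for illustration only;
// it is not a complete implementation of email address syntax.
final class EmailValidator {
    // Assumed upper bound for this sketch.
    static final int MAX_LENGTH = 254;

    static boolean isValidEmail(String email) {
        if (email == null || email.isEmpty() || email.length() > MAX_LENGTH) {
            return false;
        }
        for (char c : email.toCharArray()) {
            if (Character.isWhitespace(c)) {
                return false; // reject spaces, tabs, newlines anywhere
            }
        }
        int at = email.indexOf('@');
        // Require exactly one '@' with text on both sides
        if (at <= 0 || at != email.lastIndexOf('@') || at == email.length() - 1) {
            return false;
        }
        String domain = email.substring(at + 1);
        int dot = domain.lastIndexOf('.');
        // Require a dot in the domain that is neither its first nor its last character
        return dot > 0 && dot < domain.length() - 1;
    }
}

final class EmailValidatorEdgeCaseTest {
    static void check(boolean condition, String description) {
        if (!condition) {
            throw new AssertionError("failed: " + description);
        }
    }

    public static void main(String[] args) {
        // Common case
        check(EmailValidator.isValidEmail("test@example.com"), "normal address is accepted");
        // Edge cases that basic testing often misses
        check(!EmailValidator.isValidEmail(null), "null is rejected");
        check(!EmailValidator.isValidEmail(""), "empty string is rejected");
        check(!EmailValidator.isValidEmail("user name@example.com"), "whitespace is rejected");
        check(!EmailValidator.isValidEmail("a@b@example.com"), "second '@' is rejected");
        check(!EmailValidator.isValidEmail("test@localhost"), "domain without a dot is rejected");
        String tooLong = "a".repeat(EmailValidator.MAX_LENGTH) + "@example.com";
        check(!EmailValidator.isValidEmail(tooLong), "extremely long input is rejected");
        System.out.println("all edge-case checks passed");
    }
}
```

Each `check` carries a description, so a failure names the exact edge case that broke instead of a generic "false".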

Utilizing Coverage Tools Effectively

Tools like JaCoCo, Istanbul, or Coverage.py help track which parts of your code have tests and which don't. This information helps you spot gaps in your test suite. But don't get caught up in hitting 100% coverage everywhere. Sometimes getting that last 20% coverage means writing many tests for simple code that rarely changes. Focus instead on thoroughly testing your core logic - for example, achieving 80% coverage of critical features often provides better value than reaching 100% coverage of everything. The key is understanding where additional tests will actually improve reliability versus where they're just padding statistics.

Balancing Coverage Goals With Development Velocity

Testing takes time, and trying to test everything extensively can seriously slow down development. This often frustrates developers and makes them resist writing tests altogether. A better approach is to start by testing the most complex and important code paths first. Less critical areas can have basic tests initially, with more comprehensive coverage added over time as resources allow. This keeps development moving while still protecting the most important functionality. As the project matures and you have more breathing room, you can gradually expand test coverage to other areas. The goal is steady improvement without creating bottlenecks.

By focusing on strategic testing rather than arbitrary coverage goals, teams can build reliable software while maintaining good development speed. This practical approach helps everyone understand where testing efforts matter most, leading to higher quality code that actually serves users better. The key is finding the right balance between thorough testing and efficient development.

Crafting Tests That Actually Help Debug Issues

Good unit tests do more than just verify code - they help you quickly find and fix bugs when things go wrong. By designing tests thoughtfully, you can create a test suite that points directly to issues rather than leaving you scratching your head. Here's how to write tests that make debugging easier and faster.

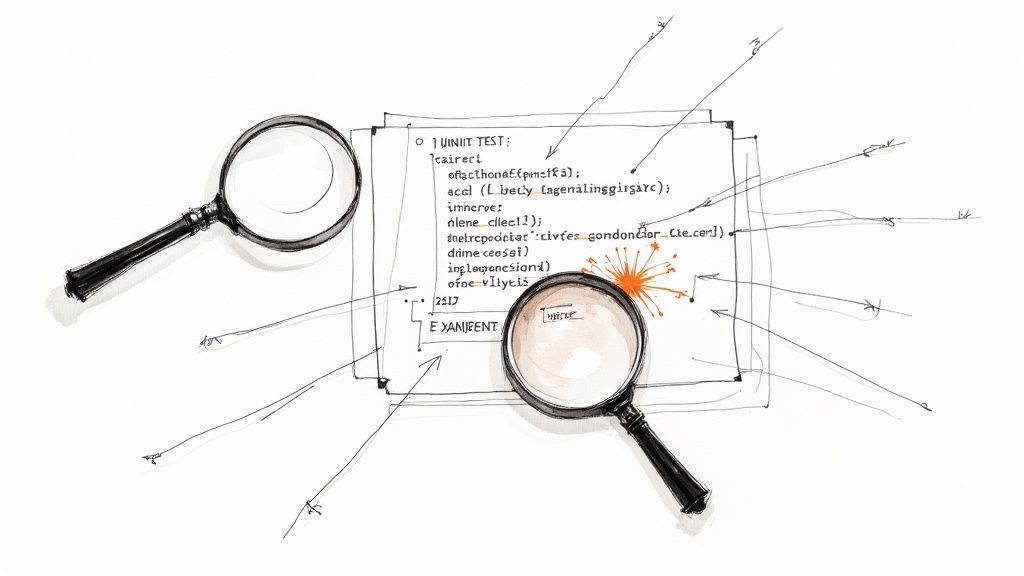

Writing Focused Unit Tests

The key to debuggable tests is keeping each test focused on one specific thing. Instead of cramming multiple checks into a single test, break them out separately. For example, when testing a rectangle area calculator, write individual tests for positive numbers, zero, and negative inputs. This way, when a test fails, you know exactly which case is broken. Think of it like using a magnifying glass - a focused test zooms right in on the problem spot instead of making you search through a maze of scenarios.
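The rectangle example above might look like this as three separate tests. This is a sketch: the rule that negative sides are rejected with `IllegalArgumentException` is an assumption, as are the class and method names, and the tests are plain static methods so they run without JUnit.

```java
// Hypothetical Rectangle for this sketch; rejecting negative sides is an assumed rule.
final class Rectangle {
    private final double width;
    private final double height;

    Rectangle(double width, double height) {
        if (width < 0 || height < 0) {
            throw new IllegalArgumentException("sides must not be negative");
        }
        this.width = width;
        this.height = height;
    }

    double calculateArea() {
        return width * height;
    }
}

final class RectangleFocusedTests {
    // One behavior per test: a failure names the exact broken case.
    static void calculateArea_withPositiveSides_returnsProduct() {
        double area = new Rectangle(3.0, 4.0).calculateArea();
        if (area != 12.0) throw new AssertionError("positive sides: expected 12.0 but was " + area);
    }

    static void calculateArea_withZeroWidth_returnsZero() {
        double area = new Rectangle(0.0, 5.0).calculateArea();
        if (area != 0.0) throw new AssertionError("zero width: expected 0.0 but was " + area);
    }

    static void constructor_withNegativeWidth_throws() {
        try {
            new Rectangle(-1.0, 5.0);
            throw new AssertionError("negative width: expected IllegalArgumentException");
        } catch (IllegalArgumentException expected) {
            // expected: invalid input is rejected
        }
    }

    public static void main(String[] args) {
        calculateArea_withPositiveSides_returnsProduct();
        calculateArea_withZeroWidth_returnsZero();
        constructor_withNegativeWidth_throws();
        System.out.println("all focused tests passed");
    }
}
```

If `constructor_withNegativeWidth_throws` fails, its name and message point straight at input validation, without any need to look at the other two cases.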

Structuring Tests for Clarity

The way you organize your tests makes a big difference in how easily you can track down bugs. Following the Arrange-Act-Assert pattern gives each test a clear structure: first set up the test conditions, then run the code you're testing, and finally check if it worked correctly. This clear separation helps pinpoint issues - if the setup phase fails, you know the problem is in your test configuration rather than the actual code being tested. Well-structured tests save time by showing you exactly where to look when something breaks.

Using Assertions Effectively

Smart use of assertions makes test failures much more informative. Rather than basic true/false checks, use assertions that provide helpful details when they fail. For instance, instead of just asserting result > 0, use assertEquals(expectedValue, result), optionally with a message explaining what you're checking: assertEquals("Area calculation incorrect", expectedValue, result). That argument order is JUnit 4's; JUnit 5 takes the message as the last argument, as in assertEquals(expectedValue, result, "Area calculation incorrect"). When a test fails, these detailed messages often tell you what went wrong without requiring deeper investigation.
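To show what a descriptive failure message buys you, here is a framework-free sketch that contrasts a bare true/false check with an equality assertion that reports both values. The helper names and message format are hypothetical; JUnit's own `assertEquals` already produces similar expected/actual text.

```java
// Framework-free sketch; helper names and message format are hypothetical.
final class MessageAssertions {
    // Bare true/false check: on failure it reports nothing about the values involved.
    static void assertTrueOnly(boolean condition) {
        if (!condition) {
            throw new AssertionError();
        }
    }

    // Argument order mirrors JUnit 4's assertEquals(message, expected, actual).
    static void assertEqualsWithMessage(String message, double expected, double actual) {
        if (Double.compare(expected, actual) != 0) {
            throw new AssertionError(message + ": expected <" + expected + "> but was <" + actual + ">");
        }
    }

    public static void main(String[] args) {
        double expectedValue = 12.0;
        double result = 11.0; // simulates a buggy area calculation

        try {
            assertTrueOnly(result == expectedValue);
        } catch (AssertionError e) {
            System.out.println(e.getMessage()); // prints: null
        }

        try {
            assertEqualsWithMessage("Area calculation incorrect", expectedValue, result);
        } catch (AssertionError e) {
            // prints: Area calculation incorrect: expected <12.0> but was <11.0>
            System.out.println(e.getMessage());
        }
    }
}
```

The first failure tells you only that something was false; the second tells you what was being checked and by how much it was off.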

Managing Test Data

The test data you choose has a big impact on how easily you can debug issues. Simple, predictable test inputs make it much easier to trace what happened and understand what should have happened. Skip complex or random test data that can mask the source of errors. Use small, clear examples that you can easily examine and modify. When tests fail, you want to be able to quickly recreate the problem and identify exactly what conditions caused it. Consider using test doubles like mocks to isolate the code you're testing from external dependencies - this reduces the number of moving parts that could cause problems. With well-chosen test data and proper isolation, your tests become precise tools for finding and fixing bugs efficiently.
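Here is a minimal sketch of isolating a unit from an external dependency with a hand-written stub. The `TaxRateSource` interface and `PriceCalculator` class are hypothetical; mocking libraries such as Mockito can generate test doubles like this, but a lambda keeps the example dependency-free.

```java
// Hypothetical external dependency; in production it might call a remote tax service.
interface TaxRateSource {
    double rateFor(String region);
}

// Hypothetical class under test: it depends only on the interface, not on a real service.
final class PriceCalculator {
    private final TaxRateSource taxRates;

    PriceCalculator(TaxRateSource taxRates) {
        this.taxRates = taxRates;
    }

    // Gross price in cents, rounded to the nearest cent.
    long grossPriceCents(long netCents, String region) {
        return Math.round(netCents * (1.0 + taxRates.rateFor(region)));
    }
}

final class PriceCalculatorTest {
    static void grossPriceCents_withStubbedTenPercentRate_addsTax() {
        // Arrange: the stub returns a fixed, easy-to-reason-about rate; no network involved
        TaxRateSource stub = region -> 0.10;
        PriceCalculator calculator = new PriceCalculator(stub);

        // Act
        long gross = calculator.grossPriceCents(1_000, "any-region");

        // Assert
        if (gross != 1_100) {
            throw new AssertionError("expected 1100 but was " + gross);
        }
    }

    public static void main(String[] args) {
        grossPriceCents_withStubbedTenPercentRate_addsTax();
        System.out.println("PriceCalculatorTest passed");
    }
}
```

Because the stub always returns 0.10, the expected value (1,100 cents) can be worked out by hand. That is exactly the kind of simple, predictable test data described above.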

Making Test-Driven Development Actually Work

Many developers struggle to move beyond basic Test-Driven Development (TDD) examples into real-world usage. While the concept sounds great in theory, putting it into daily practice often proves challenging. This section explores practical ways to implement TDD successfully, based on insights from teams who have made it work. We'll look at realistic test-first approaches, when to be flexible with the rules, and how to handle existing codebases.

Overcoming Resistance to TDD

Getting team members on board with TDD is often the first major challenge. Many developers initially view it as unnecessary extra work that slows them down. The key is addressing these concerns directly with concrete evidence. Show the team specific examples of how TDD reduces time spent debugging and creates more stable code. Share real stories from other development teams who have successfully adopted TDD, including metrics showing improved code quality.

Start with small wins rather than forcing wholesale adoption. Pick a new feature or small module where the team can try TDD in a contained way. This gives developers a chance to experience the benefits firsthand without feeling overwhelmed by changing their entire workflow at once. As they see positive results in their own work, they'll be more likely to embrace TDD naturally rather than resisting it as a top-down mandate.

Practical TDD Implementation

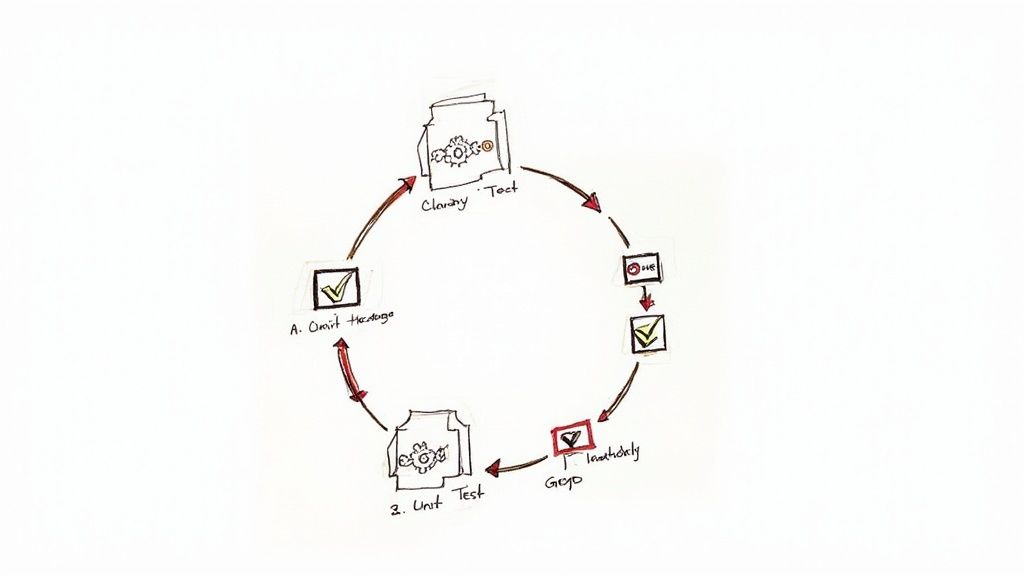

While the Red-Green-Refactor cycle forms the backbone of TDD, real projects need flexibility in how it's applied. For example, when working with older codebases, writing tests first isn't always feasible. A step-by-step approach often works better - write tests for new code while gradually adding test coverage for existing code as you work on it. This lets you improve test coverage over time without blocking development.
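One common way to add tests to existing code as you work on it is a characterization test (a technique name not used in this article): before changing a method, record what it currently returns for a handful of inputs and pin those results. The `LegacyShipping` class and its pricing rules below are hypothetical.

```java
// Hypothetical legacy code with no tests; nobody is sure the rules are "right".
final class LegacyShipping {
    static int costCents(int weightGrams) {
        if (weightGrams <= 0) return 0;
        int base = 500;
        int extra = ((weightGrams - 1) / 1000) * 250; // 250 cents per started extra kilogram
        return weightGrams > 20_000 ? base + extra + 1_000 : base + extra;
    }
}

final class LegacyShippingCharacterizationTest {
    // {weight in grams, cost in cents}: observed by running the current code, not taken from a spec.
    static final int[][] OBSERVED = {
        {0, 0}, {1, 500}, {1_000, 500}, {1_001, 750}, {20_000, 5_250}, {20_001, 6_500}
    };

    public static void main(String[] args) {
        for (int[] row : OBSERVED) {
            int actual = LegacyShipping.costCents(row[0]);
            if (actual != row[1]) {
                throw new AssertionError("weight " + row[0] + "g: expected " + row[1] + " but was " + actual);
            }
        }
        System.out.println("current behavior pinned");
    }
}
```

These tests don't claim the current behavior is correct; they only make any change in behavior visible, which gives you a safety net when you refactor.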

Being pragmatic about the "tests first" rule is also important. In some cases, like when exploring a new algorithm, it makes sense to write the code first and add tests immediately after while the implementation details are fresh. The important part is ensuring you write those tests promptly, so they accurately verify the code's behavior. Think of TDD as a helpful guide rather than an inflexible set of rules.

Measuring the Impact of TDD

Clear evidence helps maintain long-term commitment to TDD. Track concrete metrics like the number of bugs reaching production, time spent debugging, and how often code needs to be changed. Teams that adopt TDD consistently often report production defects dropping by 20% or more. This data helps justify the upfront time investment, keeps teams motivated to continue with TDD, and highlights areas where your TDD approach could improve. Following solid unit testing practices alongside TDD creates a strong foundation for building reliable software. When teams focus on practical implementation and measuring results, they can get the full benefits of TDD while creating better software more efficiently.

Automating Tests Without the Headaches

Good unit tests only deliver real value when they're part of an effective automated process. In this section, we'll explore practical strategies for integrating unit tests into modern CI/CD workflows that work reliably - no more fighting with flaky pipelines or unreliable automation. Let's focus on what actually works in real-world development environments.

Streamlining Your CI/CD Pipeline With Unit Tests

Think of your CI/CD pipeline like a quality control assembly line, with unit tests checking each component before it moves forward. When integrated properly into platforms like Jenkins, GitLab CI, or Azure DevOps, these tests catch issues early, which saves significant time and money. A frequently quoted industry estimate holds that fixing a bug during development can cost up to 100 times less than fixing it after release.

The key is configuring your pipeline to run tests automatically on every code push. This creates an immediate feedback loop that flags any regressions or breaking changes before they can impact other stages of development. With consistent automated testing in place, teams spend less time on manual checks while maintaining high code quality standards.

Managing Test Environments and Data for Reliable Automation

Just like scientists need controlled lab conditions, automated tests require stable, predictable environments to produce reliable results. When test environments are inconsistent, you get those frustrating "flaky" tests that pass and fail randomly - making it nearly impossible to identify real issues.
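Not all flakiness comes from the surrounding environment. Code that reads the system clock directly can pass or fail depending on when it runs. A minimal sketch, assuming a hypothetical `TrialPeriod` class, shows how injecting `java.time.Clock` and using `Clock.fixed` in tests makes the result the same on every run.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;

// Hypothetical class: it takes a Clock instead of reading the system time directly.
final class TrialPeriod {
    private final Clock clock;
    private final LocalDate trialEnd;

    TrialPeriod(Clock clock, LocalDate trialEnd) {
        this.clock = clock;
        this.trialEnd = trialEnd;
    }

    boolean isExpired() {
        return LocalDate.now(clock).isAfter(trialEnd);
    }
}

final class TrialPeriodTest {
    // A fixed clock makes "today" identical on every machine and every run.
    static final Clock FIXED = Clock.fixed(Instant.parse("2024-01-01T12:00:00Z"), ZoneOffset.UTC);

    public static void main(String[] args) {
        if (!new TrialPeriod(FIXED, LocalDate.of(2023, 12, 31)).isExpired()) {
            throw new AssertionError("trial ending yesterday should be expired");
        }
        if (new TrialPeriod(FIXED, LocalDate.of(2024, 1, 1)).isExpired()) {
            throw new AssertionError("trial ending today should not be expired");
        }
        System.out.println("clock-dependent tests passed");
    }
}
```

In production the class would receive `Clock.systemUTC()` or `Clock.systemDefaultZone()`; only the tests pin the time.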

The solution starts with maintaining separate environments for development, staging, and production that closely mirror each other. Equally important is how you handle test data - using techniques like data factories and test data generators helps create consistent, repeatable datasets that reduce unexpected failures. Clear environment separation combined with reliable test data forms the foundation for dependable automation.
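A test data factory can be as small as the sketch below. The `Customer` class and `CustomerFactory` are hypothetical. The idea it illustrates is predictable defaults, per-test overrides, and a fixed `Random` seed so that generated data is identical on every run.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

// Hypothetical domain object used only for this sketch.
final class Customer {
    final String id;
    final String email;
    final int age;

    Customer(String id, String email, int age) {
        this.id = id;
        this.email = email;
        this.age = age;
    }
}

// Hypothetical factory: predictable defaults, per-test overrides, seeded generation.
final class CustomerFactory {
    private int counter = 0;

    // Each call returns a distinct but predictable customer: cust-1, cust-2, ...
    Customer aCustomer() {
        counter++;
        return new Customer("cust-" + counter, "customer" + counter + "@example.com", 30);
    }

    // Override only the field the test cares about; everything else stays default.
    Customer aCustomerAged(int age) {
        Customer base = aCustomer();
        return new Customer(base.id, base.email, age);
    }

    // A fixed seed yields the same "random" ages (18 to 77) on every run.
    static List<Customer> generate(int count, long seed) {
        Random random = new Random(seed);
        CustomerFactory factory = new CustomerFactory();
        List<Customer> customers = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            customers.add(factory.aCustomerAged(18 + random.nextInt(60)));
        }
        return customers;
    }
}
```

A test that needs an underage customer can call `aCustomerAged(16)` and state only the detail that matters, which keeps failures easy to trace.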

Maintaining Automated Test Suites at Scale

As projects grow larger, test suites can quickly become difficult to maintain without proper organization. Success at scale requires clear structure - group tests logically, use consistent naming conventions, and review regularly. This makes it much easier to locate and update specific tests as your codebase evolves.

Performance also becomes critical with larger test suites. Tools like pytest-xdist for Python enable parallel test execution, dramatically reducing overall run time. This keeps the feedback loop quick and prevents testing from becoming a development bottleneck.

By focusing on these core practices - reliable environments, consistent test data, good organization, and efficient execution - teams can build automated testing systems that genuinely improve their development process. The result is better software delivered more quickly.

Want to simplify your CI/CD pipelines and improve your team's development workflow? Check out Mergify for a powerful automation solution that helps you manage pull requests, automate merges, and keep your codebase stable.