How to Improve Code Quality: Real Strategies That Work

Why Code Quality Determines Your Project's Fate

Shipping features quickly is a rush, right? But I've chatted with enough engineering leads to know the dark side: sacrificing code quality creates a technical debt monster. It's the "quick fix" that turns into late-night debugging and a whole lot of frustration. How many times have you watched a tiny issue snowball into a major outage? This isn't just bad luck; it's the direct result of neglecting quality. Small compromises accumulate, leading to instability, security flaws, and even burnout. I've seen talented developers leave projects simply because they were tired of wrestling with a crumbling codebase.

Let's talk real costs. Poor code quality doesn't just affect the technical side. It hits your team's morale, project timelines, and the bottom line. Think about the time spent debugging, fixing bugs, and rewriting messy code. That's time not spent on new features, and it adds up fast. Plus, poor quality erodes customer trust, leading to bad reviews and less user engagement. Nobody wants that.

The Real Cost of Poor Quality

To truly grasp the difference quality makes, let's compare the two side by side. The following table summarizes the impact of poor vs. high-quality code across several key metrics.

| Metric | Poor Code Quality | High Code Quality | Impact |
|---|---|---|---|
| Development Time | Slowed by frequent bug fixes and rework | Faster due to cleaner, easier-to-understand code | Significant delays vs. faster delivery |
| Maintenance Costs | High due to complex and difficult-to-maintain code | Lower due to modular and well-documented code | Increased expenses vs. cost savings |
| Security Risks | Higher vulnerability to exploits due to coding errors and lack of security best practices | Lower risk due to secure coding practices and regular audits | Increased potential for breaches vs. enhanced security |
| Customer Satisfaction | Lower due to bugs, performance issues, and unreliable software | Higher due to stable, performant, and reliable software | Negative reviews and churn vs. positive user experience |
| Team Morale | Lower due to frustration with constant bug fixing and technical debt | Higher due to a sense of accomplishment and pride in quality work | Burnout and attrition vs. increased motivation |

As you can see, the long-term effects on everything from development speed to customer happiness are substantial. Investing in quality upfront is an investment in your project's future.

Shifting the Mindset: Quality as a Feature

Companies like Airbnb and Spotify get it. Quality isn't a bonus; it's a core feature. They've built cultures where quality is baked into every step of development. This means treating code reviews as collaborative learning opportunities, investing in solid testing frameworks (like automated testing with tools such as Selenium), and embracing automation. It also means recognizing the growing importance of quality with the rise of low-code development. By 2024, low-code is projected to power over 65% of app development. This demands robust code quality metrics and tools to ensure speed doesn't compromise quality. With increasing adoption of low-code and cloud-based development (already at 14% and projected to grow to 50% soon, according to Kissflow), focusing on clean, maintainable, and testable code from the start is more important than ever.

Investing in Quality Upfront Pays Off

This isn't about chasing unrealistic perfection. It's about realizing that prioritizing quality upfront saves you from the inevitable headaches and firefighting that come from neglecting it. Think of it as preventative maintenance. By taking the time to do things right the first time, you avoid the dreaded 2 AM calls and endless debugging marathons that drain your team and derail your project. A commitment to quality means a more stable, secure, and ultimately, successful project.

Code Reviews That Build Better Code and Better Teams

Code reviews. Sometimes those two words bring up images of productive collaboration, and sometimes… well, not so much. The difference? How you approach them. Done right, code reviews are much less about nitpicking and much more about building better code and stronger teams. I’ve personally seen how a healthy review culture can transform a team from hesitant to excited about sharing their work and learning together. At GitHub, for example, senior developers often frame feedback as questions like, “What led you to this approach?” This encourages junior developers to explain their reasoning, creating a learning opportunity for everyone.

Creating a Culture of Collaboration

How do you shift from dreaded critiques to valuable conversations? It all begins with accountability. Take a look at this resource on Accountability; it explains why it's the foundation of quality code. When everyone feels ownership of the code, reviews become a shared responsibility. Clear expectations around code style and best practices are also key. This removes ambiguity and allows for more focused feedback. The ultimate aim is improving code quality, not simply pointing out flaws.

Feedback That Fosters Growth

I once got a review comment that just said, “This logic seems convoluted.” Honestly, it felt like a personal attack. A more helpful approach? “I’m having a bit of trouble understanding the logic here. Could you walk me through your thinking? I’m wondering if approach X might be simpler.” That type of feedback opens a dialogue. It focuses on understanding the code, not criticizing the person who wrote it. For more on this, check out this guide on code review best practices. Another great tip is to highlight what's working along with areas for improvement. For example, “This function is well-documented and easy to read. I did, however, notice a potential edge case that could be problematic.”

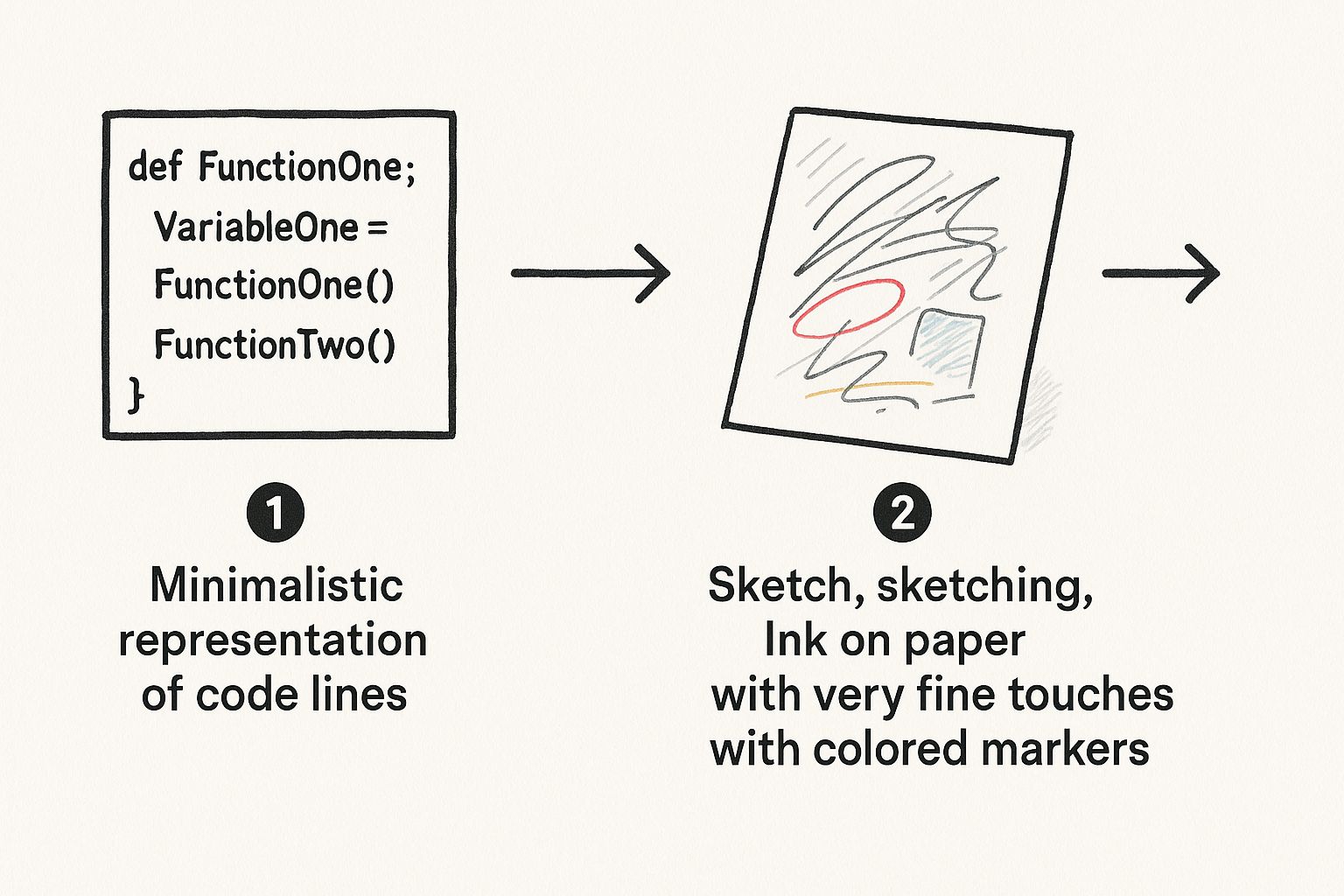

This infographic illustrates how clean, well-structured code, with consistent naming conventions and indentation, improves readability and maintainability. Think of it like this: neat, organized text is easier to understand, right? The same goes for code. This clear structure really boosts code quality, making it easier to debug, maintain, and collaborate on.

To help guide your reviews, here’s a handy checklist:

Code Review Best Practices Checklist: Essential elements to check during code reviews, organized by priority and impact on code quality.

| Review Area | What to Check | Red Flags | Time Investment |
|---|---|---|---|
| Functionality | Does the code work as intended? Are all requirements met? Are there any unexpected behaviors? | Broken tests, illogical flow, unmet requirements | High |
| Logic | Is the code's logic clear, concise, and efficient? Are there any potential performance bottlenecks? | Overly complex code, redundant calculations, inefficient algorithms | High |
| Security | Are there any potential security vulnerabilities? Does the code handle sensitive data appropriately? | Unvalidated inputs, hardcoded credentials, insecure dependencies | High |
| Style | Does the code follow established style guidelines? Is it consistently formatted and easy to read? | Inconsistent indentation, unclear naming conventions, messy code | Medium |
| Documentation | Is the code adequately documented? Are comments clear and helpful? | Lack of comments, unclear explanations, outdated documentation | Medium |
| Testing | Are there sufficient tests covering different scenarios? Do the tests effectively catch potential errors? | Insufficient test coverage, ineffective tests, broken tests | High |

Remember this checklist is a starting point – tailor it to your specific project needs. Focusing on these key areas can really make a difference.

Handling Disagreements Constructively

Disagreements during code reviews are bound to happen. The key is to approach them respectfully and focus on finding the best solution. I've seen heated debates transform into productive discussions just by changing the language from “You’re wrong” to “I’m concerned this approach might lead to X.” This instantly shifts the tone from accusatory to collaborative. When disagreements do arise, offer alternatives, clearly explain your reasoning, and be open to compromise. Sometimes, the best way to resolve an issue is to step away from the comments and have a quick in-person chat.

Balancing Thoroughness with Velocity

Thorough reviews are important, but they shouldn’t create bottlenecks. At Stripe, I learned the value of balancing review depth with development speed. Focusing on the most critical aspects – logic, security, and performance – makes reviews effective without slowing things down. Setting time limits for reviews can also help. Another effective strategy is to break large pull requests into smaller, more manageable chunks. This makes them easier to review and reduces the chance of overlooking something important. Combining these techniques lets you build a review process that catches real problems without sacrificing development velocity.

Building Your Arsenal of Quality-Checking Tools

Static analysis tools are indispensable for boosting code quality. They're like having an extra pair of eyes constantly scanning your code, catching those pesky errors we all make, especially when deadlines loom. Choosing the right tools from the vast array available can be daunting, so let's break down some essential tools developers use daily, from configuring ESLint to leveraging SonarQube for deeper insights.

Essential Static Analysis Tools

ESLint is a fantastic first line of defense for JavaScript. It's highly adaptable, able to catch syntax errors, enforce coding styles, and even identify potential logic issues. I've personally witnessed teams significantly improve code consistency simply by implementing a shared ESLint configuration. For other languages, tools like Pylint for Python, RuboCop for Ruby, and PHPStan for PHP provide comparable advantages. These tools go beyond mere style enforcement; they actively prevent bugs before they become major problems. Remember, proper configuration is essential. Begin with a preset, and then tailor it to align with your specific coding conventions and project requirements.

Integrating SonarQube for Deeper Insights

SonarQube takes code analysis a step further. It delves deeper than surface-level issues, examining code complexity, potential security vulnerabilities, and even code duplication. In my experience, SonarQube has unveiled hidden technical debt we were completely unaware of. This allowed us to prioritize refactoring and address potential problems before they impacted our users. SonarQube's dashboards offer invaluable metrics for tracking code quality trends over time, providing clear visibility into the impact of your quality improvement efforts and highlighting areas needing attention.

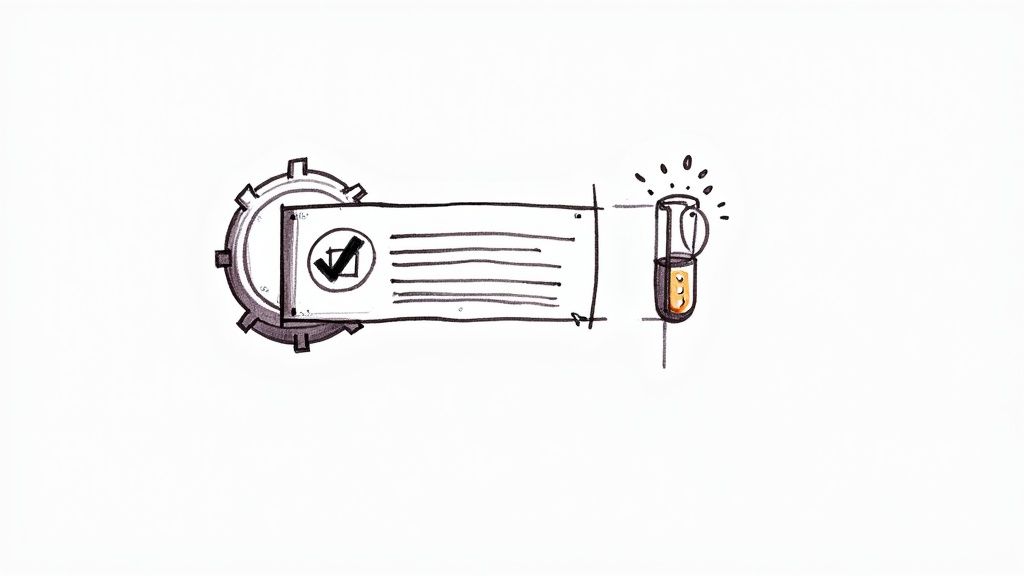

This screenshot displays a code quality dashboard, providing a centralized overview of important metrics like code complexity, style issues, and test coverage. These dashboards make it significantly easier to identify areas for improvement and track your progress. You can quickly assess the health of your codebase and prioritize necessary fixes, which is particularly useful in larger projects. Speaking of efficiency, AI and ML are increasingly used in code quality assessment. These technologies automate code analysis, identify potential bugs, and even predict areas ripe for refactoring. Tools like Codacy's quality dashboards offer metrics like static analysis problem counts and unit test coverage, empowering you to proactively address quality issues.

Finding the Right Balance

The key is to strike a balance. Overwhelming your team with warnings can lead to them being ignored. I've seen teams successfully implement custom filters and rulesets to prioritize critical issues, allowing developers to focus on what truly matters. Companies like Shopify have effectively integrated multiple analysis tools without overwhelming their developers by meticulously configuring notifications, utilizing thresholds to minimize noise, and concentrating on actionable feedback.
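To make the idea of thresholds and custom filters concrete, here is a minimal Python sketch. The severity scale, the `Finding` record, and the `actionable` helper are all hypothetical names invented for illustration; real analyzers define their own severity levels and report formats.

```python
from dataclasses import dataclass

# Hypothetical severity scale; real tools define their own levels.
SEVERITY_RANK = {"info": 0, "minor": 1, "major": 2, "critical": 3}


@dataclass(frozen=True)
class Finding:
    tool: str      # which analyzer reported the issue
    rule: str      # rule identifier as reported by that tool
    severity: str  # one of the SEVERITY_RANK keys
    path: str      # file the finding refers to


def actionable(findings, min_severity="major", muted_rules=frozenset()):
    """Keep findings at or above the threshold, skipping muted rules."""
    floor = SEVERITY_RANK[min_severity]
    return [
        f for f in findings
        if SEVERITY_RANK[f.severity] >= floor and f.rule not in muted_rules
    ]
```

Only what survives the filter would be surfaced to developers; everything else can still be kept in a report for later cleanup.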

Tailoring Your Toolchain

The ideal set of tools depends heavily on your project’s context. If you're working with a large legacy codebase, tools that identify and manage technical debt are crucial. For fast-paced projects, tools that seamlessly integrate with your CI/CD pipeline are essential. The key is to experiment, discover what works best for your team, and continually refine your toolchain as your project evolves. Begin with a few core tools and gradually add more as needed. Remember, improving code quality is a continuous journey, and your tools should support that process.

Working Smarter With AI Coding Assistants

AI coding assistants like GitHub Copilot are changing the way we write code. These tools offer real productivity boosts, auto-completing lines and even suggesting entire functions.

Copilot provides contextually relevant suggestions as you type, saving developers time and effort. But while these tools are helpful, they aren’t a magic fix for code quality. Relying solely on AI for quality can actually be risky. I've personally seen AI-generated code that works, but lacks the elegance and maintainability of human-written code.

Reviewing AI-Generated Code Effectively

Think of AI suggestions as a starting point, not the finished product. Just like any code, thorough review is critical. This means checking for logic errors, making sure the code follows your team’s style, and examining the overall design. Look for edge cases the AI might have missed.

Don’t be afraid to refactor AI-generated code to improve readability and maintainability. This often means simplifying complex suggestions or adding clearer comments. Use AI to boost your skills, not replace them.
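As a small illustration of the kind of edge case worth checking, consider the sketch below. `average_suggested` is a hypothetical stand-in for an assistant-style suggestion (it is not actual Copilot output), and `average_reviewed` is one possible reviewed version; returning `None` for empty input is an assumption about what the caller wants.

```python
def average_suggested(values):
    # Hypothetical assistant-style suggestion: fine for typical input,
    # but raises ZeroDivisionError when the list is empty.
    return sum(values) / len(values)


def average_reviewed(values):
    """Mean of values, or None when there is nothing to average."""
    if not values:
        return None
    return sum(values) / len(values)
```

The fix is trivial once you see it; the point is that a reviewer has to ask the "what if it's empty?" question, because the suggestion itself won't.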

Spotting AI’s Blind Spots

AI assistants are trained on huge datasets of code, but they don't understand the nuances of your project. This can lead to technically correct suggestions that don't fit your overall design. I’ve noticed AI-generated code can be verbose or overly complex. It can also struggle with context-specific logic or unique edge cases. One common issue is AI suggesting code that duplicates existing functionality, which we definitely want to avoid.

Training Your AI Assistant

Depending on the tool, AI coding assistants can adapt to how your team works. Where your assistant supports it, providing feedback on suggestions can help steer it toward your team's standards and best practices. This could mean rejecting suggestions that deviate from your style guide or correcting snippets that add unnecessary complexity. Over time, this feedback loop can improve the quality and relevance of future suggestions. This is especially important for keeping AI-assisted and human-written code consistent.

Maintaining Human Oversight

AI assistants speed up development, but human oversight is still essential for quality. The impact of AI on code quality is a big deal. In 2023, more than 50% of developers used AI tools like GitHub Copilot. But studies show that while AI automates tasks, it can also lead to problems like decreased code reuse and increased churn. This downward pressure on code quality emphasizes the need for developers to stay focused on quality and find ways to address these issues. It's about integrating AI effectively without sacrificing the attention to detail that leads to a truly maintainable and robust codebase.

Creating CI Pipelines That Catch Problems Early

Your continuous integration (CI) pipeline is your safety net, but it needs to be both reliable and fast. A slow CI process can really bog down development. I've seen firsthand how effective CI can be, and I'm happy to share what I've learned about building a workflow that catches quality issues early without slowing things down.

Structuring Your Pipeline for Fast Feedback

Think of your CI pipeline like an assembly line. Each stage performs a specific check and gives you quick feedback. The key is to catch problems early. Start with the quickest checks like basic linters and unit tests. These can quickly surface common coding errors. If those pass, then move on to more complex tests, like integration or end-to-end tests. This layered approach prevents wasted time on lengthy checks when basic issues are present. This also helps maintain developer momentum.
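The layered, fail-fast ordering can be sketched in a few lines of Python. This is only an illustration, not how any particular CI system works: the stage names, the commands, and the `tests/unit` and `tests/integration` paths are hypothetical, and `run_stages` accepts an injectable `runner` so the logic can be tried without running real commands.

```python
import subprocess

# Hypothetical stages, ordered from fastest to slowest.
STAGES = [
    ("lint", ["python", "-m", "pylint", "src"]),
    ("unit", ["python", "-m", "pytest", "tests/unit"]),
    ("integration", ["python", "-m", "pytest", "tests/integration"]),
]


def _run_command(cmd):
    """Run a command and return its exit code."""
    return subprocess.run(cmd).returncode


def run_stages(stages, runner=_run_command):
    """Run (name, command) stages in order and stop at the first failure.

    Returns (passed_stage_names, failed_stage_name_or_None).
    """
    passed = []
    for name, cmd in stages:
        if runner(cmd) != 0:
            return passed, name  # later, slower stages never start
        passed.append(name)
    return passed, None
```

Calling `run_stages(STAGES)` would stop after a lint failure, so nobody waits on integration tests for a change that doesn't even pass the basic checks.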

Setting Up Smart Quality Gates

Quality gates are your checkpoints. They set the minimum bar for code quality before it moves through the pipeline. Think code coverage thresholds, static analysis scores from tools like SonarQube, or the number of code smells. The trick is setting realistic goals. Requiring 100% code coverage might sound great in theory, but it can be a massive time sink, especially on older projects. Start with achievable targets and gradually raise them as you clean up your codebase.
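Here is a rough Python sketch of what a quality gate check might look like. The threshold values, the `GateThresholds` class, and the metric keys (`coverage`, `code_smells`, `critical_issues`) are assumptions made for the example; in practice the numbers would come from your coverage tool or a platform like SonarQube.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateThresholds:
    # Hypothetical starting targets; raise them as the codebase improves.
    min_coverage: float = 70.0     # percent of lines covered
    max_code_smells: int = 50
    max_critical_issues: int = 0


def evaluate_gate(metrics: dict, thresholds: GateThresholds) -> list[str]:
    """Return human-readable violations; an empty list means the gate passes."""
    violations = []
    if metrics["coverage"] < thresholds.min_coverage:
        violations.append(
            f"coverage {metrics['coverage']:.1f}% is below "
            f"{thresholds.min_coverage:.1f}%"
        )
    if metrics["code_smells"] > thresholds.max_code_smells:
        violations.append(
            f"{metrics['code_smells']} code smells exceed "
            f"{thresholds.max_code_smells}"
        )
    if metrics["critical_issues"] > thresholds.max_critical_issues:
        violations.append(
            f"{metrics['critical_issues']} critical issues exceed "
            f"{thresholds.max_critical_issues}"
        )
    return violations
```

Keeping the thresholds in one small object makes "gradually raise them" a one-line change that is easy to review.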

Balancing Checking and Velocity

There's always a tension between thorough checking and development speed. You want to catch every bug, but you also need to ship code quickly. One great strategy I've used is intelligent batching. Instead of running every test on every commit, group related tests together. Run your essential checks often, but save the more comprehensive testing for release candidates or nightly builds. This keeps feedback loops tight without sacrificing thoroughness.
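One simple way to picture this is a mapping from test suites to the events that should trigger them. The sketch below is an assumption-laden illustration: the suite names, the trigger names, and the `suites_for` helper are all hypothetical, and it does not describe how any specific CI product implements batching.

```python
# Hypothetical suite names and triggers; adapt both to your own pipeline.
SUITE_TRIGGERS = {
    "lint": {"commit", "nightly", "release"},
    "unit": {"commit", "nightly", "release"},
    "integration": {"nightly", "release"},
    "end_to_end": {"release"},
}


def suites_for(trigger: str) -> list[str]:
    """Return the suites to run for a trigger, in declaration order."""
    known = set().union(*SUITE_TRIGGERS.values())
    if trigger not in known:
        raise ValueError(f"unknown trigger: {trigger!r}")
    return [name for name, triggers in SUITE_TRIGGERS.items() if trigger in triggers]
```

Every commit gets the cheap checks, while the expensive suites run only when the trigger justifies their cost.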

Managing Flaky Tests

Flaky tests are the bane of any CI pipeline. They pass sometimes, fail others, and destroy your team's trust in the system. I've watched teams waste countless hours on phantom bugs that turned out to be flaky tests. One good approach is to quarantine them. Put them in a separate suite and run them less often. This keeps them from blocking the main pipeline. Then, dedicate some focused time to fixing or rewriting them. You'll see a huge improvement in the reliability of your CI system. For more ideas, check out this guide on continuous integration best practices.
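As a sketch of how quarantining could start, the Python below flags a test as flaky when it has both passed and failed on the same commit, then splits the test list into a main suite and a quarantine suite. That definition of "flaky" and the `(test, commit, passed)` run-history format are assumptions for the example; your CI system's data will look different.

```python
from collections import defaultdict


def find_flaky(runs):
    """Return tests that both passed and failed on the same commit.

    `runs` is an iterable of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(bool(passed))
    return {test for (test, _), seen in outcomes.items() if len(seen) == 2}


def split_suites(all_tests, flaky):
    """Partition tests into (main, quarantine), preserving order."""
    main = [t for t in all_tests if t not in flaky]
    quarantine = [t for t in all_tests if t in flaky]
    return main, quarantine
```

A test that fails consistently on one commit is not flagged here; that is a real failure and should keep blocking the pipeline.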

Enhancing Productivity, Not Hindering It

The entire point of CI is to make life easier for developers. Successful teams treat their pipeline like a living document. They constantly refine it based on their evolving needs. This means looking at test performance data, identifying bottlenecks, and streamlining workflows. They also make sure the CI system delivers clear, actionable feedback. No one wants cryptic error messages. Give developers the information they need to fix problems quickly and get back to building. When your CI pipeline is a valued tool, your team can confidently and efficiently ship high-quality code.

Tracking Progress With Metrics That Matter

Meaningful metrics offer a powerful lens into the health and evolution of your code quality. But I've seen firsthand how easily these numbers can morph into vanity metrics, offering little real insight. Teams can get fixated on things like code coverage percentages while ignoring the core issues impacting users. So, let's ditch the vanity and focus on the metrics that genuinely fuel informed decisions and codebase improvement.

Identifying the Right Metrics

Choosing the right metrics is a bit like selecting the right tool for a job. You wouldn’t use a wrench to hammer in a nail, right? Similarly, lines of code isn’t a great productivity indicator. Instead, zero in on metrics that mirror the qualities you're aiming for. For example, if maintainable code is your goal, track things like cyclomatic complexity or code duplication. If reliability is top of mind, consider defect density or mean time to resolution (MTTR). The core principle? Align your metrics with your objectives.
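To show what two of these metrics measure, here is a rough Python sketch. The complexity function is a simplified approximation (one plus the number of branch points found with the standard `ast` module), not the exact figure a dedicated tool would report, and both function names are made up for illustration.

```python
import ast

# Node types counted as one decision point each (a simplification).
_BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While,
                 ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity of Python source code."""
    score = 1  # a straight-line program has a single path
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            score += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds two extra paths
            score += len(node.values) - 1
    return score


def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)
```

Neither number means much in isolation; they become useful when you watch how they move for the same module over time.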

Tracking Technical Debt Effectively

Technical debt is a tricky beast. Like financial debt, it can be useful in the short term, but a real burden down the line. I’ve worked on projects where unchecked technical debt choked productivity. Effective management requires more than just counting code smells; it demands understanding their impact. Where’s your code most fragile? Where are bug fixes consuming most of your time? These are the areas where addressing technical debt will give you the biggest bang for your buck. Tools like SonarQube can be real game-changers here, visualizing technical debt hotspots and trends over time.
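If you don't have a dedicated tool yet, even a crude count of which files attract the most bug fixes can point at hotspots. The sketch below assumes a hypothetical convention where bug-fix commit messages start with "fix", and it takes commits as plain `(message, files)` tuples rather than reading them from version control.

```python
from collections import Counter


def bugfix_hotspots(commits, top=3, prefix="fix"):
    """Rank files by how many bug-fix commits touched them.

    `commits` is an iterable of (message, [file paths]) tuples. A commit
    counts as a bug fix when its message starts with `prefix`
    (case-insensitive), which is an assumed team convention.
    """
    counts = Counter()
    for message, files in commits:
        if message.lower().startswith(prefix):
            counts.update(set(files))  # count each file once per commit
    return counts.most_common(top)
```

Files at the top of this list are reasonable candidates for the first round of refactoring, since that is where fixes keep landing.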

Measuring the Impact of Quality Initiatives

Let’s say you've implemented new code review practices, adopted static analysis tools, and started using Mergify to automate your workflow. Fantastic! But how can you be sure these initiatives are actually moving the needle? This is where impact measurement is crucial. Instead of simply tracking the number of code reviews, track the number of defects found during those reviews. Instead of just looking at code coverage, examine how it correlates with fewer production bugs. By tying your quality initiatives to tangible outcomes, you can demonstrate their value and justify continued investment.
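One way to examine whether coverage tracks production bugs is a plain Pearson correlation across releases. The sketch below is a minimal, dependency-free version; the `pearson` helper is written for this example, and the inputs (coverage percentage and production bug count per release) are something you would need to collect yourself.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length, at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (spread_x * spread_y)
```

The result falls between -1 and 1. A clearly negative value for coverage against bug counts would be consistent with your testing effort paying off, though with only a handful of releases it is a hint rather than proof.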

Communicating Progress to Stakeholders

Often, stakeholders are more interested in business outcomes than raw code quality metrics. From experience, I know that translating technical improvements into business value is key for getting buy-in. Instead of leading with code coverage percentages, explain how improved quality has resulted in faster delivery times or fewer customer complaints. Showcase how investing in quality has decreased maintenance costs or boosted user engagement. By speaking their language, you can effectively communicate your work's importance and secure ongoing support for your quality improvement efforts.

Avoiding the Metric Trap

Remember, metrics are there to guide you, not control you. I’ve witnessed teams become so focused on optimizing for the metric that they lose sight of the underlying quality – leading to counterproductive behaviors. For instance, a laser focus on code coverage can incentivize developers to write superficial tests for simple cases while neglecting more complex, critical scenarios. The goal is to elevate your code's quality, not just improve the numbers on a dashboard. Use metrics as a compass, not a destination.

Celebrating Meaningful Improvements

Last but not least, celebrate the wins! Improving code quality is a marathon, not a sprint, so acknowledge and appreciate the progress made along the way. Did you significantly reduce the number of critical production bugs? Successfully refactor a particularly tangled section of the codebase? Celebrate these milestones! Recognizing and rewarding progress keeps the team motivated and reinforces the value of quality. It fuels momentum and cultivates a culture of continuous improvement.

Your Roadmap for Lasting Quality Improvements

Let's talk about building a realistic plan for improving your code quality. Forget the overnight miracles and instant perfection. This is a marathon, not a sprint. You wouldn't run 26 miles on your first day of training, would you? The same applies here. It’s about creating sustainable habits and making incremental improvements. Think small, consistent steps that build on each other.

Assessing Your Current State

Before you lace up your running shoes, take a moment to assess where you’re starting. What are your biggest pain points? Are you drowning in bugs? Is your technical debt a monster under the bed? Is your CI pipeline a bottleneck? An honest assessment will help you focus. Don't be afraid to get the whole team involved; their perspective is gold.

Prioritizing Your Improvements

Now that you know your challenges, prioritize. Which changes make the biggest impact? Which are the easiest to implement? Maybe focusing on your code review process is the first step. Or perhaps tackling those flaky tests is mission-critical. There's no single right answer. It depends on your goals and those pain points you just identified. As you're thinking about this, test-driven development can be a valuable tool.

Creating an Implementation Timeline

Improving code quality is a journey, not a destination. Don't create a timeline that sabotages your existing projects. Break down large goals into bite-sized pieces. This might mean targeting a single code smell each week, or dedicating time daily to refactoring. Small, consistent wins beat grand gestures every time.

Learning From Other Teams

Want to get better? Talk to teams who have successfully transformed their code quality. What hurdles did they face? What strategies worked? What mistakes did they make? Learning from others saves you time and prevents headaches. For example, many teams find that focusing on readability first creates a ripple effect, improving other quality aspects.

Measuring Your Progress and Celebrating Wins

How do you know you’re making progress? Choose metrics that actually matter. Are you catching more bugs before they hit production? Spending less time fixing them? Is your CI pipeline flowing smoothly? Tracking your progress is crucial for staying motivated and making adjustments along the way. And remember to celebrate your wins! Crushed a nasty bug? Hit a new code coverage milestone? Acknowledge those victories. It keeps everyone engaged.

Maintaining Momentum

Initial enthusiasm can fade when other priorities pop up. How do you keep that momentum going? Remind your team about the benefits of high-quality code. Share success stories and highlight the positive impact of your work. Make quality part of your team's DNA, not just a flavor-of-the-week initiative.
