Unit Testing vs Integration Testing: A Practical Guide

The fundamental difference between unit and integration testing really comes down to focus. Think of it like this: unit testing is like checking a single brick for quality, making sure it’s solid and well-formed all by itself. Integration testing, on the other hand, is about making sure all those individual bricks come together to form a strong, stable wall.

Foundations of Software Testing

In software development, you need both unit and integration testing to build reliable applications. They aren't competing with each other; instead, they're complementary layers in any robust quality assurance strategy. Getting a handle on their distinct purposes is the first step toward creating an efficient workflow that nips bugs in the bud, long before they ever see production.

To put it in more concrete terms, a unit test makes sure a single function, like calculateTotal(), gives you the right sum when you feed it specific inputs. It doesn't care where those inputs came from or what happens to the output later on. An integration test, however, would check that the calculateTotal() function correctly pulls data from a database and successfully passes the final result to the user interface.
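As a rough illustration of the unit-test half of that comparison, here is a minimal Python sketch. The `calculate_total` function, its flat tax rule, and the test names are assumptions made up for this example, not code from a real project. The tests feed in specific inputs and check the sum, without caring where the prices came from.

```python
from decimal import Decimal


def calculate_total(prices: list[Decimal], tax_rate: Decimal = Decimal("0")) -> Decimal:
    """Sum the prices and apply a flat tax rate (a hypothetical pricing rule)."""
    subtotal = sum(prices, Decimal("0"))
    # Round to two decimal places so results compare cleanly as currency.
    return (subtotal * (Decimal("1") + tax_rate)).quantize(Decimal("0.01"))


def test_calculate_total_sums_prices():
    assert calculate_total([Decimal("10.00"), Decimal("5.50")]) == Decimal("15.50")


def test_calculate_total_applies_tax():
    assert calculate_total([Decimal("100.00")], Decimal("0.20")) == Decimal("120.00")


def test_calculate_total_of_empty_cart_is_zero():
    assert calculate_total([]) == Decimal("0.00")
```

Functions named `test_*` with plain `assert` statements follow the convention that runners such as pytest collect automatically, but they can also be called directly.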

If you want to dive deeper, you can explore more about these foundational concepts in our guide to the differences between unit and integration testing.

Core Roles in the Development Lifecycle

Each testing type plays a unique and critical role, forming the backbone of what's often called the testing pyramid. Any healthy test suite is built on a massive base of fast, isolated unit tests.

- Unit Testing: This is all about the smallest testable parts of your code—think individual methods, functions, or classes. These tests are incredibly fast and simple to write, giving developers immediate feedback and making them perfect for catching logic errors early.

- Integration Testing: Here, the goal is to verify that different components or services talk to each other correctly and that data flows between them as expected. These tests are naturally more complex and slower to run because they often need a more complete environment, including live databases or external APIs.

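The mix of these tests is crucial. A good rule of thumb is that unit tests should make up roughly 70-80% of your automated test suite, with integration tests accounting for about 15-20% and a small remainder reserved for end-to-end tests. Treat these numbers as a starting point rather than a quota: the point is that a broad base of unit tests and a thinner layer of integration tests work together to build a resilient testing strategy.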

Unit Testing vs Integration Testing at a Glance

For a quick side-by-side comparison, the table below boils down the key distinctions between these two essential testing methods.

| Attribute | Unit Testing | Integration Testing |
|---|---|---|
| Scope | A single function or component | Multiple interacting components |
| Goal | Verify individual logic | Verify component collaboration |
| Speed | Very fast (milliseconds) | Slower (seconds to minutes) |
| Dependencies | Mocked or stubbed out | Real services and databases |

Ultimately, this table gives you a snapshot of their different roles. Unit tests provide a microscopic view of your code's correctness, while integration tests give you a wider, more systemic perspective on how everything fits together.

A Nuanced Comparison of Testing Philosophies

Moving beyond simple definitions, let's get into the philosophies that drive how teams actually hunt down and squash defects. When you understand the core mindset behind each testing approach, you can start optimizing your workflow, from validating a tiny function all the way to ensuring your entire system is solid.

Scope And Execution Speed

This is the most immediate and obvious difference. Unit testing is laser-focused, zooming in on a single function or method. Because of this tight scope, these tests are lightning-fast, executing in milliseconds.

On the other hand, integration testing takes a wider view, spanning several modules or services. Naturally, this broader scope means tests take longer—anywhere from seconds to minutes.

The scope directly impacts how granular your tests are and how often you get feedback. And that execution speed? It's a huge factor in a developer's confidence when they're refactoring or adding new code.

Quick feedback is the lifeblood of continuous integration. Developers rely on sub-100ms unit tests to catch logic errors before they even think about merging a feature branch. This rapid cycle keeps them in the zone and cuts down on context switching.

Integration tests, however, often need to spin up services or connect to databases, which adds time. It’s a classic trade-off: teams have to balance the immediate feedback from unit tests with the more comprehensive reality check that integration tests provide.

Setup Complexity And Maintenance

Unit tests are designed to be simple. They typically use mocks or stubs to fake dependencies, which keeps the code under test neatly isolated with minimal setup. This approach is great for stability—it reduces the chances of tests failing because of some external environmental issue.
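One common source of environmental flakiness is the system clock. The sketch below shows one way to keep it out of a unit test by passing the clock in as a parameter. The `is_expired` function and its `now` parameter are hypothetical names chosen for this illustration.

```python
from datetime import datetime, timezone


def is_expired(expires_at: datetime, now=lambda: datetime.now(timezone.utc)) -> bool:
    """Return True once the current time has reached expires_at.

    The clock is injectable so tests do not depend on the real time of day.
    """
    return now() >= expires_at


def test_is_expired_uses_injected_clock():
    # A fixed "current time" acts as a stub for the real clock.
    fixed_now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    one_minute_ago = datetime(2024, 1, 1, 11, 59, tzinfo=timezone.utc)
    one_minute_ahead = datetime(2024, 1, 1, 12, 1, tzinfo=timezone.utc)

    assert is_expired(one_minute_ago, now=lambda: fixed_now)
    assert not is_expired(one_minute_ahead, now=lambda: fixed_now)
```

Because the test controls the clock, it gives the same result at any time and on any machine.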

Integration tests are a different beast entirely. They often need to talk to real databases or external APIs, which immediately cranks up the setup complexity. Suddenly, you're managing Docker containers or maintaining separate test databases, and that overhead is just part of the deal.

Maintenance costs diverge in the same way. Unit tests usually change right alongside the code they’re testing; a quick refactor might require a small update. But an integration suite can shatter if just one service endpoint changes, leading to significantly higher maintenance efforts over time.

The chart below gives a good visual breakdown of how test types are often distributed and where the biggest setup headaches tend to live.

This illustrates the typical test pyramid, showing where the complexity really concentrates as you move up from unit to end-to-end tests.

White-Box Versus Black-Box Insights

Think of unit testing as a white-box technique. Developers have full visibility into the internal code structures, logic flows, and edge cases. They write tests with intimate knowledge of the code's branches, loops, and potential failure points.

Integration testing, in contrast, usually operates as a black-box method. The focus isn't on the internal workings but on the public interfaces and the data flowing between components. You're testing the system from the outside, just as another service or a user would.

These two perspectives are brilliant at catching different kinds of bugs. White-box tests are phenomenal for finding off-by-one errors or tricky boundary conditions tucked away inside a function. Black-box tests are essential for spotting bigger-picture problems, like mismatched data contracts between services or misconfigured API connections.

“A hybrid strategy leverages white-box speed with black-box realism to catch both code-level and system-level defects.”

Making the right call here is all about context. In a microservices architecture, you’d lean heavily on white-box unit tests for your core business logic. Then, you'd layer on black-box integration tests to confirm that all those services play nicely together and that failover scenarios work as expected.

For catching mistakes early, unit tests are indispensable. A commonly cited rule of thumb is that defects caught at the unit testing stage can be up to 10 times cheaper to fix than those found after integration or release. The exact multiplier varies by team and project, but the direction of the cost difference highlights the financial upside of early validation. You can learn more about the cost trade-offs between unit and integration testing.

But when it comes to guaranteeing that a user can actually sign up, make a payment, and get a confirmation email—a flow that touches authentication, payment, and notification services—integration tests become absolutely non-negotiable.

By combining rapid white-box checks with realistic black-box scenarios, teams create a robust pipeline that minimizes risk without killing development velocity.

When To Use Each Philosophy

So, which approach do you choose? It always comes down to your specific context, risk tolerance, and where you are in the project lifecycle.

Here are a few ground rules:

- Building new modules? Start with unit tests. They'll give you instant feedback on your logic and prevent simple mistakes from slipping through.

- Verifying a workflow across services? This is prime territory for integration tests. They'll confirm that everything behaves as expected under realistic conditions.

- Testing a critical user journey? For things like authentication or payments, integration tests are a must. They're your best defense against configuration and deployment issues that unit tests could never see.

For example, an e-commerce team might write a battery of unit tests for their complex pricing logic. Once that’s solid, they'll build integration tests to verify the entire checkout flow, ensuring it works correctly with the payment gateway and inventory system.

This hybrid approach ensures both code correctness and system reliability. As your project evolves, you'll find yourself adjusting the balance, but starting with this mindset puts you on the right track.

Choosing the Right Tools and Workflow

Putting the theory of unit and integration testing into practice comes down to having a solid workflow and the right tools for the job. Your choice of technology fundamentally shapes how efficiently you can write, run, and maintain your tests, which has a direct impact on developer productivity and code quality.

For unit testing, the name of the game is speed and isolation. This is where lightweight frameworks really shine.

Unit Testing Toolkits and Isolation

The unit testing workflow is pretty straightforward: a developer writes a small, focused test for a single function or method. To get that true isolation from external dependencies like databases or APIs, developers rely on mocks and stubs. These test doubles mimic the behavior of real components, making sure the test only validates the logic inside the unit itself.

A few popular frameworks make this process a breeze with built-in assertion libraries and mocking capabilities:

- JUnit (Java): This is the gold standard for Java applications, with a huge ecosystem of extensions for mocking and assertions.

- NUnit (.NET): A powerful and flexible framework for the .NET world, heavily inspired by JUnit's design.

- Jest (JavaScript): Widely adopted in the JavaScript community, Jest is known for its zero-configuration setup, built-in mocking, and blazing-fast execution.

This whole workflow is designed for immediate feedback. A developer writes some code, whips up a corresponding unit test, and runs it in seconds—often without ever leaving their IDE.

Integration Testing Pipelines and Environments

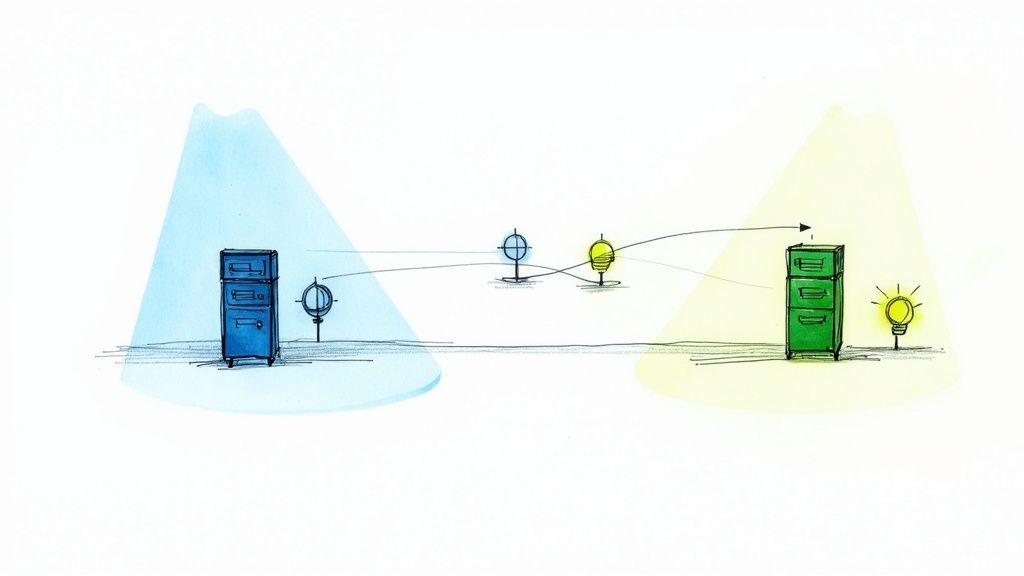

The workflow for integration testing is more involved because it has to verify how multiple components talk to each other. This process usually kicks off within a Continuous Integration (CI) pipeline right after a developer pushes their changes. The pipeline’s first task is to spin up a realistic test environment.

An effective integration test environment mirrors production as closely as possible, including databases, message queues, and external API endpoints. This realism is what gives integration tests their power to find system-level bugs.

Trying to set up this environment by hand is slow and a recipe for errors. This is where modern tooling becomes essential. Docker and containerization are staples here, letting teams spin up consistent, isolated environments with all the necessary services on demand. Because the same container images run everywhere, a test is far more likely to behave the same way on a developer's machine as it does in the CI pipeline, which goes a long way toward ending the "it works on my machine" problem.

When it's time to test API interactions and user flows, specialized tools come into play:

- Postman/Newman: Well suited to sending requests to API endpoints and validating the responses. Newman, Postman's command-line collection runner, makes those checks easy to automate in a CI script.

- Cypress: A modern front-end testing tool that’s also great for integration tests. It can simulate user interactions and verify the underlying API calls that happen as a result.

This workflow is slower by design, but it provides that critical check before any code gets merged. For teams looking to build out more robust pipelines, our guide on mastering automated integration testing dives deeper into effective strategies.

By combining the right tools with smart workflows, you create a layered defense against bugs—protecting both the tiny details and the big picture.

Making the Right Choice for Every Scenario

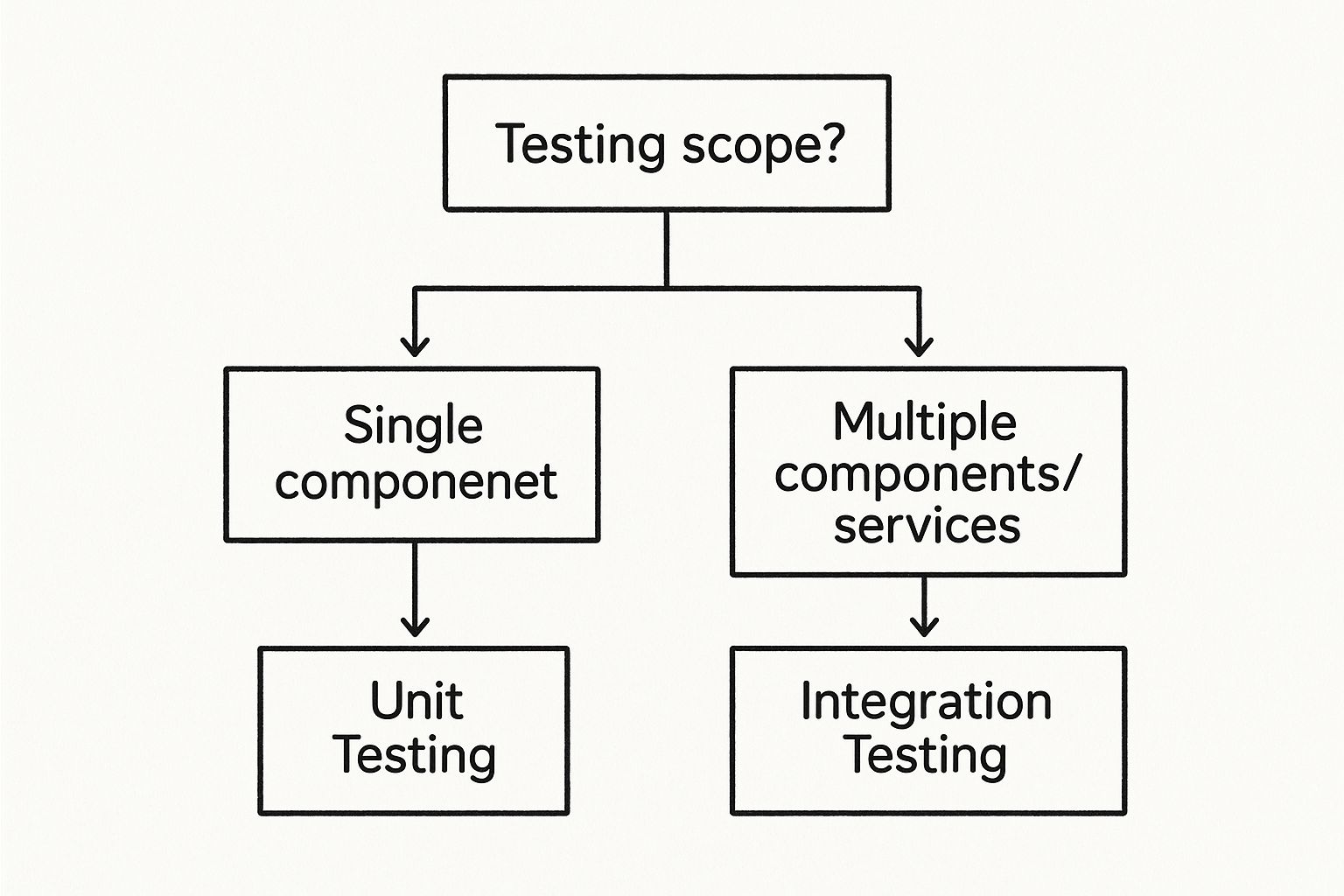

Deciding between unit and integration testing isn't about picking a winner; it's about choosing the right tool for the job at hand. The goal of your test dictates which one you should reach for. Are you trying to validate a single, complex piece of business logic? Or are you confirming that a multi-step user journey works from end to end?

The answer to that question will point you in the right direction.

Think about it this way: a unit test is perfect for verifying a function that calculates shipping costs. You can throw dozens of edge cases at it—zero weight, negative numbers, cross-country destinations—all in a matter of milliseconds. You're testing the calculation in complete isolation, which is exactly what you need: speed and precision.
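A table of cases is a convenient way to throw many edge cases at one function. The sketch below assumes an invented pricing rule (a 5.00 base fee, 1.50 per kilogram, and a 10.00 cross-country surcharge). The `shipping_cost` function and its numbers are purely illustrative.

```python
def shipping_cost(weight_kg: float, cross_country: bool = False) -> float:
    """Hypothetical rule: 5.00 base + 1.50 per kg, plus 10.00 for cross-country."""
    if weight_kg < 0:
        raise ValueError("weight must not be negative")
    cost = 5.00 + 1.50 * weight_kg
    if cross_country:
        cost += 10.00
    return round(cost, 2)


# (weight_kg, cross_country, expected_cost)
CASES = [
    (0, False, 5.00),   # zero weight still pays the base fee
    (2, False, 8.00),   # ordinary local parcel
    (2, True, 18.00),   # same parcel, cross-country surcharge added
]


def test_shipping_cost_cases():
    for weight_kg, cross_country, expected in CASES:
        assert shipping_cost(weight_kg, cross_country) == expected


def test_negative_weight_is_rejected():
    try:
        shipping_cost(-1)
    except ValueError:
        return
    raise AssertionError("expected ValueError for negative weight")
```

Adding another edge case is a one-line change to `CASES`, which keeps the cost of covering new inputs low.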

On the other hand, if you're building a new user authentication flow, a unit test just won't cut it. You need an integration test to confirm the entire sequence works together. The front-end form has to correctly call the authentication API, which then needs to query the database and return a valid session token. This test verifies the connections, not just the individual components.
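To make the contrast concrete, here is a small sketch of an integration test for a login sequence. It wires a hypothetical `AuthApi` to a hypothetical `UserStore` backed by a real (in-memory) SQLite database, so the test exercises the connection between the two rather than either piece alone. All class and method names are assumptions for illustration, and a real system would put an HTTP layer and a production database in place of these stand-ins.

```python
import hashlib
import secrets
import sqlite3


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


class UserStore:
    """Hypothetical data layer that persists credentials in SQLite."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        conn.execute("CREATE TABLE users (email TEXT PRIMARY KEY, salt BLOB, pw_hash BLOB)")

    def add(self, email: str, password: str) -> None:
        salt = secrets.token_bytes(16)
        self.conn.execute(
            "INSERT INTO users VALUES (?, ?, ?)",
            (email, salt, hash_password(password, salt)),
        )

    def verify(self, email: str, password: str) -> bool:
        row = self.conn.execute(
            "SELECT salt, pw_hash FROM users WHERE email = ?", (email,)
        ).fetchone()
        return row is not None and secrets.compare_digest(
            row[1], hash_password(password, row[0])
        )


class AuthApi:
    """Hypothetical API layer that issues session tokens."""

    def __init__(self, store: UserStore) -> None:
        self.store = store
        self.sessions: dict[str, str] = {}

    def login(self, email: str, password: str) -> dict:
        if not self.store.verify(email, password):
            return {"status": 401}
        token = secrets.token_hex(16)
        self.sessions[token] = email
        return {"status": 200, "token": token}


def test_login_flow_end_to_end():
    conn = sqlite3.connect(":memory:")
    try:
        store = UserStore(conn)
        store.add("ada@example.com", "correct horse")
        api = AuthApi(store)

        ok = api.login("ada@example.com", "correct horse")
        assert ok["status"] == 200
        assert api.sessions[ok["token"]] == "ada@example.com"

        assert api.login("ada@example.com", "wrong") == {"status": 401}
        assert api.login("nobody@example.com", "x") == {"status": 401}
    finally:
        conn.close()
```

If the query, the schema, or the token handling drifts out of sync, this test fails even when each class passes its own unit tests.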

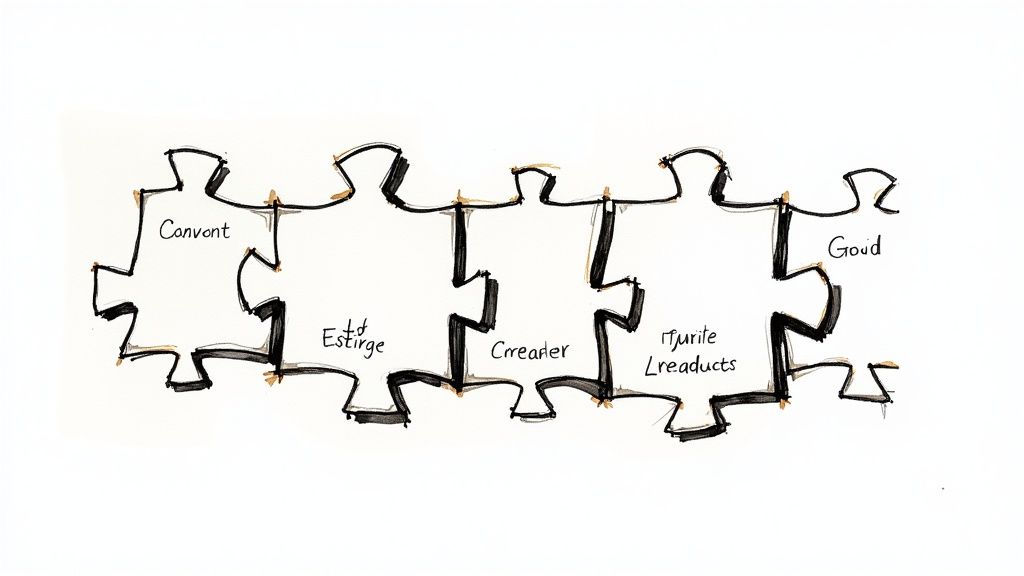

This simple decision tree helps visualize the core choice based on the scope of your test.

As you can see, it really boils down to scope—are you looking at a single building block, or the entire structure it helps create?

Use Cases for Unit Testing

Unit tests truly shine when you need to validate the internal logic of a single component without any external noise. They're your first line of defense, making sure your code is correct at the most granular level.

Lean on unit tests for scenarios like these:

- Complex Algorithms: Perfect for validating a sorting algorithm, a tricky pricing calculation, or a data transformation function.

- Helper and Utility Functions: Ensuring that simple, reusable functions for things like formatting strings or parsing dates work reliably across all possible inputs.

- State Management: Testing how a single component reacts to different states, like a UI element that should render differently based on user permissions.

- Boundary Conditions: Great for checking for off-by-one errors, null inputs, and other edge cases that are a real pain to replicate in a live environment.
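Off-by-one errors tend to hide at exact multiples and at zero, which is where a unit test can probe directly. The sketch below uses a hypothetical `page_count` helper to show the idea.

```python
def page_count(total_items: int, page_size: int) -> int:
    """Number of pages needed to show total_items, page_size items per page."""
    if total_items < 0 or page_size <= 0:
        raise ValueError("total_items must be >= 0 and page_size must be > 0")
    # Ceiling division without floating point.
    return (total_items + page_size - 1) // page_size


def test_page_count_boundaries():
    assert page_count(0, 10) == 0    # empty collection
    assert page_count(1, 10) == 1    # smallest non-empty case
    assert page_count(10, 10) == 1   # exact multiple: must not spill onto page 2
    assert page_count(11, 10) == 2   # one past the boundary


def test_page_count_rejects_invalid_page_size():
    try:
        page_count(5, 0)
    except ValueError:
        return
    raise AssertionError("expected ValueError for page_size=0")
```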

Unit tests give developers a rapid feedback loop, letting them confirm code logic in seconds. This speed is non-negotiable for maintaining development velocity, especially if you're practicing Test-Driven Development (TDD).

When Integration Testing Is Non-Negotiable

Integration tests become essential when the connections between components are the primary source of risk. They answer the critical question: "Do all these perfectly functioning units actually work together?"

Deploy integration tests in situations where interactions are the star of the show:

- API Endpoints: Verifying that your service correctly processes requests, talks to the database, and returns the response format you expect.

- Database Interactions: Confirming that your application's data layer can correctly perform CRUD (Create, Read, Update, Delete) operations.

- Third-Party Services: Making sure your application can successfully communicate with external services, like a Stripe payment gateway or a SendGrid email provider.

- Multi-Step User Workflows: Testing critical user journeys, such as the complete checkout process or a password reset flow that touches multiple services.
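As one example from the list above, a database integration test can run the data layer against a real SQL engine instead of a mock. This sketch uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for whatever database a project actually uses. The `UserRepository` class and its schema are hypothetical.

```python
import sqlite3
from typing import Optional


class UserRepository:
    """Hypothetical data layer under test."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users ("
            "id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL)"
        )

    def create(self, email: str) -> int:
        cur = self.conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
        self.conn.commit()
        return cur.lastrowid

    def read(self, user_id: int) -> Optional[str]:
        row = self.conn.execute(
            "SELECT email FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None

    def update(self, user_id: int, email: str) -> None:
        self.conn.execute("UPDATE users SET email = ? WHERE id = ?", (email, user_id))
        self.conn.commit()

    def delete(self, user_id: int) -> None:
        self.conn.execute("DELETE FROM users WHERE id = ?", (user_id,))
        self.conn.commit()


def test_crud_round_trip():
    conn = sqlite3.connect(":memory:")  # fresh, isolated database per test
    try:
        repo = UserRepository(conn)
        user_id = repo.create("a@example.com")
        assert repo.read(user_id) == "a@example.com"

        repo.update(user_id, "b@example.com")
        assert repo.read(user_id) == "b@example.com"

        repo.delete(user_id)
        assert repo.read(user_id) is None
    finally:
        conn.close()
```

An in-memory database keeps the test fast, but it only approximates production. Teams that need higher fidelity typically run the same test against a containerized instance of their real database.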

To make this even clearer, let's look at some practical examples to help guide your decision. The table below outlines common development tasks and which testing approach usually makes the most sense.

Scenario-Based Testing Decision Guide

| Development Scenario | Primary Test Type | Justification |
|---|---|---|
| Implementing a new password validation function (e.g., checks length, special characters). | Unit Test | The logic is self-contained. You're testing inputs and outputs, not its connection to other systems. |
| Building a new "Add to Cart" API endpoint. | Integration Test | This test must verify the API, database interaction (updating cart), and the response. |
| Creating a utility to format currency values. | Unit Test | This is a pure function. Its behavior depends solely on its input, making it a perfect candidate for isolated testing. |
| Testing the full user registration process. | Integration Test | This workflow involves the UI, an API, a database, and possibly an email service. The interaction is the key risk. |
| Fixing a bug in a complex data-sorting algorithm. | Unit Test | You need to isolate the algorithm, feed it the problematic data, and confirm the fix without external dependencies. |

By understanding these distinct scenarios, you can build a smarter testing strategy that applies the right level of scrutiny at the right time. This targeted approach helps you avoid over-testing simple logic with slow integration tests or, even worse, under-testing the critical workflows that your users depend on.

Building a Balanced and Efficient Test Suite

A solid testing strategy isn't about picking a winner between unit and integration tests. It’s about building a smart, balanced portfolio where each type of test plays to its strengths. The real goal is to find as many bugs as possible and give developers confidence, all while keeping costs and run times low. The best way to visualize this balance is with the Test Pyramid.

The Test Pyramid is a simple but powerful model for allocating your testing efforts. It calls for a massive foundation of fast, inexpensive unit tests at the bottom. As you move up the pyramid, the tests get broader in scope but fewer in number.

The Ideal Test Pyramid Structure

A healthy test suite that gives you the best return on your time and effort should look something like this:

- Unit Tests (The Foundation): The vast majority of your tests—roughly 70-80%—should be unit tests. They're quick, stable, and cheap to write and maintain. This wide base ensures individual components work correctly on their own before they’re ever connected to anything else.

- Integration Tests (The Middle Layer): Making up about 15-20% of the suite, integration tests are there to verify that different modules or services actually work together. They're a bit more complex and slower than unit tests, but they're essential for catching issues that only appear at the seams—things like broken API calls or database schema mismatches.

- End-to-End (E2E) Tests (The Peak): Right at the top, a tiny number of E2E tests (~5%) validate complete user journeys through the application's UI. These are valuable, but make no mistake: they are the slowest, most brittle, and most expensive tests to maintain.

This structure creates the most efficient feedback loop possible. Fast unit tests can run with every single code change, catching most logic errors in seconds. The slower integration and E2E tests run less often, maybe in a CI pipeline, to confirm the system works as a whole.

Think of a well-structured test pyramid as a quality filter. The wide base of unit tests catches the majority of defects early and cheaply, freeing up the more expensive integration tests to focus only on interaction bugs.

The Inverted Pyramid Anti-Pattern

There’s a common and dangerous anti-pattern that flips this idea on its head: the "Inverted Pyramid," or what some call the "Ice Cream Cone." This is what happens when teams skimp on unit tests and lean heavily on slow, brittle E2E or UI tests. This approach is a recipe for disaster.

The test suite becomes painfully slow, delaying feedback to developers and gumming up the entire delivery pipeline. Worse, these tests are notoriously flaky. A minor UI tweak can break dozens of tests at once, leading to a maintenance nightmare and destroying the team's trust in the test suite. This constant "test thrash" ultimately grinds productivity to a halt, making it a costly and unsustainable strategy for any serious development team.

Common Questions About Unit and Integration Testing

Even when you’ve got a solid grasp of unit and integration testing, some tricky questions always seem to pop up. Let's clear the air on a few of the most common ones to help you build a smarter, more effective test suite from day one.

Can Integration Tests Replace Unit Tests?

This question comes up a lot, especially when teams are staring down a tight deadline. The short and simple answer is no. It might feel like a clever shortcut to skip straight to testing integrated components, but that path leads directly to the dreaded "Inverted Pyramid" anti-pattern.

When you rely only on integration tests, debugging becomes a total nightmare. A single failed test could point to a problem in any one of the interacting modules, sending developers on a wild goose chase through layers of code. Unit tests, on the other hand, are designed to pinpoint failures with surgical precision inside one isolated component.

Trying to replace a broad foundation of unit tests with a few integration tests is like building a house with no foundation. It may stand for a little while, but it's guaranteed to be unstable and incredibly difficult to fix when things go wrong.

What Is the Difference Between Mocks and Stubs?

In the world of unit testing, both mocks and stubs are "test doubles" that help you isolate the code you're testing. But they do different jobs. Getting this distinction right is crucial for writing clean, isolated tests. For a deeper dive, our unit testing best practices guide is a great place to start.

- Stubs: A stub is all about state. It provides pre-programmed, "canned" answers to calls your code makes during a test. For instance, if your function needs to fetch a user from a database, a stub could be hardcoded to always return the exact same user object every time.

- Mocks: A mock, in contrast, is all about behavior. It's an object you program with specific expectations, like "I expect this method to be called exactly once with these arguments." If those expectations aren't met, the test fails. Mocks are there to verify interactions.

So, here's the bottom line: use a stub when you need to control the state of a dependency, and use a mock when you need to verify that your code is interacting with that dependency correctly.
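The distinction can be sketched with Python's standard `unittest.mock` module, where the same `Mock` class can play either role depending on how the test uses it. The functions `greeting_for` and `notify_signup`, along with the `get_user` and `send` methods on their dependencies, are hypothetical names invented for this example.

```python
from unittest.mock import Mock


def greeting_for(user_id: int, user_repo) -> str:
    user = user_repo.get_user(user_id)
    return f"Hello, {user['name']}!"


def notify_signup(email: str, mailer) -> None:
    mailer.send(to=email, subject="Welcome")


def test_greeting_with_stub():
    # Used as a stub: it supplies a canned answer, and the assertion
    # is on the value our code returns.
    repo = Mock()
    repo.get_user.return_value = {"name": "Ada"}

    assert greeting_for(42, repo) == "Hello, Ada!"


def test_signup_with_mock():
    # Used as a mock: the assertion is on the interaction itself,
    # i.e. that send() was called exactly once with these arguments.
    mailer = Mock()

    notify_signup("ada@example.com", mailer)

    mailer.send.assert_called_once_with(to="ada@example.com", subject="Welcome")
```

In the first test the double could be swapped for any object that returns the same user and the test would still pass. In the second, the test fails if `send` is called twice, not at all, or with different arguments.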

How Does This Apply to Microservices?

Microservice architectures make the distinction between unit and integration testing more important than ever. Each microservice is its own self-contained application, which actually makes the boundaries for testing incredibly clear.

Here’s a solid strategy for testing microservices:

- Go Heavy on Unit Testing: Inside each service, you should have extensive unit tests covering all the internal business logic, helper functions, and data models. This ensures each service is internally sound before it ever talks to another.

- Use Contract-Based Integration Testing: Forget about spinning up the entire ecosystem. Instead, use integration tests to confirm that each service honors its API contract. This proves a consumer service and a provider service can communicate as expected without needing a full-blown environment, which makes your tests way faster and more reliable.
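To give a feel for the contract idea, here is a deliberately simplified, hand-rolled sketch. It does not use a contract-testing library, and the `ORDER_CONTRACT` shape, the `provider_get_order` handler, and the `consumer_parse_order` function are all hypothetical. The shared contract is checked from both sides, so neither test needs the other service running.

```python
# The agreed response shape: field name -> expected Python type.
ORDER_CONTRACT = {"order_id": str, "total_cents": int, "status": str}


def conforms(payload: dict, contract: dict) -> bool:
    """True if every contract field is present with the expected type.

    Extra fields in the payload are tolerated.
    """
    return all(
        field in payload and isinstance(payload[field], expected_type)
        for field, expected_type in contract.items()
    )


def provider_get_order(order_id: str) -> dict:
    """Hypothetical handler inside the provider service."""
    return {"order_id": order_id, "total_cents": 1999, "status": "paid", "extra": "ignored"}


def consumer_parse_order(payload: dict) -> str:
    """Hypothetical code inside the consumer service."""
    return f"{payload['order_id']}: {payload['total_cents'] / 100:.2f} ({payload['status']})"


def test_provider_honors_contract():
    # Runs in the provider's test suite.
    assert conforms(provider_get_order("o-1"), ORDER_CONTRACT)


def test_consumer_handles_contract_shaped_payload():
    # Runs in the consumer's test suite, against a sample built from the contract.
    sample = {"order_id": "o-1", "total_cents": 1999, "status": "paid"}
    assert conforms(sample, ORDER_CONTRACT)
    assert consumer_parse_order(sample) == "o-1: 19.99 (paid)"


def test_contract_violation_is_detected():
    # A string where an int is expected, and a missing "status" field.
    assert not conforms({"order_id": "o-1", "total_cents": "19.99"}, ORDER_CONTRACT)
```

If the provider renames a field or changes a type, its own contract test fails before the change reaches any consumer.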
