Testing Framework in Python: The Ultimate Guide to Writing Reliable Code

Master Python testing frameworks with proven strategies used by top development teams. Learn practical approaches to implementing unittest, pytest, and doctest to create more reliable, maintainable code.

Building reliable Python applications requires more than just writing code - it demands systematic testing to ensure everything works as intended. Testing frameworks provide the essential structure and tools that developers need to create and run tests effectively. As projects grow larger and teams expand, having a solid testing approach becomes even more critical for maintaining code quality and development speed.

The Benefits of Using a Testing Framework in Python

A good testing framework offers clear advantages that make it worth implementing in your development process. These tools give teams a consistent way to organize and run tests, making it simple to catch issues through automated testing.

-

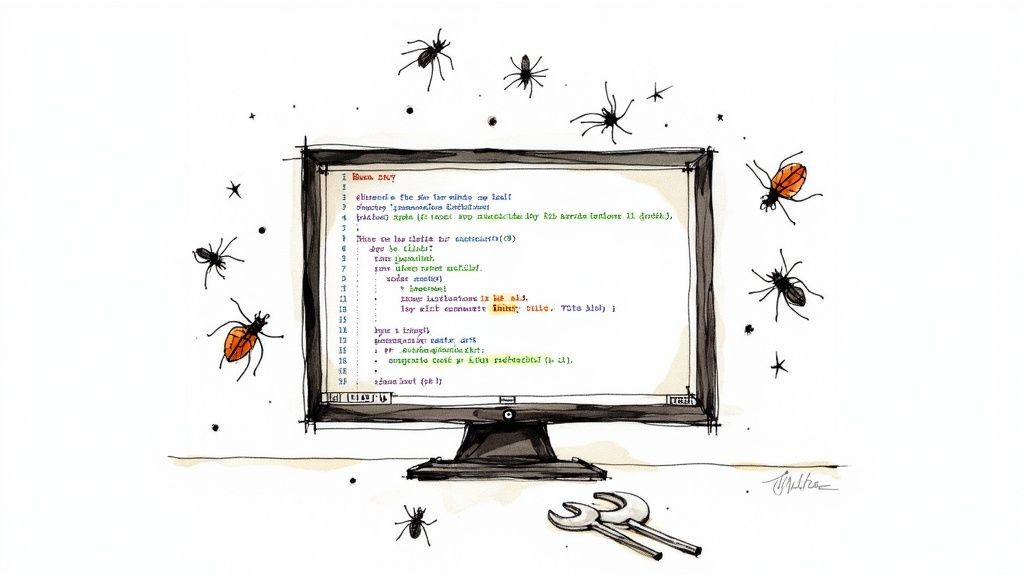

Early Bug Detection: Finding and fixing bugs early in development saves significant time and resources. When issues are caught quickly through automated tests, they're much easier to resolve than problems discovered in production.

-

Improved Code Quality: Writing tests first pushes developers to create cleaner, more modular code that's easier to test. This naturally leads to better organized code that's simpler to understand and update over time.

-

Faster Development Cycles: Automated tests dramatically speed up the development process compared to manual testing. Developers get immediate feedback after code changes, allowing them to move forward confidently. For example, research on over 20,000 GitHub projects showed that those using automated tests completed development cycles much faster.

-

Confidence in Code Changes: Having thorough test coverage acts like a safety net for developers. They can make changes knowing that tests will catch potential problems. Surveys show that developers who regularly use testing frameworks feel much more secure when modifying code.

-

Better Team Collaboration: Testing frameworks create a shared understanding of how tests should work. This helps team members write and interpret tests consistently, improving overall code quality.

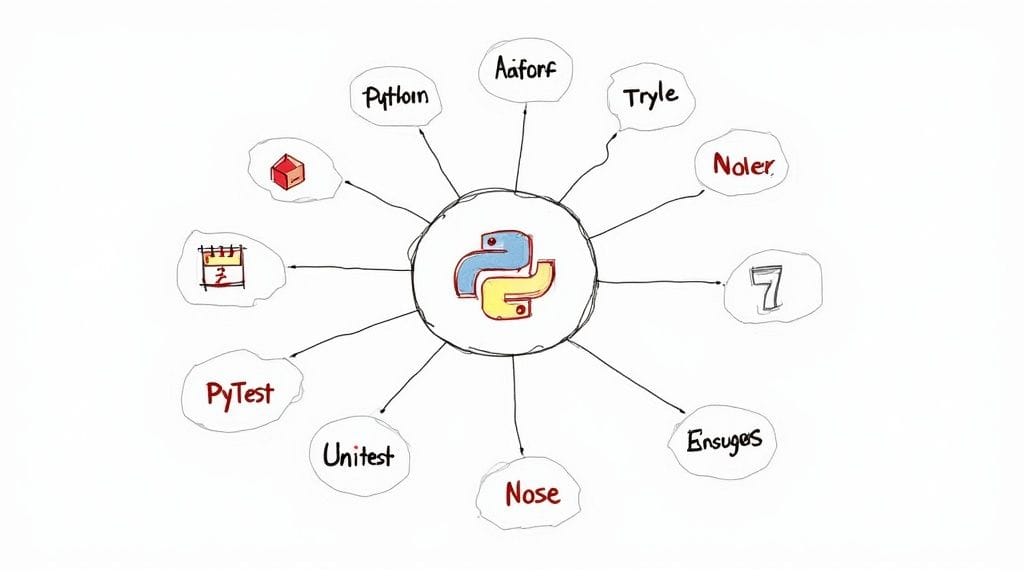

Choosing the Right Testing Framework

Your choice of testing framework should match your project's specific needs. Python offers several popular options. Python's built-in unittest provides solid foundations for unit testing. pytest stands out for its easy-to-use syntax and large plugin ecosystem. doctest lets you embed tests directly in documentation, combining code examples with verification.

The widespread adoption of testing frameworks shows their importance - over 65% of Python machine learning projects on GitHub use at least one testing framework. This trend extends beyond open source, with companies increasingly adopting testing frameworks to improve their development processes. Picking the right framework can significantly impact how effective your testing strategy becomes. In the next section, we'll explore the specific features and use cases of each framework to help you make an informed choice for your project.

Choosing the Right Testing Framework for Your Project

Finding the ideal testing framework for your Python project requires careful consideration of several key factors. Your team's experience level, project scope, and testing needs should guide this important decision. Let's explore three popular Python testing frameworks - unittest, pytest, and doctest - to understand where each one works best.

Unittest: The Standard Library Solution

Built directly into Python, unittest provides a structured approach that will feel familiar to developers coming from Java or C#. Its class-based testing model promotes clean organization, especially in larger codebases. This framework shines when working with teams that have experience with similar testing tools in other languages.

- Strengths: Comes with Python, structured test organization, ideal for large projects

- Weaknesses: More verbose syntax, less flexible features, takes time to learn for Python newcomers

Take a banking application, for example. The development team needs strict test organization and clear documentation of test cases. unittest provides the structure and familiarity that makes maintaining complex test suites manageable.
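As a rough illustration of that class-based structure, here is a minimal sketch. The `Account` class and `InsufficientFunds` exception are hypothetical stand-ins invented for this example; only the `unittest` calls come from the standard library.

```python
import unittest


class InsufficientFunds(Exception):
    """Raised when a withdrawal exceeds the available balance."""


class Account:
    """Hypothetical account class, defined here only to have something to test."""

    def __init__(self, balance=0):
        self.balance = balance

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.balance += amount

    def withdraw(self, amount):
        if amount > self.balance:
            raise InsufficientFunds(f"balance {self.balance} is below {amount}")
        self.balance -= amount


class AccountTests(unittest.TestCase):
    def setUp(self):
        # Runs before every test method, so each test gets a fresh account.
        self.account = Account(balance=100)

    def test_deposit_increases_balance(self):
        self.account.deposit(50)
        self.assertEqual(self.account.balance, 150)

    def test_withdraw_more_than_balance_raises(self):
        with self.assertRaises(InsufficientFunds):
            self.account.withdraw(500)
        # The failed withdrawal must leave the balance untouched.
        self.assertEqual(self.account.balance, 100)

    def test_deposit_rejects_non_positive_amounts(self):
        with self.assertRaises(ValueError):
            self.account.deposit(0)
```

Saved in a file such as `test_account.py` (the name is an assumption), the tests can be run with `python -m unittest`. Grouping related checks in one `TestCase` with a shared `setUp` is what gives larger suites their predictable shape.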

Pytest: The Flexible and Extensible Choice

Many Python developers prefer pytest for its simple syntax and rich feature set. The framework automatically finds and runs tests, provides detailed error messages, and offers a wide range of plugins. It has also gained significant adoption in machine learning projects, where, as noted earlier, over 65% of projects on GitHub use at least one testing framework.

- Strengths: Easy to write tests, finds tests automatically, powerful test setup tools, many plugins available

- Weaknesses: Feature complexity can overwhelm beginners, plugin dependencies add moving parts

Consider a web application that needs to test complex API interactions. pytest makes it simple to mock external services and manage test environments. The framework's plugins help test everything from database operations to async code, making it perfect for modern web development.
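To make the mocking idea concrete, here is a small sketch written in the plain-function style that pytest collects. The `fetch_username` helper and the `/users/` path are hypothetical; the mock objects come from the standard library's `unittest.mock`, which works with pytest without any plugin.

```python
from unittest.mock import Mock


def fetch_username(client, user_id):
    """Hypothetical helper: look up a user's name through an HTTP client object."""
    response = client.get(f"/users/{user_id}")
    if response.status_code != 200:
        return None
    return response.json()["name"]


def test_fetch_username_returns_name():
    # The mock stands in for the real HTTP client, so no network call happens.
    client = Mock()
    client.get.return_value = Mock(status_code=200, json=lambda: {"name": "Ada"})

    assert fetch_username(client, 42) == "Ada"
    client.get.assert_called_once_with("/users/42")


def test_fetch_username_returns_none_on_error():
    client = Mock()
    client.get.return_value = Mock(status_code=404)

    assert fetch_username(client, 42) is None
```

Placed in a file whose name starts with `test_`, these functions are picked up by running `pytest` in the project directory. Passing the client in as an argument is a design choice for this sketch: it keeps the function testable without patching module globals.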

Doctest: Testing Through Documentation

doctest takes a unique approach by embedding tests in your code's documentation strings. This helps keep documentation accurate while verifying code behavior. Though not meant for complex testing scenarios, it works well for straightforward code examples and basic functionality checks.

- Strengths: Tests and documentation work together, shows clear usage examples, perfect for simple projects

- Weaknesses: Limited testing features, not suited for complex test cases

For example, when building a utility library with clear-cut functions, doctest ensures your usage examples stay accurate. Developers can quickly understand how to use your code while the tests verify everything works as documented.
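A utility function of that kind might look like the sketch below. The `slugify` function is a made-up example; the `>>>` lines in its docstring are what doctest executes and compares against the output shown beneath each one.

```python
import doctest


def slugify(title):
    """Turn a title into a lowercase, hyphen-separated slug.

    >>> slugify("Hello World")
    'hello-world'
    >>> slugify("  Testing   in Python  ")
    'testing-in-python'
    >>> slugify("")
    ''
    """
    return "-".join(title.lower().split())


if __name__ == "__main__":
    # Runs every docstring example in this module and reports mismatches.
    doctest.testmod()
```

Running the file directly stays silent when every example passes. `python -m doctest -v` followed by the file name prints each example as it is checked.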

Combining Frameworks and Scaling Your Testing Strategy

Many successful projects mix these frameworks to get the best of each one. You might use unittest for core features, pytest for integration testing, and doctest to verify documentation examples. This combined approach lets you match the right tool to each testing need.

As your project grows, consider building a complete testing strategy. Think about code coverage goals and how testing fits into your build pipeline. Focus on choosing tools that support your team's workflow and help deliver reliable code consistently.

Building a Comprehensive Test Coverage Strategy

Testing in Python requires clear planning and smart prioritization to maximize effectiveness. Instead of chasing meaningless metrics, a good testing strategy focuses on preventing bugs and ensuring code reliability. Let's explore practical ways to identify key areas to test, create effective test cases, and measure real testing impact.

Identifying Critical Code Paths

The foundation of effective testing is knowing which parts of your code need the most attention. Just as road maintenance crews focus on busy highways first, you'll want to prioritize testing code that's essential to your application's core functions, data handling, external integrations, and complex logic. For example, in an e-commerce site, thoroughly testing checkout and payment processing is more important than testing product recommendation displays. This targeted approach helps you use testing resources where they matter most.

Implementing Effective Test Cases

After identifying priority areas, the next step is designing tests that thoroughly check those critical paths. Think of it like testing a lock - you need different keys to verify it works properly and resists tampering. Your Python testing should include varied inputs, edge cases, and error scenarios. Take advantage of parameterized tests to efficiently create multiple test variations from a single template. Make sure to cover both expected usage patterns and potential error conditions, including how your code handles invalid or unexpected data.
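One way to express such a template with only the standard library is `unittest`'s `subTest`, sketched below. pytest users would typically reach for the `pytest.mark.parametrize` decorator instead. The `parse_quantity` function is a hypothetical example written for this sketch.

```python
import unittest


def parse_quantity(text):
    """Hypothetical input parser: convert user text to a positive integer."""
    value = int(text.strip())  # raises ValueError for non-numeric text
    if value <= 0:
        raise ValueError("quantity must be positive")
    return value


class ParseQuantityTests(unittest.TestCase):
    def test_valid_inputs(self):
        cases = [("1", 1), (" 42 ", 42), ("007", 7)]
        for text, expected in cases:
            # subTest reports each failing case separately instead of
            # stopping at the first one.
            with self.subTest(text=text):
                self.assertEqual(parse_quantity(text), expected)

    def test_invalid_inputs(self):
        # Edge cases and bad data: empty, non-numeric, zero, negative, decimal.
        for text in ["", "abc", "0", "-3", "1.5"]:
            with self.subTest(text=text):
                with self.assertRaises(ValueError):
                    parse_quantity(text)
```

Adding a new scenario then means adding one entry to a list rather than writing another test method.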

Measuring the Real Impact of Your Testing Efforts

Raw test counts don't tell the whole story about testing effectiveness. Focus instead on how well your tests catch potential issues. While code coverage tools can help spot gaps in testing, high coverage numbers alone don't guarantee quality. Concentrate on meaningful coverage of critical code paths rather than hitting arbitrary percentage targets. Review your test suite regularly to remove duplicate tests and keep it focused on what matters most.

Strategies for Gradual Coverage Improvement and Maintenance

Building good test coverage takes time. Start with your application's most important features and expand testing gradually as your project grows. This helps establish solid testing practices early while allowing room for growth. As your codebase evolves, your tests need to keep pace. Regular reviews help ensure tests stay relevant and effective. Tools like Mergify can help by automatically checking that new code meets testing requirements before merging, maintaining consistent quality throughout development. With this methodical approach to testing, you'll build confidence in your code's reliability while catching issues before they affect users.

Mastering Test Automation for Continuous Delivery

Testing is essential, but manual testing alone isn't enough. By automating your testing process, you can build, test, and deploy code changes automatically through continuous delivery. Teams that integrate automated tests into their CI/CD pipeline can ship updates more frequently with confidence that every change has been properly tested before reaching users.

Automating Different Test Types with a Python Testing Framework

A Python testing framework gives you the tools to automate multiple types of tests systematically. From basic unit tests that check individual components to complex integration tests that examine component interactions, automation reduces manual work while ensuring consistent results. The right framework helps organize and manage these different test types efficiently.

- Unit Tests: These focus on testing the smallest pieces of code like individual functions. By catching bugs early, unit tests verify that each component works correctly by itself. For example, a unit test might check if a discount calculation function returns the right amount for different scenarios.

- Integration Tests: These examine how different code components work together and catch issues that unit tests might miss, like data flow problems between parts of the system. An integration test could verify that users can successfully log in and add products to their cart.

- End-to-End Tests: These simulate real user workflows from start to finish to ensure all parts of the application work together smoothly. For an online store, an end-to-end test might go through the entire shopping process from browsing products to completing payment.
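The difference between the first two test types can be sketched in a few lines. Both `apply_discount` and `Cart` are hypothetical and exist only for this illustration. An end-to-end test would additionally drive the running application and is not shown.

```python
def apply_discount(price, percent):
    """Return the price after a percentage discount (hypothetical business rule)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class Cart:
    """Hypothetical cart that relies on apply_discount for its total."""

    def __init__(self):
        self.items = []

    def add(self, name, price, discount_percent=0):
        self.items.append((name, price, discount_percent))

    def total(self):
        return round(sum(apply_discount(p, d) for _, p, d in self.items), 2)


def test_apply_discount_unit():
    # Unit test: one function, no collaborators.
    assert apply_discount(200, 25) == 150.0
    assert apply_discount(80, 0) == 80
    assert apply_discount(80, 100) == 0


def test_cart_total_integration():
    # Integration test: Cart and apply_discount working together.
    cart = Cart()
    cart.add("keyboard", 50, discount_percent=10)
    cart.add("mouse", 20)
    assert cart.total() == 65.0
```

If the unit test passes but the integration test fails, the fault is more likely in how the pieces are wired together than in the discount arithmetic itself.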

Setting Up Robust Testing Environments and Managing Test Data

A key part of test automation is creating dedicated test environments that closely match production. This means properly setting up databases, dependencies, and infrastructure. For instance, many Python test suites read connection settings from environment variables, so tests connect to a dedicated test database without affecting production data.
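A minimal sketch of the environment-variable approach, using SQLite from the standard library so it runs anywhere. The variable name `TEST_DATABASE_PATH` is an assumption made up for this example, not a convention of any framework.

```python
import os
import sqlite3


def get_connection():
    """Open the database named by an environment variable.

    TEST_DATABASE_PATH is a made-up variable name for this sketch. When it is
    unset, the function falls back to a throwaway in-memory database, so a
    test run never touches a production database by accident.
    """
    path = os.environ.get("TEST_DATABASE_PATH", ":memory:")
    return sqlite3.connect(path)


def test_can_write_to_test_database():
    conn = get_connection()
    try:
        conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
        conn.execute("INSERT INTO users (name) VALUES (?)", ("ada",))
        rows = conn.execute("SELECT name FROM users").fetchall()
        assert rows == [("ada",)]
    finally:
        conn.close()
```

Defaulting to the safe option matters here: a developer who forgets to set the variable gets an isolated database instead of a live one.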

Managing test data effectively is crucial for reliable automated testing. This includes creating realistic test datasets, protecting sensitive information, and handling data dependencies between tests. Test data management tools can help generate good test data, work with large datasets, and automatically reset data between test runs. This is especially important for integration and end-to-end tests that involve complex data interactions.
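Resetting data between tests can be as simple as rebuilding it in a setup hook. The sketch below assumes a tiny `products` table with made-up seed rows. Real projects often use factories or fixture files, but the principle is the same.

```python
import sqlite3
import unittest

SEED_PRODUCTS = [("keyboard", 50.0), ("mouse", 20.0)]  # made-up sample rows


class ProductTableTests(unittest.TestCase):
    def setUp(self):
        # A new in-memory database per test means no state leaks between tests.
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute("CREATE TABLE products (name TEXT, price REAL)")
        self.conn.executemany("INSERT INTO products VALUES (?, ?)", SEED_PRODUCTS)

    def tearDown(self):
        self.conn.close()

    def count(self):
        return self.conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]

    def test_delete_removes_a_row(self):
        self.conn.execute("DELETE FROM products WHERE name = ?", ("mouse",))
        self.assertEqual(self.count(), 1)

    def test_seed_data_is_intact(self):
        # Passes regardless of test order because setUp rebuilt the table.
        self.assertEqual(self.count(), 2)
```

Because every test starts from the same known rows, a failure points at the code under test rather than at leftovers from an earlier test.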

Ensuring Test Reliability and Maintainability as Your Project Evolves

As your project grows, keeping your automated tests reliable and efficient becomes more challenging. Regular test maintenance involves reviewing and updating tests, removing duplicates, and making test code more readable. Your tests need to evolve alongside your application code to stay useful. Mergify can help by automatically enforcing testing requirements and simplifying test update workflows. Taking care of your tests early on with a solid Python testing framework will save time and effort as your codebase expands.

Advanced Testing Strategies for Machine Learning Projects

Testing machine learning applications presents a unique set of challenges compared to traditional software testing. While regular apps follow clearly defined rules, ML models learn from data and exhibit less predictable behavior that standard testing approaches can't fully validate. To properly evaluate ML systems, we need specialized testing methods that account for how these models actually work. Let's explore practical testing strategies that help ensure your ML projects perform reliably.

Testing Model Behavior: Beyond Traditional Unit Tests

Standard unit tests that check specific inputs and outputs don't work well for ML models since the goal is different - we want to verify that models can effectively handle new, unseen data rather than just memorize training examples. A better approach is to set aside a portion of your data as a test set that the model never sees during training. This gives you a realistic way to assess how well the model will perform on real-world data. You can also use cross-validation, where you train and test the model on different data subsets, to get a more complete picture of performance.
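The hold-out and cross-validation ideas can be sketched without any ML library. The helper names below are written for this example. In practice, libraries such as scikit-learn provide `train_test_split` and `KFold` for the same purpose. The fold scheme here (every k-th row, no shuffling) is a simplification.

```python
import random


def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of the rows and hold out a fraction as the test set."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) - round(len(shuffled) * test_fraction)
    return shuffled[:cut], shuffled[cut:]


def k_fold_indices(n_rows, k):
    """Yield (train_indices, test_indices) pairs; each row is tested exactly once."""
    indices = list(range(n_rows))
    for fold in range(k):
        test = indices[fold::k]  # every k-th row, starting at `fold`
        held_out = set(test)
        train = [i for i in indices if i not in held_out]
        yield train, test


def test_split_has_no_overlap():
    train, test = train_test_split(range(100), test_fraction=0.2)
    assert len(train) == 80 and len(test) == 20
    # A row in both sets would leak test data into training.
    assert set(train).isdisjoint(test)


def test_every_row_is_tested_once():
    tested = [i for _, test in k_fold_indices(10, k=3) for i in test]
    assert sorted(tested) == list(range(10))
```

The tests check the splitting logic itself, which is deterministic, rather than the model's predictions, which are not.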

Validating Data Transformations: Ensuring Data Integrity

The quality of data going into ML models directly impacts their results. Problems in data preparation can lead to poor predictions, so it's essential to thoroughly test your data pipeline - all the steps that process raw data into model-ready format. Each transformation needs verification to confirm it properly cleans and formats the data without introducing errors. For example, if you normalize values to fall between 0 and 1, you should test that the output actually stays within those bounds and preserves important patterns in the original data. These checks help catch data issues before they affect model performance.
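The normalization check described above might look like this sketch. `min_max_normalize` is a simple hand-written version for illustration, not the implementation from any particular library.

```python
def min_max_normalize(values):
    """Rescale numbers linearly so the smallest maps to 0.0 and the largest to 1.0."""
    low, high = min(values), max(values)
    if low == high:
        # A constant column has no spread; map it to zeros rather than divide by zero.
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


def rank_order(xs):
    """Return the positions of xs sorted by value, used to compare orderings."""
    return sorted(range(len(xs)), key=xs.__getitem__)


def test_output_stays_within_bounds():
    scaled = min_max_normalize([3, -7, 12.5, 0])
    assert min(scaled) == 0.0 and max(scaled) == 1.0
    assert all(0.0 <= s <= 1.0 for s in scaled)


def test_ordering_is_preserved():
    raw = [3, -7, 12.5, 0]
    # Scaling must not change which values are larger than which.
    assert rank_order(raw) == rank_order(min_max_normalize(raw))


def test_constant_input_does_not_crash():
    assert min_max_normalize([5, 5, 5]) == [0.0, 0.0, 0.0]
```

The constant-input case is the kind of edge condition that tends to surface only in production data, so it is worth pinning down in a test.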

Evaluating Performance Metrics: Measuring What Matters

Simply measuring accuracy often isn't enough to understand if an ML model is truly performing well. This is especially true for imbalanced datasets where one class appears much more frequently than others. Metrics like precision, recall, F1-score, and AUC provide more detailed insights into different types of errors. Take a medical diagnosis model - missing an actual disease (false negative) is generally much worse than flagging a healthy patient for additional screening (false positive). Your testing should track these various metrics over time to spot any degradation in model performance.
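To see why accuracy alone can mislead, consider this sketch, which computes the metrics from raw label lists. The data is invented: 2 positive cases out of 20, one of which the model misses.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def test_accuracy_can_hide_missed_positives():
    y_true = [1, 1] + [0] * 18  # imbalanced: only 2 positives
    y_pred = [1, 0] + [0] * 18  # the model misses one of them
    metrics = classification_metrics(y_true, y_pred)

    assert metrics["accuracy"] == 0.95  # looks excellent
    assert metrics["recall"] == 0.5  # yet half the positives were missed
    assert metrics["precision"] == 1.0
    assert abs(metrics["f1"] - 2 / 3) < 1e-9
```

In a real suite, the assertion would more likely be a floor, for example failing the build when recall on a fixed evaluation set drops below an agreed threshold.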

Implementing Effective Testing Practices: Ensuring Reproducibility and Stability

Being able to reproduce your ML results consistently is crucial for debugging issues and improving models over time. This means carefully tracking everything that affects model behavior - the model version, data version, parameter settings, and testing setup. Tools like Git help manage these various components systematically. Following consistent testing practices builds confidence that your models will perform reliably when deployed and makes it easier to collaborate with team members and maintain the system long-term.
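A minimal sketch of what tracking could mean in code: fix the random seed, fingerprint the data, and store both next to the result. `run_experiment` is a placeholder for a real training run, and the record fields are assumptions chosen for this example.

```python
import hashlib
import json
import random


def fingerprint(rows):
    """Hash the dataset contents so a changed data file is detectable."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def run_experiment(rows, seed, learning_rate):
    """Stand-in for a training run: returns a result plus what produced it."""
    rng = random.Random(seed)  # a local generator avoids hidden global state
    sample = rng.sample(rows, k=3)
    score = sum(sample) / len(sample) * learning_rate  # placeholder "metric"
    record = {
        "seed": seed,
        "learning_rate": learning_rate,
        "data_sha256": fingerprint(rows),
    }
    return score, record


def test_same_inputs_give_same_result():
    rows = list(range(10))
    first = run_experiment(rows, seed=7, learning_rate=0.1)
    second = run_experiment(rows, seed=7, learning_rate=0.1)
    assert first == second


def test_data_change_alters_fingerprint():
    assert fingerprint([1, 2, 3]) != fingerprint([1, 2, 4])
```

Committing the record alongside the code gives a later reader enough information to rerun the same experiment and compare results.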

Implementing Your Testing Strategy: A Practical Roadmap

Setting up a Python testing framework takes more than picking the right tools - it requires changing mindsets, following best practices, and having a clear plan. Here's a practical guide to help you make testing a natural part of your development process while building a strong testing culture.

Introducing Testing Frameworks to Your Projects

Start small when adding testing to your projects. Choose a pilot project with clear requirements and a manageable size to help your team get comfortable with the new framework. For example, if you're using pytest, begin with simple test functions before moving on to more complex features like fixtures and plugins. This step-by-step approach helps everyone learn at a comfortable pace while building confidence.

Establishing Testing Practices That Stick

The key to making testing stick is treating it as an essential part of writing code, not an afterthought. Encourage developers to write tests alongside their code - just like they would write documentation. Make test coverage part of your code review checklist. When tests become part of the routine, teams catch bugs earlier and spend less time debugging later.

Measuring the Impact of Your Testing Efforts

Keep track of how testing improves your code quality. Look at specific numbers like how many bugs are caught during testing versus those found after release. Compare the time saved by automated tests to manual testing efforts. These concrete results show the real value of investing in testing and help justify the resources needed to do it well.

Building a Testing Culture and Handling Resistance

Some team members might push back against new testing requirements, seeing them as extra work or feeling unsure about using testing tools. Address these concerns by showing how testing saves time in the long run through fewer bugs and faster development. Offer training sessions and pair programming to help everyone get better at testing. Share success stories where good tests prevented problems to help the team see testing's value.

Maintaining Testing Momentum Over Time

As your projects grow, keeping tests effective requires ongoing attention. Review and update tests regularly, removing duplicates and making sure they match current features. Tools like Mergify can help automate this process, ensuring tests stay relevant as your code evolves. This consistent effort keeps your testing investment worthwhile, maintaining code quality without slowing development.
