Test Environment Strategy: From Chaos to Confident Delivery

Understanding Test Environment Strategy: Your Safety Net

Imagine building a house without blueprints. It'd be a disaster, wouldn't it? A test environment strategy is like the blueprint for your software. It makes sure all the parts work together smoothly before you show it to the world. It's a safety net that catches bugs and performance problems before they impact your users, saving you time, money, and headaches. It's much more than just a staging server; it’s a complete plan for how you design, manage, and use your testing environments.

Why "It Works on My Machine" Just Doesn't Cut It

The old-school way of testing – just using individual developer environments – is full of problems. Different configurations, dependencies, and data lead to the classic "it works on my machine" excuse. Bugs sneak through and wreak havoc in production. A good test environment strategy fixes this by creating standardized environments that are as close to production as possible. This way, your test results are actually reliable and useful.

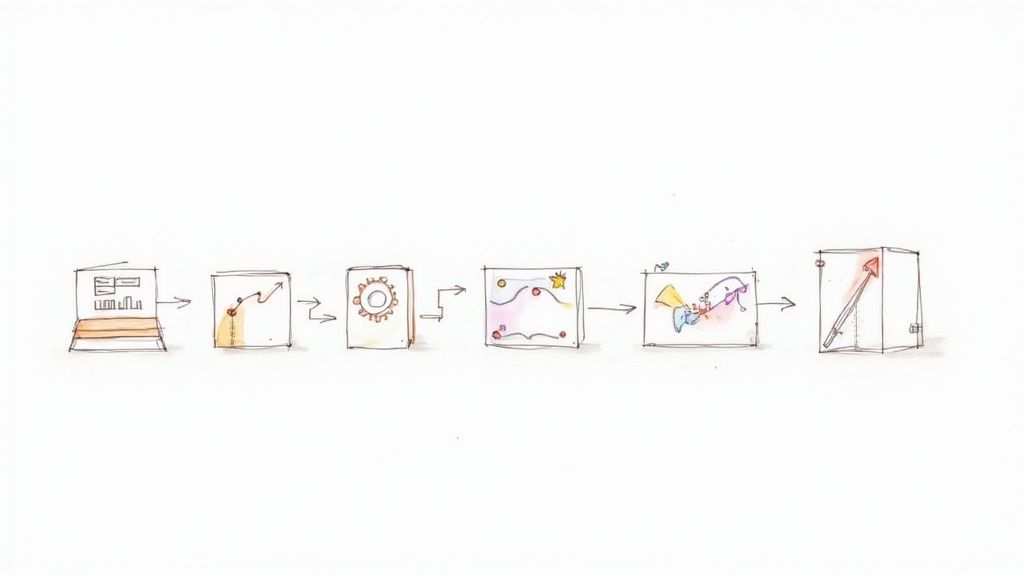

From Chaotic Testing to Systematic Validation

A strong test environment strategy takes messy testing and turns it into a well-organized validation process. Think of an orchestra: each instrument (environment) has its own part to play. You might have one environment just for performance testing, another for security, and one for user acceptance testing (UAT). Each is set up with the right tools and data for its specific job. If you're interested in learning more about managing these environments, check out this helpful article: Managing Test Environments.

Building Confidence Through Strategic Environment Design

The growing popularity of "test environment as a service" shows how much weight teams now put on this strategic approach. Cost-effective scaling and support for Agile and DevOps practices are fueling this growth. One market report values the segment at USD 15.2 billion and projects it to reach USD 62.0 billion by 2033, a CAGR of 16.06% between 2025 and 2033. Estimates differ between research firms, but they point the same way: organizations increasingly value well-planned test environments. Want to dive deeper into this trend? Discover more insights on the Test Environment as a Service market.

The Foundation of Your Development Pipeline

A complete test environment strategy isn't just about servers and tools. It also covers processes, roles, and a culture of quality. As the bedrock of your development pipeline, it enables quicker releases, lower risk, and more confidence in your software. Investing in it isn't only about squashing bugs; it builds a system for continuous improvement and reliable delivery that supports everything from the first lines of code to the final launch. With that foundation in place, your team can embrace new ideas, experiment without fear, and ship software that meets or exceeds user expectations.

Building Your Environment Blueprint: Architecture That Works

Think of architecting your test environments like urban planning. You wouldn't just randomly plop down buildings, would you? You need infrastructure, different zones for different purposes, and reliable connections between everything. Similarly, a solid test environment strategy is crucial for smooth software development. The best teams rely on proven patterns that adapt well to different project sizes and levels of complexity.

Environment Hierarchies: From Local to Production

Just as a city has distinct districts, a robust test environment strategy relies on a well-defined hierarchy. Let's break it down:

- Local Development Environments: These are the individual workspaces where developers write and initially test their code, like personal workshops where they can tinker freely.

- Shared Development Environments: These are collaborative spaces where developers integrate their code and test it together, much like a bustling town square where everyone contributes.

- Staging Environment: This is a near-replica of the production environment, a dress rehearsal before the big show, allowing for final checks and adjustments.

- Production Environment: This is the live system, the bustling city center where your users interact with your software.

This layered approach allows for isolated testing at each stage, minimizing the risk of bugs sneaking into production.
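To make the hierarchy concrete, here is a minimal sketch of the promotion rule it implies: a change moves up one tier at a time, and only after passing the checks of its current tier. The `Stage`, `next_stage`, and `can_promote` names are hypothetical, chosen for illustration; real pipelines encode this rule in their CI/CD configuration rather than in application code.

```python
from enum import IntEnum
from typing import Optional


class Stage(IntEnum):
    """Environment tiers, ordered from least to most production-like."""
    LOCAL = 1
    SHARED_DEV = 2
    STAGING = 3
    PRODUCTION = 4


def next_stage(current: Stage) -> Optional[Stage]:
    """Return the tier a change is promoted to next, or None once it is in production."""
    if current is Stage.PRODUCTION:
        return None
    return Stage(current + 1)


def can_promote(current: Stage, passed: dict[Stage, bool]) -> bool:
    """A change moves up one tier only if it passed the checks of its current tier."""
    return next_stage(current) is not None and passed.get(current, False)
```

For example, `can_promote(Stage.STAGING, {Stage.STAGING: True})` allows the step to production, while an empty result map blocks it.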

Managing Dependencies and Configurations

Just like different city districts require specific services, your environments need careful management of dependencies and configurations. Imagine testing a feature against a different database version than the one in production: a query that passes in testing could fail, or behave differently, once it ships.

- Containerization tools such as Docker package an application together with its dependencies, and orchestrators such as Kubernetes deploy and run those containers. Together they create portable, consistent environments that can be promoted across stages. Think of containers as standardized shipping containers that can be moved seamlessly from one location to another.

- Infrastructure-as-code and configuration management tools such as Terraform and Ansible automate and standardize environment setup, preventing configuration drift and reducing human error. They act like the city's meticulous planning department, ensuring everything runs smoothly.

Choosing the Right Architecture Pattern

One size doesn't fit all when it comes to environment architecture. Just as different cities have different layouts, various architectural approaches cater to different project needs.

Let's look at a few options:

- Shared Environment Model: Suitable for smaller teams and simpler projects, this is like a small town where everyone shares resources.

- Dedicated Environments per Stage: Essential as complexity grows, this is akin to a larger city with dedicated zones for different functions.

- Service Virtualization: For large-scale projects, this simulates dependencies, reducing the need for complex integrations. Think of it as using sophisticated simulations to study traffic flow without disrupting the actual city.

Choosing the right pattern depends on factors like team size, project complexity, and resources. It's about finding a balance between isolation, realism, and cost-effectiveness.

To help visualize these different approaches, let's examine a comparison table:

Environment Architecture Patterns Comparison

| Architecture Pattern | Team Size | Complexity Level | Resource Requirements | Best Use Case |
|---|---|---|---|---|
| Shared Environment | Small (1-5) | Low | Minimal | Small projects, rapid prototyping |
| Dedicated Environments per Stage | Medium to Large (5+) | Medium to High | Moderate to High | Complex projects, multiple releases |
| Service Virtualization | Large (10+) | High | High | Large-scale projects, complex dependencies |

This table highlights the trade-offs between different architecture patterns, helping you select the best fit for your specific needs. For smaller teams, a shared environment might suffice. As complexity grows, dedicated environments become crucial. And for very large projects, service virtualization can be a game-changer.
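The table's guidance can be read as a rough decision rule. The sketch below encodes it as a hypothetical `suggest_pattern` helper; the thresholds come straight from the table's team-size and complexity columns and are illustrative starting points, not hard limits.

```python
def suggest_pattern(team_size: int, complexity: str, complex_dependencies: bool = False) -> str:
    """Rough heuristic mirroring the comparison table; thresholds are illustrative."""
    complexity = complexity.lower()
    if complexity not in {"low", "medium", "high"}:
        raise ValueError(f"unknown complexity: {complexity!r}")
    # Large teams with high complexity and many external dependencies benefit
    # most from simulating those dependencies.
    if team_size >= 10 and complexity == "high" and complex_dependencies:
        return "Service Virtualization"
    # Small, simple projects can share one environment.
    if team_size <= 5 and complexity == "low":
        return "Shared Environment"
    # Everything in between gets its own environment per stage.
    return "Dedicated Environments per Stage"
```

In practice, budget and compliance needs also shape the choice, so treat the output as a conversation starter rather than a verdict.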

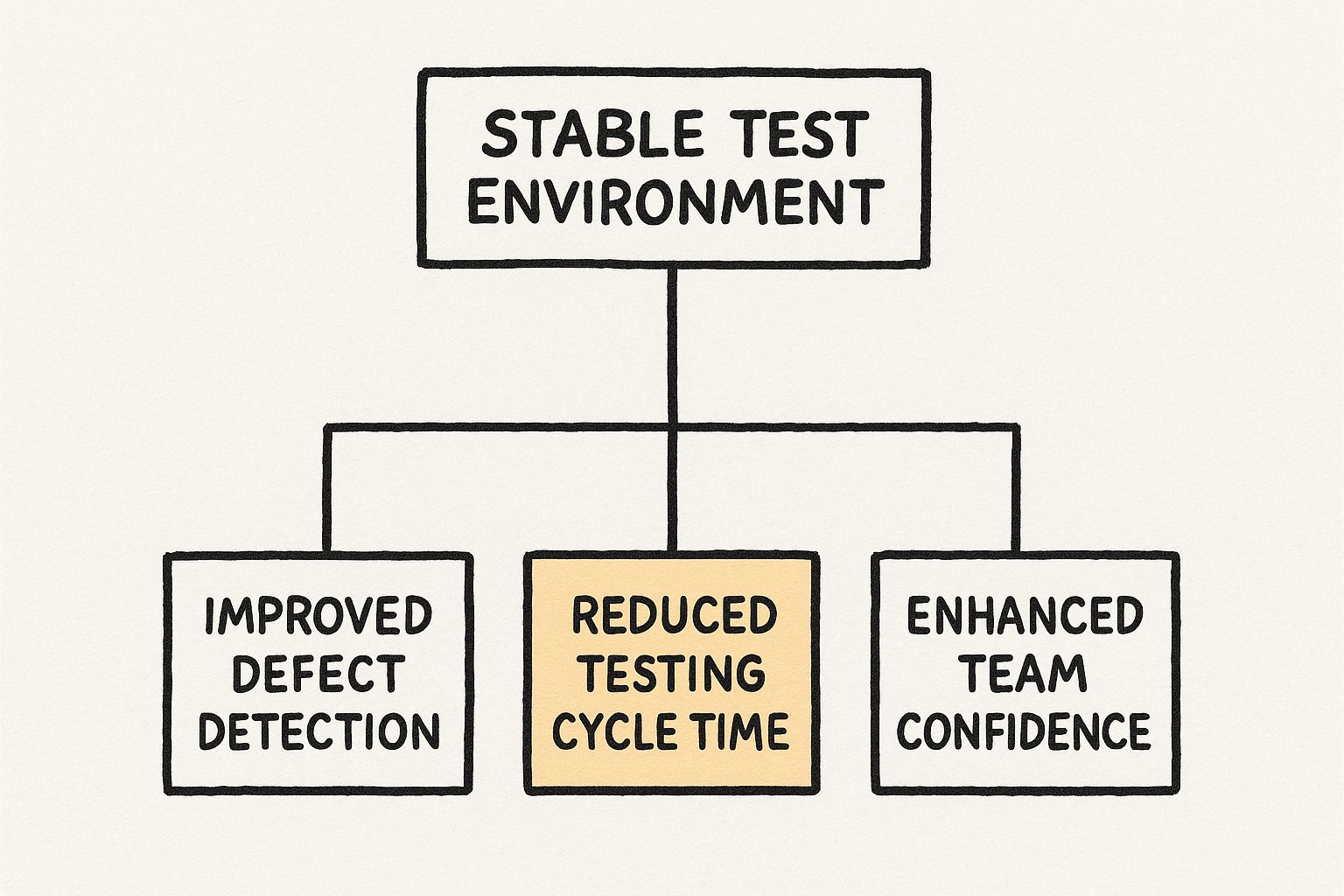

The infographic above demonstrates how a stable test environment positively impacts software development. A solid testing foundation leads to better defect detection, faster testing cycles, and increased team confidence, ultimately improving software quality and delivery speed.

Isolation vs. Realism: Finding the Sweet Spot

Your test environments should be isolated enough for safe experimentation but realistic enough to provide valuable feedback. Think of it like a flight simulator: it must be separate from actual flight to avoid real-world consequences, yet realistic enough to provide effective training.

Data management, dependency management, and environment provisioning all shift this balance. Get it right and you catch critical bugs before they impact users, with test results that accurately reflect real-world conditions. An effective test environment strategy creates environments that support both rigorous testing and confident releases.
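Service virtualization is one way to get both properties at once: the code under test talks to a simulated dependency that behaves like the real one but has no real-world side effects. The sketch below assumes a hypothetical external payment provider; `PaymentGateway`, `VirtualPaymentGateway`, and `checkout` are illustrative names, and the "declined" and "non-positive amount" behaviors are assumptions about what the real service does.

```python
from dataclasses import dataclass, field
from typing import Protocol


class PaymentGateway(Protocol):
    """The interface the application expects from its (hypothetical) payment provider."""

    def charge(self, customer_id: str, amount_cents: int) -> str: ...


@dataclass
class VirtualPaymentGateway:
    """Virtualized stand-in for the real provider: no network calls, no real charges.

    It mimics two behaviors assumed for the real service: declining certain
    customers and rejecting non-positive amounts.
    """
    declined_customers: set[str] = field(default_factory=set)
    calls: list[tuple[str, int]] = field(default_factory=list)

    def charge(self, customer_id: str, amount_cents: int) -> str:
        self.calls.append((customer_id, amount_cents))  # record traffic for assertions
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        return "declined" if customer_id in self.declined_customers else "approved"


def checkout(gateway: PaymentGateway, customer_id: str, amount_cents: int) -> bool:
    """Application code under test; it only knows the gateway interface."""
    return gateway.charge(customer_id, amount_cents) == "approved"
```

The virtual gateway keeps tests isolated, and its realism depends entirely on how faithfully it copies the real service's behavior, which is exactly the trade-off described above.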

Cloud-Native Approaches: Scaling Without Breaking the Bank

The cloud offers incredible potential for scaling software development and deployment. Think on-demand resources and seemingly limitless scalability. But many teams haven't quite figured out how to fully tap into these benefits when it comes to their test environments. They're often stuck with older processes, leading to inefficient scaling and higher costs. Smart organizations are adopting cloud-native approaches, transforming testing and creating environments that scale smoothly and affordably.

Containerization: Your Portable Test Environments

Imagine packing your entire test environment (code, dependencies, configurations) into a neat, self-contained box. That's containerization, powered by tools like Docker. Containers are highly portable: the same image runs consistently across different cloud providers or on your local machine, which removes most of the infamous "it works on my machine" problem.

This portability is especially useful in a CI/CD workflow. It allows for seamless transitions between development, testing, and production.
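That consistency only holds if every stage runs the same image build. A common habit is pinning an explicit image tag instead of the mutable `latest` tag. The hypothetical `docker_run_args` helper below sketches this by assembling a `docker run` command (using the standard `--rm` and `-e` flags) and refusing unpinned images; the image name and tag in the test are only examples.

```python
def docker_run_args(image: str, tag: str, env: dict[str, str]) -> list[str]:
    """Build a `docker run` command for a throwaway test container.

    The image tag must be pinned: the mutable `latest` tag can point at a different
    build tomorrow, which reintroduces "works on my machine" drift.
    """
    if not tag or tag == "latest":
        raise ValueError("pin an explicit image tag instead of 'latest'")
    args = ["docker", "run", "--rm"]  # --rm deletes the container when it exits
    for key, value in sorted(env.items()):
        args += ["-e", f"{key}={value}"]  # pass configuration as environment variables
    args.append(f"{image}:{tag}")
    return args
```

A pipeline step could then run the result with `subprocess.run(args, check=True)`. Pinning by image digest is stricter still, since tags can be re-pushed.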

Infrastructure as Code: Automating Your Environments

Manually managing infrastructure is like juggling chainsaws: time-consuming and risky. Infrastructure as Code (IaC) changes the game. You define your infrastructure in code, just like your application code, using tools like Terraform and Ansible.

This automates environment provisioning and management: spinning up a new environment becomes as easy as running a script. Because every environment is built from the same definitions, IaC makes environments reproducible and cuts the manual errors that plague traditional environment management.
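The core idea behind IaC is declarative: you describe the desired state, and the tool works out what to change. The sketch below is a deliberately simplified illustration of that diffing step with a hypothetical `plan` function and made-up resource names; it is not how Terraform or Ansible work internally.

```python
def plan(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compare declared resources with what exists and list the changes needed."""
    # Declared but missing: must be created.
    create = sorted(name for name in desired if name not in actual)
    # Present but no longer declared: must be removed.
    delete = sorted(name for name in actual if name not in desired)
    # Present on both sides but with different settings: must be updated.
    update = sorted(
        name for name in desired
        if name in actual and desired[name] != actual[name]
    )
    return {"create": create, "update": update, "delete": delete}
```

Running the same definitions against two environments yields the same end state, which is what makes IaC-built environments reproducible.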

Orchestration: Managing the Complexity

As your testing gets more complex, you'll likely need to manage many containers, services, and dependencies. That's where environment orchestration tools like Kubernetes come in. They automate the deployment, scaling, and management of these complex systems. Think of Kubernetes as the conductor of an orchestra, making sure everything works together.

This automation is crucial for scaling testing while controlling costs. You can automatically scale down resources during quieter periods and scale up when needed, optimizing cloud spending. This dynamic scaling is a huge advantage of cloud-native approaches. Interestingly, the shift to cloud computing is also driving growth in the test environment as a service market: another estimate predicts it will hit USD 17.19 billion in 2025, a 19.6% CAGR from 2024. Discover more insights on this growing market.
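Kubernetes' Horizontal Pod Autoscaler bases its scaling decisions on a simple ratio: `ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below reproduces just that arithmetic, clamped to a minimum and maximum, to show how quiet periods translate into fewer replicas. It omits the autoscaler's tolerance band and stabilization window, and in a real cluster you would configure the autoscaler in a manifest rather than compute this yourself.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Replica count from the HPA's core ratio, clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))
```

For example, four replicas averaging 20% CPU against an 80% target scale down to one, while two replicas at 120% against a 60% target scale up to four.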

Avoiding Complexity Traps

While cloud-native approaches are powerful, they can also create new challenges. One common trap is over-engineering. Teams sometimes build systems that are too complex, causing more problems than they solve.

Another issue is security. Under the cloud shared responsibility model, the provider secures the underlying infrastructure, but you remain responsible for securing your applications, data, and access configuration within it. Your test environment strategy needs to address security from the outset.

Finally, simply using cloud-native tools without understanding the core principles can lead to wasted resources and frustration. Focus on solving real problems, not just following trends. By carefully planning your strategy, addressing potential problems, and focusing on practical implementation, you can truly harness the power of the cloud, scaling effectively without overspending. This empowers you to build and deploy high-quality software, confident in your robust and cost-effective testing process.

CI/CD Integration: Making Testing Seamless and Automatic

Your test environment strategy isn't a static display piece; think of it as a dynamic bridge between development and deployment. When tightly integrated with your CI/CD pipeline, it becomes the engine for efficient and reliable software delivery, ensuring every code change is tested before it reaches your users. Let's explore how top-notch teams weave their environment strategy into their CI/CD workflows, building automated processes developers can rely on.

Automating Environment Provisioning

Imagine needing a fresh test environment for each new feature branch. Manually setting these up would be like painting the Golden Gate Bridge with a toothbrush – tedious and impractical! That's where automation steps in. With CI/CD integration, environment provisioning becomes an automatic part of your pipeline. Tools like Terraform and Ansible, combined with cloud platforms, let you define your infrastructure as code and spin up new environments in minutes. This drastically cuts down the time and effort needed to set up and dismantle testing environments.

Think of it like ordering a pizza online: a few clicks, and it’s on its way. No need to knead dough, grate cheese, or even talk to a human!
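Per-branch environments need predictable names. If each one lives in its own Kubernetes namespace, the name must be a valid DNS label: lowercase alphanumerics and `-`, starting and ending with an alphanumeric, at most 63 characters. The hypothetical `preview_namespace` helper below sketches that conversion; the `test-` prefix is an arbitrary choice.

```python
import re


def preview_namespace(branch: str, prefix: str = "test-") -> str:
    """Derive a per-branch environment name that is a valid Kubernetes namespace name."""
    # Replace every run of disallowed characters (slashes, underscores, spaces...) with '-'.
    slug = re.sub(r"[^a-z0-9-]+", "-", branch.lower()).strip("-")
    # Enforce the 63-character limit and make sure the name does not end with '-'.
    name = (prefix + slug)[:63].rstrip("-")
    if not name or not name[0].isalnum():
        raise ValueError(f"cannot derive a namespace from {branch!r}")
    return name
```

Truncation means two long branch names can collide; appending a short hash of the full branch name is a common remedy.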

Streamlining Test Data Management

Data is essential for testing, but managing it effectively can be a real headache. CI/CD pipelines automate data setup and teardown, ensuring each test runs with the correct data. Think of it as a stagehand setting the scene for a play – each act needs its own props. Techniques like data masking and synthetic data generation can be integrated into the pipeline to protect sensitive information while providing realistic data for testing. This keeps your real data safe while still giving your tests a good workout.
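The "stagehand" pattern boils down to: build known data before each test, hand it over, and always tear it down afterwards. The sketch below shows this with Python's built-in `sqlite3` and an in-memory database standing in for whatever database your environments actually use; the `seeded_database` name and the `customers` table are assumptions for illustration.

```python
import sqlite3
from contextlib import contextmanager


@contextmanager
def seeded_database(rows: list[tuple[int, str]]):
    """Give each test a fresh in-memory database with known data, then discard it."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
        conn.executemany("INSERT INTO customers (id, name) VALUES (?, ?)", rows)
        conn.commit()
        yield conn
    finally:
        conn.close()  # teardown: the in-memory database disappears with the connection
```

Because teardown sits in `finally`, it runs even when a test fails, so no leftover rows leak into the next run.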

Automated Validation: Ensuring Quality at Every Stage

CI/CD integration enables automated testing at every stage of the pipeline. Unit tests, integration tests, and even end-to-end tests can automatically trigger when code changes. This continuous validation catches bugs early, like a spellchecker catching typos, making them much cheaper and easier to fix. This constant feedback loop is crucial for maintaining code quality and speeding up development. You might find this interesting: Flaky Test Detection.

Orchestrating Complex Workflows With Tools Like Mergify

This screenshot showcases Mergify’s dashboard, allowing developers to see and manage their merge queues and automation rules. This visual representation simplifies complex merge workflows and offers greater control over the integration process.

Tools like Mergify coordinate merge workflows within your CI/CD pipeline. Mergify automates merge processes so that only code that has passed its checks makes it into the main branch. This automation minimizes manual work and reduces the chance of human error, leading to more stable and reliable releases. This tight integration of testing, merging, and deployment streamlines the entire delivery process. If you are also moving environments to the cloud, it's crucial to follow best practices; this article details key considerations: cloud migration best practices.

To illustrate the power of CI/CD integration tools, let's look at the following table:

CI/CD Integration Tools and Capabilities

Overview of popular tools for integrating test environments with CI/CD pipelines, their key features, and integration complexity.

| Tool | Environment Provisioning | Test Data Management | Integration Complexity | Key Strengths |
|---|---|---|---|---|
| Jenkins | Supports various provisioning tools (e.g., Docker, Kubernetes) | Plugin ecosystem offers several data management options | Moderate | Open-source, highly customizable, large community |
| GitLab CI | Integrated environment management with Kubernetes and Docker | Supports artifacts and caching for data management | Easy to Moderate | Tight integration with GitLab, built-in features |
| GitHub Actions | Direct integration with various cloud providers and provisioning tools | Supports artifacts and caching for data management | Easy to Moderate | Tight integration with GitHub, growing ecosystem |
| CircleCI | Supports Docker and cloud deployments | Offers workflows for data setup and teardown | Easy to Moderate | Cloud-based, easy to use, scalable |
| Mergify | Focuses on merge automation and workflow orchestration, integrates with existing provisioning tools | Relies on other tools for data management, streamlines testing workflows | Easy | Simplifies complex merge strategies, improves developer productivity |

The table above summarizes the capabilities of different CI/CD tools in managing test environments. Each tool offers unique strengths and levels of integration complexity, allowing teams to choose the best fit for their needs.

Building Pipelines for Fast Feedback and Confident Releases

The ultimate goal of CI/CD integration is to build pipelines that offer rapid feedback to developers while maintaining the high standards needed for confident releases. This means designing pipelines that balance speed and thoroughness. By automating testing and environment management, developers get quick feedback on their code changes, letting them address issues promptly. This rapid feedback loop encourages continuous improvement and speeds up the entire development cycle. At the same time, automated checks and balances in the pipeline ensure only carefully vetted code makes it to production. This builds confidence in the stability and reliability of each release, leading to a more efficient and higher-quality software delivery process.

Data Management: The Challenge Nobody Talks About Enough

Let's be honest: even with the best infrastructure and the shiniest tools, your test environment strategy will crumble without proper data management. It's like having a top-of-the-line race car but no fuel. The real headaches often come from handling sensitive data, keeping complex datasets in order, and ensuring everything stays consistent across different environments.

The Real-World Data Struggles

Teams frequently run into tricky data problems. Take referential integrity: the relationships between records must stay consistent, so every order in a test database should still point at a customer that exists. It's like making sure all the pieces of a puzzle fit together. This is crucial for realistic testing, but it can quickly turn into a logistical nightmare, particularly when you juggle multiple environments or copy only a subset of production data. Another common pitfall is data drift, where the data in your test environment slowly turns into a funhouse-mirror version of your production data, throwing off the accuracy of your test results. For a deeper dive into automated testing, check out this CI/CD Pipeline Tutorial.
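Subsetting is where referential integrity usually breaks: copy some customers but all orders, and some orders now point at customers that don't exist. The sketch below shows a check for such orphaned rows and a subset function that copies children only for parents it kept. The `customers`/`orders` shapes and the `customer_id` key are hypothetical.

```python
def find_orphans(parent_ids: set[int], child_rows: list[dict], fk: str) -> list[dict]:
    """Child rows whose foreign key points at a parent that is not in the dataset."""
    return [row for row in child_rows if row[fk] not in parent_ids]


def subset_with_integrity(customers: list[dict], orders: list[dict],
                          keep_ids: set[int]) -> tuple[list[dict], list[dict]]:
    """Copy a slice of customers plus only the orders that belong to them."""
    kept_customers = [c for c in customers if c["id"] in keep_ids]
    # Filter orders by the customers actually kept, not by the requested ids,
    # so a requested id with no matching customer cannot produce orphans.
    kept_ids = {c["id"] for c in kept_customers}
    kept_orders = [o for o in orders if o["customer_id"] in kept_ids]
    return kept_customers, kept_orders
```

Running `find_orphans` after each refresh is a cheap guard: an empty result means every relationship in the subset still resolves.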

Balancing Realism With Security and Compliance

It's a delicate balancing act. You need realistic test data to get meaningful results, but you also need to keep sensitive information under lock and key. This is especially important in regulated industries. Imagine testing a financial application. Using real customer financial data is a non-starter due to privacy regulations. This is where techniques like data masking (think of it like redacting confidential information) and synthetic data generation (creating realistic but artificial data) become essential. These methods allow you to perform thorough testing without compromising security or regulatory compliance.
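Masking needs care: replacing each value with random junk hides it, but it also breaks joins between tables. One common approach is deterministic pseudonymization with a keyed hash, so the same input always maps to the same token. The sketch below uses the standard `hmac` and `hashlib` modules; `mask_value`, the `user_` prefix, and the 12-character token length are illustrative choices, and the key would come from a secrets store rather than source code.

```python
import hashlib
import hmac


def mask_value(value: str, secret_key: bytes, prefix: str = "user") -> str:
    """Replace a sensitive value with a stable pseudonym.

    The same input always yields the same token, so joins across tables still line up.
    The token does not reveal the original, and without the key nobody can recompute
    tokens for guessed inputs.
    """
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}_{digest[:12]}"  # truncated for readability; longer tokens reduce collisions
```

This is pseudonymization rather than anonymization, so the key itself must be protected as carefully as the original data.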

Data Refresh Strategies: Keeping Your Data Current

How often should you refresh your test data? It depends. Some teams refresh daily, some weekly, and some only when absolutely necessary. A key factor is how quickly your production data changes: if it evolves constantly, you'll need to refresh your test environments more often to keep them relevant. Finding the right frequency is critical. Refresh too rarely and your testing falls out of touch with reality; refresh too often and it drains your resources. For more insights, take a look at this Continuous Integration Best Practices Guide.
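One way to turn "it depends" into a rule is to refresh when either the copy is too old or production has changed too much since the last refresh. In the hypothetical `needs_refresh` sketch below, `changed_fraction` is assumed to be something like rows inserted or updated in production since the last refresh divided by total rows; the 7-day and 5% defaults are placeholders to tune against your own data.

```python
from datetime import datetime, timedelta


def needs_refresh(last_refresh: datetime, now: datetime, changed_fraction: float,
                  max_age: timedelta = timedelta(days=7), max_change: float = 0.05) -> bool:
    """Refresh when the test copy is too old or production has changed too much since."""
    if not 0.0 <= changed_fraction <= 1.0:
        raise ValueError("changed_fraction must be between 0 and 1")
    return now - last_refresh >= max_age or changed_fraction >= max_change
```

Fast-changing data trips the change threshold early, while slow-changing data falls back on the age limit, which matches the trade-off between relevance and resource cost described above.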

Building Sustainable Data Workflows

Effective data management isn't a one-and-done task; it's an ongoing commitment, like tending a garden that needs constant weeding, watering, and fertilizing to flourish. Building sustainable data workflows means automating processes like data masking, synthetic data generation, and data refresh. It also takes careful planning and collaboration between database administrators, testing engineers, and development teams. Done well, these workflows free your team to focus on building great software instead of putting out data fires, and they turn your test environment strategy into a powerful engine for quality and speed. This proactive approach nips problems in the bud and keeps testing smooth throughout the entire development lifecycle, so your teams can build higher-quality software with greater confidence.

Avoiding The Pitfalls That Sink Environment Strategies

Building a robust test environment strategy isn't a simple task. It's more like navigating a ship through a tricky sea. Plenty of teams start with the best intentions, only to find themselves facing unexpected challenges. Let's explore some of these common pitfalls and figure out how to avoid them.

Environment Drift: The Silent Saboteur

Imagine two ships sailing in dense fog, slowly drifting apart without realizing it. That's what environment drift is like. Your staging environment, meant to mirror production, gradually becomes different due to undocumented changes and mismatched configurations. This leads to unreliable test results. You might think a bug is fixed in staging, only to have it reappear in production, causing wasted time and effort.

How do you combat this drift? Think of configuration management tools like Ansible or Terraform as your navigational charts. These tools treat your infrastructure as code, ensuring consistency between environments and making it easy to undo changes if necessary.
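Even with infrastructure as code, manual hotfixes slip in, so it helps to check regularly for drift. A minimal sketch, assuming each environment's settings can be exported as nested dictionaries (for example, parsed from JSON or YAML): flatten both, then report every setting whose value differs. The `flatten` and `drift` names are hypothetical, and a setting missing on one side is reported as `None` on that side.

```python
def flatten(config: dict, prefix: str = "") -> dict[str, object]:
    """Turn nested settings into dotted paths, e.g. {"db": {"pool": 20}} -> {"db.pool": 20}."""
    flat: dict[str, object] = {}
    for key, value in config.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, path + "."))
        else:
            flat[path] = value
    return flat


def drift(reference: dict, candidate: dict) -> dict[str, tuple[object, object]]:
    """Map each differing setting to (reference value, candidate value)."""
    ref, cand = flatten(reference), flatten(candidate)
    return {
        key: (ref.get(key), cand.get(key))
        for key in sorted(ref.keys() | cand.keys())
        if ref.get(key) != cand.get(key)
    }
```

Comparing staging against production on a schedule, and failing the pipeline when the result is not empty, turns silent drift into a visible alert.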

Configuration Creep: The Complexity Monster

Over time, configurations can become incredibly complex, much like barnacles accumulating on a ship’s hull. This configuration creep makes environments difficult to manage and reproduce. A seemingly minor change can have unexpected consequences, leading to delays and frustration.

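To simplify things, embrace modular design: split configuration into smaller, reusable units, and where your application already follows a microservices architecture, keep each service's configuration alongside that service. These approaches break the complexity into manageable parts. They isolate changes, making your environments more adaptable and easier to control.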

Resource Contention: The Bottleneck Blues

Picture multiple ships trying to squeeze through a narrow canal at the same time. This is what resource contention feels like. Multiple teams compete for the same limited testing resources, creating bottlenecks and delays. Teams are left waiting, development slows down, and releases get pushed back.

The solution? Implement resource scheduling and on-demand provisioning with tools like Kubernetes. Think of Kubernetes as a traffic controller, dynamically allocating resources so everyone has what they need, when they need it.
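For environments that must stay shared, even a simple booking rule prevents two teams from colliding. The sketch below is a hypothetical `EnvironmentCalendar` that accepts a reservation only if it doesn't overlap an existing one; it treats bookings as half-open intervals, so one team can start exactly when another finishes.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class EnvironmentCalendar:
    """Booking sheet for one shared test environment (hypothetical helper)."""
    bookings: list[tuple[datetime, datetime, str]] = field(default_factory=list)

    def reserve(self, start: datetime, end: datetime, team: str) -> bool:
        """Book [start, end) for a team unless it overlaps an existing booking."""
        if end <= start:
            raise ValueError("end must be after start")
        for booked_start, booked_end, _ in self.bookings:
            if start < booked_end and booked_start < end:  # half-open intervals overlap
                return False
        self.bookings.append((start, end, team))
        return True
```

On-demand provisioning removes the need for booking altogether by giving each team its own environment; a calendar like this is the stopgap for resources that cannot be duplicated.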

Security Gaps: The Open Door to Disaster

A test environment with weak security is like a ship with its doors wide open, inviting pirates aboard. Sensitive data can be exposed, compliance rules broken, and your whole system compromised.

From the beginning, build security into your test environment. Use techniques like data masking and synthetic data to protect sensitive information. And just like a ship needs regular maintenance, follow a web application security checklist to keep your entire environment secure.

Ignoring Feedback: The Sinking Ship

Imagine a ship’s crew ignoring the warning signs of a leak – that’s what it’s like to ignore feedback from your development and testing teams. Frustration grows, productivity drops, and eventually, your test environment strategy falls apart.

Open communication is key. Regularly gather feedback from your teams. Understand their challenges and adapt your strategy accordingly. Your test environment strategy isn't a static document; it's a constantly evolving system, adapting to your team's needs and experiences.

By addressing these potential problems, you’re not just creating a better test environment; you're building a more efficient and resilient development process.

Measuring Success: Metrics That Actually Indicate Health

How can you tell if your test environment strategy is truly effective? Are you simply creating a facade of progress, or are you genuinely making a difference? Let's ditch the superficial metrics and dive into the measurements that truly reflect the health and effectiveness of your test environment approach. We'll explore the key performance indicators (KPIs) that high-performing teams closely monitor.

Key Performance Indicators (KPIs) for Environment Health

Successful teams don't rely on intuition; they use data to inform their decisions. Here are some essential metrics to consider:

- Environment Availability: Think of your test environment like a gym. If it's constantly closed, you can't work out! How often are your test environments ready for use? Downtime is a major obstacle to progress. Strive for high availability (99% or higher) to maintain momentum in your testing.

- Provisioning Time: Imagine ordering takeout and waiting hours for it to arrive. Frustrating, right? How long does it take to set up a new environment? Lengthy wait times discourage developers and hinder the entire process. Analyze and refine your provisioning process to minimize delays.

- Test Reliability: If your bathroom scale gives you a different weight every time you step on it, you can't trust it. Similarly, inconsistent test results erode confidence and waste valuable time. Track test reliability to pinpoint and eliminate unreliable tests.

- Developer Satisfaction: Happy developers are productive developers. Regularly survey your team to understand their satisfaction with the testing process. This provides valuable insights into pain points and areas for improvement.
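Two of these metrics are straightforward to compute from data most teams already have. The sketch below calculates availability as a percentage of a period and flags tests as unreliable when they both passed and failed on the same commit, since the code did not change between those runs. The `(commit_sha, test_name, passed)` record shape and both function names are assumptions for illustration.

```python
from collections import defaultdict


def availability(up_minutes: float, total_minutes: float) -> float:
    """Percentage of the period in which the environment was usable."""
    if total_minutes <= 0:
        raise ValueError("total_minutes must be positive")
    return 100.0 * up_minutes / total_minutes


def flaky_tests(runs: list[tuple[str, str, bool]]) -> set[str]:
    """Tests that both passed and failed on the same commit."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for sha, test, passed in runs:
        outcomes[(sha, test)].add(passed)
    # Seeing both True and False for one (commit, test) pair means the outcome
    # varied while the code did not: the test or its environment is unreliable.
    return {test for (_, test), seen in outcomes.items() if len(seen) == 2}
```

For example, an environment that was usable for 43,000 of the 43,200 minutes in a 30-day month scores about 99.54%, just above the 99% goal.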

Monitoring Environment Performance and Resource Utilization

Imagine managing a city's traffic flow without knowing how many cars are on the roads. It would be chaos! Similarly, you need insight into your environment's performance to optimize its use. Track metrics like CPU usage, memory consumption, and network traffic to identify bottlenecks and optimize resource allocation. This proactive approach allows you to address potential problems before they escalate.

Measuring the Impact on Development Velocity

Ultimately, your test environment strategy should contribute to a faster, more efficient development process. Measure its impact on essential metrics like:

- Lead Time: How long does it take for a feature to go from concept to production?

- Deployment Frequency: How often are you releasing new code?

- Change Failure Rate: What percentage of deployments result in production issues?

By tracking these metrics, you can demonstrate the tangible benefits of your test environment strategy to the business.
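Deployment frequency and change failure rate can be computed from a simple deployment log. The sketch below assumes each record is a `(deploy_date, caused_incident)` pair, which is a hypothetical shape; lead time needs commit or ticket timestamps and is left out here.

```python
from datetime import date


def delivery_metrics(deployments: list[tuple[date, bool]], period_days: int) -> dict[str, float]:
    """Summarize (deploy_date, caused_incident) records over a period of period_days."""
    if period_days <= 0:
        raise ValueError("period_days must be positive")
    total = len(deployments)
    failures = sum(1 for _, caused_incident in deployments if caused_incident)
    return {
        "deploys_per_week": total * 7 / period_days,
        "change_failure_rate_pct": 100.0 * failures / total if total else 0.0,
    }
```

Eight deployments over four weeks with one incident works out to two deploys per week and a 12.5% change failure rate, numbers you can track month over month as your environment strategy matures.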

Setting Realistic Benchmarks and Creating Actionable Dashboards

Just like a tailored suit, your benchmarks should fit your team perfectly. The ideal benchmarks depend on factors like team size, project complexity, and industry. Establish realistic goals based on your specific circumstances. Don't just create dashboards for show; prioritize actionable insights. Use the data to identify areas for improvement, monitor progress, and make informed decisions about your test environment strategy.

By concentrating on these key metrics, you'll move beyond superficial appearances and gain a deep understanding of your test environment's health and effectiveness. This data-driven approach empowers you to optimize your testing process, resulting in faster development cycles, higher-quality software, and a more productive team.

Start optimizing your development workflow with Mergify today. Try Mergify for free!