Test Automation Best Practices: A Proven Playbook for Modern Development Teams

Building Your Test Automation Foundation

Setting up test automation requires careful planning and a solid foundation. Like building a house, you need a strong base to support everything that comes after. This means understanding exactly why you need automation, picking tools that fit your needs, and creating clear processes that everyone can follow.

Defining Your Automation Goals

Before diving into automation, take time to identify what you want to achieve. Are you trying to catch more bugs? Speed up your release cycle? Each team's needs are different. For instance, if your developers keep finding old bugs coming back, you might focus on regression testing first. But if you're more concerned about getting features out quickly, your priority might be automating tests in your continuous integration pipeline.

Selecting the Right Tools

Your choice of testing tools should match your specific situation. Consider what type of application you're testing, your team's technical skills, and your budget. Popular options like Selenium, Cypress, and Playwright each have their strengths. Playwright, for example, works well for teams that need fast test runs and want to write tests in one of several languages, including JavaScript/TypeScript, Python, Java, and .NET. The right tool can make a big difference in how effectively your team can work.

Establishing Best Practices

Good habits and practices make test automation work better in the long run. Focus on choosing the right tests to automate, setting up reliable test environments, and maintaining good test data. Research shows that companies take automation seriously: 55% use it mainly to improve quality, while 30% want faster releases. Additionally, 42% of companies see automation as key to quality assurance, spending 10% to 49% of their QA budget on it. Starting with good practices helps avoid common problems like tests that fail randomly or become hard to maintain.

Building a Culture of Quality

Success with test automation depends on your whole team being on board. Everyone needs to understand its value and work together to make it successful. Testing should be part of development from start to finish, not just something that happens at the end. When quality becomes part of your team's daily work, it's easier to show stakeholders why investing in automation matters.

A strong foundation sets you up for long-term success with test automation. By focusing on clear goals, choosing the right tools, and following good practices, you create a system that truly helps your team deliver better software. This foundation gives you something solid to build on as your needs grow and change.

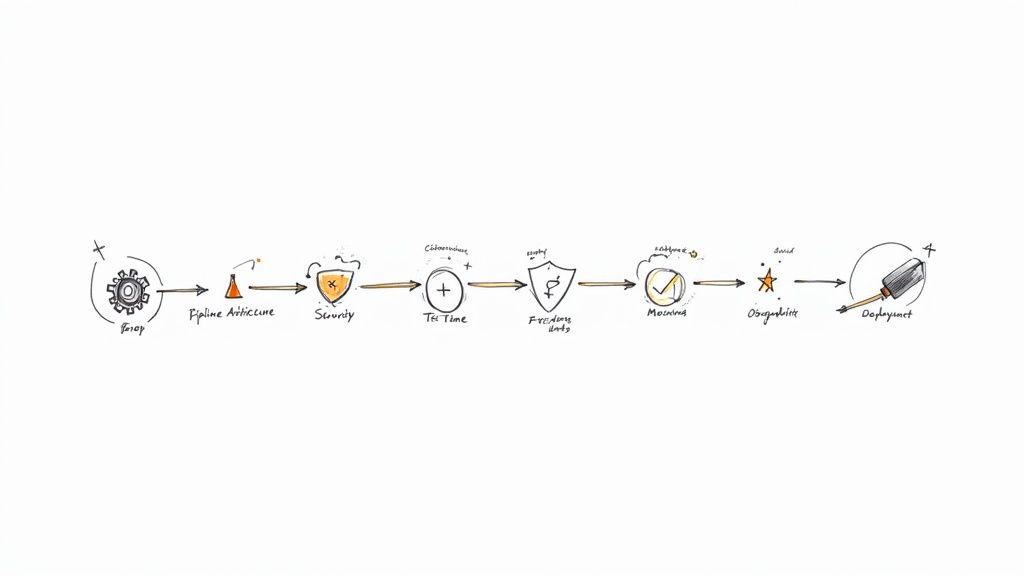

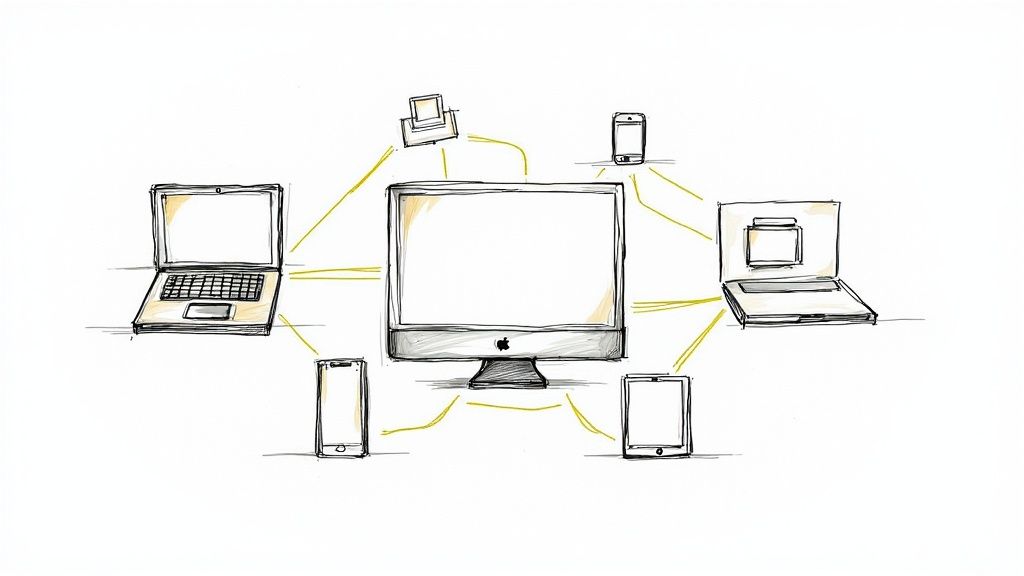

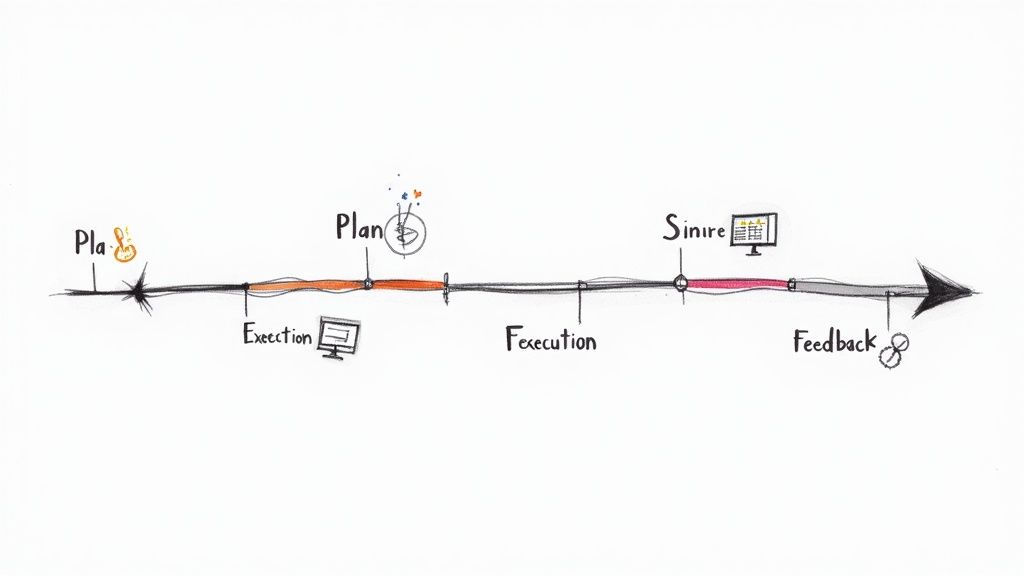

Mastering Continuous Testing Integration

Quality software development requires more than just automated tests - it needs a testing process fully woven into your development workflow. This means carefully planning when and how often tests run, and what parts of your application they verify. Continuous Testing helps teams catch issues early by running tests throughout development, reducing problems that could surface later.

Optimizing Test Timing and Frequency

Finding the right testing rhythm is crucial for your team's success. Some projects need tests running constantly for immediate feedback, while others work better with testing at key milestones. For example, a new feature under active development benefits from continuous testing, but stable components may only need periodic test runs.

Recent data from a 2023 Gartner survey shows how teams approach this: 40% run tests continuously, 38% test at milestones, and 32% follow set intervals. The benefits are clear - 60% of teams report better product quality and 58% see faster deployments with regular automated testing.

Organizing Test Suites and Execution

Smart organization of test suites helps teams work efficiently, like having a well-arranged toolbox where everything has its place. When tests are grouped logically and integrated thoughtfully into your CI/CD pipeline, you can quickly run exactly what you need.

Key practices include:

- Prioritize Tests: Run critical tests more often than less important ones

- Parallel Execution: Save time by running multiple tests at once

- Targeted Testing: Focus on tests relevant to recent code changes
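As a rough illustration of prioritized and targeted testing, the sketch below picks only the suites whose watched paths match the changed files, then orders them by priority. The `Suite` class, the suite names, the paths, and the priority numbers are all hypothetical; a real pipeline would usually take the changed-file list from its version control system.

```python
from dataclasses import dataclass
from fnmatch import fnmatch


@dataclass(frozen=True)
class Suite:
    name: str
    priority: int          # 1 = most critical; lower numbers run first
    watched_paths: tuple   # glob patterns for the source files this suite covers


def select_suites(suites, changed_files):
    """Return the suites affected by the change, most critical first."""
    relevant = [
        suite for suite in suites
        if any(
            fnmatch(path, pattern)
            for path in changed_files
            for pattern in suite.watched_paths
        )
    ]
    return sorted(relevant, key=lambda suite: suite.priority)


# Hypothetical suites; note that fnmatch's "*" also matches "/" in paths.
SUITES = [
    Suite("checkout_regression", 1, ("src/checkout/*", "src/payments/*")),
    Suite("search_ui", 3, ("src/search/*",)),
    Suite("smoke", 2, ("src/*",)),
]

selected = select_suites(SUITES, ["src/checkout/cart.py"])
print([suite.name for suite in selected])  # ['checkout_regression', 'smoke']
```

The search suite is skipped because nothing under `src/search/` changed, which is the time saving that targeted testing aims for.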

Integrating With CI/CD Pipelines

For testing to truly support development, it must be automated within your CI/CD pipeline. This means tests run automatically when code changes, giving developers quick feedback. Tools like Mergify can help by handling pull request merges and CI builds automatically, keeping your testing process smooth and reliable.

Overcoming Challenges and Measuring Effectiveness

Every testing system faces obstacles like unreliable tests or stability issues. Success requires clear processes for fixing problems and regular reviews of test suites. Tracking key metrics helps prove the value of your testing approach and spots areas to improve. Focus on measuring test execution time, pass/fail rates, and defect escape rate to ensure your tests stay effective and support quick, high-quality releases.
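To make these metrics concrete, here is a minimal sketch of how pass rate and defect escape rate might be computed. The `RunSummary` shape and the sample numbers are assumptions for illustration; the defect escape rate here is taken as the share of all known defects that were found in production rather than before release.

```python
from dataclasses import dataclass


@dataclass
class RunSummary:
    passed: int
    failed: int
    duration_seconds: float


def pass_rate(run):
    """Fraction of executed tests that passed (0.0 if nothing ran)."""
    total = run.passed + run.failed
    return run.passed / total if total else 0.0


def defect_escape_rate(found_in_production, found_before_release):
    """Fraction of all known defects that slipped past testing."""
    total = found_in_production + found_before_release
    return found_in_production / total if total else 0.0


# Hypothetical numbers for one release cycle.
run = RunSummary(passed=188, failed=12, duration_seconds=540.0)
print(f"pass rate: {pass_rate(run):.1%}")                      # pass rate: 94.0%
print(f"defect escape rate: {defect_escape_rate(3, 27):.1%}")  # defect escape rate: 10.0%
print(f"execution time: {run.duration_seconds / 60:.1f} min")  # execution time: 9.0 min
```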

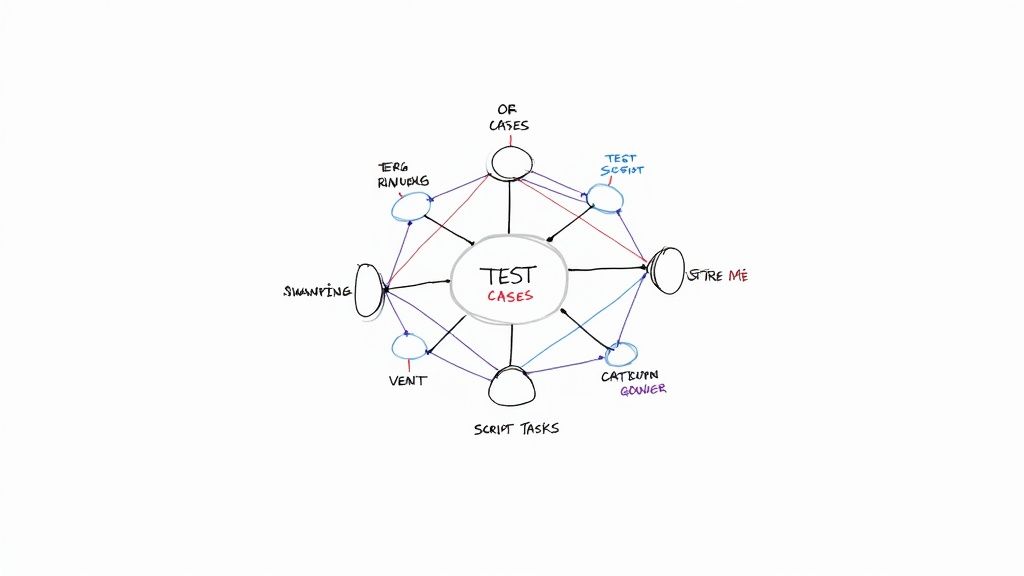

Strategically Selecting High-Impact Test Cases

The key to successful test automation isn't trying to automate every possible test case - it's identifying and focusing on the tests that deliver the most value. Just as a chef carefully selects the right ingredients for a signature dish, picking the right test cases determines the success of your automation efforts.

Identifying High-ROI Test Automation Opportunities

Some test cases deliver significant benefits when automated, while others may create more work than they're worth. To find the best candidates for automation, look for tests with high Return on Investment (ROI). The best tests to automate typically share these characteristics:

- Repetitive: Tests you run frequently, like regression testing after each code change

- Error-Prone: Complex tests where manual execution often leads to mistakes

- Time-Intensive: Long-running tests that tie up QA resources

- Mission-Critical: Tests covering core features that directly impact users

- Hard to Run Manually: Tests requiring precise timing or multiple simultaneous users

Consider a login feature test as an example. Checking login across different browsers and devices manually is tedious and prone to inconsistency. Automating this process ensures reliable results while freeing up testers for more valuable work.
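One way to compare candidates is a simple weighted score over the characteristics listed above. The weights and the example test cases below are invented for illustration and would need tuning to your own context; treat this as a sketch, not a standard formula.

```python
# Hypothetical weights for the characteristics listed above.
WEIGHTS = {
    "repetitive": 3,
    "error_prone": 2,
    "time_intensive": 2,
    "mission_critical": 3,
    "hard_to_run_manually": 2,
}


def automation_score(traits):
    """Sum the weights of the traits a test case has; unknown traits count as 0."""
    return sum(WEIGHTS.get(trait, 0) for trait in traits)


# Hypothetical candidate test cases and the traits they exhibit.
candidates = {
    "login_across_browsers": {"repetitive", "error_prone", "mission_critical"},
    "annual_report_layout": {"time_intensive"},
    "checkout_under_load": {
        "repetitive", "time_intensive", "mission_critical", "hard_to_run_manually",
    },
}

ranked = sorted(
    candidates,
    key=lambda name: automation_score(candidates[name]),
    reverse=True,
)
for name in ranked:
    print(name, automation_score(candidates[name]))
# checkout_under_load 10
# login_across_browsers 8
# annual_report_layout 2
```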

Balancing Automated and Manual Testing

While automation brings clear benefits, manual testing remains essential for certain scenarios. Tests requiring human judgment - like usability testing or exploratory testing - still need the human touch. The goal is finding the right mix of automated and manual approaches based on what works best for each type of test.

Implementing Risk-Based Testing

Risk-based testing means focusing your testing efforts where problems would hurt the most. This approach helps you identify the most critical areas of your application and ensure they get thorough testing coverage. For instance, in an e-commerce site, the checkout flow needs more rigorous testing than the "About Us" page since payment processing issues directly impact revenue.
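A common way to put this into practice is to score each area as likelihood of failure times business impact, then test the highest scores most thoroughly. The 1-to-5 scales and the scores assigned below are assumptions made up for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    failure_likelihood: int  # 1 (rare) to 5 (frequent); hypothetical scale
    business_impact: int     # 1 (cosmetic) to 5 (revenue-blocking); hypothetical scale

    @property
    def risk(self):
        return self.failure_likelihood * self.business_impact


def rank_by_risk(features):
    """Order features from highest to lowest risk score."""
    return sorted(features, key=lambda feature: feature.risk, reverse=True)


features = [
    Feature("about_us_page", failure_likelihood=1, business_impact=1),
    Feature("checkout_flow", failure_likelihood=3, business_impact=5),
    Feature("product_search", failure_likelihood=3, business_impact=3),
]

for feature in rank_by_risk(features):
    print(f"{feature.name}: risk {feature.risk}")
# checkout_flow: risk 15
# product_search: risk 9
# about_us_page: risk 1
```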

The test cases you choose to automate have a huge impact on your automation success. By focusing on tests with high reuse potential like smoke tests and regression tests, you can achieve better ROI. Many companies aim to automate between 50% and 75% of their testing, but which tests you automate matters more than hitting an arbitrary percentage.

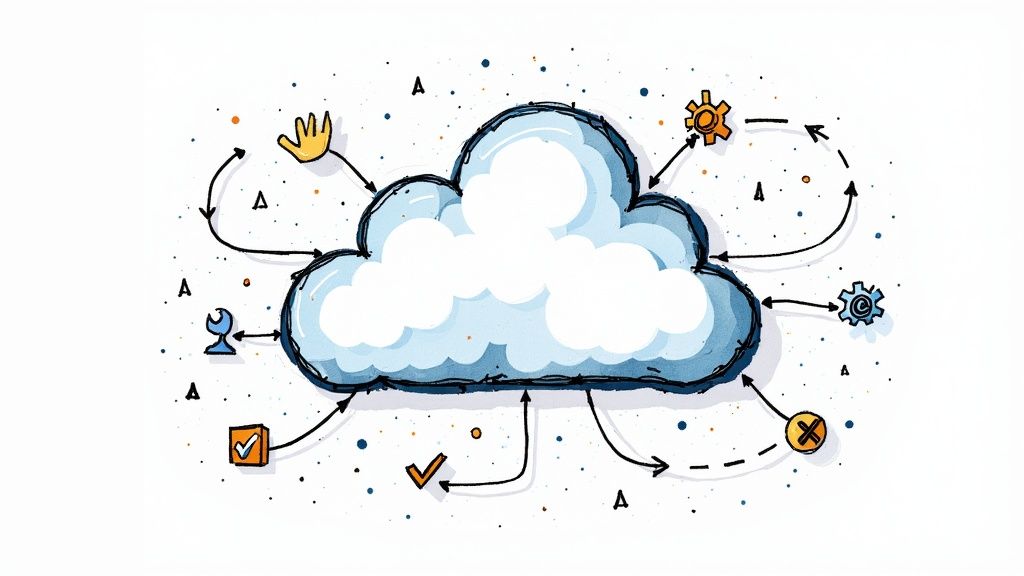

Harnessing AI and ML for Smarter Testing

Modern software testing needs to keep up with increasingly complex applications. Artificial Intelligence (AI) and Machine Learning (ML) offer practical solutions to make test automation more effective and efficient. These technologies deliver real improvements in how teams plan, execute and maintain their test suites.

Intelligent Test Case Selection

Choosing which tests to run is a constant challenge for testing teams. AI analyzes past test results, code modifications, and usage patterns to identify tests most likely to find bugs. Like having an experienced QA engineer who knows exactly which scenarios need attention, intelligent test selection helps teams focus on high-value tests while reducing overall test runtime. For example, AI can spot patterns in historical failures to prioritize tests in areas prone to defects.
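The sketch below is not machine learning; it is a plain frequency heuristic that stands in for the kind of signal such tools might learn from. It orders tests by their historical failure rate so the ones most likely to fail run first. The history format and test names are assumptions.

```python
from collections import Counter


def prioritize_by_failure_history(history, candidates):
    """Order candidate tests by past failure rate, highest first.

    history: iterable of (test_name, passed) tuples from earlier runs.
    """
    runs = Counter()
    failures = Counter()
    for name, passed in history:
        runs[name] += 1
        if not passed:
            failures[name] += 1

    def failure_rate(name):
        # Tests with no recorded runs are treated as rate 0.0.
        return failures[name] / runs[name] if runs[name] else 0.0

    return sorted(candidates, key=failure_rate, reverse=True)


# Hypothetical run history.
history = [
    ("test_checkout", False), ("test_checkout", True), ("test_checkout", False),
    ("test_login", True), ("test_login", True), ("test_login", False),
    ("test_profile", True), ("test_profile", True),
]

order = prioritize_by_failure_history(
    history, ["test_profile", "test_login", "test_checkout"]
)
print(order)  # ['test_checkout', 'test_login', 'test_profile']
```

A real AI-assisted selector would typically combine this with code-change and usage data, as described above.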

Automated Test Maintenance and Optimization

Manual test maintenance takes up valuable time that could be spent on strategic testing work. ML-based tools can help detect flaky tests, which fail intermittently due to timing or environment issues, and in some cases suggest fixes for them. Such tools can also review test performance data to suggest optimizations that make tests more reliable and efficient. This automated approach means testers can spend more time designing new test cases and less time fixing existing ones.
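Detecting flakiness does not have to start with ML. A simple rule-based sketch is shown here: a test is flagged as flaky if it both passed and failed on the same commit, since the code did not change between those runs. The result tuple format and the sample data are assumptions.

```python
from collections import defaultdict


def find_flaky_tests(results):
    """Return names of tests that both passed and failed on the same commit.

    results: iterable of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for name, commit, passed in results:
        outcomes[(name, commit)].add(passed)
    # Two distinct outcomes (True and False) for one commit means inconsistent results.
    return sorted({name for (name, _), seen in outcomes.items() if len(seen) == 2})


# Hypothetical CI results.
results = [
    ("test_upload", "abc123", True),
    ("test_upload", "abc123", False),   # same commit, different outcome: flaky
    ("test_login", "abc123", False),
    ("test_login", "abc123", False),    # consistently failing: a real failure, not flaky
    ("test_search", "abc123", True),
    ("test_search", "def456", False),   # different commits: could be a real regression
]

print(find_flaky_tests(results))  # ['test_upload']
```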

Predicting Potential Failures and Optimizing Test Execution

AI and ML help catch issues before they become problems in production. By examining code changes and historical bug patterns, these tools can highlight risky areas that need extra testing attention. Finding and fixing issues early in development saves significant time and resources compared to addressing them after release.

Integrating AI/ML into Existing Frameworks

Adding AI capabilities doesn't require rebuilding your entire test framework. Many testing platforms now include AI features that work with your current setup. For instance, Mergify can automate CI/CD tasks while you gradually incorporate AI-powered testing tools. This step-by-step approach lets teams experiment to find the right mix of AI assistance for their specific testing needs. The key is starting small with targeted AI implementations that deliver clear value, then expanding based on results.

Building Sustainable Automation Frameworks

Creating an effective test automation framework requires much more than just writing tests. The real challenge lies in building a system that evolves with your software while staying maintainable and efficient. When done right, a good framework helps teams avoid common issues like unreliable tests and messy code.

Designing for Maintainability and Scalability

A well-designed automation framework should be as easy to work with as a neatly organized bookshelf. Just as you can quickly find any book in a properly organized library, your team should be able to easily navigate and modify the framework's components.

Here's what makes a framework truly maintainable:

- Modular Design: Split your framework into smaller, self-contained pieces. This makes updates simpler and allows teams to reuse code efficiently across different parts of the system.

- Clear Code Organization: Stick to consistent coding standards and best practices. When everyone follows the same rules, the code becomes much easier to read and maintain.

- Detailed Documentation: Keep clear records of how the framework works, its architecture, and usage guidelines. This helps new team members get up to speed quickly and serves as a valuable reference for everyone.
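One widely used way to get modular design in UI tests is the page object pattern, which the article does not name but which fits the description: each page's selectors and actions live in one class, so a UI change means one update. The sketch below uses a `FakeBrowser` stand-in invented for this example so it runs without a real browser driver; the selectors are hypothetical too.

```python
class FakeBrowser:
    """Stand-in for a real browser driver so the sketch runs anywhere."""

    def __init__(self):
        self.fields = {}
        self.clicked = []

    def fill(self, selector, value):
        self.fields[selector] = value

    def click(self, selector):
        self.clicked.append(selector)


class LoginPage:
    """Page object: the only place that knows the login page's selectors."""

    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "#submit"

    def __init__(self, browser):
        self.browser = browser

    def log_in(self, username, password):
        self.browser.fill(self.USERNAME, username)
        self.browser.fill(self.PASSWORD, password)
        self.browser.click(self.SUBMIT)


def test_login_submits_credentials():
    browser = FakeBrowser()
    LoginPage(browser).log_in("alice", "s3cret")
    assert browser.fields == {"#username": "alice", "#password": "s3cret"}
    assert browser.clicked == ["#submit"]


test_login_submits_credentials()
```

Tests call `log_in` instead of repeating selectors, so if the submit button's selector changes, only `LoginPage` needs editing.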

Managing Technical Debt and Framework Evolution

Your framework needs to grow and adapt alongside your software. This means actively managing technical debt - the future cost of choosing quick fixes over better long-term solutions. Without proper management, technical debt can make your framework brittle and hard to maintain.

Key practices to keep your framework healthy:

- Regular Refactoring: Clean up and improve your framework code periodically, just like you would with application code. Fix small issues before they become big problems.

- Version Control: Use Git to track changes and enable smooth team collaboration. This gives you a safety net for trying new approaches and keeps a clear record of how the framework has evolved.

- Stay Current: Keep your testing tools and libraries up to date. This ensures you can work with new features in your software and benefit from the latest testing capabilities.

Fostering Team Collaboration and Knowledge Sharing

Success in test automation depends heavily on how well your team works together. Your framework should make collaboration easier, not harder.

Effective ways to promote teamwork:

- Pair Programming: Have two engineers work together when developing framework components. This spreads knowledge across the team and catches potential issues early.

- Code Reviews: Make review processes a regular part of framework development. This maintains quality standards and creates learning opportunities.

- Shared Ownership: Encourage everyone to take responsibility for the framework's success. When the whole team feels invested, they're more likely to keep it well-maintained.

By following these practices, you'll build a framework that delivers value for years to come. The upfront investment in good design and maintenance pays off through faster testing cycles, lower maintenance costs, and better software quality. Most importantly, you'll have a framework that can handle increasing complexity as your software grows.

Measuring and Scaling Automation Success

Successfully building a test automation framework is a key milestone, but it's just the beginning. The next step is carefully measuring your results and growing your capabilities based on actual project needs. Instead of just counting test runs, you need to understand the real value that your automation delivers.

Establishing Key Performance Indicators (KPIs)

Clear metrics are essential for guiding your automation efforts. The right Key Performance Indicators (KPIs) provide concrete data to track progress and spot areas needing improvement. Rather than focusing solely on pass/fail rates, effective automation KPIs measure true business impact. Here are the key metrics to monitor:

- Test Execution Time: Track how quickly your full test suite runs. Faster execution means your team gets feedback sooner.

- Defect Detection Rate: Compare bugs found through automation versus manual testing. Higher automated detection means catching issues earlier.

- Test Coverage: Monitor what portion of your application features have automated tests. While complete coverage isn't realistic, focus on protecting critical functionality.

- Automation ROI: Calculate cost savings from reduced manual testing effort and faster releases.

By tracking these KPIs consistently, you can see where your automation delivers value and what needs adjustment. This data guides smart refinements to your approach.
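Automation ROI can be estimated with the usual return formula: savings minus investment, divided by investment. The sketch below counts only the manual effort saved as savings and ignores the value of faster releases, which is harder to quantify. All figures are invented for illustration.

```python
def automation_roi(manual_hours_saved, hourly_rate, build_cost, maintenance_cost):
    """Return ROI as a fraction: (savings - investment) / investment."""
    savings = manual_hours_saved * hourly_rate
    investment = build_cost + maintenance_cost
    if investment <= 0:
        raise ValueError("investment must be positive")
    return (savings - investment) / investment


# Hypothetical yearly figures.
roi = automation_roi(
    manual_hours_saved=400,
    hourly_rate=50.0,
    build_cost=12_000.0,
    maintenance_cost=4_000.0,
)
print(f"ROI: {roi:.0%}")  # ROI: 25%
```

Including maintenance in the investment matters: a suite that is cheap to build but expensive to keep green can show a negative return.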

Tracking Progress and Continuous Improvement

Just like monitoring vital signs indicates health, regularly checking your automation KPIs reveals the strength of your testing practice. Reviewing metrics over time exposes meaningful patterns and potential issues. For example, if test runs keep getting slower, it may signal growing technical debt that needs attention.
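A slowdown like this can be spotted with a very simple check: compare the average duration of the most recent runs against the earliest ones. The window size, the 20% threshold, and the sample durations below are arbitrary assumptions for this sketch.

```python
from statistics import mean


def is_slowing_down(durations, window=3, threshold=1.2):
    """Return True if recent runs average more than `threshold` times the baseline.

    durations: suite run times in seconds, oldest first.
    """
    if len(durations) < 2 * window:
        return False  # not enough history to compare two separate windows
    baseline = mean(durations[:window])
    recent = mean(durations[-window:])
    return recent > baseline * threshold


# Hypothetical suite durations in seconds.
print(is_slowing_down([300, 310, 305, 340, 390, 420]))  # True
print(is_slowing_down([300, 305, 298, 302, 301, 299]))  # False
```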

This measured approach enables your team to make improvements based on real performance data rather than assumptions. You can focus efforts where they'll have the most impact on software quality.

Scaling Your Automation Practice

As projects expand, automation capabilities must grow to match. Scaling effectively requires thoughtful planning and methodical execution.

Key factors for successful scaling include:

- Framework Design: Build your framework to grow from day one. Clean, modular code makes adding and maintaining tests much easier.

- Resource Management: Plan for the infrastructure and team members needed to support a larger test suite. This may mean upgrading testing hardware or bringing in more automation engineers.

- Process Optimization: Keep testing efficient as you scale by implementing parallel test runs and smart test data handling.
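Parallel test runs can be sketched with Python's standard `concurrent.futures` module. The three check functions here are placeholders that just sleep to simulate slow, I/O-bound tests; real test runners usually provide their own parallel mode, so this only shows the idea.

```python
import time
from concurrent.futures import ThreadPoolExecutor


# Placeholder checks that simulate slow, independent tests.
def check_homepage():
    time.sleep(0.1)
    return True


def check_login():
    time.sleep(0.1)
    return True


def check_checkout():
    time.sleep(0.1)
    return False  # simulated failure


def run_in_parallel(checks, max_workers=4):
    """Run independent checks concurrently and map each name to its result."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {check.__name__: pool.submit(check) for check in checks}
        return {name: future.result() for name, future in futures.items()}


outcome = run_in_parallel([check_homepage, check_login, check_checkout])
print(outcome)
# {'check_homepage': True, 'check_login': True, 'check_checkout': False}
```

This only works safely when tests are independent of each other, which is one reason good test data handling matters as suites grow.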

Maximizing ROI and Identifying Optimization Opportunities

Effective automation should provide clear business value. The KPIs outlined above help measure and prove that value. This data justifies continued investment by showing concrete benefits to your development process.

Your metrics also spotlight chances to improve. If defect detection is low, you may need better test cases. Long run times could mean it's time for parallel execution or cleaning up bloated tests.

Using data to guide decisions and constantly refining your approach leads to robust, scalable testing that improves quality throughout development.
