Mastering Software Quality Assurance Processes

When you hear "software quality assurance," what comes to mind? For many, it's the final, frantic testing phase right before a product ships. But that's a bit like saying a chef's job is just tasting the food before it leaves the kitchen.

Real software quality assurance processes are the master blueprint for building exceptional software from day one. Together, they form a complete framework designed to prevent defects, not just find them. This proactive approach weaves quality into every single stage of development, making sure the final product is not just functional, but also reliable and secure.

The Core Purpose of Quality Assurance

Let's stick with that chef analogy for a moment. Quality Control (QC) is that final taste test. It's a reactive check to catch any obvious mistakes right at the end.

But software quality assurance processes are the entire system the chef builds to guarantee a perfect dish, every single time. It's about the whole kitchen, not just the final plate.

This system includes things like:

- Sourcing the best ingredients (starting with well-defined requirements)

- Perfecting the recipe (using standardized coding practices)

- Training the kitchen staff (fostering deep collaboration between developers and QA)

- Maintaining clean equipment (ensuring a stable and reliable testing environment)

It’s all about refining the process to stop problems before they even start. You're building quality in, not just trying to inspect defects out.

From Gatekeeper to Strategic Partner

QA used to be seen as the final boss—a gatekeeper you had to get past before release. That old way of thinking is long gone. Today, QA is a continuous, collaborative effort that’s baked into the entire software development lifecycle (SDLC).

It’s about creating a culture where quality is everyone’s job, from product managers to the newest developer on the team.

The real goal of QA is to build confidence. It’s a structured way to prove that the software will not only work as intended but will also stand up to the messy, unpredictable conditions of the real world and exceed what users expect. This shift to a proactive mindset dramatically cuts down the risk of finding expensive, reputation-damaging bugs after release.

The Growing Importance of QA

The proof is in the numbers. The market for quality assurance is exploding as automation and AI become central to how we build software. The software quality automation job market is on track to hit USD 58.6 billion in 2025. By 2035, it's expected to nearly double, landing somewhere between USD 120 and 130 billion.

This incredible growth, which you can read more about on TechStartAcademy.io, shows just how vital QA has become, especially in high-stakes industries like healthcare, finance, and e-commerce where software failures are simply not an option. It all points to one simple truth: investing in quality upfront is always smarter than paying to fix mistakes later.

Navigating the Core Stages of the QA Lifecycle

Every solid piece of software is built on a structured foundation. Much like building a house, you don't just start laying bricks and hope for the best; you start with a detailed blueprint. This journey is the software quality assurance lifecycle, a roadmap that guides a feature from a simple idea to a reliable tool in the hands of users.

Think of it as a series of connected stages, each with a clear purpose. Rushing through a phase or skipping a step can create foundational cracks that are far more painful and expensive to fix down the road. Let’s walk through this essential path.

Stage 1: Requirement Analysis

Before a single line of code is written, the QA process kicks off by asking, "Why?" This first stage is a deep dive into the project's requirements. Here, QA teams work closely with product managers and developers, scrutinizing specifications, user stories, and acceptance criteria.

The goal is absolute clarity. Are the requirements testable? Are there any confusing or contradictory statements? For a mobile banking app, this means asking questions like, "What specific security protocols must the 'transfer funds' feature follow?" or "What’s the expected response time when a user checks their balance?" This phase ensures everyone is building from the same blueprint.

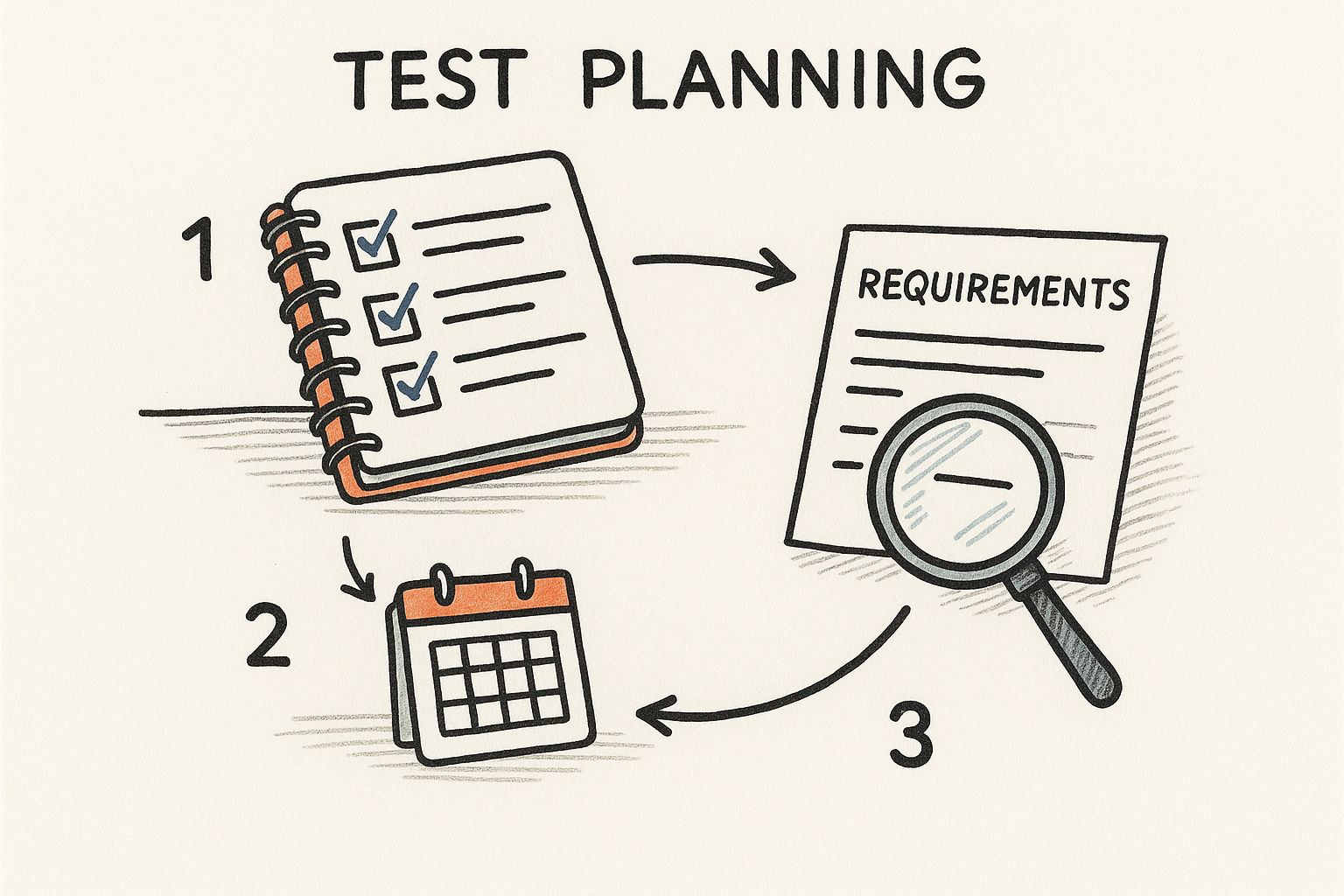

Stage 2: Test Planning

With a solid grasp of the requirements, it’s time to draft the architectural plan for quality. This is Test Planning, where the QA lead or manager maps out the entire testing strategy. It's not just about deciding what to test, but also how, when, and by whom.

This stage involves several key activities:

- Defining the scope: Which features are in, and just as importantly, which are out?

- Estimating resources: How many testers do we need? What tools and environments are required?

- Setting deadlines: When does each testing phase begin and end?

- Identifying risks: What could derail our efforts, like an unstable test environment?

This is where you organize all those requirements, schedules, and resources into a coherent strategy.

A well-structured plan acts as the north star for all quality assurance work, making sure the effort is organized and purposeful.

Stage 3: Test Case Design

Now we zoom in from the high-level plan to the nitty-gritty details. Test Case Design is where QA engineers translate requirements into step-by-step test scenarios. Each test case is a mini-script detailing an action, the input data, and the expected result.

For that banking app, a test case might look like this:

- Action: User logs in with valid credentials.

- Input: Correct username and password.

- Expected Outcome: User is successfully authenticated and lands on the account dashboard within 2 seconds.

Great test cases are precise, repeatable, and cover everything from the "happy path" (positive scenarios) to what happens when things go wrong, like entering an incorrect password.
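To make that concrete, here's a minimal sketch of the login test case written as automated checks in Python. The `login` function, the `USERS` store, and the `LoginResult` type are hypothetical stand-ins invented for this illustration; a real banking app would call its own authentication service instead.

```python
import time
from dataclasses import dataclass

# Hypothetical in-memory user store, used only for this illustration.
USERS = {"alice": "correct-horse"}


@dataclass
class LoginResult:
    authenticated: bool
    landing_page: str


def login(username: str, password: str) -> LoginResult:
    """Hypothetical stand-in for the banking app's login call."""
    if USERS.get(username) == password:
        return LoginResult(authenticated=True, landing_page="dashboard")
    return LoginResult(authenticated=False, landing_page="login")


def test_login_with_valid_credentials() -> None:
    # Action and input: log in with a correct username and password.
    start = time.perf_counter()
    result = login("alice", "correct-horse")
    elapsed = time.perf_counter() - start
    # Expected outcome: authenticated, on the dashboard, within 2 seconds.
    assert result.authenticated
    assert result.landing_page == "dashboard"
    assert elapsed < 2.0


def test_login_with_wrong_password() -> None:
    # Negative scenario: an incorrect password must be rejected.
    result = login("alice", "wrong-password")
    assert not result.authenticated
    assert result.landing_page == "login"
```

Each function maps directly onto the action, input, and expected outcome of a written test case, and a runner such as pytest would pick up both functions by their `test_` prefix.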

Stage 4: Test Execution and Defect Tracking

This is where the rubber meets the road. During Test Execution, engineers run the test cases they designed, meticulously comparing what actually happens with what should happen. This is where the bugs surface.

When a bug is found, it isn't just jotted down on a sticky note. It's formally logged in a bug-tracking system like Jira. A solid defect report includes crucial information:

- A clear, concise title.

- Steps to reproduce the bug.

- The actual vs. expected result.

- Screenshots or video recordings.

- Severity and priority levels.

A well-documented defect is the first step toward a quick resolution. Clear bug reports eliminate guesswork for developers, enabling them to locate and fix the root cause efficiently, which is a cornerstone of effective software quality assurance processes.
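If you want to enforce that structure, one option is to model the report as a small data type. The sketch below is an assumption-laden illustration, not the schema of Jira or any other tracker: the `DefectReport` class, its field names, the `Severity` levels, and the priority scale are all made up for the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class Severity(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass
class DefectReport:
    title: str
    steps_to_reproduce: list[str]
    expected_result: str
    actual_result: str
    severity: Severity
    priority: int  # assumed scale: 1 means "fix first"
    attachments: list[str] = field(default_factory=list)  # screenshots, videos

    def missing_fields(self) -> list[str]:
        """Return the names of required fields that were left empty."""
        required = {
            "title": self.title,
            "steps_to_reproduce": self.steps_to_reproduce,
            "expected_result": self.expected_result,
            "actual_result": self.actual_result,
        }
        return [name for name, value in required.items() if not value]


report = DefectReport(
    title="Transfer fails for amounts over 1,000",
    steps_to_reproduce=["Log in", "Open 'transfer funds'", "Enter 1,500", "Submit"],
    expected_result="Transfer is confirmed",
    actual_result="Generic error page is shown",
    severity=Severity.HIGH,
    priority=1,
)
```

A check like `missing_fields()` lets a team reject incomplete reports before they ever reach a developer.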

Once a bug is fixed, the QA team re-tests the feature to confirm the fix works. They also perform regression testing to ensure the change didn't accidentally break something else. This cycle of testing, logging, fixing, and re-testing continues until the software hits the quality bar and is ready for a confident release.
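Here's a rough sketch of how that re-test and regression check could be expressed in code. It assumes test results are available as simple name-to-pass/fail mappings; the function names and the sample test names are hypothetical.

```python
def fix_confirmed(after: dict[str, bool], retest_name: str) -> bool:
    """Re-test: did the previously failing test pass after the fix?"""
    return after.get(retest_name, False)


def find_regressions(before: dict[str, bool], after: dict[str, bool]) -> list[str]:
    """Regression check: tests that passed before the fix but do not pass now."""
    return sorted(
        name
        for name, passed in before.items()
        if passed and not after.get(name, False)
    )


# Results before and after a fix for the failing transfer test.
before = {"test_login": True, "test_transfer": False, "test_balance": True}
after = {"test_login": True, "test_transfer": True, "test_balance": False}

print(fix_confirmed(after, "test_transfer"))  # True: the fix works
print(find_regressions(before, after))        # ['test_balance']: the fix broke something else
```

In this made-up run the fix is confirmed, but the balance test regressed, so the cycle continues.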

To bring it all together, here’s a quick overview of how these stages flow.

Key Stages in the QA Process

| Stage | Primary Objective | Key Activities |
|---|---|---|
| Requirement Analysis | Ensure all requirements are clear, complete, and testable before development starts. | Reviewing user stories, acceptance criteria, and functional specifications. Identifying ambiguities. |
| Test Planning | Create a comprehensive strategy outlining the scope, resources, schedule, and risks. | Defining test objectives, estimating effort, scheduling activities, and selecting tools. |
| Test Case Design | Develop detailed, step-by-step test cases to validate software functionality. | Writing test scenarios, preparing test data, and defining expected outcomes for all use cases. |
| Test Execution | Execute test cases to find defects and verify the software meets requirements. | Running manual and automated tests, logging bugs, and reporting results. |
| Defect Tracking & Retesting | Manage the lifecycle of defects from discovery to resolution and confirm fixes. | Documenting bugs, re-testing fixed issues, and performing regression testing. |

Each stage builds upon the last, creating a structured process that systematically drives a product toward quality and stability.

Choosing the Right QA Methodology for Your Project

Picking the right approach to quality assurance feels a lot like choosing the right way to build a house. You wouldn't use the same blueprint for a skyscraper as you would for a custom home. It's the same with software—the best software quality assurance processes are tailored to your project's unique needs, timeline, and goals.

There’s no one-size-fits-all solution here. If you force a methodology that clashes with your team's workflow, you'll just create friction, slow things down, and let bugs slip through. The trick is to match your QA approach to your development style, making quality a natural part of the process, not a roadblock.

The Foundational Waterfall Approach

Imagine building a house from a rigid, unchangeable blueprint. First, you pour the foundation. Then you frame the walls. Then you do the plumbing. Every single stage has to be completely finished before the next one can even start. That’s the Waterfall model in a nutshell.

In this classic approach, testing is its own separate phase that happens only after all the development work is done. It’s a very structured, linear process that works surprisingly well for projects with crystal-clear, static requirements that you know won't change.

- Pros: It's straightforward to understand and manage. The clear separation of phases makes it easy to track progress against a fixed plan.

- Cons: It's incredibly inflexible. If you find a major bug during that final testing phase, going back to fix it is a nightmare. It can be wildly expensive and time-consuming because you have to revisit much earlier stages. This makes it a poor fit for any project where requirements might evolve.

You'll often see this method in industries with strict regulatory hoops to jump through, like medical device software, where every single step needs to be documented and signed off before moving on.

The Modern Agile Approach

Now, let's picture building that same house, but one room at a time. You build the kitchen, get feedback from the homeowner, make a few tweaks, and then you move on to the living room. This iterative, feedback-driven process is the heart of the Agile methodology.

In an Agile world, quality isn't some final gate you have to pass through; it's a continuous, shared responsibility. Testing happens right alongside development in short cycles called "sprints." This means developers and QA engineers are working together constantly, catching issues early and often.

By integrating testing into every sprint, Agile teams ensure that quality is built into the product from the very beginning. This approach dramatically reduces the risk of discovering major, costly defects late in the project lifecycle.

Agile is designed to embrace change. Its flexibility is perfect for projects where you don't know exactly what the final product will look like from day one, allowing teams to adapt to new requirements and user feedback on the fly.

DevOps and Continuous Quality

DevOps takes the principles of Agile and pushes them even further by tearing down the walls between development, QA, and IT operations. Think of it like having the architects, builders, and city inspectors all working as a single, unified team on the construction site, automating as much of their work as they possibly can.

In a DevOps culture, quality gets woven directly into a Continuous Integration/Continuous Deployment (CI/CD) pipeline. Automated tests run every single time a developer commits new code. This creates a super-fast feedback loop that ensures every change is validated almost instantly.

This approach is all about automation to keep things moving fast without sacrificing reliability. If you want to go deeper on this, check out our guide on best practices for automated testing to see how you can really supercharge your CI/CD pipeline.

Comparing Methodologies

To make the right call, it helps to see how these approaches stack up against each other based on what your project really needs.

| Factor | Waterfall | Agile | DevOps |
|---|---|---|---|
| Flexibility | Rigid and resistant to change. | Highly adaptable to changing requirements. | Extremely flexible with a focus on rapid iteration. |
| Testing Phase | A distinct, final stage after development. | Integrated and continuous throughout each sprint. | Automated and embedded directly into the CI/CD pipeline. |
| Feedback Loop | Very slow; feedback comes at the end. | Fast; feedback is gathered at the end of each sprint. | Immediate; feedback is provided on every code commit. |
| Best For | Projects with fixed, well-defined requirements. | Evolving projects with dynamic requirements. | Projects demanding high velocity and reliability. |

Ultimately, choosing the right methodology comes down to understanding your project's DNA. For a simple, unchanging application, Waterfall might be enough. But for most modern software projects that need to adapt and innovate quickly, an Agile or DevOps approach provides the speed, flexibility, and continuous quality you need to win.

Tracking the QA Metrics That Actually Matter

If you can't measure it, you can't improve it. This simple truth is the bedrock of any effective QA process. Without hard data, your quality assurance efforts are just guesswork, a shot in the dark. Metrics and Key Performance Indicators (KPIs) are what transform QA from a subjective art into a data-driven science, giving you the real insights needed to sharpen your strategy.

Tracking the right numbers helps you answer the big questions. Are your testing efforts actually efficient? Do they catch the bugs that matter? Most importantly, is the quality of your product genuinely getting better over time? Let's dig into the essential metrics that give you those answers.

Measuring the Efficiency of Your QA Process

Efficiency metrics are all about the speed and productivity of your QA team. They tell you how well you're using your resources and whether your process is creating bottlenecks instead of clearing them. Think of these numbers as the 'how' behind your testing operation.

A few key efficiency metrics to watch:

- Test Execution Rate: This is the percentage of planned tests you actually managed to run in a given period. If this number is low, it might mean your test plans are too ambitious, or your team is hitting unexpected roadblocks.

- Tests Passed Rate: Simply, what percentage of the tests you ran actually passed? A consistently low pass rate could point to problems with new features, an unstable test environment, or even poorly written tests.

- Average Time to Test: This one tracks the average time it takes to run a single test case. It's a fantastic way to spot slow or overly complex tests, which are prime candidates for simplification or automation.

Keeping an eye on these numbers helps you streamline your workflow, making sure your team is focused on high-impact activities instead of getting bogged down.
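The three efficiency metrics above boil down to simple arithmetic. Here's a minimal sketch; the function names and the sample numbers are invented for illustration.

```python
def execution_rate(executed: int, planned: int) -> float:
    """Test Execution Rate: percentage of planned tests that were actually run."""
    if planned <= 0:
        raise ValueError("planned must be positive")
    return 100.0 * executed / planned


def passed_rate(passed: int, executed: int) -> float:
    """Tests Passed Rate: percentage of executed tests that passed."""
    if executed <= 0:
        raise ValueError("executed must be positive")
    return 100.0 * passed / executed


def average_test_time(durations_seconds: list[float]) -> float:
    """Average Time to Test: mean duration of a single test case, in seconds."""
    if not durations_seconds:
        raise ValueError("at least one duration is required")
    return sum(durations_seconds) / len(durations_seconds)


# Made-up sprint: 200 tests planned, 180 run, 171 passed.
print(execution_rate(180, 200))               # 90.0
print(passed_rate(171, 180))                  # 95.0
print(average_test_time([30.0, 45.0, 15.0]))  # 30.0
```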

Gauging the Effectiveness of Your Testing

Efficiency is about speed, but effectiveness is all about impact. These metrics show how good your QA process is at its core mission: finding defects before they ever see the light of day with a customer. They measure the 'what'—the real quality outcome of all your hard work.

Tracking effectiveness is crucial because a team can be incredibly efficient at running tests that don't actually find the important bugs. Effectiveness metrics ensure your quality assurance efforts are hitting the mark.

Here are the top metrics you should be monitoring:

- Defect Detection Percentage (DDP): This metric compares the bugs your QA team found before a release to the total number of bugs found both before and after. A high DDP (ideally over 90%) is a sign of a really solid internal QA process.

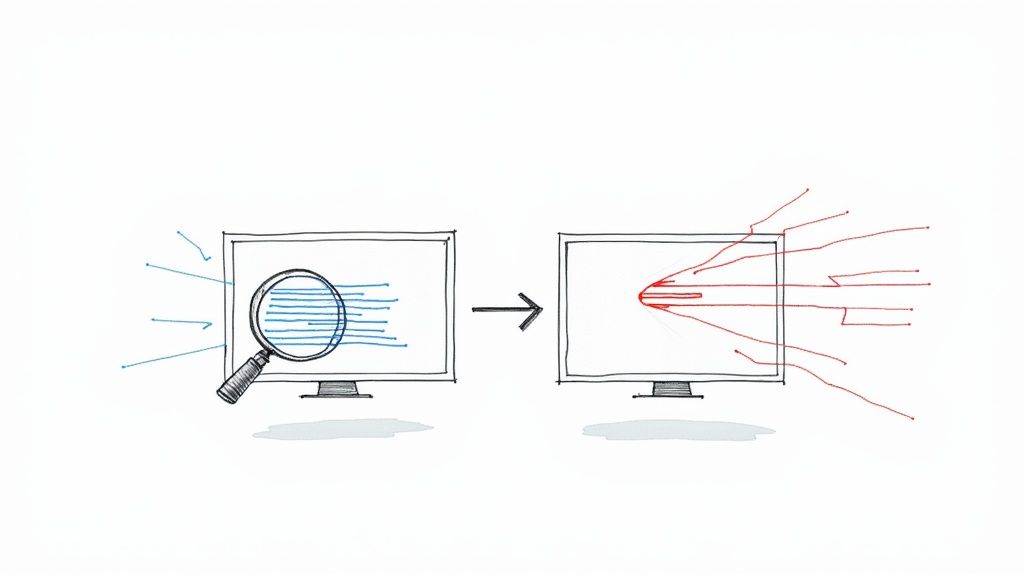

- Defect Leakage: This is the flip side of DDP and arguably one of the most critical metrics you can track. It measures the percentage of bugs that leaked past your QA process and were discovered by users in production. A high leakage rate is a massive red flag that your quality gates need to be much stronger.

These effectiveness indicators paint a clear picture of how well your software quality assurance processes are protecting your users from bugs.
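As a quick worked example, here's a sketch of both calculations. It assumes defect leakage is measured against the total number of defects found before and after release, which makes it the exact complement of DDP; some teams use a different denominator, so treat the formula as one common convention rather than the only one. The function names and numbers are hypothetical.

```python
def defect_detection_percentage(found_before_release: int, found_after_release: int) -> float:
    """DDP: share of all known defects that QA caught before release."""
    total = found_before_release + found_after_release
    if total <= 0:
        raise ValueError("at least one defect is required")
    return 100.0 * found_before_release / total


def defect_leakage(found_before_release: int, found_after_release: int) -> float:
    """Leakage: share of all known defects that escaped to production."""
    total = found_before_release + found_after_release
    if total <= 0:
        raise ValueError("at least one defect is required")
    return 100.0 * found_after_release / total


# Made-up release: QA found 45 bugs, users later reported 5 more.
print(defect_detection_percentage(45, 5))  # 90.0
print(defect_leakage(45, 5))               # 10.0
```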

Assessing Overall Product Quality and Stability

Finally, product quality metrics give you that high-level, 10,000-foot view of your software's health and the user experience. These KPIs often blend data from both development and operations to show you what reliability and stability look like in the real world.

Important product quality metrics include:

- Defect Density: This calculates the number of confirmed defects per unit of code, like defects per 1,000 lines of code. It’s a great way to pinpoint which parts of your application are the most complex or bug-prone.

- Mean Time To Repair (MTTR): This measures the average time it takes to fix a bug, from the moment it's reported to the moment the fix is deployed. A low MTTR is a strong signal of an agile and responsive development team.
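Both product quality metrics are easy to compute once you have the raw data. The sketch below assumes you can export a defect count, a line count, and reported/deployed timestamps for each fix; the function names and sample values are made up.

```python
from datetime import datetime, timedelta


def defect_density(defects: int, lines_of_code: int) -> float:
    """Confirmed defects per 1,000 lines of code."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def mean_time_to_repair(repairs: list[tuple[datetime, datetime]]) -> timedelta:
    """Average time from bug report to deployed fix.

    Each item is a (reported_at, fix_deployed_at) pair.
    """
    if not repairs:
        raise ValueError("at least one repair is required")
    total = sum((deployed - reported for reported, deployed in repairs), timedelta())
    return total / len(repairs)


# Made-up module: 12 confirmed defects in 48,000 lines of code.
print(defect_density(12, 48_000))  # 0.25

# Two made-up fixes that took 4 hours and 8 hours.
repairs = [
    (datetime(2025, 1, 6, 9, 0), datetime(2025, 1, 6, 13, 0)),
    (datetime(2025, 1, 7, 9, 0), datetime(2025, 1, 7, 17, 0)),
]
print(mean_time_to_repair(repairs))  # 6:00:00
```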

This strategic focus on measurement is quickly becoming standard practice. According to a recent World Quality Report, about 77% of companies are now investing in artificial intelligence to improve their QA, highlighting a growing recognition that AI can help spot defects earlier and optimize testing cycles.

By combining efficiency, effectiveness, and product quality metrics, you create a powerful feedback loop. To dive deeper into which indicators can help your team, check out our detailed guide on software quality metrics. This data empowers you to make smarter decisions, justify resources, and continuously improve the processes that deliver a fantastic product.

Integrating QA into Your CI/CD Pipeline

Let's be honest: modern software development moves at a blistering pace. The old days of developers finishing their work and "throwing it over the wall" to a separate QA team just don't cut it anymore. That final, siloed testing phase is a bottleneck we can't afford.

This is where software quality assurance processes have to evolve. Instead of being a final checkpoint, quality assurance must become a living, breathing part of your Continuous Integration/Continuous Deployment (CI/CD) pipeline.

Think of your CI/CD pipeline as a high-speed, automated assembly line. Every time a developer commits code, that new part is instantly inspected for quality before it ever moves down the line. This simple shift transforms quality from a roadblock into a seamless, rapid feedback loop that keeps everything moving.

Establishing Automated Quality Gates

The secret to making this work is a concept called quality gates. These are automated checkpoints baked directly into your pipeline. Code must pass these gates to advance to the next stage. It's like an automated security scan at the airport; if your code doesn't pass, it's immediately flagged and sent back for inspection. No exceptions.

These gates are your safety net. They ensure that faulty code never makes it into the main codebase or—even worse—into production. This allows your team to move quickly and confidently without constantly worrying about breaking things.

A typical setup might have several quality gates:

- Commit Stage: Every single time code is committed, unit tests and static code analysis tools kick in automatically.

- Build Stage: If those initial tests pass, the code is built, and more in-depth integration tests are run.

- Deployment Stage: Before anything gets pushed to a staging environment, performance and security scans are executed.

This layered approach means feedback is almost instant. A developer knows within minutes if their change broke something, letting them fix it while the context is still fresh in their mind. For a deeper look at setting up these kinds of workflows, check out our continuous integration best practices guide.
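To show the idea in miniature, here's a sketch of a layered gate runner. It is not how Jenkins, GitHub Actions, or any other CI platform is configured; the `Gate` type, the `run_pipeline` function, and the simulated checks are all hypothetical. In a real pipeline each check would invoke your test runner or scanner.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Gate:
    stage: str
    name: str
    check: Callable[[], bool]  # returns True when the gate passes


def run_pipeline(gates: list[Gate]) -> tuple[bool, list[str]]:
    """Run gates in order and stop at the first failure."""
    log: list[str] = []
    for gate in gates:
        passed = gate.check()
        log.append(f"{gate.stage}/{gate.name}: {'pass' if passed else 'FAIL'}")
        if not passed:
            return False, log  # later stages never run
    return True, log


gates = [
    Gate("commit", "unit tests", lambda: True),
    Gate("commit", "static analysis", lambda: True),
    Gate("build", "integration tests", lambda: False),  # simulated failure
    Gate("deployment", "security scan", lambda: True),
]

ok, log = run_pipeline(gates)
print(ok)  # False
for line in log:
    print(line)
```

Because the runner stops at the first failing gate, the simulated integration failure keeps the security scan from running at all, which mirrors how a broken change never advances to the next stage.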

Tools That Power Integrated QA

Of course, this level of automation doesn't happen by magic. You need the right tools. Platforms like Jenkins, GitHub Actions, and CircleCI are the conductors of the CI/CD orchestra, triggering your automated testing frameworks every time a commit or pull request comes in.

This is where a tool like Mergify comes in, automating the pull request workflow, a critical piece of the CI/CD puzzle.

For example, Mergify rules can be configured to automatically merge a pull request only after all required checks, like tests and code reviews, have passed. This is a real-world example of an automated quality gate in action: no code gets merged until it meets your predefined quality standards.
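The logic behind a merge gate like this is simple to reason about. The sketch below illustrates the decision only; it is not Mergify's configuration format or API, and the `PullRequest` type, `can_merge` function, and check names are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    check_results: dict[str, bool]  # check name -> did it pass?
    approvals: int                  # number of approving code reviews


def can_merge(pr: PullRequest, required_checks: list[str], min_approvals: int = 1) -> bool:
    """Allow a merge only if every required check passed and reviews are in."""
    checks_ok = all(pr.check_results.get(name, False) for name in required_checks)
    return checks_ok and pr.approvals >= min_approvals


required = ["unit-tests", "lint"]

ready = PullRequest(check_results={"unit-tests": True, "lint": True}, approvals=1)
blocked = PullRequest(check_results={"unit-tests": True}, approvals=2)  # lint never ran

print(can_merge(ready, required))    # True
print(can_merge(blocked, required))  # False
```

Note that a check which never reported is treated the same as a failed one, so a missing result can't sneak a pull request through.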

The whole point is to make the right thing the easy thing. By automating these quality checks, you eliminate both human error and the soul-crushing toil of running the same tests over and over. This frees up your QA engineers to do what they do best: complex, exploratory testing that finds the truly tricky bugs.

The Future of QA in CI/CD

The integration of QA and CI/CD isn't standing still. We're seeing a massive shift toward automated, cloud-based testing environments that can scale up instantly and run tests faster than ever, all while keeping data secure.

One of the most exciting trends for 2025 is autonomous test data generation, which uses synthetic data to create realistic, privacy-safe datasets for testing. This move toward cloud-native tools and smart data generation is quickly becoming the foundation of modern QA, enabling agile, cross-platform testing on a scale we could only dream of a few years ago.
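At its simplest, synthetic test data is just generated records that look realistic but belong to no real person. Here's a deliberately basic sketch using only Python's standard library; the `synthetic_customers` function and its field names are hypothetical, and real autonomous data generation tools go much further than this.

```python
import random
import string


def synthetic_customers(count: int, seed: int = 0) -> list[dict]:
    """Generate fake customer records that contain no real personal data."""
    rng = random.Random(seed)  # seeded so test runs are repeatable
    customers = []
    for i in range(count):
        name = "".join(rng.choices(string.ascii_lowercase, k=8))
        customers.append(
            {
                "customer_id": f"CUST-{i:05d}",
                "email": f"{name}@example.test",  # reserved test domain
                "balance_cents": rng.randint(0, 1_000_000),
            }
        )
    return customers


for customer in synthetic_customers(3, seed=42):
    print(customer)
```

Seeding the generator means the same dataset comes back on every run, which keeps failing tests reproducible.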

By embracing this automated, integrated approach, your team can build a workflow that delivers instant feedback, cuts down on manual work, and ultimately ships higher-quality software at the speed business demands today.

Common Questions About Software QA Processes

Even with a solid plan, a few questions always pop up when teams start getting serious about their software quality assurance processes. Let's clear up some of the most common points of confusion to help you build a confident, quality-driven culture.

Quality Assurance vs Quality Control

One of the first hurdles is understanding the difference between Quality Assurance (QA) and Quality Control (QC). They sound similar, but they tackle quality from two completely different angles.

Think of it like building a car. QA is the architect designing the entire assembly line, making sure every process, tool, and training program is set up to prevent defects from ever happening. It’s proactive and focuses on the process.

QC, on the other hand, is the inspector at the very end of that line, checking the finished car before it gets a "for sale" sticker. It’s a reactive check designed to find defects in the final product.

Quality Assurance (QA) is a proactive process designed to prevent defects by improving development systems. Quality Control (QC) is a reactive process designed to identify defects in the final product through testing.

You absolutely need both. One builds quality in from the start, and the other validates that you succeeded.

Top Challenges in Implementing QA

Putting a formal QA process in place isn’t always a walk in the park. Teams often run into the same few roadblocks that can derail even the best intentions.

The most common obstacles we see are:

- Aggressive Deadlines: When the pressure is on, testing is often the first thing to get cut. It's a classic case of sacrificing long-term quality for short-term speed.

- Communication Gaps: If developers, testers, and product owners aren't talking, assumptions take over. This almost always leads to misunderstandings about what's actually being built.

- Vague Requirements: You can't test what you haven't defined. When requirements are fuzzy or incomplete, testers are just guessing, and that's a recipe for disaster.

Getting past these hurdles takes real commitment from leadership. It means setting achievable deadlines, insisting on clear communication, and treating QA as a non-negotiable part of how you build software.

Getting Started with Formal QA

For a small team, the thought of implementing "formal QA" can feel like a mountain to climb. But you don't need a huge team or expensive tools to make a real difference. The secret is to start small and focus on consistency.

Here are a few simple steps to get the ball rolling:

- Document Requirements Clearly: Before a single line of code is written, write down what the software is supposed to do. Keep it simple.

- Create Basic Test Plans: For any new feature, just outline what you’re going to test. It doesn't need to be a massive document.

- Use Manual Checklists: Before every release, run through a consistent checklist. You’d be amazed at what you can catch with this simple habit.

- Adopt a Bug Tracker: Stop tracking bugs in spreadsheets or Slack. Use a simple tool to log, prioritize, and track issues so nothing falls through the cracks.

The goal here is to build good habits, not a complex bureaucracy. Start with these basics, and you can gradually introduce more advanced practices like automation as your team grows.

Automating your quality gates is the next logical step to scaling your QA processes. With Mergify, you can automate your pull request workflow, ensuring no code gets merged until all your required checks and tests have passed. This acts as a guardian for your codebase, freeing up your team to focus on what they do best: building great software. Discover how Mergify can streamline your development lifecycle at https://mergify.com.