Pytest Tutorial: The Complete Guide to Python Testing Excellence

Getting Started With Pytest Success

Writing tests doesn't have to be complicated. With the right approach and tools like Pytest, testing can become a natural part of how you code. Let's walk through the basics of getting started with Pytest and build your confidence in writing clean, effective tests.

Installing Pytest

Getting Pytest up and running is straightforward. Open your terminal and run: pip install pytest

Once installed, verify everything worked by checking the version: pytest --version

That's all you need to start writing your first test. The simple setup process means you can focus on what matters - writing good tests for your code.

Writing Your First Pytest Test

One of Pytest's best features is its simplicity. You don't need complex setup or extra code - just write a Python function that starts with test_. For example:

def test_addition():
    assert 2 + 2 == 4

This clean approach sets Pytest apart from Python's built-in unittest, which expects tests to be written as methods on a unittest.TestCase subclass.
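For comparison, here is a small sketch of the same check written in both styles. The class name TestAdditionUnittest is made up for illustration. Pytest can collect and run both versions, so an existing unittest suite does not have to be rewritten all at once.

```python
import unittest


class TestAdditionUnittest(unittest.TestCase):
    """unittest style: a TestCase subclass with assertion methods."""

    def test_addition(self):
        self.assertEqual(2 + 2, 4)


def test_addition():
    """Pytest style: a plain function and a bare assert."""
    assert 2 + 2 == 4
```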

Structuring Your Test Directory

As your project grows, organizing tests becomes important. Create a tests folder in your project's root directory. Inside this folder, organize your test files to match your main code structure. This makes it easy to:

- Find tests for specific features

- Run related tests together

- Keep track of what's tested

- Add new tests in logical places

Running Pytest From the Command Line

Pytest's command line tools give you good control over your testing. From your project folder, simply run pytest to execute all tests. Need to run specific tests? Use commands like:

- pytest tests/test_api.py: Run tests in one file

- pytest -k "test_user": Run tests whose names contain "test_user"

- pytest tests/unit/: Run all tests in a directory

This flexibility helps you test exactly what you need, when you need it. Focus on specific areas during development while still having full test coverage for releases.

Crafting Tests That Actually Catch Bugs

Writing tests is just the first step - they need to actively find potential issues. Let's explore proven strategies for building strong test suites that catch real problems before they reach production.

Going Beyond Basic Assertions

While simple assertions like assert 2 + 2 == 4 are a starting point, effective tests need to dig deeper. Think about what could go wrong in real usage. For a discount calculator function, test:

- Positive discount amounts

- Zero discounts

- Negative values

- Invalid inputs like text strings

- Edge cases at the boundaries
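The checklist above could translate into tests along these lines. This is only a sketch: apply_discount is a hypothetical function, and the choice to reject out-of-range percentages with ValueError and non-numeric input with TypeError is an assumption, not a rule.

```python
import pytest


def apply_discount(price, percent):
    """Hypothetical function under test: reduce price by a percentage."""
    if not isinstance(percent, (int, float)):
        raise TypeError("percent must be a number")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (1 - percent / 100)


def test_positive_discount():
    assert apply_discount(200, 25) == 150


def test_zero_discount_leaves_price_unchanged():
    assert apply_discount(200, 0) == 200


def test_negative_discount_is_rejected():
    # pytest.raises passes only if the block raises the given exception
    with pytest.raises(ValueError):
        apply_discount(200, -5)


def test_text_input_is_rejected():
    with pytest.raises(TypeError):
        apply_discount(200, "ten")


def test_boundary_full_discount():
    assert apply_discount(200, 100) == 0
```

Each test checks one behavior, so a failure points directly at the case that broke.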

Structuring Tests for Maximum Effectiveness

As your codebase grows, test organization becomes critical. Match your test structure to your application's architecture - group related tests together under clear categories. This makes it much easier to:

- Find relevant tests quickly

- Understand test coverage

- Maintain and update tests

- Debug failures effectively

Common Testing Pitfalls to Avoid

Don't try to test everything in one giant test. Break it down into focused, specific test cases that check one thing at a time. Keep your test logic simple and clear - complex test code often has its own bugs that mask real issues.

Using Fixtures Effectively

Pytest fixtures help set up consistent test environments. Use them to:

- Create test data

- Configure mock services

- Handle database connections

- Share setup between related tests

This reduces duplicate code and ensures reliable test conditions.
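As a minimal sketch of the "create test data" case, the fixture below hands each test its own copy of some sample data. The names sample_cart and cart_total are invented for this example.

```python
import pytest


def cart_total(items):
    """Hypothetical function under test: sum the item prices."""
    return sum(item["price"] for item in items)


@pytest.fixture
def sample_cart():
    # Rebuilt for every test that requests it, so tests cannot
    # leak changes to each other through shared data.
    return [
        {"name": "book", "price": 12.50},
        {"name": "pen", "price": 1.50},
    ]


def test_cart_total(sample_cart):
    assert cart_total(sample_cart) == 14.0


def test_empty_cart_totals_zero(sample_cart):
    sample_cart.clear()  # safe: other tests receive a fresh list
    assert cart_total(sample_cart) == 0
```

A test asks for the fixture simply by naming it as a parameter. Pytest matches the name and passes in the return value.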

Handling Edge Cases with Parametrization

Use parametrized tests to check multiple scenarios efficiently. For input validation, test various cases:

| Input Type | Example | Expected Result |

|---|---|---|

| Empty | "" | Error Message |

| Too Long | "a" * 1000 | Truncated/Error |

| Special Chars | "!@#$%^" | Sanitized/Error |
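One way to express the rows of this table is with pytest.mark.parametrize. The sketch below assumes a hypothetical validate_username function that rejects all three inputs with ValueError. A real validator might truncate or sanitize instead, as the table allows, and the 50-character limit is likewise an assumption.

```python
import pytest

MAX_LENGTH = 50  # assumed limit for this sketch


def validate_username(value):
    """Hypothetical validator: return the value or raise ValueError."""
    if not value:
        raise ValueError("username is empty")
    if len(value) > MAX_LENGTH:
        raise ValueError("username is too long")
    if not value.isalnum():
        raise ValueError("username contains special characters")
    return value


@pytest.mark.parametrize(
    "bad_input",
    [
        pytest.param("", id="empty"),
        pytest.param("a" * 1000, id="too-long"),
        pytest.param("!@#$%^", id="special-chars"),
    ],
)
def test_invalid_usernames_are_rejected(bad_input):
    with pytest.raises(ValueError):
        validate_username(bad_input)
```

The id values appear in the test report, so a failure reads as "too-long" rather than as a thousand-character string.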

By combining focused test cases, smart organization, fixtures, and parametrization, you can build a test suite that finds real issues early. The goal is writing tests that actively protect your code quality, not just satisfy a coverage metric.

Mastering Advanced Pytest Features

Let's explore some powerful Pytest features that help teams build better test suites. These tools make testing more flexible and efficient, helping catch bugs early in development.

Fixtures: Setting the Stage for Success

Fixtures handle test setup and cleanup in a clean, reusable way. Think of them like stage crew - they set everything up before the show and clean up afterward. For example, a fixture could open a database connection before tests run and close it when they finish. This keeps your tests organized and prevents resource waste.
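The database example might look roughly like this. It is a sketch that uses an in-memory SQLite database from the standard library as a stand-in for a real database, and the users table is invented for illustration.

```python
import sqlite3

import pytest


@pytest.fixture
def db_connection():
    # Setup: runs before the test body
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT)")
    yield conn  # the test runs while the fixture is paused here
    # Teardown: runs after the test, even if the test failed
    conn.close()


def test_insert_user(db_connection):
    db_connection.execute("INSERT INTO users VALUES (?)", ("alice",))
    count = db_connection.execute("SELECT COUNT(*) FROM users").fetchone()[0]
    assert count == 1
```

Everything before yield is setup and everything after it is cleanup, which keeps both halves in one place.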

Markers: Categorizing and Controlling Tests

Markers are like labels that help you organize and run specific groups of tests. You can tag tests based on what they do, what environment they need, or any other category that makes sense. This gives you fine-grained control over which tests run when.

Common marker examples:

- @pytest.mark.slow: For tests that take extra time

- @pytest.mark.regression: For regression testing

- @pytest.mark.smoke: For basic functionality checks
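Here is a short sketch of markers applied to tests. The names slow and smoke are custom labels rather than built-in markers, and health_check is a hypothetical stand-in for a real application call.

```python
import pytest


def health_check():
    """Hypothetical stand-in for the application's real health endpoint."""
    return {"status": "ok"}


@pytest.mark.slow
def test_large_sum():
    # Placeholder for work that would take a long time in a real suite
    assert sum(range(1000)) == 499500


@pytest.mark.smoke
def test_health_check_reports_ok():
    assert health_check()["status"] == "ok"
```

With these in place, pytest -m smoke runs only the smoke tests and pytest -m "not slow" skips the slow ones. Custom markers should be listed under the markers option in pytest.ini (or the equivalent section of pyproject.toml). Otherwise pytest warns about unknown marks.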

Parametrization: Testing with Multiple Inputs

Parametrization lets you run the same test with different inputs. This is perfect for checking how functions handle various scenarios without writing duplicate test code.

Here's how it works with an email validator:

| Input | Expected Result |

|---|---|

| "valid_email@example.com" | True |

| "invalid_email" | False |

| "" | False |

One test function can now check multiple cases efficiently.
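The table maps almost line for line onto a parametrized test. In this sketch, is_valid_email is a hypothetical validator with a deliberately simple pattern. Real email validation is considerably more involved.

```python
import re

import pytest

# Simplified pattern for illustration: something@something.something
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def is_valid_email(address):
    """Hypothetical validator used only for this example."""
    return EMAIL_PATTERN.fullmatch(address) is not None


@pytest.mark.parametrize(
    "address, expected",
    [
        ("valid_email@example.com", True),
        ("invalid_email", False),
        ("", False),
    ],
)
def test_is_valid_email(address, expected):
    assert is_valid_email(address) is expected
```

Pytest reports each row as its own test, so one failing input does not hide the results of the others.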

Extending Pytest with Plugins

Plugins add extra features to Pytest. For example, the pytest-system-statistics plugin tracks CPU and memory usage during tests. Install it with python -m pip install pytest-system-statistics and use flags like --sys-stats to monitor resource usage. This helps spot performance issues in your test suite.

By combining fixtures, markers, parametrization, and plugins, you can build more effective test suites. These features help keep your tests clean, organized, and thorough - key ingredients for reliable software testing.

Building Scalable Test Architecture

As your test suite grows alongside your project and pytest expertise, keeping your code organized becomes crucial. This section looks at real-world practices teams use to build test architectures that stay maintainable even as complexity increases.

Organizing Test Files and Directories

A clean test directory structure makes everyone's life easier. Many teams mirror their source code layout in their tests folder. For instance, if you have my_app/users.py, put the corresponding tests in tests/my_app/test_users.py. This straightforward approach helps developers quickly find and update related tests.

Managing Dependencies and Shared Utilities

Test suites often need common helper functions and setup code. Keep these in a dedicated utils or helpers directory to avoid repeating code. For example, store database connection utilities in tests/helpers/database.py. When you need to update these shared functions, the changes automatically apply everywhere they're used.

Creating Maintainable Test Hierarchies

Group related tests logically using classes or folders. This makes your test suite easier to understand and run. You can target specific test groups like pytest tests/api/ for API tests or pytest -k "authentication" for auth-related tests, saving time during development.
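Grouping with a class could look like the sketch below. The authenticate function and the USERS store are hypothetical and exist only to give the tests something to check.

```python
USERS = {"alice": "s3cret"}  # hypothetical in-memory user store


def authenticate(username, password):
    """Hypothetical function under test."""
    return USERS.get(username) == password


class TestAuthentication:
    """Pytest collects classes named Test* without any base class."""

    def test_valid_credentials(self):
        assert authenticate("alice", "s3cret")

    def test_wrong_password(self):
        assert not authenticate("alice", "guess")

    def test_unknown_user(self):
        assert not authenticate("bob", "s3cret")
```

The -k option matches class names as well as function names, so pytest -k "TestAuthentication" selects all three tests. In recent pytest versions, where -k matching is case-insensitive, pytest -k "authentication" does too.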

Handling Test Data Effectively

Good test data management prevents headaches in larger projects. Instead of hardcoding test data in your test files, store it separately in JSON or CSV files. This separation lets you modify test data without touching the test code itself.
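A minimal sketch of this separation, assuming test cases are stored as a JSON list of records with price, percent, and expected keys. Both the file layout and the path in the comment are hypothetical.

```python
import json
from pathlib import Path


def parse_cases(text):
    """Turn JSON text into (price, percent, expected) tuples."""
    return [(r["price"], r["percent"], r["expected"]) for r in json.loads(text)]


def load_cases(path):
    """Read test cases from a JSON file on disk."""
    return parse_cases(Path(path).read_text(encoding="utf-8"))


# In a test module, the loaded tuples can feed straight into parametrize:
#
#   @pytest.mark.parametrize(
#       "price, percent, expected",
#       load_cases("tests/data/discount_cases.json"),  # hypothetical path
#   )
#   def test_discount(price, percent, expected):
#       ...
```

Adding a new scenario then means adding one record to the data file, with no change to the test code.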

Case Study: Scaling Tests at a Fast-Growing Startup

A recent industry survey found that 85% of developers say well-organized tests make code easier to maintain. One startup showed this in practice - they built a modular test architecture with pytest, organizing by feature and using fixtures for shared resources. This approach helped them grow from hundreds to thousands of tests while keeping their codebase clean. The result? They shipped features faster and caught more bugs before production.

Optimizing Test Performance and Reliability

An efficient testing strategy is essential for software quality. Let's explore proven techniques to make your tests faster and more reliable. These methods will help you transform problematic tests into valuable quality checks.

Parallel Testing with pytest-xdist

Running tests one after another takes too long when you have many tests. The pytest-xdist plugin helps by running tests in parallel. To use it:

- Install with pip install pytest-xdist

- Run tests with the -n flag and the number of processes (example: pytest -n 4)

- Watch your test execution time drop significantly

Smart Test Ordering for Quick Results

The sequence of your tests affects how quickly you find problems. Consider these tips:

- Run critical tests first to catch major issues early

- Group similar tests together to use the cache better

- Use a plugin such as pytest-ordering (or its maintained fork, pytest-order) to control test sequence

Finding Test Problems Quickly

When tests fail, you need to know why. Pytest gives you detailed error messages to help solve issues. Try these debugging tools:

- Use --pdb to start the debugger when tests fail

- Check variables and step through code

- Find exactly where problems occur

Finding Slow Tests

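Speed up your test suite by finding the slowest parts first. Pytest's built-in --durations flag (for example, pytest --durations=10) lists the slowest tests, and the pytest-profiling plugin adds profiler output for a closer look. Look for tests that: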

- Spend too much time on setup

- Have slow database operations

- Make too many API calls

Managing Complex Test Cases

Some tests are harder to write and maintain. Pytest offers several tools to help:

- Use pytest-mock to replace external services

- Try pytest-rerunfailures for unstable tests

- Keep tests independent to prevent interference
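To illustrate replacing an external service, the sketch below uses unittest.mock from the standard library, which is what pytest-mock's mocker fixture wraps. With pytest-mock installed, the same fake could be created through the mocker fixture instead. The convert_to_usd function and the get_rate method are hypothetical.

```python
from unittest import mock


def convert_to_usd(amount, currency, rate_client):
    """Hypothetical function under test: depends on an external rate service."""
    rate = rate_client.get_rate(currency, "USD")
    return round(amount * rate, 2)


def test_convert_uses_rate_from_service():
    # The fake client stands in for the real service, so no network is needed
    fake_client = mock.Mock()
    fake_client.get_rate.return_value = 1.25

    assert convert_to_usd(100, "GBP", fake_client) == 125.0
    # Verify the code asked the service for the right currency pair
    fake_client.get_rate.assert_called_once_with("GBP", "USD")
```

Because the test builds its own fake and shares no state, it also stays independent of every other test in the suite.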

With these techniques, your tests will run faster and more reliably. This helps you find problems sooner and ship better code.

Implementing Production-Ready Testing Strategies

Moving tests from development to production brings a whole new set of challenges. Let's explore how teams effectively use Pytest in production environments and tackle real testing obstacles.

Adapting Pytest for Different Applications

Pytest works well with many types of applications. For web services, plugins like pytest-flask and pytest-django make testing easier. When working with data-heavy applications, fixtures help manage database connections and test data consistently.

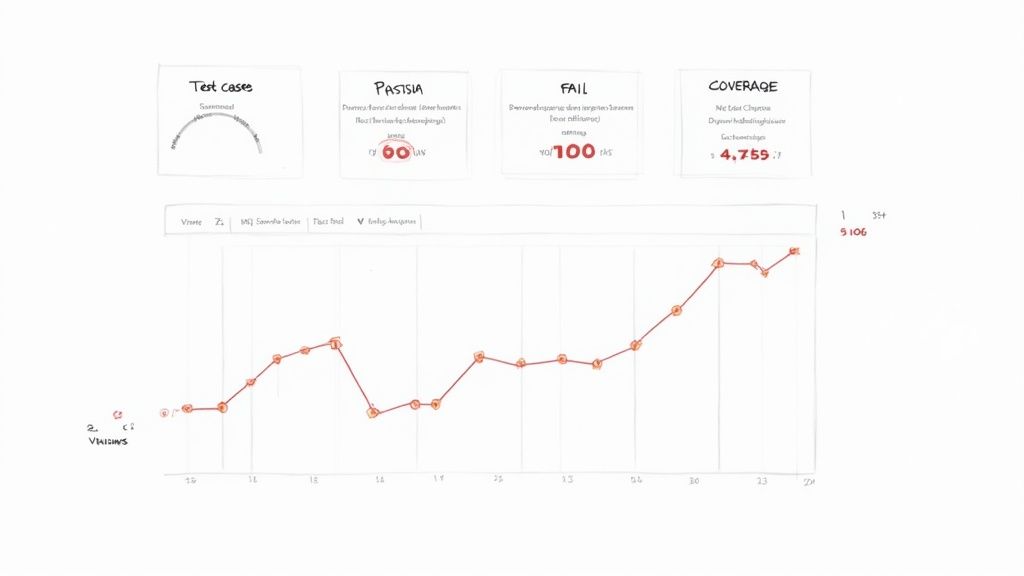

Balancing Test Coverage and Practicality

Most teams know that 100% test coverage isn't realistic in production. Smart developers focus on testing the most critical parts of their code. They prioritize high-risk areas and key user workflows, finding a practical balance between thorough testing and available resources.

Handling Common Production Testing Challenges

Production testing comes with specific hurdles like flaky tests, external service dependencies, and complex deployment setups. Pytest offers several solutions:

- The pytest-rerunfailures plugin helps manage unreliable tests

- Mocking libraries isolate code from external services

- Fixtures create consistent test environments

Case Study: Pytest at a Large E-commerce Platform

A major online store recently started using Pytest in production. They struggled with slow tests and inconsistent data. By using pytest-xdist for parallel testing and fixtures for data management, they achieved:

- Faster test execution times

- More reliable test results

- Quicker feature deployments

Best Practices for Production-Ready Pytest

- Focus testing on high-risk code areas

- Use fixtures to handle complex setup/teardown

- Run tests in parallel when possible

- Mock unreliable external dependencies

- Track and analyze test metrics regularly
