Master pytest skip test: Boost Your Workflow

The Power of Strategic Test Skipping in Pytest

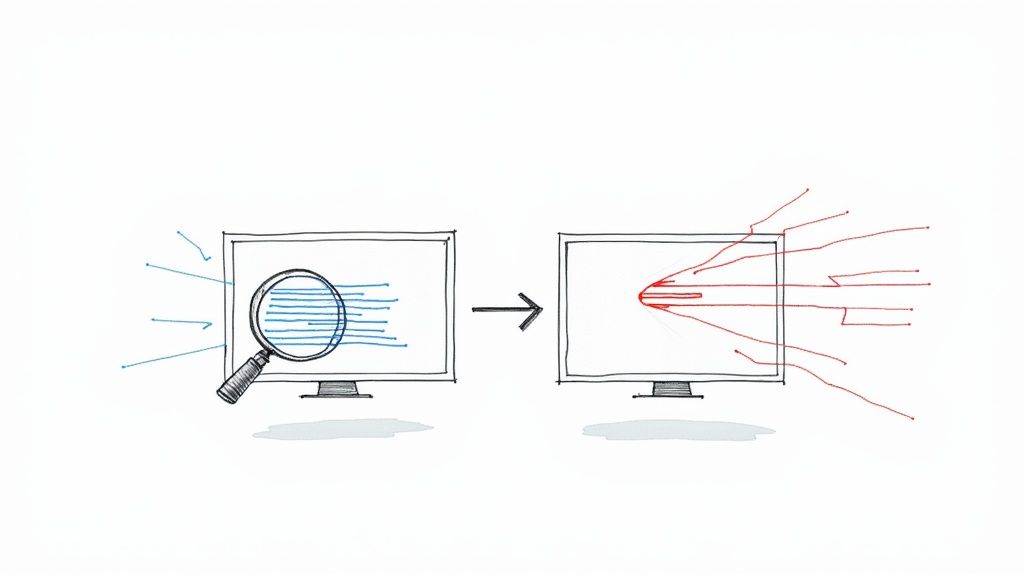

Running every test, every time, can be inefficient. Strategic test skipping optimizes your testing workflow by running tests only when specific conditions are met. This can shorten test runs and keeps attention on the results that matter in the current environment. It's all about understanding when and why to skip a test.

Understanding the When and Why of Test Skipping

Pytest's skip functionality removes specific tests from execution. This is invaluable when running a test is impossible or unproductive. For instance, if a test depends on a temporarily unavailable external service, you can skip it until the service is restored, rather than letting it fail the whole run. This keeps feedback loops tight and relevant.

Another common scenario is during feature development. Tests might be incomplete or expected to fail. Skipping these avoids clutter in test reports, allowing you to focus on functional areas. This is more effective than ignoring failing tests, as it explicitly marks their incomplete status. Efficient test management has led to wider adoption of techniques like pytest skip test.

As of 2024, pytest is widely adopted, and it lets you skip tests based on criteria such as operating system compatibility. The feature is popular because it keeps test suites manageable under varying constraints.

Types of Skipping in Pytest

Pytest offers several skipping methods, providing flexibility for various situations.

- Unconditional Skipping: @pytest.mark.skip entirely removes a test, regardless of external factors. This suits permanently obsolete or significantly outdated tests.
- Conditional Skipping: @pytest.mark.skipif skips tests based on a boolean expression. This is where strategic skipping shines. Skip tests based on the OS, Python version, or dependencies.
- Expected Failures: @pytest.mark.xfail flags tests expected to fail. They still run, but failures are reported differently. This is perfect for tests covering known bugs or incomplete features.

This granular control allows precise definition of when and why tests are skipped, creating a more streamlined and insightful process. Your test suite becomes dynamic, adapting to your project's current state. This focus on relevant feedback leads to faster iterations and more efficient development.

Implementing Your First Pytest Skip Tests

So, you understand why skipping tests is important. Now, let's explore how to do it. Pytest provides several ways to implement pytest skip test functionality, from simple commands to more complex conditional logic. This section will equip you with the knowledge to start using these techniques right away.

Unconditional Skipping With @pytest.mark.skip

The easiest way to skip a test is using the @pytest.mark.skip decorator. This removes the test from execution, regardless of any conditions. Think of it as permanently ignoring a specific test. This is perfect for tests that are outdated or require significant revisions.

    import pytest

    @pytest.mark.skip(reason="This test is obsolete.")
    def test_old_feature():
        # This test will never run
        assert True

Including a reason explains why a test is skipped, which improves team communication and avoids confusion. The reason appears in your test reports (for example, in the short summary when you run pytest with -rs), providing valuable context. It's much more helpful than a bare "skipped" status.

Conditional Skipping With @pytest.mark.skipif

For more control, use @pytest.mark.skipif. This decorator lets you skip tests based on a boolean condition. This allows your test suite to adapt to different environments or configurations. You might skip tests relevant only to specific operating systems or Python versions.

    import sys

    import pytest

    @pytest.mark.skipif(sys.platform == 'win32', reason="Does not work on Windows")
    def test_linux_specific_feature():
        # This test will only run on non-Windows platforms
        assert True

This shows platform-specific skipping. The test_linux_specific_feature runs only if the platform is not Windows. This keeps test reports clean and relevant, focusing only on applicable tests. This targeted method ensures quicker feedback and more efficient testing.
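The same decorator covers the Python-version case mentioned above. The following is a minimal sketch, not a prescribed pattern: the constant name OLDER_THAN_PY39 and the test itself are illustrative assumptions, chosen because str.removeprefix() was added in Python 3.9.

```python
import sys

import pytest

# Illustrative constant (not part of pytest): True on interpreters older than 3.9.
OLDER_THAN_PY39 = sys.version_info < (3, 9)


@pytest.mark.skipif(OLDER_THAN_PY39, reason="str.removeprefix() needs Python 3.9+")
def test_removeprefix():
    # Only runs where the method exists; older interpreters report a skip.
    assert "test_login".removeprefix("test_") == "login"
```

Comparing sys.version_info against a tuple keeps the condition readable and avoids parsing version strings.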

Skipping Within a Test Function With pytest.skip()

Sometimes, you need to skip a test during execution based on runtime information. The pytest.skip() function allows this. Calling it within a test immediately stops execution.

    import os

    import pytest

    def get_feature_status():
        # Hypothetical stand-in for a config lookup; replace with your own check
        return os.environ.get("FEATURE_ENABLED") == "1"

    def test_dynamic_behavior():
        feature_enabled = get_feature_status()
        if not feature_enabled:
            pytest.skip("Feature not enabled.")
        # ... rest of the test, executed only if the feature is enabled
        assert True

This method is very flexible, adapting to factors discovered during testing. For example, check if an external service is available; if not, skip dependent tests dynamically. This targeted approach avoids running tests destined to fail.
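The external-service case described above could be sketched as follows. The helper service_reachable and the host and port values are hypothetical and only illustrate the idea; a real suite would probe whatever endpoint its tests depend on.

```python
import socket

import pytest


def service_reachable(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def test_uses_external_service():
    # Hypothetical endpoint; substitute the service your test depends on.
    host, port = "127.0.0.1", 8080
    if not service_reachable(host, port):
        pytest.skip(f"Service at {host}:{port} is not reachable.")
    # ... assertions that talk to the service would follow here
```

Because the check runs inside the test, it reflects the state of the service at the moment the test executes.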

Communicating Clearly with Skip Messages

Always write clear and informative reason messages, no matter your skipping method. Imagine a large test suite with many skipped tests and no explanations. This makes debugging difficult and can hide bigger problems. Good skip messages help your team understand the context and decide whether to revisit or remove a test. This is crucial for a healthy and efficient test suite, especially in a continuous integration environment like Mergify.

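    # database_unavailable() is assumed to be a helper defined elsewhere in the suite
    @pytest.mark.skipif(
        database_unavailable(),
        reason="Test requires a database connection. Check if the database is running.",
    )
    def test_database_interaction():
        ...  # test logic goes here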

This message clearly explains the dependency and guides troubleshooting. Clear messages are crucial as projects and teams grow, simplifying debugging and maintenance.

To illustrate the differences between the various skip methods, let's look at a comparison table:

Pytest Skip Methods Comparison

This table compares different ways to skip tests in pytest, outlining their syntax and use cases.

| Method | Syntax | Use Case | Output in Reports |
|---|---|---|---|
| @pytest.mark.skip | @pytest.mark.skip(reason="reason for skipping") | Permanently skip a test. Ideal for obsolete or broken tests needing major rework. | Shows as "skipped" with the specified reason. |
| @pytest.mark.skipif | @pytest.mark.skipif(condition, reason="reason for skipping") | Conditionally skip tests based on a boolean condition. Useful for environment-specific tests or feature flags. | Shows as "skipped" with the reason if the condition is true. |
| pytest.skip() | pytest.skip("reason for skipping") | Skip a test dynamically from within the test function based on runtime conditions. | Shows as "skipped" with the specified reason. |

This table highlights the core differences, showing how each method offers a unique approach to skipping tests. By understanding these distinctions, you can choose the most appropriate technique for different scenarios.

By mastering these techniques, you'll effectively use pytest skip test to manage your test suite, resulting in faster feedback and a more focused testing strategy.

Mastering Conditional Test Execution

Going beyond simply skipping tests, conditional test execution lets you build a dynamic test suite that adapts to different environments. This is vital for projects supporting multiple operating systems, optional dependencies, or feature flags. This section explores using pytest.mark.skipif to create these dynamic conditions.

The Power of pytest.mark.skipif

The @pytest.mark.skipif decorator is central to conditional test skipping in pytest. It allows attaching a boolean expression to a test. The test runs only if the expression is False. This simple mechanism offers great flexibility.

For example, you can skip tests on certain platforms:

    import sys

    import pytest

    @pytest.mark.skipif(sys.platform == 'win32', reason="Does not run on Windows")
    def test_linux_feature():
        # ... test logic ...
        assert True

This test runs only if sys.platform is not win32. This keeps Windows test results clean, preventing failures from platform incompatibility. You can also skip tests based on Python versions or installed modules. This adaptability makes pytest's skip functionality important for robust CI/CD pipelines, particularly in platforms like Mergify where automated testing is key.
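For the installed-modules case, two approaches are common. The sketch below uses numpy purely as an example of an optional dependency; the constant HAS_NUMPY and both test names are illustrative. The first test uses skipif with an import check, and the second uses pytest.importorskip(), which returns the module or skips the test if the import fails.

```python
import importlib.util

import pytest

# True when the optional package can be found; "numpy" is only an example.
HAS_NUMPY = importlib.util.find_spec("numpy") is not None


@pytest.mark.skipif(not HAS_NUMPY, reason="numpy is not installed")
def test_array_sum():
    import numpy as np

    assert np.array([1, 2, 3]).sum() == 6


def test_array_mean():
    # importorskip returns the imported module, or skips this test if it is missing.
    np = pytest.importorskip("numpy")
    assert np.array([1.0, 3.0]).mean() == 2.0
```

importorskip keeps the check next to the code that needs the module, while the skipif form makes the requirement visible in the decorator.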

Dynamic Skip Conditions with Functions

Calling a helper function in a skipif condition lets you compute the condition from the environment:

    import pytest

    def database_available():
        # ... Logic to check database connection ...
        return True  # or False

    @pytest.mark.skipif(not database_available(), reason="Requires database connection")
    def test_database_interaction():
        # ... test logic ...
        assert True

Here, test_database_interaction is skipped if database_available() returns False. Note that the condition is evaluated once, when the module is imported during collection, not each time the test runs; for checks that must happen at run time, call pytest.skip() inside the test or a fixture instead. Keeping complex environment checks in a dedicated function improves maintainability and lets you reuse the same check across multiple tests.
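One way to reuse a check across tests is to store the skipif marker in a variable and apply it wherever needed. This is a sketch under assumptions: the DATABASE_URL environment variable and the name requires_database are illustrative, not something the examples above define.

```python
import os

import pytest


def database_available():
    # Hypothetical check: treat a configured DATABASE_URL as "available".
    return bool(os.environ.get("DATABASE_URL"))


# Built once, when this module is imported during collection.
requires_database = pytest.mark.skipif(
    not database_available(), reason="Requires database connection"
)


@requires_database
def test_insert_row():
    assert True


@requires_database
class TestReports:
    # A marker on the class applies to every test method inside it.
    def test_daily_report(self):
        assert True
```

To apply the same marker to every test in a file, assign it to the module-level name pytestmark (for example, pytestmark = requires_database).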

Complex Conditional Logic

For more complicated logic, combine conditions with and, or, and not:

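    import sys

    import pytest

    def feature_enabled():
        # Hypothetical feature-flag check; replace with your own lookup
        return True

    @pytest.mark.skipif(sys.version_info < (3, 8) or not feature_enabled(), reason="Needs Python 3.8+ and feature flag")
    def test_new_feature():
        # ... test logic ...
        assert True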

This handles scenarios with multiple dependencies. Combining logical operators creates complex yet manageable skip conditions. This targeted approach minimizes false positives and ensures efficient CI/CD resource use.

Managing Conditional Skipping in Larger Projects

As projects grow, numerous skipif conditions can arise. Centralizing skip logic maintains organization:

skip_utils.py

    import sys

    def is_windows():
        return sys.platform == 'win32'

    def has_optional_dependency():
        # ...check for optional library...
        return True

test_module.py

    import pytest
    from skip_utils import is_windows, has_optional_dependency

    @pytest.mark.skipif(is_windows(), reason="Windows not supported")
    def test_feature_a():
        ...  # test logic

    @pytest.mark.skipif(not has_optional_dependency(), reason="Requires optional dependency")
    def test_feature_b():
        ...  # test logic

This modular approach promotes reusability and cleaner test files. These helper functions simplify managing pytest skip conditions in complex projects. Mastering these techniques allows using pytest skip test with skipif to intelligently manage execution, adapting tests to various conditions for a more accurate and efficient testing process.

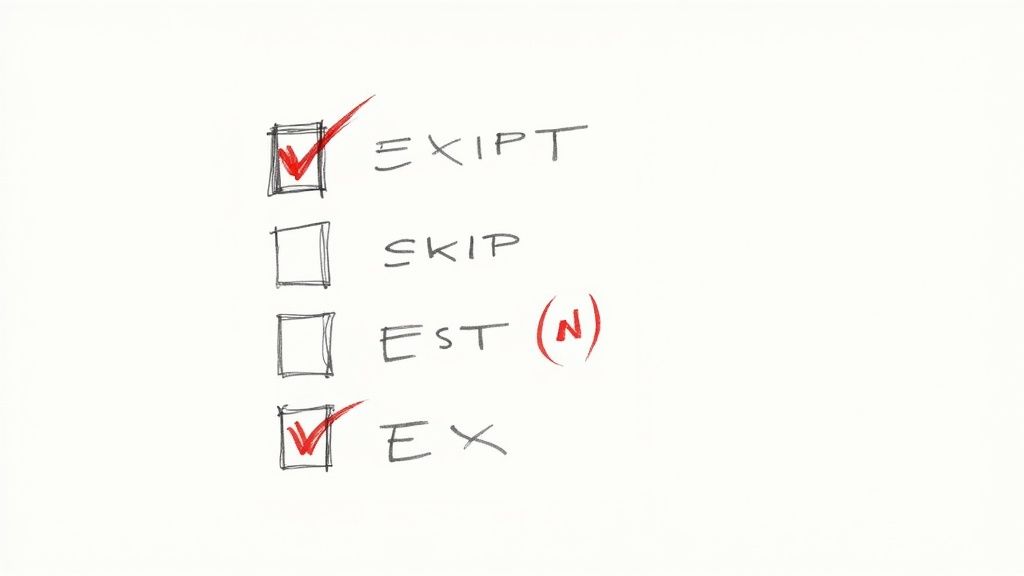

Skip vs. Xfail: Choosing the Right Tool

Understanding the difference between skipping a test (pytest.skip()/@pytest.mark.skip) and marking it as expected to fail (@pytest.mark.xfail) is crucial for effective test management. Both prevent tests from immediately signaling a "failed" build, but they communicate different things about the state of your project. This subtle difference can significantly affect how your team interprets results and prioritizes future work.

Key Differences: Skip vs. Xfail

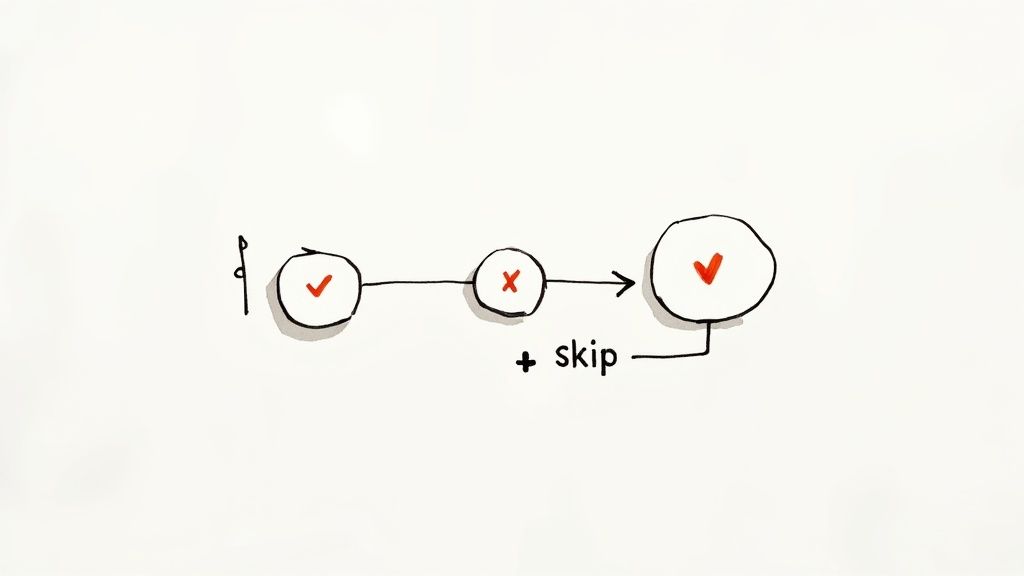

The core distinction lies in execution. A skipped test is not run at all. This is best suited for tests that simply aren't applicable in the current environment, such as when specific dependencies are unavailable. Xfailed tests, on the other hand, are executed. They are expected to fail, and a passing result would actually be flagged as unexpected, or "xpassed". This is useful for tracking known bugs or for testing features that are still under development.
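To make the contrast concrete, here is a minimal xfail sketch. The function parse_price and its bug are hypothetical, invented only to give the marker something to track.

```python
import pytest


def parse_price(text):
    # Hypothetical function with a known bug: it does not handle thousands separators.
    return float(text)


@pytest.mark.xfail(reason="Known bug: thousands separators are not handled yet")
def test_parse_price_with_separator():
    # float("1,000.50") raises ValueError, so pytest reports this test as xfailed.
    assert parse_price("1,000.50") == 1000.50
```

Unlike a skipped test, this one executes on every run, so once parse_price is fixed the report changes to xpassed and the marker can be removed.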

Choosing the correct approach provides clarity regarding the status of your project. A skipped test signals that a specific part of your code remains untested in a particular context. An xfailed test acknowledges a known issue, pinpointing an area that needs attention, but without halting other development work. These choices are valuable when managing complex feature rollouts or deployments through platforms like Mergify.

How Skip and Xfail Impact Test Reports

This difference in execution has a direct impact on your test reports. Skipped tests are clearly marked as "skipped," instantly conveying that the test was bypassed. Xfailed tests are labeled according to their outcome: "xfailed" (as expected) or "xpassed" (unexpectedly passed). This clear distinction is important for accurately monitoring development progress and recognizing which aspects of your system are fully functional.

Statistical Significance of Skip and Xfail

Used deliberately, pytest.skip() and xfail keep tests for known issues in the suite instead of deleted, which helps preserve coverage over time. As of early 2024, nearly 75% of projects using pytest reportedly use these markers to handle known issues and features still in progress; more detail is available in LambdaTest's blog post on pytest skip. Using xfail, for example, permits continued testing against an incomplete feature, keeping the test active and visible without affecting the build's overall success status. Be mindful, however: if not carefully managed, this practice can mask more significant underlying failures.

When to Choose Skip and When to Choose Xfail

To further clarify when to employ each approach, let's examine a comparison table. The following table highlights the key differences and best-use scenarios for skip and xfail:

| Feature | Skip | Xfail | When to Use |
|---|---|---|---|
| Execution | Not executed | Executed | Skip: Test is not runnable. Xfail: Test can run but expected to fail. |
| Expected Result | N/A | Failure | Skip: Test is not applicable. Xfail: Known bug or incomplete feature. |
| Reporting | Skipped | Xfailed (expected), Xpassed (unexpected) | Skip: Environment limitations or missing dependencies. Xfail: Tracking known issues and development progress. |

This table helps to make an informed decision between the two. Choose skip when running a test is simply impossible or entirely irrelevant in the given context. Select xfail when you anticipate a failure due to a known issue or ongoing development. Ultimately, transitioning a test from xfail to a successful pass indicates forward momentum and supports agile, dynamic test strategies in continuous integration environments.
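To make the transition from xfail to a real pass hard to miss, xfail accepts strict=True, which turns an unexpected pass into a failure, and raises=..., which limits the expected failure to one exception type. The sketch below assumes a hypothetical slugify function that is still under development.

```python
import pytest


def slugify(title):
    # Hypothetical work-in-progress implementation: punctuation is not stripped yet.
    return title.lower().replace(" ", "-")


@pytest.mark.xfail(
    strict=True,
    raises=AssertionError,
    reason="Punctuation is not stripped yet",
)
def test_slugify_strips_punctuation():
    # Currently produces "hello,-world!", so the assertion fails as expected.
    assert slugify("Hello, World!") == "hello-world"
```

With strict=True, the run fails as soon as the test starts passing, prompting someone to delete the stale marker. With raises set, any other exception type counts as a genuine failure and is not hidden.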

Advanced Skip Patterns for Complex Projects

Building upon basic pytest skip test methods, let's explore advanced strategies used by experienced teams to manage complex projects. These techniques help maintain a clean, efficient, and insightful testing process.

Dynamic Skipping Based on Fixture Outcomes

Fixtures, used for setting up preconditions for tests, can also dynamically control skipping. Imagine a test requiring a database connection. A fixture can attempt to establish this connection, but if it fails, the dependent tests should be skipped.

    import pytest

    @pytest.fixture
    def database_connection():
        try:
            connection = connect_to_database()  # Your connection logic
            return connection
        except Exception as e:
            pytest.skip(f"Database connection failed: {e}")

    def test_database_query(database_connection):
        # This test will only run if the database_connection fixture succeeds
        # ... test logic ...
        assert True

This dynamic approach ensures tests relying on external resources are only executed when those resources are available, leading to more meaningful results. This prevents misleading failures caused by environmental problems, not code defects.

Parametrized Test Skipping

When parametrizing tests – running the same test logic with different inputs – you might want to skip specific parameter combinations. This granular control is crucial for targeted testing.

    import pytest

    @pytest.mark.parametrize("os, feature_enabled", [
        ("linux", True),
        ("windows", True),
        ("macos", False),  # Skip this combination
    ])
    def test_feature(os, feature_enabled):
        if not feature_enabled:
            pytest.skip("Feature disabled for this OS.")
        # ... test logic assuming feature is enabled ...
        assert True

This method keeps your test definitions concise while allowing fine-grained control over which scenarios are tested. Skipping certain combinations avoids unproductive test runs and focuses on relevant scenarios.
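An alternative is to attach the skip to the parameter set itself with pytest.param(..., marks=...), so the test body needs no skip logic. The sketch below is illustrative; the parameter names and the separators table are assumptions made for the example.

```python
import pytest


@pytest.mark.parametrize(
    "platform_name, expected_sep",
    [
        ("linux", "/"),
        ("macos", "/"),
        # Only this parameter set is skipped; the others run normally.
        pytest.param(
            "windows",
            "\\",
            marks=pytest.mark.skip(reason="Windows paths not supported yet"),
        ),
    ],
)
def test_path_separator(platform_name, expected_sep):
    separators = {"linux": "/", "macos": "/"}
    assert separators[platform_name] == expected_sep
```

The same marks argument also accepts skipif and xfail markers, which keeps the reason next to the exact combination it applies to.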

Custom Skip Markers

For project-specific needs, create custom skip markers for better categorization and filtering. This adds a layer of organization beyond standard pytest markers.

    import pytest

    # The plugin setting and hooks below belong in the top-level conftest.py
    pytest_plugins = 'pytester'  # for the pytester fixture

    def pytest_addoption(parser):
        group = parser.getgroup('general')
        group.addoption('--expensive', action='store_true', help='run expensive tests')

    def pytest_configure(config):
        config.addinivalue_line(
            "markers",
            "expensive: marks tests as expensive (deselect with '-m \"not expensive\"')",
        )

    # The test itself can live in any test module
    def test_is_expensive(pytester):
        pytester.makepyfile(
            """
            import pytest

            @pytest.mark.expensive
            def test_one():
                pass

            def test_two():
                pass
            """
        )
        res = pytester.runpytest()
        assert res.ret == 0

        res = pytester.runpytest("-m", "not expensive")
        assert res.ret == 0  # test_one is deselected, test_two still runs

        res = pytester.runpytest("-m", "expensive")
        assert res.ret == 0  # only the expensive test runs

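With the marker registered, you can select or deselect the marked tests from the command line using -m (for example, -m "not expensive"). Deselected tests are left out of the run entirely and are not reported as skipped. Markers like this help categorize tests by criteria such as resource requirements, which is essential in large projects.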
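To have a command-line flag actually skip marked tests, with a visible reason in the report, a conftest.py can add skip markers during collection. The sketch below follows a pattern shown in the pytest documentation, adapted to the expensive marker and --expensive flag used in this section; treat it as one possible standalone conftest.py, not the only way to wire this up.

```python
import pytest


def pytest_addoption(parser):
    parser.addoption(
        "--expensive", action="store_true", default=False, help="run expensive tests"
    )


def pytest_configure(config):
    config.addinivalue_line("markers", "expensive: marks tests as expensive")


def pytest_collection_modifyitems(config, items):
    if config.getoption("--expensive"):
        return  # Flag given: leave every test as collected.
    skip_expensive = pytest.mark.skip(reason="need --expensive option to run")
    for item in items:
        if "expensive" in item.keywords:
            # Tests marked @pytest.mark.expensive are reported as skipped.
            item.add_marker(skip_expensive)
```

With this in place, a plain pytest run reports the expensive tests as skipped, and pytest --expensive runs them.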

Organizing Skip Conditions for Maintainability

In large projects, centralizing skip logic in helper functions or dedicated modules improves readability and maintainability. Test code becomes easier to navigate and update, and skip conditions stay consistent across the team. This is especially beneficial for Continuous Integration and Continuous Delivery (CI/CD) processes, optimizing test runs within pipelines such as those managed by Mergify.

Building a Disciplined Test Skipping Strategy

A well-structured test skipping strategy is crucial for a healthy and efficient test suite. Randomly skipping tests can destabilize your testing process and mask underlying problems. Instead, a disciplined approach to using pytest skip test is key.

Identifying Problematic Skip Patterns

Over-relying on skipped tests is a major warning sign. If a large percentage of your tests are regularly skipped, it could indicate a deeper issue. This can create a false sense of security, as skipped tests might hide real bugs. Similarly, unclear skip conditions lead to confusion and make it hard to understand why a test was skipped. Tests permanently disabled by outdated skip conditions clutter the test suite, reducing its effectiveness.

Implementing Best Practices for Test Skipping

High-performing teams establish clear guidelines for using pytest skip test. Thoroughly documenting skip conditions, including the reasoning behind the decision, is vital. This documentation should explain why a test is skipped and when it should be re-enabled. For complex projects, consider skipping tests in the CI/CD pipeline based on specific conditions.
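A CI-specific skip with a documented reason might look like the sketch below. It assumes the CI system sets an environment variable named CI to "true" or "1", as many providers do; check your provider's documentation. The test name and the GPU scenario are hypothetical.

```python
import os

import pytest

# Assumption: the CI system exports CI=true (or CI=1); adjust for your provider.
RUNNING_IN_CI = os.environ.get("CI", "").lower() in {"1", "true"}


@pytest.mark.skipif(
    RUNNING_IN_CI,
    reason="Needs a local GPU; re-enable in CI once GPU runners are available",
)
def test_gpu_kernel():
    # Placeholder body for a test that needs hardware CI does not provide.
    assert True
```

The reason states both why the test is skipped and the condition for re-enabling it, which makes later reviews of skipped tests much quicker.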

It's also important to establish conventions for choosing between skipping a test and using alternatives like xfail (expected failures) or conditional execution. Skipping prevents a test from running at all, while xfail allows the test to run but marks it as expected to fail. This decision depends on the situation. Skip a test when it's completely inapplicable; use xfail when you expect a test to fail due to a known bug or incomplete feature.

Maintaining Visibility and Measuring the Health of Your Test Suite

Regular reviews of skipped tests ensure they aren't forgotten. This means periodically checking if skip conditions are still valid and re-enabling tests when appropriate. This prevents the buildup of permanently disabled tests that no longer serve a purpose.

Go beyond simple pass/fail metrics. Track skip patterns across your entire test suite. This can reveal systemic problems or areas with consistently inadequate testing. Tools that visualize skip trends over time can be extremely helpful. This pinpoints areas needing attention and enables continuous improvement of your testing strategy. This proactive maintenance allows pytest skip test to improve, not hinder, your quality assurance.
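One lightweight way to track skip patterns is to count skip reasons in the JUnit XML report that pytest writes with --junitxml. The helper below is a sketch: the function name count_skip_reasons is an assumption, and it relies on skipped test cases carrying a skipped child element with a message attribute, so verify that against a report from your own pytest version.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def count_skip_reasons(junit_xml_text):
    """Count skipped test cases per reason in a JUnit XML report."""
    root = ET.fromstring(junit_xml_text)
    reasons = Counter()
    for testcase in root.iter("testcase"):
        skipped = testcase.find("skipped")
        if skipped is not None:
            # Fall back to "unknown" when the report carries no message.
            reasons[skipped.get("message", "unknown")] += 1
    return reasons
```

After running pytest --junitxml=report.xml, pass the file's contents to count_skip_reasons and record the counts for each build. A reason whose count keeps growing is a good candidate for review.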

A disciplined pytest skip test strategy isn't just about knowing how to skip tests; it's about knowing when and why. This requires clear communication, consistent review, and a focus on maintaining the overall health of your test suite.
