pytest run single test: Boost Your Testing Workflow

The Power of Targeted Test Execution

In software development, speed and efficiency are crucial: every second saved contributes to faster releases and higher productivity. Targeted test execution, such as running a single test with pytest, is one of the simplest ways to reclaim that time.

Targeted testing lets developers zero in on specific code areas for validation, saving time and resources. Imagine working on a complex feature with hundreds of tests. Instead of running the whole suite after every small tweak, you can run just the relevant tests for immediate feedback.

This focused approach is even more critical when debugging. Quickly isolating the problem is key when a test fails. Running only the failing test lets you iterate quickly on a solution without the overhead of running unrelated, passing tests.

This significantly reduces debugging time, making it easier to find the root cause of the failure. It's a major shift from traditional debugging, which often relies on print statements scattered throughout the code. With targeted testing, the test itself becomes the main debugging tool.

Practical Applications of Single Test Runs

Running a single test is standard practice in Test-Driven Development (TDD). It’s especially helpful when focusing on a specific feature or debugging. Developers can use the pytest command followed by the specific test module, function, or class they wish to execute.

For example, to run a specific test function, you might use pytest tests/unit/test_functions.py::test_add_positive_numbers. This is incredibly useful when isolating a failing test without running the entire suite. Learn more at pytest-with-eric.com.
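For reference, here is what such a module might look like. This is a minimal sketch: the add() helper and the second test are hypothetical, invented only to show that the node ID selects one function and leaves its neighbours alone.

```python
# tests/unit/test_functions.py (hypothetical contents)


def add(a, b):
    """Stand-in for the code under test."""
    return a + b


def test_add_positive_numbers():
    # Selected by: pytest tests/unit/test_functions.py::test_add_positive_numbers
    assert add(2, 3) == 5


def test_add_negative_numbers():
    # Not run by the command above; run it by naming its own node ID.
    assert add(-2, -3) == -5
```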

The benefits extend beyond debugging and TDD. This technique is particularly helpful when:

- Refactoring Code: Running the tests related to the refactored code quickly confirms that your changes haven't introduced unintended side effects in that area.

- Working on a Specific Feature: Focus development efforts and receive immediate feedback.

- Integrating with Continuous Integration (CI): Trigger specific tests based on code changes for faster CI cycles.

The ability to run individual tests is a potent tool that can dramatically improve your development workflow. Making it part of your daily routine streamlines your process and helps you write more robust code. It is a key component of effective testing strategies, improving the overall quality and maintainability of software projects.

Command Line Mastery for Single Test Execution

The command line is where the true power of pytest shines when it comes to running specific tests. This section explains the different ways you can target individual tests, from selecting whole files to zeroing in on particular functions. Learn how to construct commands that pinpoint exactly what you need, whether it's a module, a class, or a single test function. This precision is key for efficient debugging and focused development.

Basic Test Selection

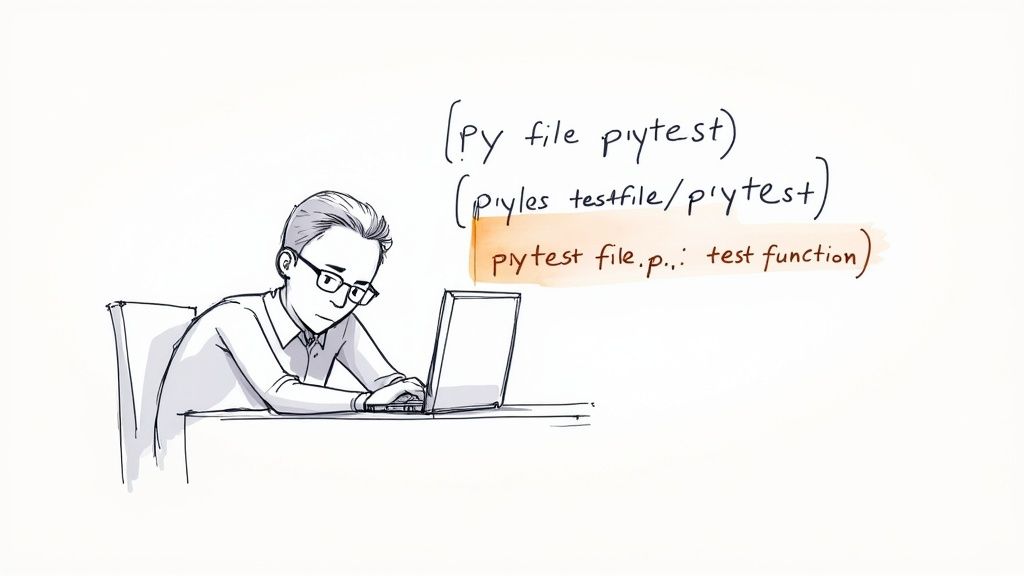

One of the simplest ways to run a single test is by specifying the file path and the test function name.

For example, to run the test_addition function inside the test_calculations.py file, use: pytest tests/test_calculations.py::test_addition. This is a quick and easy way to target one specific test.

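You can also target a test method inside a class by appending the class name and then the method name, each separated by double colons. For instance, pytest tests/test_calculations.py::TestCalculations::test_addition runs only the test_addition method of the TestCalculations class. By default, pytest only collects classes whose names start with Test (unittest.TestCase subclasses are the exception), so a plain class named something like CalculationsTests would not be found. This narrows the scope of your test execution even further.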
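A hypothetical tests/test_calculations.py shows both targets side by side. It is a sketch, not an actual project file; the class uses the Test prefix that pytest's default collection rules look for.

```python
# tests/test_calculations.py (hypothetical contents)


def test_addition():
    # Node ID: tests/test_calculations.py::test_addition
    assert 1 + 1 == 2


class TestCalculations:
    # Collected because the name starts with "Test" and the class defines no __init__.

    def test_addition(self):
        # Node ID: tests/test_calculations.py::TestCalculations::test_addition
        assert 2 + 2 == 4

    def test_subtraction(self):
        # Not run when only ::TestCalculations::test_addition is requested.
        assert 5 - 3 == 2
```

Passing tests/test_calculations.py::TestCalculations (the class without a method name) runs every test method in that class.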

Advanced Command Flags

Pytest provides command-line flags that complement single test execution. The -v flag produces verbose output, listing each test's full node ID next to its result (PASSED, FAILED, and so on). This is especially helpful when analyzing failures, because you can see exactly which tests ran and how each one ended.

The -x flag is another important one. It stops the testing session after the first failure. This speeds up debugging by immediately highlighting the problem test, so you don't have to wait for the entire test suite to complete. This is particularly useful in larger projects, where running the full suite can be very time-consuming.

Pattern Matching for Multiple Tests

You can run multiple related tests without executing the entire suite by using pattern matching with the -k flag. For example, pytest -k "addition or subtraction" will run any tests with either "addition" or "subtraction" in their names. This is a more focused testing approach, allowing you to group and run related tests together.
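To make the selection concrete, consider a hypothetical tests/test_math.py with three tests. The comments note which tests the -k expression above picks; the module and test names are assumptions for illustration.

```python
# tests/test_math.py (hypothetical contents)


def test_addition():
    assert 2 + 3 == 5


def test_subtraction():
    assert 5 - 3 == 2


def test_multiplication():
    assert 2 * 3 == 6


# pytest -k "addition or subtraction" tests/test_math.py
#   runs test_addition and test_subtraction;
#   test_multiplication is reported as deselected.
```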

Troubleshooting Test Selection

Sometimes, your test selection might not behave as expected.

- File Paths and Test Names: Ensure they're correct, paying close attention to case sensitivity.

- -k Expressions: Double-check them for accurate pattern matching, especially with complex logical operators. Even a small typo can stop the right tests from running.

The following table provides practical command patterns for running specific tests with pytest:


Pytest Commands for Running Single Tests: Common command patterns for running specific tests with pytest

| Command Pattern | Description | Example | When to Use |
|---|---|---|---|
| `pytest <path_to_file.py>::<test_function>` | Run a specific test function | `pytest tests/test_math.py::test_add` | Isolate and run a single function |
| `pytest <path_to_file.py>::<test_class>::<test_method>` | Run a specific test method within a class | `pytest tests/test_math.py::TestMath::test_subtract` | Test specific class methods |
| `pytest -k <expression>` | Run tests matching a specific expression | `pytest -k "test_add or test_multiply"` | Run related tests using logical operators |
| `pytest -v <path_to_file.py>::<test_function>` | Run a specific test with verbose output | `pytest -v tests/test_math.py::test_add` | Get detailed information on a specific test run |
| `pytest -x <path_to_file.py>` | Stop execution after the first failure | `pytest -x tests/test_math.py` | Quickly isolate failing tests |

This table summarizes common ways to target specific tests, offering examples for each scenario. The "When to Use" column provides context for choosing the most effective command.

By mastering these command-line techniques, you gain fine-grained control over test execution, making pytest a powerful tool for any development team. This control speeds up development, debugging, and the integration of testing into your workflow.

Mastering the -k Flag for Precision Test Selection

Building on the command-line proficiency discussed earlier, the -k flag in pytest gives you granular control over test execution. Instead of running all tests in a file or class, the -k flag lets you select tests based on keyword expressions. This targeted approach streamlines your testing workflow, saving you valuable time.

Constructing Keyword Expressions

The -k flag uses string expressions as filters. A simple example is pytest -k "test_api", which runs any test with "test_api" in its name. The true power comes from combining expressions with logical operators.

Using and, or, and not refines your selection further. pytest -k "database and not integration" runs tests that include "database" but not "integration". Similarly, pytest -k "auth or security" targets tests related to either "auth" or "security", offering maximum flexibility.

Advanced Keyword Expression Patterns

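For more complex scenarios, use parentheses to group expressions. pytest -k "(auth or security) and not slow" selects authentication and security tests while excluding any whose names contain "slow". Because -k also matches marker names, tests decorated with @pytest.mark.slow are excluded too, although -m "not slow" states that intent more explicitly. This is particularly helpful when debugging specific parts of your code.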
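The way pytest evaluates these expressions can be approximated in a few lines of Python. The sketch below is a simplified model, not pytest's own implementation: it parses and, or, not, and parentheses, and treats every other word as a case-insensitive substring to look for in a test's names (in real pytest those names include the function, its class and module, and its marker names). The function names tokenize and matches are hypothetical.

```python
import re

# Parentheses, or a run of the characters pytest accepts in -k names.
_TOKEN = re.compile(r"\s*(\(|\)|[\w:+\-.\[\]\\/]+)")


def tokenize(expr):
    tokens, pos, expr = [], 0, expr.strip()
    while pos < len(expr):
        m = _TOKEN.match(expr, pos)
        if m is None:
            raise ValueError(f"cannot parse {expr[pos:]!r}")
        tokens.append(m.group(1))
        pos = m.end()
    return tokens


def matches(expr, names):
    """Return True if the -k style expression selects a test with these names."""
    lowered = [name.lower() for name in names]
    tokens = tokenize(expr)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def take():
        nonlocal pos
        if pos >= len(tokens):
            raise ValueError("unexpected end of expression")
        pos += 1
        return tokens[pos - 1]

    def parse_or():  # lowest precedence: a or b
        result = parse_and()
        while peek() == "or":
            take()
            rhs = parse_and()  # always parse, so every token is consumed
            result = result or rhs
        return result

    def parse_and():  # a and b
        result = parse_not()
        while peek() == "and":
            take()
            rhs = parse_not()
            result = result and rhs
        return result

    def parse_not():  # not a, (expr), or a bare name
        tok = take()
        if tok == "not":
            return not parse_not()
        if tok == "(":
            result = parse_or()
            if take() != ")":
                raise ValueError("expected ')'")
            return result
        if tok in ("and", "or", ")"):
            raise ValueError(f"unexpected {tok!r}")
        needle = tok.lower()  # case-insensitive substring match
        return any(needle in name for name in lowered)

    result = parse_or()
    if peek() is not None:
        raise ValueError(f"unexpected {peek()!r}")
    return result
```

For example, matches("(auth or security) and not slow", ["test_auth_login", "slow"]) returns False: the test name satisfies auth, but the slow marker name trips the not slow clause.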

The -k flag also works with parametrized tests, because each parameter set gets its own ID in square brackets. Suppose test_my_feature is parametrized over two arguments and one case receives the values value_1 and value_2, giving the ID test_my_feature[value_1-value_2]. You can run just that case with pytest -k 'test_my_feature[value_1-value_2]', or pass its full node ID, such as pytest 'path/to/test_file.py::test_my_feature[value_1-value_2]'. This is especially useful for debugging with specific inputs.
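A hypothetical parametrized test that produces IDs of that shape, assuming pytest is installed. For plain string parameters like these, pytest builds each case's ID by joining the values with a hyphen.

```python
# tests/test_feature.py (hypothetical contents)
import pytest


@pytest.mark.parametrize(
    "first, second",
    [
        ("value_1", "value_2"),  # ID: test_my_feature[value_1-value_2]
        ("value_3", "value_4"),  # ID: test_my_feature[value_3-value_4]
    ],
)
def test_my_feature(first, second):
    assert first.startswith("value_")
    assert second.startswith("value_")
```

Quote the expression or node ID on the command line, since some shells, such as zsh, treat square brackets as glob patterns.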

Real-World Applications and Troubleshooting

The -k flag shines when isolating tests during debugging. Imagine a bug related to user authentication. pytest -k "auth" quickly narrows down the relevant tests. For automated testing, a well-structured CI/CD pipeline is crucial. This article on CI/CD pipeline best practices provides valuable insights into optimization strategies.

Incorrect -k expressions can lead to unexpected behavior. Keep test names consistent and descriptive so they are easy to target. In current pytest versions -k matching is case-insensitive, so spelling and separators are the usual culprits rather than letter case: pytest -k "TestLogin" will still not match test_login, because the underscore in test_login breaks the substring match. These seemingly small errors can cause significant confusion. Double-check your syntax, especially with logical operators, to avoid accidentally excluding critical tests.

By mastering the -k flag, you dramatically improve testing efficiency, shortening feedback loops and speeding up development. This precision allows you to focus your testing efforts exactly where they're needed.

Streamlining Test Execution in Modern IDEs

Modern Integrated Development Environments (IDEs) significantly improve workflow efficiency, especially for running tests with pytest. Leading Python developers rely on these tools to execute tests quickly and easily. Running individual tests within your IDE simplifies debugging and accelerates development cycles. Proper IDE configuration seamlessly integrates testing into your daily coding routine.

PyCharm Integration

PyCharm, developed by JetBrains, offers excellent pytest support. It provides a built-in mechanism for running single tests. You can right-click a test and select "Run" or use the test tool window to select individual tests. This feature is invaluable when dealing with large test suites.

Imagine a project where running the full test suite takes several minutes. PyCharm lets you isolate specific test cases, saving valuable development time. This targeted approach simplifies debugging and boosts productivity by allowing you to stay within your preferred workflow. Learn more at the JetBrains guide for running single tests in PyCharm.

VS Code and Other IDEs

VS Code, another popular IDE, supports pytest through extensions. These extensions often provide similar functionality, allowing you to run single tests and view results directly within the editor. Other editors, like Atom (archived by GitHub in December 2022) and Sublime Text, can also be configured to run pytest tests using plugins. IDE integration minimizes context switching, keeping you focused on coding. It's a significant improvement over solely using the command line for running tests.

The following table compares single test execution support across different IDEs.

IDE Support for Running Pytest Tests: Comparison of features for running single tests across popular IDEs

| IDE | Test Discovery | Run Single Test Method | Debugging Support | Configuration Options |
|---|---|---|---|---|
| PyCharm | Automatic | Right-click, Test Tool Window | Integrated Debugger | Extensive Run Configurations |
| VS Code | Via Extensions | Extension Dependent | Via Extensions & Debugger | Extension & Workspace Settings |
| Atom | Via Plugins | Plugin Dependent | Plugin Dependent | Plugin & Editor Settings |
| Sublime Text | Via Plugins | Plugin Dependent | Plugin Dependent | Plugin & Editor Settings |

As shown above, PyCharm offers tightly integrated features, while other IDEs often rely on extensions or plugins to achieve similar functionality. This distinction is important when choosing the best IDE to suit your specific development needs.

Custom Run Configurations and Debugging

Most IDEs offer the ability to create custom run configurations. These configurations provide granular control over how tests are executed. You can define command-line arguments, set environment variables, and even select specific test modules or individual functions. This level of control is essential for projects with complex testing requirements.

Debugging support within IDEs is another major advantage. Integrated debuggers work seamlessly with pytest. You can set breakpoints, step through your code, and inspect variable values, all within the familiar IDE interface. This streamlined debugging process simplifies identifying and resolving errors in your test code and the code under test.

Visualizing Test Results

IDEs vary in how they present test results. Some use a straightforward pass/fail indicator, while others provide more detailed breakdowns of test execution, including timings and error messages. Some even visually represent code coverage, highlighting sections of your code not covered by tests. This visualization offers valuable insights into your test suite’s comprehensiveness.

Customizing for Workflows

Customizing your testing environment is essential. Different projects and team workflows have unique needs. Some teams favor automated testing within a highly integrated environment, while others prefer a more manual approach. IDEs cater to diverse preferences, ensuring compatibility with specific requirements. This adaptability makes them powerful tools for individual developers and teams. For example, creating distinct run configurations for unit tests, integration tests, and system tests can greatly enhance team efficiency.

By harnessing the full potential of your IDE’s testing features, you can go beyond the command line and optimize your testing process. This approach allows for more efficient test writing and debugging, ultimately improving the quality of your Python code.

Strategic Test Organization With Pytest Markers

As your test suite expands, running individual tests by filename or function name becomes less efficient. Pytest markers offer a robust solution for categorizing and selecting tests strategically, going beyond basic file or function identification. Leading development teams utilize markers to organize complex projects, improving both test selection and reporting.

Designing Custom Markers For Your Project

Pytest markers empower you to create custom categories that reflect your project’s structure. Imagine a project with unit, integration, and end-to-end tests. You can define markers like @pytest.mark.unit, @pytest.mark.integration, and @pytest.mark.e2e. This enables you to execute specific test types quickly. Running pytest -m unit, for example, executes only the unit tests.

This categorization extends beyond test types. You can use markers for features, complexity levels, or other relevant attributes. Markers like @pytest.mark.slow, @pytest.mark.database, or @pytest.mark.authentication provide granular control over test execution. This adaptable system evolves with your project’s needs.
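Custom markers work best when they are registered, so they appear in pytest --markers and don't trigger PytestUnknownMarkWarning (or an error under --strict-markers). One way to do that is a pytest_configure hook in conftest.py, sketched below; the marker descriptions are placeholders, and the same entries could instead live under the markers option in pytest.ini or pyproject.toml.

```python
# conftest.py: register the project's custom markers (descriptions are placeholders).

CUSTOM_MARKERS = {
    "unit": "fast, isolated unit tests",
    "integration": "tests that exercise several components together",
    "e2e": "end-to-end tests against a running system",
    "slow": "tests that take noticeably longer to run",
    "database": "tests that need a database connection",
    "authentication": "tests covering login and access control",
}


def pytest_configure(config):
    # pytest calls this hook once at startup; addinivalue_line appends one
    # "name: description" entry to the "markers" ini setting.
    for name, description in CUSTOM_MARKERS.items():
        config.addinivalue_line("markers", f"{name}: {description}")
```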

Combining Markers With Other Selection Techniques

Markers integrate seamlessly with other pytest selection methods, including single-test selection. For example, pytest -m integration tests/test_api.py::test_login runs test_login only if it carries the integration marker; otherwise pytest deselects it and runs nothing. This focused execution accelerates development cycles.

Combining markers with the -k flag provides even finer control. pytest -m unit -k "auth" runs only the unit tests whose names contain "auth". This is especially helpful when debugging specific features or issues. The flexibility of combining selection techniques creates a highly adaptable testing strategy.
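A hypothetical tests/test_api.py shows how these commands resolve. It assumes pytest is installed and the markers are registered (for example via the markers ini option); the login() function and the test names are invented for illustration.

```python
# tests/test_api.py (hypothetical contents)
import pytest


def login(username, password):
    """Stand-in for the code under test."""
    return username == "alice" and password == "s3cret"


@pytest.mark.integration
def test_login():
    assert login("alice", "s3cret")


@pytest.mark.unit
def test_auth_rejects_wrong_password():
    assert not login("alice", "wrong")


@pytest.mark.unit
@pytest.mark.slow
def test_report_generation():
    assert sum(range(1000)) == 499500


# pytest -m integration tests/test_api.py::test_login -> runs test_login
# pytest -m unit -k "auth"                             -> runs test_auth_rejects_wrong_password
# pytest -m "unit and not slow"                        -> runs test_auth_rejects_wrong_password
```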

Integrating Markers Into Your Workflow

Integrating markers into your Continuous Integration (CI) workflow offers numerous advantages. You can configure your CI system to run specific marked tests based on changes in a pull request. This speeds up CI cycles by eliminating unnecessary test runs.
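As a sketch of change-based selection, the hypothetical helper below maps the paths touched by a pull request to a -m expression. The directory prefixes and marker names are assumptions; a real pipeline would obtain the changed paths from its Git provider or from git diff --name-only, and this sketch falls back to the full suite whenever a change lands outside the mapped areas.

```python
# Hypothetical CI helper: choose marker-based test selection from changed paths.
PATH_TO_MARKER = {
    "src/auth/": "authentication",
    "src/db/": "database",
    "src/api/": "integration",
}


def marker_expression(changed_files):
    """Return an -m expression covering the changed areas, or None to run everything."""
    markers = set()
    for path in changed_files:
        for prefix, marker in PATH_TO_MARKER.items():
            if path.startswith(prefix):
                markers.add(marker)
                break
        else:
            # A change outside the mapped areas: be safe and run the full suite.
            return None
    return " or ".join(sorted(markers)) or None


def pytest_args(changed_files):
    """Extra command-line arguments for the CI job's pytest invocation."""
    expr = marker_expression(changed_files)
    return ["-m", expr] if expr else []
```

For a pull request touching src/auth/login.py and src/db/models.py, the job would run pytest -m "authentication or database".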

Markers also enhance test documentation and maintainability. They clearly indicate the purpose and characteristics of tests, making the codebase easier to understand, particularly in larger teams. Improved organization simplifies test maintenance by making it easier to locate and update specific test groups.

Gradual Marker Adoption

Adding markers to existing test suites doesn’t have to be disruptive. Start by applying markers to crucial test categories and gradually expand coverage over time. This incremental approach minimizes disruption to existing workflows. Prioritize marker adoption based on factors like test type, execution frequency, and relevance to common development tasks.

By strategically using markers, you cultivate a more organized and efficient test suite, reducing the need to manually select individual tests. This empowers your team to target specific areas of your application and manage the increasing complexity of your project.

Advanced Test Selection for Complex Projects

As projects grow, efficiently selecting tests becomes critical. Managing extensive test suites can become a major bottleneck, slowing down development and increasing feedback times. This section explores advanced techniques expert testers use to maintain efficiency, even with massive test suites, ensuring you run the right tests at the right time.

Leveraging Pytest Plugins for Streamlined Workflows

Several pytest plugins enhance test selection in complex projects. For example, pytest-xdist enables parallel test execution: pytest -n auto distributes the selected tests across multiple worker processes sized to your CPU cores, which can substantially reduce wall-clock time. This is particularly helpful for slower integration or end-to-end tests.

pytest-picked uses Git to find test files you have modified or added and runs only the tests in them (pytest --picked). Because it looks at changed test files rather than at the code those tests exercise, it is best suited to fast local feedback and does not replace a full run before merging.

pytest-focus lets you save and reuse test selections. This is invaluable for debugging, eliminating the need to manually re-select tests, allowing you to focus on fixing the problem.

Creating Meaningful Test Subsets

Defining meaningful test subsets is vital for efficient testing. Imagine a project with different functional areas, like user authentication, payments, and reporting. Creating subsets for each area lets you quickly test specific functions without running the entire suite, significantly reducing execution time and providing faster feedback.
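One way to define such subsets without decorating every test by hand is to tag tests by directory at collection time; pytest's documentation shows a similar hook that adds markers based on test names. The sketch assumes a hypothetical layout of tests/auth/, tests/payments/, and tests/reporting/ under the rootdir, and the area markers should be registered like any other custom marker.

```python
# conftest.py: tag each collected test with the functional area it lives in.
# The directory layout and marker names are hypothetical.
AREA_DIRS = {
    "tests/auth/": "auth",
    "tests/payments/": "payments",
    "tests/reporting/": "reporting",
}


def pytest_collection_modifyitems(items):
    for item in items:
        # nodeid is relative to the rootdir and uses "/" separators,
        # e.g. "tests/payments/test_refund.py::test_partial_refund".
        for prefix, marker in AREA_DIRS.items():
            if item.nodeid.startswith(prefix):
                item.add_marker(marker)  # add_marker also accepts a plain marker name
                break
```

With this in place, pytest -m payments runs only the payment tests, and pytest -m "auth or payments" combines two areas.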

Managing Test Dependencies

Managing dependencies is essential when running individual tests. Consider testing a user authentication feature that depends on a database connection. Ensuring the database is available and correctly configured before running these tests prevents false failures.

Pytest's fixture mechanism helps manage such dependencies. Fixtures set up preconditions for tests, ensuring each test runs in a consistent environment. This is vital for isolated test execution, allowing reliable testing of individual components without unexpected side effects.
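A minimal sketch of a fixture-managed dependency, assuming pytest is installed. An in-memory SQLite database stands in for the real one, and the table, helper, and test names are hypothetical. Because pytest resolves fixtures per test, running just this test by node ID still sets up and tears down the database.

```python
# tests/test_users.py (hypothetical contents)
import sqlite3

import pytest


def make_test_db():
    """Create a fresh in-memory database containing one known user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT PRIMARY KEY, email TEXT)")
    conn.execute("INSERT INTO users VALUES (?, ?)", ("alice", "alice@example.com"))
    conn.commit()
    return conn


def get_email(conn, name):
    """Stand-in for the code under test: look up a user's email address."""
    row = conn.execute("SELECT email FROM users WHERE name = ?", (name,)).fetchone()
    return row[0] if row else None


@pytest.fixture
def db():
    conn = make_test_db()  # setup: runs before each test that requests `db`
    yield conn             # the test body runs here
    conn.close()           # teardown: runs after the test, even if it failed


def test_get_email_for_known_user(db):
    assert get_email(db, "alice") == "alice@example.com"
    assert get_email(db, "bob") is None
```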

Extending Pytest with Custom Hooks

Pytest's flexibility extends to custom hooks, allowing you to tailor test selection to your project's unique requirements. For instance, if you need to select tests based on specific configuration settings or environment variables, custom hooks enable you to define sophisticated selection logic that seamlessly integrates with Pytest. This adaptability makes Pytest suitable for various project architectures and testing strategies.
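As an illustration, the conftest.py hook below deselects tests marked slow unless an environment variable opts in. RUN_SLOW is a hypothetical variable name; pytest_collection_modifyitems, get_closest_marker, and the pytest_deselected hook are standard pytest APIs, and reporting deselected items through that hook keeps pytest's summary line accurate.

```python
# conftest.py: environment-driven test selection (RUN_SLOW is hypothetical).
import os


def pytest_collection_modifyitems(config, items):
    if os.environ.get("RUN_SLOW") == "1":
        return  # opted in: keep every collected test

    selected, deselected = [], []
    for item in items:
        if item.get_closest_marker("slow") is not None:
            deselected.append(item)
        else:
            selected.append(item)

    if deselected:
        # Let pytest count these as "deselected" in its summary.
        config.hook.pytest_deselected(items=deselected)
        items[:] = selected  # modify in place; pytest keeps using this list
```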

Efficient test selection is crucial for maintaining development speed in complex projects. By combining Pytest's features with powerful plugins and thoughtful test organization, you create a streamlined testing workflow. This helps your team quickly identify and address issues, delivering high-quality software faster.

Ready to enhance your CI/CD pipeline and simplify your merging process? Discover Mergify today and see the difference a powerful merge automation tool can make.