pytest print to console: Debug Like a Pro

Understanding the Pytest Output Ecosystem

Debugging can be a real headache when you can't see what your code is doing. This is especially true with testing frameworks like Pytest, which handle output differently from a plain Python script. A print() call that appears immediately when you run a script may never reach your terminal during a test run.

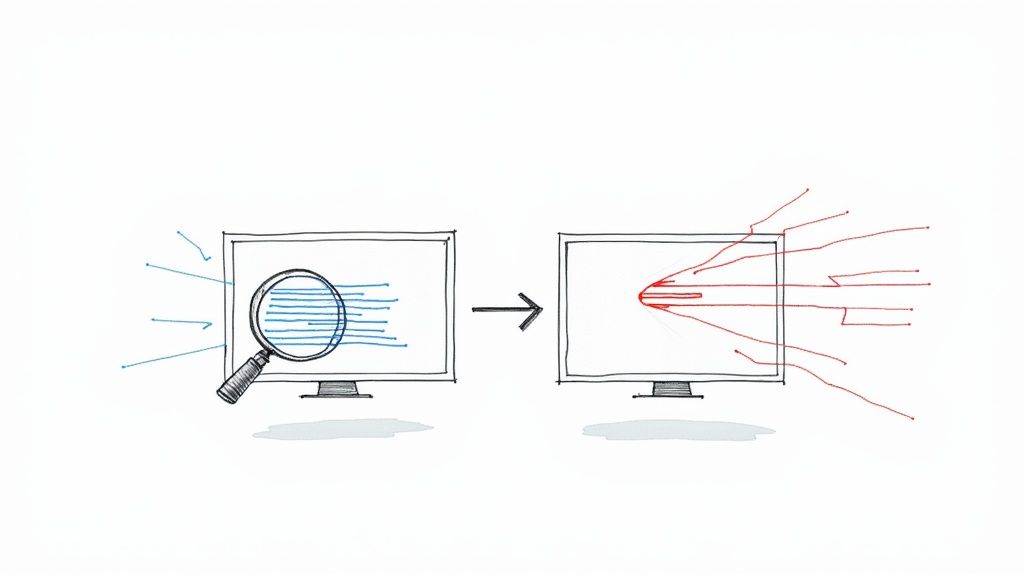

This difference stems from Pytest's default behavior of capturing standard output (stdout) and standard error (stderr). This means your helpful print statements might not appear when running tests.

This capture mechanism keeps test output clean, focusing on the results. However, it complicates debugging when you need to see intermediate steps or variable values. Imagine trying to find a plumbing leak without seeing the water flow. Pytest's default output capture is similar – clean presentation, but problematic for diagnostics. This raises the question: How can I see my print statements during a Pytest run?

Pytest offers several ways to manage and display output, giving developers control over their debugging. Verbosity flags are one tool: -v displays each test name and its result as it runs, and -vv provides even more detail. Verbosity flags do not release captured output, however. By default, print statements stay hidden unless a test fails or you disable capture with -s (short for --capture=no). Efficient test output management is crucial for streamlining debugging, especially in large projects or automated CI/CD pipelines. Learn more about this in the Pytest Output Management documentation. Used together, these options give you the information you need without overwhelming you with irrelevant details. And this is just the beginning of understanding Pytest's output ecosystem.
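To see the default behavior for yourself, here is a minimal test module. The file name test_capture_demo.py and the add_tax function are hypothetical. The comments note what each command shows.

```python
# test_capture_demo.py (hypothetical file name)
#
#   pytest test_capture_demo.py      -> test passes, print output hidden
#   pytest -v test_capture_demo.py   -> test name shown, print output still hidden
#   pytest -s test_capture_demo.py   -> print output shown live


def add_tax(amount: float, rate: float = 0.2) -> float:
    """Hypothetical function under test."""
    return round(amount * (1 + rate), 2)


def test_add_tax():
    result = add_tax(100.0)
    # Captured by default: shown only if this test fails,
    # or live when pytest runs with -s / --capture=no.
    print(f"add_tax(100.0) returned {result}")
    assert result == 120.0
```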

Mastering Pytest's Output Capture

Effective debugging with Pytest hinges on understanding and controlling its output capture mechanisms. Think of it like a recording studio. By default, microphones (capturing stdout and stderr) are on, but the speakers are muted. You can unmute the speakers (using -s) to hear the live performance (your print statements). Or, you can listen to the recording (captured output) afterward, but only if there was a problem (a failed test).

This control balances a clean testing environment with necessary debugging visibility. In the following sections, we'll explore these options and advanced techniques to master pytest print to console strategies for a more efficient debugging workflow.

Command-Line Magic: Unlocking Pytest Console Output

As we've seen, Pytest's default output capture can sometimes hide valuable debugging information. Fortunately, Pytest provides a robust set of command-line options to give you the console output you need. These options offer granular control, allowing you to see pytest print to console output precisely when necessary.

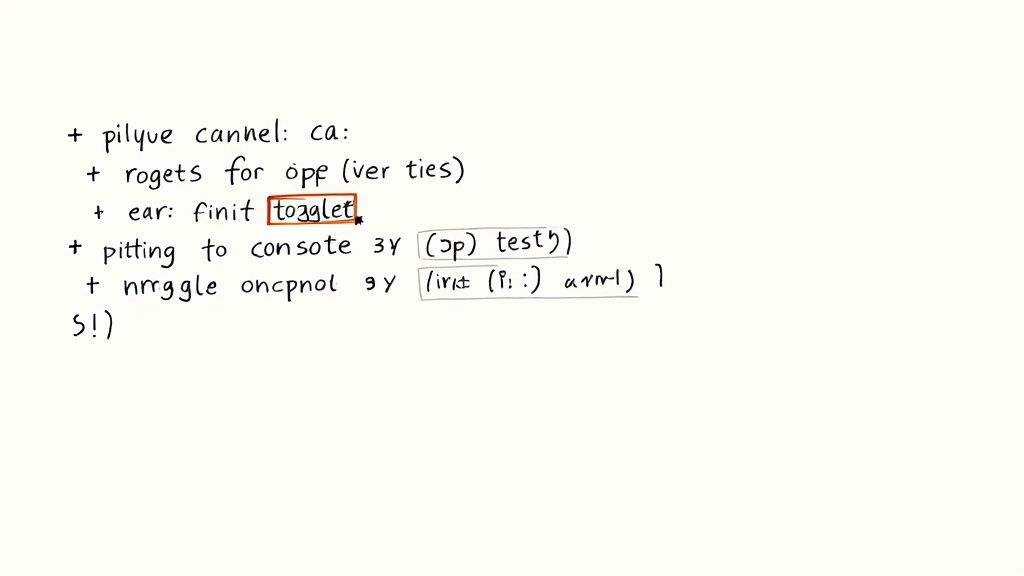

Essential Pytest Flags for Output Control

A frequently used command-line option for enabling pytest print to console output is the -s flag. This flag disables Pytest's output capture, effectively unmuting your print statements. Think of -s as a simple "show all output" switch, invaluable for quick debugging. It's equivalent to --capture=no, providing a shorter syntax for everyday use.

Another useful pair of flags is -v and -vv, which control output verbosity. The -v flag (verbose mode) displays the name and result of each test as it runs, which gives a more granular view of the testing process. The -vv flag (very verbose mode) provides even more detail, including full, untruncated diffs when assertions fail. Neither flag disables capture, so print output stays hidden unless you also pass -s. These flags are particularly helpful when diagnosing failures in larger test suites.

Combining Command-Line Options for Powerful Debugging

Combining these options can be incredibly powerful. For instance, pytest -s -v displays all print statements along with the names of the running tests. This provides a balance between detailed output and a clear overview, excellent for local troubleshooting, especially during development when understanding flow and state changes is crucial. Imagine debugging a complex data transformation; this combined output would let you track each step and ensure data integrity throughout.
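If you type the same combination often, you can also launch pytest from Python. The sketch below uses a hypothetical build_pytest_args helper and a hypothetical tests directory. pytest.main() itself is pytest's entry point for running tests in-process, and it accepts the same flags as the command line.

```python
def build_pytest_args(show_prints: bool = True, verbosity: int = 1,
                      target: str = "tests") -> list[str]:
    """Assemble pytest command-line flags (hypothetical helper)."""
    args: list[str] = []
    if show_prints:
        args.append("-s")  # same as --capture=no
    if verbosity > 0:
        args.append("-" + "v" * verbosity)  # -v, -vv, ...
    args.append(target)  # hypothetical test directory or file
    return args


def main() -> int:
    # Imported lazily so build_pytest_args works even where pytest is absent
    import pytest

    # pytest.main() takes the same arguments you would type after "pytest"
    return pytest.main(build_pytest_args(show_prints=True, verbosity=1))
```

Calling sys.exit(main()) from a small script then behaves much like running pytest -s -v tests.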

Advanced Command-Line Options

Pytest offers more advanced flags for specific output challenges. The --show-capture flag controls which captured streams appear in the report for failed tests. It accepts stdout, stderr, log, all (the default), or no. This is invaluable for focusing on particular aspects of program behavior during testing.

For example, pytest --show-capture=stderr keeps the captured standard error section in each failure report and drops the captured stdout and log sections. This is especially helpful when working with external libraries that generate verbose output, because it lets you focus on the information relevant to your tests. Note that --show-capture only filters captured output. With -s, stdout and stderr are no longer captured, so the flag has little left to filter.
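A test that writes to both streams makes the distinction concrete. In this sketch, load_config and the file names are hypothetical. When such a test fails, pytest's report lists captured output in separate sections, for example "Captured stdout call" and "Captured stderr call". The --show-capture option decides which of those sections appear.

```python
import sys


def load_config(path: str) -> dict:
    """Hypothetical function: progress goes to stdout, problems to stderr."""
    print(f"loading config from {path}")  # stdout
    if not path.endswith(".toml"):
        print(f"warning: unexpected extension in {path}", file=sys.stderr)  # stderr
    return {"path": path}


def test_load_config():
    config = load_config("settings.ini")
    # If this assertion fails, the report shows separate captured stdout and
    # stderr sections; --show-capture=stderr keeps only the stderr one.
    assert config["path"] == "settings.ini"
```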

The following table, "Pytest Console Output Command Options," compares these command-line options and their effects:

| Command Option | Equivalent | Effect on Output | Best Used When |
|---|---|---|---|
| -s | --capture=no | Disables output capture, showing all print statements live. | Quick debugging and seeing all output. |
| -v | --verbose | Displays the names and results of individual tests. | Getting a granular view of the testing process. |
| -vv | N/A | Displays very detailed output, including full, untruncated diffs for failed assertions. | In-depth debugging and failure analysis. |
| --show-capture=stdout | N/A | In failure reports, shows only captured standard output. | Debugging program output. |
| --show-capture=stderr | N/A | In failure reports, shows only captured standard error. | Debugging errors specifically. |
| -s --show-capture=stderr | --capture=no --show-capture=stderr | Shows all print statements and standard error output live. Because stdout and stderr are no longer captured, --show-capture mainly hides captured log sections in failure reports. | Comprehensive local troubleshooting. |

As you can see from the table, understanding the various command-line options allows for a highly customizable debugging experience.

These command-line options offer a versatile toolkit for controlling pytest print to console output. By understanding and combining them, you can create a debugging environment tailored to your needs, significantly improving your testing workflow. Mastering these commands transforms how you interact with Pytest's console output, making debugging more efficient and insightful.

Beyond Basic Commands: Advanced Output Control Techniques

Command-line options like -s and -v are invaluable for controlling pytest print to console output. However, sometimes you need more precise control. This is where advanced techniques become essential, offering a finer degree of management over what appears in your console.

Fixtures for Capturing Specific Outputs

Fixtures in Pytest offer a robust mechanism for managing resources during testing, and output is one of those resources. Pytest ships with the built-in capsys and capfd fixtures, which capture stdout and stderr for a single test, and you can build your own fixtures on top of them. This way, you can isolate specific tests for detailed output analysis without cluttering the console during routine test runs. It's like having a focused lens for examining particular test outputs, keeping the overall picture clean and clear.

This targeted approach provides granular control, ensuring that you see the detail you need, when you need it, without overwhelming the console with unnecessary information. It's all about finding the right balance between comprehensive logging and a clear overview.
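Here is a minimal sketch using pytest's built-in capsys fixture; the greet function is hypothetical. readouterr() returns what the test has printed so far. capsys.disabled() temporarily lets output through to the terminal, even without -s. The capfd fixture works the same way but captures at the file-descriptor level, so it also sees output from subprocesses and C extensions.

```python
def greet(name: str) -> None:
    """Hypothetical function under test that writes to stdout."""
    print(f"Hello, {name}!")


def test_greet_output(capsys):
    greet("Ada")
    # readouterr() returns everything captured so far and resets the buffers
    captured = capsys.readouterr()
    assert captured.out == "Hello, Ada!\n"
    assert captured.err == ""

    # Temporarily bypass capture so this line reaches the terminal even without -s
    with capsys.disabled():
        print(f"debug: greet() printed {captured.out!r}")
```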

Programmatic Control Over Output Visibility

Beyond fixtures, you can manage pytest print to console behavior directly within your test code. Conditional logic offers a dynamic approach, letting you determine output visibility based on the specific test context. This is particularly helpful for complex tests where output requirements vary.

Imagine a test suite where some tests require verbose logging while others only need a summary. Programmatic control gives you the flexibility to tailor the output accordingly, ensuring that the console displays only the most relevant information for each test.
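One way to sketch this is to tie output to pytest's own verbosity setting, which tests can read through the built-in request fixture. The debug helper and the test below are hypothetical. Because print output is still captured, run with both flags (for example, pytest -s -vv) to see the messages live.

```python
def debug(message: str, verbosity: int, level: int = 1) -> None:
    """Hypothetical helper: print only when verbosity reaches `level`."""
    if verbosity >= level:
        print(f"[debug] {message}")


def test_sorting_pipeline(request):
    # 0 by default, 1 with -v, 2 with -vv (negative with -q)
    verbosity = request.config.getoption("verbose")
    data = [3, 1, 2]
    debug(f"input: {data}", verbosity)             # shown from -v upward
    result = sorted(data)
    debug(f"sorted: {result}", verbosity, level=2)  # shown only from -vv upward
    assert result == [1, 2, 3]
```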

Custom Hooks and Decorators

Pytest's plugin system allows for deep customization via hooks. You can implement hook functions in a conftest.py file or a plugin to inspect captured output, then filter, reformat, or summarize it before the final report reaches your terminal. Think of it as a powerful filter system for your test output.
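As an illustration, the conftest.py sketch below implements pytest's pytest_terminal_summary hook. It adds an extra section at the end of the run that lists only the lines failed tests printed with a DEBUG prefix. The prefix convention and the filter_debug_lines helper are assumptions for this example. report.capstdout is the captured stdout that pytest stores on each test report, so it will be empty when you run with -s.

```python
# conftest.py (sketch)


def filter_debug_lines(text: str, prefix: str = "DEBUG") -> list[str]:
    """Keep only lines that start with a (hypothetical) DEBUG prefix."""
    return [line for line in text.splitlines() if line.startswith(prefix)]


def pytest_terminal_summary(terminalreporter, exitstatus, config):
    """Pytest hook: runs once at the end of the session, after the normal report."""
    failed = terminalreporter.stats.get("failed", [])
    if not failed:
        return
    terminalreporter.section("DEBUG lines from failed tests")
    for report in failed:
        # capstdout: stdout captured for this test (empty when run with -s)
        for line in filter_debug_lines(report.capstdout):
            terminalreporter.write_line(f"{report.nodeid}: {line}")
```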

Decorators offer another layer of control, allowing you to manage output selectively for specific test cases or groups of tests. This is especially helpful for tailoring output without modifying the underlying test logic. These methods provide a level of granular control that significantly streamlines your testing workflow.
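Here is a minimal decorator sketch for the case where you want every line a test prints to carry a label. labeled_output is a hypothetical name, and the redirection uses the standard library's contextlib.redirect_stdout. functools.wraps keeps the original test's name, and the buffered output is re-emitted even if the test fails. Pytest then captures the labeled lines like any other print output.

```python
import contextlib
import functools
import io


def labeled_output(label: str):
    """Hypothetical decorator: prefix every line the wrapped function prints."""
    def decorator(func):
        @functools.wraps(func)  # preserves __name__ and sets __wrapped__
        def wrapper(*args, **kwargs):
            buffer = io.StringIO()
            try:
                with contextlib.redirect_stdout(buffer):
                    return func(*args, **kwargs)
            finally:
                # Re-emit buffered output with the label, even if func raised
                for line in buffer.getvalue().splitlines():
                    print(f"[{label}] {line}")
        return wrapper
    return decorator


@labeled_output("checkout")
def test_cart_total():
    prices = [2.50, 4.00]
    print(f"prices={prices}")
    assert sum(prices) == 6.50
```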

Integrating with Pytest Plugins

Pytest boasts a thriving community and a wealth of plugins. Many of these plugins focus specifically on enhancing output or integrating with logging frameworks. Rather than building custom solutions, leveraging existing plugins can save time and effort.

Some plugins might provide specialized formatting, color-coded results, or integration with logging frameworks like the Python built-in logging library. This provides structured, searchable logs alongside your pytest print to console output.

The software testing market, valued at approximately $87.82 billion in 2021, is expected to grow to $128.46 billion by 2026. This growth underscores the increasing importance of robust testing tools like Pytest, particularly for larger projects and continuous integration pipelines where efficient output control is crucial.

By mastering these advanced pytest print to console techniques, you gain finer control over your testing environment. This leads to more efficient debugging, simplified workflows, and ultimately, more reliable tests. These techniques are especially valuable for professional developers working in complex environments where basic command-line options are insufficient.

Strategic Debugging: Making Your Print Statements Count

Mastering pytest debugging involves more than just adding print statements to your code. It's about strategically placing them to understand your application's state during testing. This targeted approach reduces debugging time and leads to stronger tests.

Structuring Your Console Output For Clarity

Unstructured console output makes debugging difficult. Imagine searching for a single grain of rice in a huge bag. A simple technique is to prefix your print statements with clear identifiers. Instead of print(variable), use print(f"Variable Value: {variable}"). This clarifies what each value represents. Consistent formatting and clear labels make different parts of your test output easier to distinguish.
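Since Python 3.8, f-strings also support a self-documenting "=" specifier that prints an expression together with its value, which saves typing the labels by hand. The apply_discount function here is hypothetical.

```python
def apply_discount(total: float, percent: float) -> float:
    """Hypothetical function used to show labeled debug output."""
    discounted = total * (1 - percent / 100)
    # "{name=}" prints "name=<value>"; a format spec can follow the "="
    print(f"{total=} {percent=} {discounted=:.2f}")
    return discounted
```

Calling apply_discount(50.0, 10) prints total=50.0 percent=10 discounted=45.00.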

Identifying Optimal Print Placement

Where you place your print statements is critical. Concentrate on points where your application’s state changes significantly. This might be before and after a function call, inside a loop, or within a conditional statement. These strategically placed prints act like breadcrumbs, revealing the execution flow and making it simpler to find errors.

Illustrating Effective Print Statements

Consider this example:

```python
def process_data(data):
    print("Starting data processing...")  # Entry point
    for item in data:
        print(f"Processing item: {item}")  # Inside loop
        # ... data processing logic ...
    print("Data processing complete.")  # Exit point
```

These print statements offer clear visibility into the processing steps. This makes it easier to track the flow and locate potential problems.

Temporary Vs. Permanent Logging: Choosing The Right Approach

Temporary print statements are great for quick debugging. However, a proper logging solution is better for long-term maintenance. Libraries like Python's built-in logging module provide structured logging with different levels (debug, info, warning, error). This allows control over output verbosity and creates a permanent record of your application's behavior, crucial for diagnosing post-deployment issues.
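Pytest integrates with the logging module directly. The following is a minimal sketch with a hypothetical parse_port function. The built-in caplog fixture captures log records so a test can assert on them. Running pytest --log-cli-level=DEBUG streams the same records to the console live, without needing -s.

```python
import logging

logger = logging.getLogger(__name__)


def parse_port(value: str) -> int:
    """Hypothetical function that logs instead of printing."""
    logger.debug("parsing port from %r", value)
    port = int(value)
    if not 0 < port < 65536:
        logger.warning("port %d is out of range", port)
    return port


def test_parse_port_logs(caplog):
    # caplog is pytest's built-in fixture for capturing log records
    caplog.set_level(logging.DEBUG)
    assert parse_port("8080") == 8080
    assert "parsing port from '8080'" in caplog.text
```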

Common Pitfalls And How To Avoid Them

Too many print statements can create information overload, hiding the real problem. Forgetting to remove temporary debug prints also leads to cluttered output. A disciplined approach is to remove temporary prints after use and rely on logging for ongoing monitoring.

To better understand the differences between these debugging methods, let's examine a comparison table.

Debugging Methods Comparison: Comparison of different debugging approaches when working with pytest

| Debugging Method | Implementation | Pros | Cons | Best For |
|---|---|---|---|---|
| print statements | Directly within the code | Quick and easy for temporary debugging. | Can clutter the console. | Ad-hoc debugging and quick checks. |
| Logging (e.g., Python's logging module) | Configurable logging framework | Structured, persistent logs with different levels of verbosity. | Requires some setup and configuration. | Long-term maintenance, production environments, and detailed analysis. |

This table highlights the trade-offs between using simple print statements and a more robust logging framework. While print statements offer a quick solution for simple debugging, logging provides better organization and persistence for complex projects and production environments.

By strategically placing print statements and giving their output a consistent structure, you can transform your debugging workflow. Combined with a robust logging strategy, these techniques will improve your ability to quickly identify and resolve issues, enhancing your software's quality and reliability.

Making Pytest Shine in CI/CD Environments

When your tests move from your local machine to automated Continuous Integration/Continuous Delivery (CI/CD) pipelines, managing what you see in the console becomes crucial for effective troubleshooting. Unlike testing on your own computer, you usually can't rerun a failing test interactively or attach a debugger in a CI/CD environment. This means you need specific techniques to ensure your pytest print to console statements give you useful information within platforms like GitHub Actions, Jenkins, and CircleCI.

Preserving Critical Output From Failed Tests

Capturing output from failed tests is paramount in CI/CD. Most CI/CD platforms offer ways to log test output. Set up your pipeline to store these logs. This way, even if you can't directly debug a failing test, you have a record of the print statements leading up to it. Think of it as a flight recorder for your tests, providing valuable post-incident analysis data.
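Whatever platform you use, the first step is getting pytest's console output into a file the pipeline can keep. Below is a minimal sketch with a hypothetical run_and_tee helper and log path. It streams a command's output to the CI console while writing a copy to disk for upload as an artifact. Adding -rA to the pytest command produces a short summary covering every outcome, including the captured output of passing tests.

```python
import subprocess
import sys
from pathlib import Path


def run_and_tee(cmd: list[str], log_path: str) -> int:
    """Run cmd, echo its output to the console, and save a copy to log_path."""
    path = Path(log_path)
    path.parent.mkdir(parents=True, exist_ok=True)
    with subprocess.Popen(
        cmd, stdout=subprocess.PIPE, stderr=subprocess.STDOUT, text=True
    ) as proc, path.open("w", encoding="utf-8") as log:
        for line in proc.stdout:
            sys.stdout.write(line)  # normal CI console output
            log.write(line)         # copy kept for artifact upload
    # Popen's context manager waits for the process, so returncode is set
    return proc.returncode


# Hypothetical CI usage:
# sys.exit(run_and_tee([sys.executable, "-m", "pytest", "-vv", "-rA"],
#                      "reports/pytest-output.log"))
```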

Filtering Noise in CI/CD Output

CI/CD environments often produce a lot of log output, making it hard to find the information you need. Using verbosity flags (like -v or -vv) strategically is essential. Too much output can hide important signals. Consider using custom logging solutions that focus on test-specific information, filtering out unnecessary system messages from the CI/CD platform.

Handling Parallelized Test Execution

Many CI/CD systems run tests in parallel to save time, typically with the -n option from the pytest-xdist plugin (for example, pytest -n 4). This can mix output from different tests, making it hard to follow individual test flows. Techniques like giving each test's output a unique identifier or using logging frameworks that handle concurrent output streams can greatly improve clarity.
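Here is a minimal sketch of the unique-identifier technique. The hypothetical tagged_print helper prefixes each line with the pytest-xdist worker name and the current test ID. The worker name comes from the PYTEST_XDIST_WORKER environment variable, which xdist sets in its workers. The test ID comes from PYTEST_CURRENT_TEST, which pytest itself sets while a test runs. Outside xdist, the worker tag falls back to "main".

```python
import os


def tagged_print(message: str) -> None:
    """Prefix output with worker and test ID so interleaved lines stay traceable."""
    # Set by pytest-xdist in worker processes, e.g. "gw0"
    worker = os.environ.get("PYTEST_XDIST_WORKER", "main")
    # Set by pytest, e.g. "tests/test_api.py::test_login (call)"; drop the phase suffix
    test_id = os.environ.get("PYTEST_CURRENT_TEST", "unknown").rsplit(" ", 1)[0]
    print(f"[{worker}] [{test_id}] {message}")
```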

Practical Examples in CI/CD Platforms

Imagine using GitHub Actions for your CI/CD. You can write pytest's output to a file (for example, by piping it through tee) and upload that file with the actions/upload-artifact action, so the output is kept after the job finishes. For example, you could store the log from pytest -vv to keep detailed information about failed tests, including your pytest print to console output.

Similarly, Jenkins keeps each build's console output, and you can archive additional log files with the archiveArtifacts step. This lets you access the full output later. To include print statements from passing tests as well as failing ones, run pytest with -s, or with -rP, which adds the captured output of passing tests to the summary. Keeping this output is a great resource for finding intermittent problems and understanding complex failures.

In CircleCI, you can use the store_artifacts step to save test logs. When combined with pytest -s, you can capture all the unfiltered output, which helps with debugging. For example, if a test fails intermittently, you can check the saved logs for print statements that show specific conditions leading to the failure, even if it doesn't happen every time.

By using these strategies, frustrating CI failures become easily diagnosable problems. Capturing the right pytest print to console output at the right time allows for faster identification and resolution, drastically cutting down debugging time and boosting your overall CI/CD efficiency. This makes your CI/CD pipeline a powerful tool for delivering high-quality software.

Real-World Pytest Print Strategies From The Trenches

Theory is important, but putting it into practice is what truly matters. This section demonstrates practical, real-world examples of how experienced Python developers use pytest print to console for debugging. We'll see how simple print statements can become powerful tools in various testing scenarios.

Data Transformation Pipelines

Imagine building a data pipeline that processes and transforms large datasets. pytest print to console is essential for verifying each step of the process. For example, insert print statements after each transformation to check the data's shape and values.

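```python
def transform_data(data):
    # clean_data() and normalize_data() are assumed to be defined elsewhere
    # and to return pandas DataFrames (hence .shape and .head()).

    # Stage 1: Data Cleaning
    cleaned_data = clean_data(data)
    print(f"Cleaned Data Shape: {cleaned_data.shape}")

    # Stage 2: Data Normalization
    normalized_data = normalize_data(cleaned_data)
    print(f"Normalized Data Sample: {normalized_data.head()}")

    # ... further processing ...
    processed_data = normalized_data  # placeholder until later stages are added
    return processed_data
```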

This approach provides immediate feedback, allowing you to quickly identify any issues that arise during transformation.
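The snippet above depends on helpers that aren't shown, so here is a self-contained variant that uses plain lists instead of pandas. Both clean_data and normalize_data are hypothetical stand-ins, not the original implementations.

```python
def clean_data(rows):
    """Hypothetical cleaning step: drop missing values."""
    return [r for r in rows if r is not None]


def normalize_data(rows):
    """Hypothetical normalization step: min-max scale values to [0, 1]."""
    low, high = min(rows), max(rows)
    span = (high - low) or 1  # avoid division by zero when all values are equal
    return [(r - low) / span for r in rows]


def test_transform_stages():
    cleaned = clean_data([4, None, 2, 8])
    print(f"Cleaned: {len(cleaned)} rows -> {cleaned}")
    normalized = normalize_data(cleaned)
    print(f"Normalized sample: {normalized[:3]}")
    assert normalized == [1 / 3, 0.0, 1.0]
```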

API Testing: Ensuring Request/Response Visibility

When testing APIs, verifying requests and responses is crucial. pytest print to console helps you inspect these interactions directly within your tests.

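```python
import requests

# Hypothetical base URL: requests needs an absolute URL, not just a path
BASE_URL = "https://api.example.com"


def test_api_endpoint():
    response = requests.get(f"{BASE_URL}/my_api_endpoint", timeout=10)
    print(f"Request Headers: {response.request.headers}")
    print(f"Response Status Code: {response.status_code}")
    # .text avoids a JSON decode error hiding a non-200 response
    print(f"Response Body: {response.text}")

    assert response.status_code == 200
```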

This provides clear insight into the API's behavior, making it easier to find discrepancies between expected and actual results. You can use the Requests library for making HTTP requests.
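If you only need the details when a test fails, another option is to put them in the assertion message. Pytest always shows that message in the failure report, with or without -s. The assert_status helper below is a hypothetical sketch. status_code and text are standard attributes of a requests Response.

```python
def assert_status(response, expected: int = 200) -> None:
    """Hypothetical helper: fail with the response details in the message."""
    assert response.status_code == expected, (
        f"expected {expected}, got {response.status_code}; "
        f"body starts with {response.text[:200]!r}"
    )
```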

Performance Testing: Precision Timing Information

In performance testing, precise timing is everything. Strategically using pytest print to console can help identify bottlenecks.

```python
import time


def test_performance():
    start_time = time.perf_counter()  # high-resolution clock for intervals
    # ... performance-critical operation ...
    end_time = time.perf_counter()
    elapsed_time = end_time - start_time
    print(f"Elapsed Time: {elapsed_time:.4f} seconds")

    assert elapsed_time < 0.5  # Performance threshold
```

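This lets you track execution time and identify areas for optimization. The built-in time module is a simple way to achieve this. For short intervals, time.perf_counter() is a better choice than time.time(), because it uses the highest-resolution clock available and is not affected by system clock adjustments.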
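For repeated measurements, a small context manager keeps the timing code out of the test body. This sketch uses hypothetical names (timed, test_sort_performance). For a suite-wide view, pytest's built-in --durations=N option also reports the N slowest tests.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label: str):
    """Hypothetical helper: print how long the enclosed block took."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        print(f"{label}: {elapsed:.4f} seconds")


def test_sort_performance():
    data = list(range(100_000, 0, -1))
    with timed("sorted() on 100k items"):
        result = sorted(data)
    assert result[0] == 1
```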

Choosing the Right Strategy

While these examples demonstrate basic print statements, remember that for more complex test suites or production environments, a dedicated logging framework like the built-in logging module provides more robust and structured logging options. The best choice between print and logging depends on the situation and your specific requirements.

Alternative Strategies and Considerations

When dealing with large volumes of output, consider combining print with command-line options such as -s and verbosity flags (-v, -vv) to manage the console output effectively. Using a structured logging framework allows you to categorize and filter output, making analysis much easier. By studying these examples and considering alternative approaches, you’ll gain a solid understanding of effective pytest print to console techniques, improving your testing efficiency and creating more robust tests. This moves your testing process from basic validation to a more professional standard.

Ready to improve your development workflow and enhance your CI/CD process? Explore Mergify and discover how it can improve your team's efficiency. Mergify offers intelligent automation for pull requests, leading to smoother code integration, reduced CI costs, and better overall code quality.