Pytest Parametrize Fixtures: Essential Testing Tips

Demystifying Pytest Parametrize Fixtures: Core Concepts

Pytest, a popular Python testing framework, offers powerful features like fixtures and parametrization to boost testing efficiency. Fixtures provide a way to manage reusable test resources. Parametrization, on the other hand, allows you to run the same test with different inputs. Combining these two – pytest parametrize fixtures – creates a dynamic and robust testing approach. This section explores the core concepts behind this potent combination and explains why they are essential for modern Python testing.

Understanding the Basics of Fixtures

Fixtures are functions that provide data or resources to test functions. They are helpful in setting up preconditions, providing test data, and handling cleanup operations. Think of a fixture as a dedicated setup crew for your tests, ensuring everything is ready before a test begins and tidying up afterward.

This approach promotes code reusability and reduces redundancy in your test suite. For instance, a fixture could establish a database connection, provide a test user, or initialize a specific application state.
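Here is a minimal sketch of that setup-and-cleanup role. The fixture opens an in-memory SQLite database, hands it to the test through yield, and closes it afterward. The create_schema helper and the users table are hypothetical stand-ins for your application's own setup.

```python
import sqlite3

import pytest


def create_schema(conn: sqlite3.Connection) -> None:
    """Hypothetical helper: create the table the tests rely on."""
    conn.execute("CREATE TABLE users (name TEXT PRIMARY KEY, role TEXT)")


@pytest.fixture
def db_connection():
    # Setup: runs before each test that requests this fixture.
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    yield conn  # the test body runs while the fixture is paused here
    # Teardown: runs after the test finishes, even if the test failed.
    conn.close()


def test_insert_user(db_connection):
    db_connection.execute("INSERT INTO users VALUES (?, ?)", ("alice", "admin"))
    row = db_connection.execute(
        "SELECT role FROM users WHERE name = ?", ("alice",)
    ).fetchone()
    assert row == ("admin",)
```

Any test that lists db_connection as an argument receives a fresh database, so tests cannot see each other's rows.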

The Power of Parametrization

Parametrization empowers you to run a single test function with multiple sets of inputs. Imagine testing a login function. Instead of writing separate tests for valid and invalid credentials, you can write one parametrized test that accepts different username-password combinations.

This drastically reduces code duplication and makes your tests more concise and maintainable. In pytest, this is the job of the pytest.mark.parametrize decorator. You pass it the argument names as a comma-separated string plus a list of argument tuples, and pytest generates and reports one test case per tuple. Explore this topic further in the official pytest documentation.
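The login example can be sketched as a single parametrized test. The login function and the VALID_USERS dictionary are hypothetical placeholders for the code under test. Only the decorator usage reflects pytest's API.

```python
import pytest

# Hypothetical credential store and login function standing in for the code under test.
VALID_USERS = {"alice": "s3cret"}


def login(username: str, password: str) -> bool:
    return VALID_USERS.get(username) == password


@pytest.mark.parametrize(
    "username,password,expected",
    [
        ("alice", "s3cret", True),   # valid credentials
        ("alice", "wrong", False),   # wrong password
        ("bob", "s3cret", False),    # unknown user
        ("", "", False),             # empty input
    ],
)
def test_login(username, password, expected):
    assert login(username, password) is expected
```

pytest reports each tuple as its own test, for example test_login[alice-s3cret-True], so a failing combination is easy to spot.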

Combining Fixtures and Parametrization

The real magic happens when you combine fixtures and parametrization. A parametrized fixture provides different values to a test function based on the parameters passed to the fixture. This is especially useful when the setup itself needs to vary based on the input.

For example, imagine testing a web application. You might have a fixture that creates a user. A parametrized version of this fixture could create different types of users (admin, regular, guest) based on the test case. This provides a more realistic and varied testing environment.

Implementing Parametrized Fixtures

To create a parametrized fixture, use the @pytest.fixture(params=[...]) decorator. The params argument takes a list of values. The fixture function reads the current value from request.param, where request is a built-in pytest fixture. Every test that uses the fixture runs once per value, and whatever the fixture returns for that value is passed to the test function.

This creates a dynamic testing environment where the setup itself changes with each parameter. One fixture can therefore cover a wider range of scenarios without duplicating setup code. This is particularly beneficial in complex testing scenarios and keeps the test suite easier to maintain.
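The user-role scenario from the previous section might look like this as a parametrized fixture. The User class and make_user factory are hypothetical. The parts that come from pytest are params, the built-in request fixture, and request.param.

```python
from dataclasses import dataclass

import pytest


@dataclass
class User:
    # Hypothetical user model; a real suite would use the application's own.
    name: str
    role: str

    def can_delete_posts(self) -> bool:
        return self.role == "admin"


def make_user(role: str) -> User:
    """Hypothetical factory: build a user for the given role."""
    return User(name=f"{role}_user", role=role)


@pytest.fixture(params=["admin", "regular", "guest"])
def user(request):
    # request.param holds the current value from params.
    return make_user(request.param)


def test_only_admins_can_delete_posts(user):
    assert user.can_delete_posts() == (user.role == "admin")
```

Because the fixture is parametrized, test_only_admins_can_delete_posts runs three times, with IDs ending in [admin], [regular], and [guest]. Any other test that requests user gets the same three variants without extra code.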

Test Function Vs. Fixture Parametrization: Choosing Wisely

Many developers face a dilemma when choosing between two pytest parametrization strategies: directly parametrizing the test function using @pytest.mark.parametrize or using parametrized fixtures. Understanding each approach is key to building a robust testing strategy. This section clarifies their differences and helps you choose the best fit for your needs.

Direct Test Parametrization: Simple and Effective

Direct test parametrization with @pytest.mark.parametrize shines when you are testing a function with various inputs. This approach is straightforward and keeps the parameters right next to the test logic. A prime example is testing a mathematical function with different number combinations. The test body stays simple, and adding a scenario means adding one more tuple to the parameter list.
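Here is a minimal sketch of direct parametrization for a mathematical function, using a hypothetical add function. It also shows pytest.param, which lets an individual case carry its own readable id. It uses pytest.approx to avoid exact comparisons of floating-point results.

```python
import pytest


def add(a: float, b: float) -> float:
    # Hypothetical function under test.
    return a + b


@pytest.mark.parametrize(
    "a,b,expected",
    [
        (2, 3, 5),
        (-1, 1, 0),
        # 0.1 + 0.2 is not exactly 0.3 in binary floating point.
        pytest.param(0.1, 0.2, 0.3, id="float-rounding"),
    ],
)
def test_add(a, b, expected):
    assert add(a, b) == pytest.approx(expected)
```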

Fixture Parametrization: Modular and Reusable

Parametrized fixtures offer modularity and reusability. They are especially useful when the setup logic needs to change based on the input. Imagine testing a web application with different user roles (admin, regular user, guest). A parametrized fixture can create the appropriate user for each test, ensuring realistic testing scenarios. This approach abstracts away setup details not central to the test itself, such as user creation.

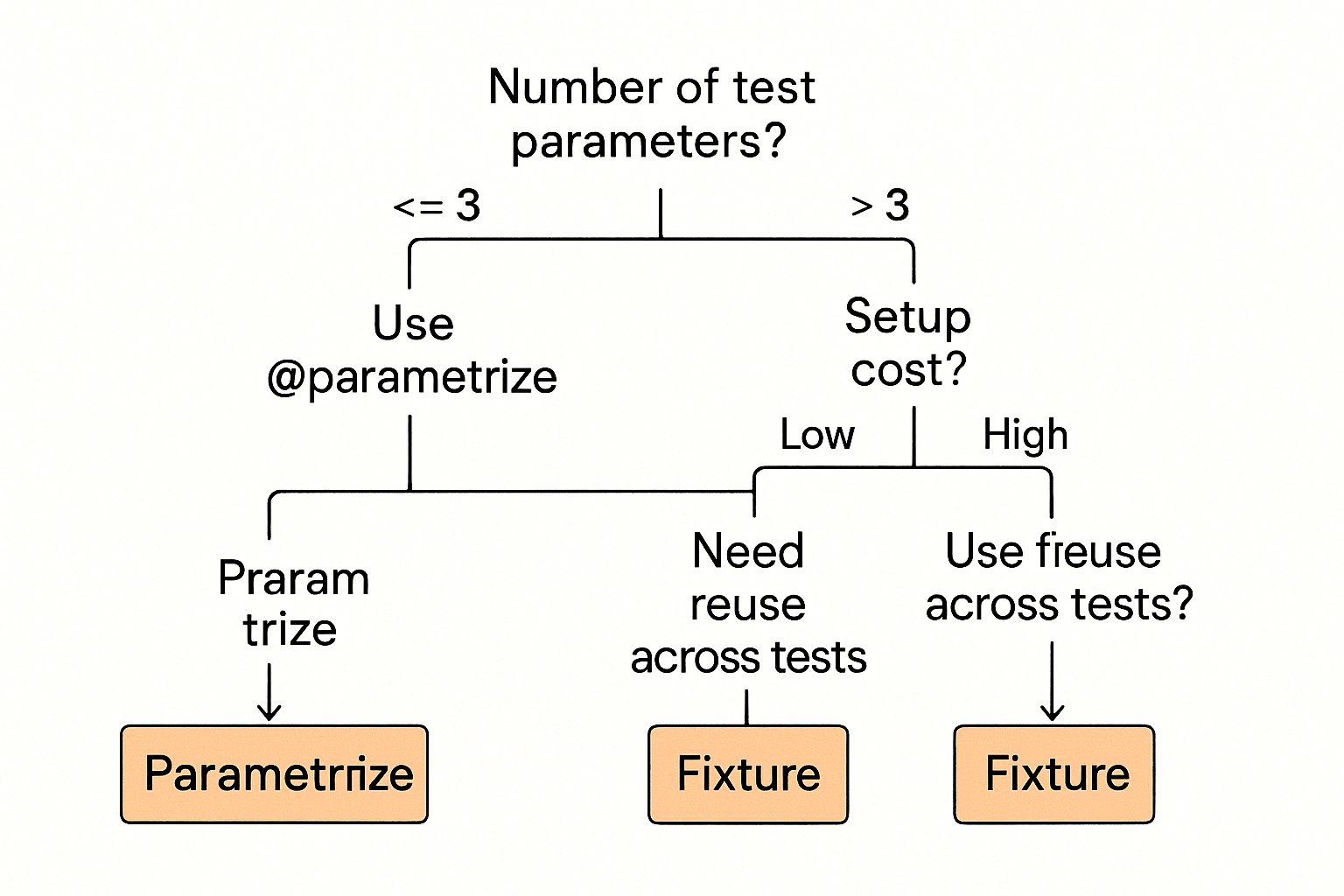

The decision tree above illustrates how to choose between direct parametrization and fixture parametrization based on the specifics of your test scenario. Begin by determining whether your setup changes based on the input. If so, use parametrized fixtures. If not, ask yourself whether the parameters apply only to a single test. If yes, use @pytest.mark.parametrize. If the parameters are used in multiple tests, opt for a regular fixture to promote code reuse.

The following comparison table summarizes the key features and best use cases of each approach.

| Feature | pytest.mark.parametrize | Parametrized Fixtures | Best Used When |
|---|---|---|---|
| Modularity | Low | High | Setup logic varies with input |
| Reusability | Low | High | Same setup logic used across multiple tests |
| Simplicity | High | Medium | Parameters are specific to one test |
| Coupling | Tightly coupled to the test function | Loosely coupled, setup logic separated | Test logic and setup are distinct concerns |
| Example | Testing a function with different numerical inputs | Testing a web app with different user roles | - |

As the table highlights, @pytest.mark.parametrize is best suited for simple scenarios where parameters are specific to a single test. Parametrized fixtures excel when the setup logic is complex and needs to be reused across multiple tests. Weighing these factors helps you write more effective pytest tests and build a more maintainable, efficient test suite, resulting in higher quality code and a more streamlined development process.

Optimizing Test Performance With Parametrized Fixtures

Test performance is crucial for efficient development. Slow tests can create bottlenecks in your CI/CD pipeline and hinder rapid iteration. This section explores how pytest's handling of parametrized fixtures can significantly improve execution speed, especially for large test suites, and walks through practical techniques for optimizing test performance.

Fixture Scoping and Resource Utilization

Fixture scoping is vital for test performance. Pytest offers several scopes for fixtures: function (the default), class, module, package, and session. Choosing the correct scope significantly impacts resource usage. For instance, a session-scoped database connection fixture is created once and reused across all tests, minimizing the overhead of repeated connections.

However, if each test needs a clean database, a function-scoped fixture might be necessary. This approach sets up and tears down the database for every test, at the cost of speed. Understanding how fixture scoping interacts with parametrization is key. A function-scoped parametrized fixture runs its setup once per parameter for every test that uses it. A module-scoped one runs it only once per parameter per module. A broader scope can therefore save a substantial number of setup executions.
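To make the scope trade-off concrete, here is a sketch of a module-scoped parametrized fixture. The open_database helper and the two DB_TARGETS are hypothetical. An in-memory SQLite database stands in for an expensive resource.

```python
import sqlite3

import pytest

# Hypothetical database targets; a real suite might use server versions or configs.
DB_TARGETS = ["primary", "replica"]


def open_database(target: str) -> sqlite3.Connection:
    """Stand-in for expensive setup: create and seed a database for `target`."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE meta (key TEXT, value TEXT)")
    conn.execute("INSERT INTO meta VALUES ('target', ?)", (target,))
    return conn


@pytest.fixture(scope="module", params=DB_TARGETS)
def db(request):
    # Module scope: one setup per target in this module (2 in total),
    # instead of one per test per target (2 x 3 = 6) with function scope.
    conn = open_database(request.param)
    yield conn
    conn.close()


# These tests only read from `db`, so sharing one connection between them is safe.
def test_meta_table_exists(db):
    tables = db.execute("SELECT name FROM sqlite_master WHERE type = 'table'").fetchall()
    assert ("meta",) in tables


def test_target_recorded(db):
    (value,) = db.execute("SELECT value FROM meta WHERE key = 'target'").fetchone()
    assert value in DB_TARGETS


def test_single_meta_row(db):
    (count,) = db.execute("SELECT COUNT(*) FROM meta").fetchone()
    assert count == 1
```

Running pytest -v on such a module should list all three [primary] tests before the [replica] ones. Pytest groups tests by the instance of a higher-scoped parametrized fixture, so each database is opened once and closed before the next one is created. If the tests wrote to db, a narrower scope would be needed to keep them isolated.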

Measuring Performance Impact

Before optimizing, establish a baseline. Pytest's built-in --durations=N option reports the N slowest setup, call, and teardown phases. Plugins such as pytest-benchmark measure the execution time of specific code. By comparing performance before and after optimization, you can quantify the impact of your changes. This data-driven approach helps identify bottlenecks and prioritize optimization efforts.

Focus on the areas with the largest potential performance gains. Some teams report CI pipeline time reductions of 40% or more through strategic fixture scoping and parametrization. Gains like these translate to faster feedback loops and quicker releases. Faster tests can also be run more often, catching errors earlier.

Common Performance Pitfalls and Solutions

Overusing function-scoped fixtures for expensive operations is a common pitfall. Refactoring into a higher-scoped fixture can drastically improve execution time if a similar setup is used across multiple tests. Parameter explosion is another issue. While powerful, excessive parametrization leads to a combinatorial explosion of test cases, slowing down execution.

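Carefully design your parametrization strategy, for example by combining parameters deliberately or by using a smaller, representative subset of the data.

Pytest also helps on its own by minimizing the number of active fixtures. When a higher-scoped fixture (such as a module- or session-scoped one) is parametrized, pytest groups the tests that use it. All of them run against the first fixture instance, and that instance's finalizers run before the next instance is created.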

This grouping is particularly helpful for fixtures that manage global state. Two instances never hold the same shared resource at the same time, which prevents conflicts and keeps tests reliable. This modular approach facilitates reliable testing, which is crucial for maintaining large codebases and ensuring quality releases.

By understanding pytest parametrize fixtures and using these techniques, you can greatly improve your test suite's performance. This not only enhances development workflow but also contributes to faster, more reliable software delivery.

Mastering Advanced Pytest Parametrize Patterns

Building upon the foundational concepts of pytest parametrization, this section explores more sophisticated techniques to enhance your testing strategy. These advanced patterns are commonly used by experienced testing teams to handle complex scenarios and improve overall test coverage.

Nested Parametrization: Conquering Interdependent Scenarios

When you need to test multiple layers of input variations, nested (stacked) parametrization is a powerful solution. This involves applying the pytest.mark.parametrize decorator multiple times to the same test function. The result is a matrix of test cases: pytest runs the test once for every combination of the stacked parameter lists.

Imagine testing a function that processes different file formats and compression algorithms. Nesting allows you to test all combinations efficiently.

```python
import pytest


@pytest.mark.parametrize("file_format", ["txt", "csv", "json"])
@pytest.mark.parametrize("compression", ["gzip", "bzip2", "none"])
def test_file_processing(file_format, compression):
    # Test logic for different combinations
    pass
```

The two stacked decorators generate 3 × 3 = 9 test cases. This gives comprehensive coverage without writing a separate test for each combination, resulting in a more concise and maintainable test suite.

Dynamic Parameter Generation: Adapting to Change

Sometimes, test parameters aren't known beforehand. Dynamic parameter generation creates parameters on-the-fly, based on factors like environmental conditions or data availability.

For example, consider testing against different database versions. You can dynamically generate parameters based on the available versions.

```python
import pytest


def get_available_db_versions():
    # Logic to retrieve available versions
    return ["1.0", "2.0", "3.0"]


@pytest.fixture(params=get_available_db_versions())
def db_version(request):
    return request.param


def test_database_compatibility(db_version):
    # Test logic for different versions
    pass
```

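Pytest calls get_available_db_versions() when it imports the test module, so the version list is not hardcoded into the fixture or the test. Replace the placeholder return value with real discovery logic and the suite adapts automatically, making it more robust.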
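Pytest also provides a hook built for this purpose. pytest_generate_tests receives a metafunc object for each collected test function and can call metafunc.parametrize on it. The sketch below uses the hook to read versions from a DB_VERSIONS environment variable. That variable name and the fallback list are assumptions for illustration.

```python
import os

# Hypothetical fallback used when DB_VERSIONS is not set.
DEFAULT_VERSIONS = ["1.0", "2.0", "3.0"]


def available_db_versions() -> list[str]:
    """Read versions from DB_VERSIONS (e.g. "2.0,3.1"), else use the defaults."""
    raw = os.environ.get("DB_VERSIONS", "")
    versions = [v.strip() for v in raw.split(",") if v.strip()]
    return versions or list(DEFAULT_VERSIONS)


def pytest_generate_tests(metafunc):
    # pytest calls this hook for every collected test function; parametrize
    # only the tests that declare a `db_version` argument.
    if "db_version" in metafunc.fixturenames:
        metafunc.parametrize("db_version", available_db_versions())


def test_version_format(db_version):
    major, _, minor = db_version.partition(".")
    assert major.isdigit() and minor.isdigit()
```

Running DB_VERSIONS=2.0,3.1 pytest would then generate two test cases instead of three. The hook can live in the test module itself or in a conftest.py file.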

Combining Multiple Parametrized Fixtures: Building Comprehensive Test Matrices

For complex scenarios, combining multiple parametrized fixtures is essential. This technique allows you to build intricate test matrices without code duplication.

Consider testing a web application across different browsers and operating systems.

```python
import pytest


@pytest.fixture(params=["Chrome", "Firefox"])
def browser(request):
    return request.param


# Named operating_system rather than os to avoid shadowing the os module.
@pytest.fixture(params=["Windows", "macOS"])
def operating_system(request):
    return request.param


def test_web_app_compatibility(browser, operating_system):
    # Test logic for browser/OS combinations
    pass
```

This method creates four distinct test cases, covering all browser and operating system combinations, demonstrating the power of combined fixtures.

The following table summarizes these advanced parametrization techniques and their use cases.

| Pattern | Implementation | Use Cases | Complexity Level |
|---|---|---|---|
| Nested Parametrization | Multiple @pytest.mark.parametrize decorators | Testing all combinations of multiple input variations (e.g., file formats and compression algorithms) | Medium |
| Dynamic Parameter Generation | Fixtures with dynamically generated parameters | Adapting tests to changing environments or data (e.g., testing against different database versions) | Medium |
| Combining Multiple Parametrized Fixtures | Multiple fixtures with different parameters | Creating complex test matrices without code duplication (e.g., testing across different browsers and operating systems) | Medium to High |

This table highlights the various ways you can use these advanced patterns to structure your tests effectively.

Real-World Examples and Solutions

These advanced patterns offer elegant solutions to complex testing challenges. One team used nested parametrization and dynamic parameter generation to significantly reduce test code while increasing coverage for a complex data pipeline.

Another team effectively tested a microservice architecture with varying dependencies by combining parametrized fixtures to simulate different versions and configurations, ensuring robust testing without unnecessary repetition. These real-world examples showcase the practical benefits of advanced Pytest parametrization in improving software quality. By implementing these techniques, you can create more efficient, maintainable, and comprehensive test suites.

Connecting External Data Sources to Parametrized Tests

Building robust test suites often involves more than just basic, predefined inputs. To accurately mirror real-world scenarios, your tests should utilize a wide range of data, frequently sourced externally. This section explores how to connect these external data sources to your pytest parameterized fixtures, enabling more comprehensive and realistic testing.

Loading Test Parameters From Files

One common method involves loading test parameters from files such as JSON, YAML, or CSV. This allows you to manage test data separately from your test code, improving maintainability. For instance, a CSV file can hold one test case per row. The example below reads a JSON file that contains a list of test cases.

```python
import json
from pathlib import Path

import pytest

# test_data.json is assumed to sit next to this file and to hold a list of
# {"input": ..., "expected_output": ...} objects.
with open(Path(__file__).parent / "test_data.json") as f:
    CASES = json.load(f)


@pytest.fixture(params=CASES)
def api_case(request):
    return request.param


def test_api_endpoint(api_case):
    # Use api_case["input"] to make the API call
    # and assert against api_case["expected_output"]
    pass
```

This technique is especially useful for testing APIs or functions with multiple input variations, keeping your test code concise and well-structured. It also allows non-technical team members to contribute to testing by modifying the external data files.

Integrating With Databases

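For larger datasets or more intricate data structures, direct integration with a database can be a valuable approach. Fixture parameters are evaluated when pytest collects the tests, before any fixture runs. The query therefore runs in a plain function, and its rows become the fixture's parameters.

```python
import sqlite3

import pytest

DB_PATH = "test_database.db"  # assumed to contain a test_cases table


def load_test_cases(db_path=DB_PATH):
    # Runs at collection time with its own short-lived connection.
    conn = sqlite3.connect(db_path)
    try:
        return conn.execute("SELECT * FROM test_cases").fetchall()
    finally:
        conn.close()


@pytest.fixture(scope="module")
def db_connection():
    conn = sqlite3.connect(DB_PATH)
    yield conn
    conn.close()


@pytest.fixture(params=load_test_cases())
def db_case(request):
    return request.param


def test_database_interaction(db_case, db_connection):
    # Use data from the db_case tuple (and db_connection, if needed) in your tests
    pass
```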

This enables you to use existing data for testing, ensuring relevance to actual scenarios. Using targeted database queries lets you select specific subsets of data, especially helpful for managing extensive datasets.

Designing a Flexible Data Loading Architecture

As your test suite expands, a well-defined data loading architecture becomes essential. Create dedicated functions or classes to handle loading data from various sources. This separation of concerns promotes maintainability and allows easy switching between data sources without changing core test logic. It also simplifies updating test data as your application evolves.
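One way to structure this is sketched below, under the assumption that every source yields a list of dicts. A small registry maps file suffixes to loader functions, and tests only ever call load_cases. All names here are hypothetical. Supporting YAML would mean registering another loader built on a third-party parser such as PyYAML.

```python
import csv
import json
from pathlib import Path
from typing import Callable

# Hypothetical registry mapping file suffixes to loaders that return a list of dicts.
Loader = Callable[[Path], list]
LOADERS: dict[str, Loader] = {}


def register(suffix: str):
    """Decorator that registers a loader for files ending in `suffix`."""
    def wrap(func: Loader) -> Loader:
        LOADERS[suffix] = func
        return func
    return wrap


@register(".json")
def load_json(path: Path) -> list:
    with path.open() as f:
        return json.load(f)


@register(".csv")
def load_csv(path: Path) -> list:
    with path.open(newline="") as f:
        return list(csv.DictReader(f))


def load_cases(path: Path) -> list:
    """Single entry point for tests; the file suffix selects the loader."""
    try:
        loader = LOADERS[path.suffix.lower()]
    except KeyError:
        raise ValueError(f"no loader registered for {path.suffix!r}") from None
    return loader(path)
```

A fixture could then be parametrized with load_cases(Path("cases.json")), where cases.json is a hypothetical file. Switching that file to CSV requires no change to the test code. Note that csv.DictReader returns every value as a string, so CSV-backed tests may need to convert types.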

Efficiently Handling Large Datasets

Large datasets present unique obstacles. Loading the entire dataset into memory can slow down your test suite. Consider strategies like lazy loading or pagination to load data as needed. Implement filtering or sampling in your data loading functions to reduce the amount of data per test run, focusing on relevant test cases.
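Here is a sketch of the filtering and sampling ideas, assuming CSV input with a header row. Pytest materializes every parameter list at collection time, so streaming mainly saves memory on the rows you discard. The cap on the number of cases is what keeps the run short. The function names are hypothetical.

```python
import csv
import itertools
import random
from pathlib import Path
from typing import Callable, Iterator, Optional


def iter_rows(path: Path) -> Iterator[dict]:
    """Yield CSV rows one at a time instead of reading the whole file."""
    with path.open(newline="") as f:
        yield from csv.DictReader(f)


def select_cases(
    path: Path,
    keep: Callable[[dict], bool] = lambda row: True,
    limit: Optional[int] = None,
) -> list:
    """Filter rows while streaming and stop reading after `limit` matches."""
    matching = (row for row in iter_rows(path) if keep(row))
    return list(itertools.islice(matching, limit))


def sample_cases(cases: list, k: int, seed: int = 1234) -> list:
    """Random subset of at most k cases; the fixed seed makes it reproducible."""
    if len(cases) <= k:
        return list(cases)
    return random.Random(seed).sample(cases, k)
```

For example, params=sample_cases(select_cases(Path("users.csv"), keep=lambda row: row["status"] == "active"), k=50) would run at most 50 active-user cases, assuming a hypothetical users.csv with a status column. With the same Python version, the fixed seed selects the same 50 cases on every run.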

The flexibility of pytest's parametrization features extends to integrating external data sources. Developers can externalize test data into files like YAML or JSON, which are then used to parametrize tests. This simplifies test data management, particularly for complex or large datasets. For example, user profiles with various attributes (such as active or inactive status) can be defined in an external file. Pytest's parametrization then runs the test once for each profile. This improves test coverage by consistently executing the relevant test cases across different environments. Learn more about pytest parametrization in Pytest Parametrization Explained.

Connecting external data sources to your pytest parameterized fixtures allows your tests to mirror real-world usage patterns, creating a more thorough and valuable testing process. This enables confident delivery of high-quality software equipped to handle the complexities of real-world scenarios.

Pytest Parametrize Fixtures in Action: Real-World Examples

Having explored the core concepts and advantages of pytest parametrize fixtures, let's see how they tackle real-world testing problems. These examples showcase their practical use across various fields, from web development to data processing.

Case Study 1: Streamlining Fintech Testing

A fintech company needed to test various transaction scenarios across different account types. Initially, they had separate tests for each combination, leading to a large, redundant codebase. By using pytest parametrize fixtures, they significantly reduced their test code.

They created a fixture providing different account types (e.g., checking, savings, investment) based on input parameters. This fixture was then used with another parametrized fixture for transaction types (e.g., deposit, withdrawal, transfer).

This combined approach streamlined testing, reducing the test code base by 60% and improving test coverage. All combinations were thoroughly tested. This resulted in more manageable test suites and faster execution times.

Case Study 2: Comprehensive Healthcare API Validation

A healthcare API required thorough endpoint validation. Each endpoint needed testing with various valid and invalid input data, including different authentication credentials. Using pytest parametrize fixtures, they created a fixture to supply different authentication tokens based on user roles.

This fixture worked in conjunction with another parametrized fixture yielding various request payloads. This included both valid and invalid data, allowing them to test all endpoint functions, including authentication, data validation, and error handling.

Parametrizing API requests and user roles within fixtures improved the healthcare API testing process. This led to comprehensive endpoint validation and improved test coverage. It also made tests more adaptable to changes in authentication or data formats.

Case Study 3: Automating E-commerce User Journeys

An e-commerce platform wanted to automate testing of complex user journeys, involving multiple steps like adding items to a cart, applying discounts, and completing checkout with various payment methods. They used pytest parametrize fixtures to create fixtures representing different stages of the user journey.

These fixtures injected parameters for item selection, discount codes, and payment details, enabling automated testing of various user flows and edge cases. This ensured a smooth and reliable checkout process. Maintainability also improved, as changes to a specific journey stage could be easily updated within its corresponding fixture.
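The journey-stage idea can be sketched with fixtures that depend on other fixtures. Everything domain-specific below is hypothetical: Cart, checkout, the discount codes, and the payment methods. The pytest mechanics on display are fixture chaining and the params argument.

```python
from dataclasses import dataclass, field

import pytest


@dataclass
class Cart:
    # Hypothetical cart model standing in for the real application.
    items: dict = field(default_factory=dict)
    discount: float = 0.0

    def add(self, sku: str, price: float) -> None:
        self.items[sku] = price

    def total(self) -> float:
        return round(sum(self.items.values()) * (1 - self.discount), 2)


DISCOUNTS = {"NONE": 0.0, "SAVE10": 0.10}  # hypothetical discount codes


def checkout(cart: Cart, method: str) -> dict:
    # Hypothetical checkout step that returns a receipt.
    if method not in {"card", "paypal"}:
        raise ValueError(f"unsupported payment method: {method}")
    return {"method": method, "charged": cart.total()}


@pytest.fixture
def cart():
    # Stage 1: a cart with items already selected.
    c = Cart()
    c.add("book", 20.0)
    c.add("pen", 5.0)
    return c


@pytest.fixture(params=sorted(DISCOUNTS))
def discounted_cart(cart, request):
    # Stage 2: builds on `cart`, applying each discount code in turn.
    cart.discount = DISCOUNTS[request.param]
    return cart


@pytest.fixture(params=["card", "paypal"])
def payment_method(request):
    return request.param


def test_checkout_total(discounted_cart, payment_method):
    # 2 discount codes x 2 payment methods = 4 journeys.
    receipt = checkout(discounted_cart, payment_method)
    assert receipt["method"] == payment_method
    assert 0 < receipt["charged"] <= 25.0
```

Because discounted_cart requests cart, pytest builds each stage in order, and the two parametrized fixtures combine into four journeys. A change to how discounts are applied touches only the discounted_cart fixture.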

Refactoring and Measurable Improvements

These examples highlight the effectiveness of pytest parametrize fixtures. Refactoring often involves identifying repetitive test setup logic and extracting it into parametrized fixtures. This improves developer productivity by reducing code duplication and enhancing test readability.

The advantages go beyond code organization. Teams often see significant improvements in test reliability and reduced debugging time. This leads to faster development cycles and higher-quality software. Testing numerous scenarios with minimal code changes makes pytest parametrize fixtures a valuable asset for any Python project. It promotes comprehensive test coverage and a more efficient development process.

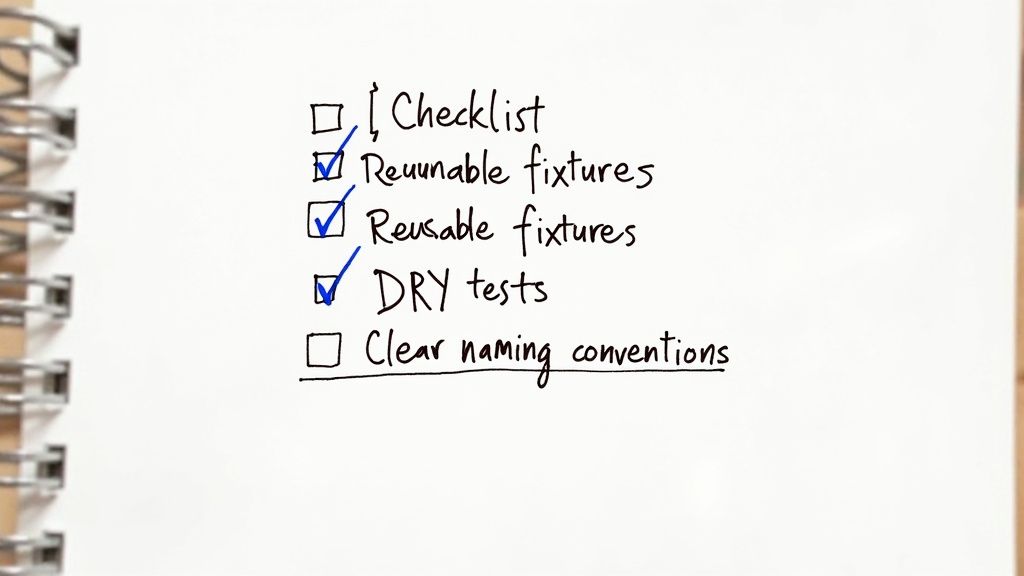

Avoiding Pitfalls: Pytest Parametrize Fixture Best Practices

Successfully implementing pytest parametrize fixtures requires careful planning to avoid common problems that can lead to frustrating debugging and inefficient tests. This section highlights some frequent pitfalls and offers practical solutions for writing clean, effective, and maintainable tests.

Scope Conflicts: Understanding Fixture Lifecycles

A common issue stems from misunderstanding fixture scopes. If a parametrized fixture has a broader scope (like module or session) than the tests using it, unexpected behavior can occur. For instance, if a module-scoped fixture modifies a shared resource, later tests in that module using the same fixture will encounter the modified state, potentially causing test failures.

- Solution: Carefully choose the right scope for your fixtures. If tests need isolated states, use function scope. If resources can be safely shared, consider class or module scope for performance gains.
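A common way to get both speed and isolation is sketched below with a hypothetical load_catalog loader. The expensive load runs once in a module-scoped fixture. Each test then receives its own copy through a function-scoped fixture that depends on it.

```python
import copy

import pytest


def load_catalog() -> dict:
    # Hypothetical expensive load (database query, large file, etc.).
    return {"book": 20.0, "pen": 5.0}


@pytest.fixture(scope="module")
def catalog_template():
    # Loaded once per module and never modified directly.
    return load_catalog()


@pytest.fixture
def catalog(catalog_template):
    # Each test gets its own deep copy, so mutations cannot leak between tests.
    return copy.deepcopy(catalog_template)


def test_remove_item(catalog):
    del catalog["pen"]
    assert "pen" not in catalog


def test_catalog_is_complete(catalog):
    assert set(catalog) == {"book", "pen"}
```

test_remove_item mutates only its copy, so test_catalog_is_complete still sees both items regardless of execution order. Had the tests requested catalog_template directly, the deletion would leak into later tests in the module.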

Parameter Explosion: Managing Test Case Combinations

While powerful, overusing parametrization can create a combinatorial explosion of test cases. This can significantly slow down test execution and make it harder to find the cause of failures. Imagine testing a function with three parameters, each accepting five values. This creates 5 * 5 * 5 = 125 test cases. Adding one more parameter with five values jumps the total to 625.

- Solution: Strategically combine parameters. If you don't need all combinations, use a representative subset. Consider dynamically generating parameters based on relevant criteria. This balances thorough testing with reasonable execution time.
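The sketch below puts numbers on the explosion and shows one way around it. It builds the full 5 × 5 × 5 matrix alongside an "each choice" subset in which every value appears at least once. The value lists and the extra hand-picked combination are illustrative. zip works here only because all three lists have the same length.

```python
import itertools

import pytest

FORMATS = ["txt", "csv", "json", "xml", "yaml"]
COMPRESSIONS = ["gzip", "bzip2", "xz", "zip", "none"]
ENCODINGS = ["utf-8", "latin-1", "utf-16", "ascii", "cp1252"]

# Full Cartesian product: 5 * 5 * 5 = 125 test cases.
FULL_MATRIX = list(itertools.product(FORMATS, COMPRESSIONS, ENCODINGS))

# "Each choice" subset: zip pairs the i-th values so every value is used once
# (5 cases), plus one combination you specifically suspect (hypothetical here).
REPRESENTATIVE = list(zip(FORMATS, COMPRESSIONS, ENCODINGS)) + [("csv", "none", "utf-16")]


@pytest.mark.parametrize("file_format,compression,encoding", REPRESENTATIVE)
def test_round_trip(file_format, compression, encoding):
    # Test logic for this combination goes here
    pass
```

Six cases instead of 125 will not catch every interaction bug. However, each value is still exercised, and the known-risky combination is always covered.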

Naming Conventions: Enhancing Readability and Maintainability

Clear and consistent naming conventions improve test readability and maintainability. Use descriptive names for fixtures and parameters that clearly explain their purpose. For example, use valid_user_data or invalid_input_data instead of just data.

- Solution: Create and follow naming standards for your project. This helps everyone (including your future self) understand the purpose of your tests and parameters.

Over-Parametrization: Finding the Right Balance

Parametrizing everything can make tests overly complex and hard to understand. Focus on the core functionality you're testing. Don't parametrize aspects that don't directly affect the test's outcome.

- Solution: Use parametrization wisely. Focus on the inputs and conditions most relevant to the test's goal. Avoid overly complex test scenarios that obscure the core test logic.

Organization and Structure: Scaling Your Test Suite

As your project grows, maintaining a well-organized test suite is crucial. Group related tests together, use descriptive file names, and use pytest’s marking system to categorize tests by functionality or priority.

- Solution: Create a clear organizational structure for your tests. Consider grouping tests by feature or module. This simplifies navigating and maintaining your test suite as it grows.
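Here is a minimal sketch of categorizing tests with markers. The checkout and slow marker names and the cart_total function are hypothetical. A module-level pytestmark applies a marker to every test in the module, while a decorator marks a single test.

```python
import pytest

# Every test in this module gets the `checkout` marker.
pytestmark = pytest.mark.checkout


def cart_total(prices):
    # Hypothetical function under test.
    return round(sum(prices), 2)


def test_cart_total():
    assert cart_total([20.0, 5.0]) == 25.0


@pytest.mark.slow
def test_large_cart_total():
    assert cart_total([0.5] * 10_000) == 5000.0
```

pytest -m checkout then runs only this feature's tests, and pytest -m "not slow" skips the long-running ones. Registering the markers in the markers setting of pytest.ini or pyproject.toml avoids PytestUnknownMarkWarning and lets --strict-markers catch typos.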

By following these best practices, you can avoid common pytest parametrize fixture problems and create a robust, efficient, and maintainable test suite. This ensures your tests remain valuable throughout your project's lifecycle.

Ready to optimize your CI/CD workflow? Explore Mergify.