Mastering Pytest Fixtures: Build Efficient Test Suites

The Power of Pytest Fixtures: Why They Matter

Imagine setting up a complex science experiment. You meticulously calibrate instruments, prepare solutions, and carefully control the environment. Repeating this for every single test would be incredibly tedious. That's where pytest fixtures come in. They're like your lab assistants, handling the repetitive setup and teardown for your Python tests. This frees you to concentrate on the core of your work: the test logic itself.

Pytest fixtures are functions decorated with @pytest.fixture. They establish a defined and consistent baseline for your tests. By ensuring each test runs in a known state, fixtures eliminate unexpected side effects and improve the reliability of your results. For instance, if several tests require a database connection, a fixture can establish and close this connection before and after each test, maintaining data consistency and preventing resource leaks.

This approach differs significantly from traditional setup methods, where each test function often handles its own setup and teardown. As your test suite expands, this method becomes unwieldy and prone to errors. Duplicated code also makes maintenance difficult, requiring changes in multiple places. Pytest fixtures offer a centralized, reusable way to manage test dependencies. This modularity also enhances readability, simplifying onboarding for new team members. For more background on testing, take a look at this introduction to web application testing.

Why Fixtures Are a Game Changer

Pytest is a popular testing framework for Python, and its fixtures streamline testing by minimizing redundancy. A 2023 Stack Overflow survey showed that 57.6% of professional developers rely on pytest, highlighting its wide adoption. Fixtures provide a way to define reusable code for test setup, which is especially beneficial in larger projects where setup can be extensive.

Fixtures also significantly improve test isolation. By providing a fresh environment for each test, they prevent unintended interactions, leading to more accurate results. This isolation simplifies debugging by pinpointing the cause of failures without the worry of interference. Moreover, fixtures provide better control over resources, letting you manage everything from database connections to network settings consistently.

Beyond Basic Setup: Advanced Fixture Capabilities

Pytest fixtures offer more than simple setup and teardown. They provide a range of features to handle complex testing scenarios. You can parametrize fixtures to run the same test with varying inputs, reducing code duplication. Fixtures can also depend on other fixtures, creating a hierarchy that mirrors your application's structure. This allows you to build sophisticated test environments efficiently.

Imagine needing to test user authentication. A fixture could create a test user, log them in, and log them out after the test. Any test requiring an authenticated user could then use this fixture, ensuring a consistent and secure testing environment. This control and reusability are what make pytest fixtures so powerful for building robust and maintainable test suites. This, in turn, contributes to improved software quality and faster development cycles.
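As a rough sketch of the authentication scenario, the fixture below logs a user in before the test and logs them out afterwards. FakeAuthService, its methods, and the username are hypothetical stand-ins invented for this illustration, not part of pytest or any real authentication library.

```python
import pytest


class FakeAuthService:
    """Hypothetical in-memory auth service standing in for a real backend."""

    def __init__(self):
        self.active_sessions = set()

    def login(self, username):
        self.active_sessions.add(username)

    def logout(self, username):
        self.active_sessions.discard(username)

    def is_logged_in(self, username):
        return username in self.active_sessions


@pytest.fixture
def auth_service():
    return FakeAuthService()


@pytest.fixture
def logged_in_user(auth_service):
    username = "test_user"
    auth_service.login(username)   # setup: runs before the test body
    yield username
    auth_service.logout(username)  # teardown: runs after the test body


def test_user_is_authenticated(auth_service, logged_in_user):
    assert auth_service.is_logged_in(logged_in_user)
```

Any test that lists logged_in_user as a parameter starts with an authenticated user and leaves no session behind.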

From Zero to Hero: Implementing Your First Fixtures

Now that we understand the importance of pytest fixtures, let's explore how to create them. We'll take a hands-on approach, demonstrating their practical application from basic syntax to more advanced concepts.

The Anatomy of a Pytest Fixture

A pytest fixture is simply a function decorated with @pytest.fixture. This decorator tells Pytest that this function provides a resource or performs setup actions for your tests. Within this function, you define the logic for creating and returning the resource. This could involve creating a database connection, initializing an object, or even just returning a value.

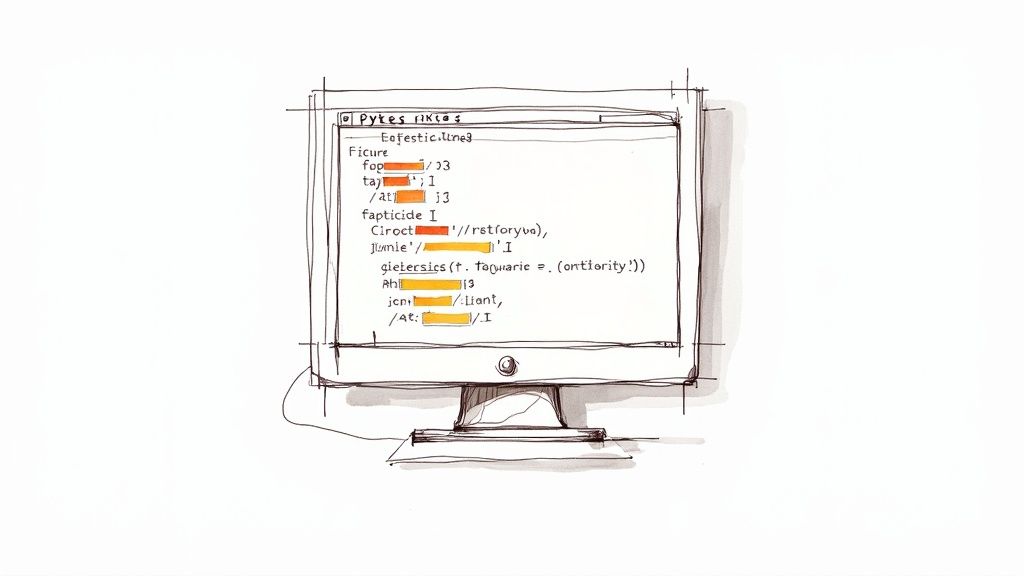

Let's create a fixture providing a simple string:

```python
import pytest


@pytest.fixture
def my_string():
    return "Hello, fixtures!"


def test_string(my_string):
    assert my_string == "Hello, fixtures!"
```

Here, my_string is the fixture function. The test_string function receives the return value of my_string by including it as a parameter. This streamlined approach eliminates the need for explicit setup calls in your test function.

Maximizing Reusability With Fixture Hierarchies

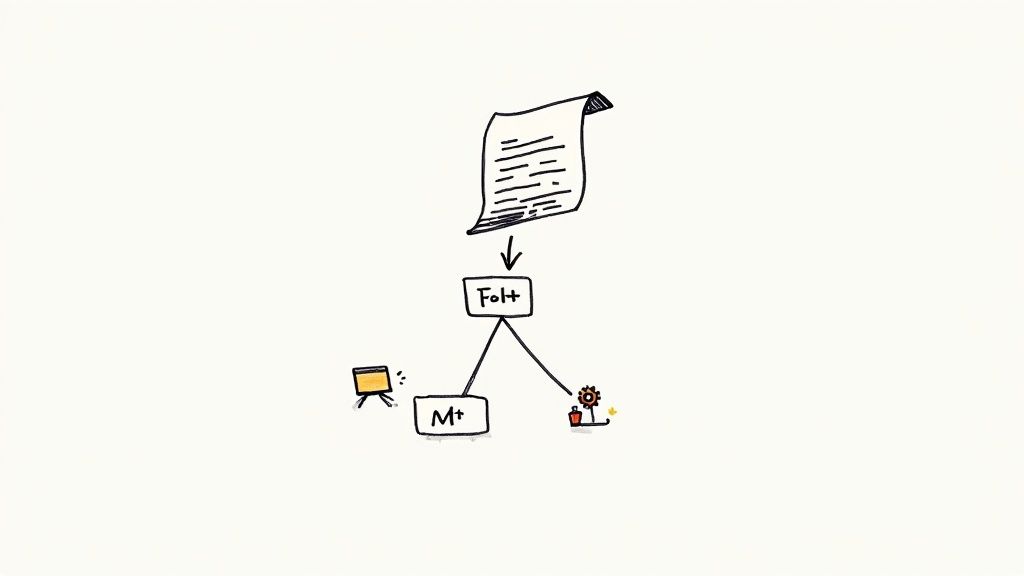

To get the most out of fixtures, experienced developers use fixture hierarchies. This involves creating fixtures that depend on other fixtures. This is especially helpful when setting up complex scenarios requiring multiple resources or configurations.

Imagine you need both a database connection and a user object for a set of tests. You could create a user fixture that depends on a db_connection fixture:

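```python
import sqlite3

import pytest


@pytest.fixture
def db_connection():
    # Establish the database connection.
    # An in-memory SQLite database is used here purely for illustration.
    connection = sqlite3.connect(":memory:")
    return connection


@pytest.fixture
def user(db_connection):
    # Create a user using the db_connection (table layout is illustrative).
    db_connection.execute("CREATE TABLE users (name TEXT)")
    db_connection.execute("INSERT INTO users VALUES ('alice')")
    user = db_connection.execute("SELECT name FROM users").fetchone()
    return user
```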

A test that requests user receives the user object, and pytest automatically sets up the database connection it depends on. This simplifies test functions, increasing maintainability and reducing duplicate code.

Scope and Naming: Key Considerations for Effective Fixtures

Fixture scope is a crucial element. Pytest provides different scopes, from function (the default, which runs the fixture for each test) to session (runs once per test session). Choosing the right scope significantly affects test performance, especially for resource-intensive setups like database connections.

If you need a database connection for many tests, a session-scoped fixture can significantly improve speed. The connection is established only once and reused across the entire session.
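A minimal sketch of a session-scoped connection might look like the following. It assumes an in-memory SQLite database as a stand-in for whatever database your project actually uses.

```python
import sqlite3

import pytest


@pytest.fixture(scope="session")
def db_connection():
    # Created once for the whole test session, then shared by every test.
    connection = sqlite3.connect(":memory:")
    yield connection
    # Teardown runs once, at the end of the session.
    connection.close()


def test_select_one(db_connection):
    assert db_connection.execute("SELECT 1").fetchone() == (1,)


def test_select_two(db_connection):
    # Receives the same connection object as test_select_one.
    assert db_connection.execute("SELECT 2").fetchone() == (2,)
```

Because both tests share one connection, any state a test writes to it remains visible to later tests, which is the trade-off that comes with broader scopes.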

Clear, descriptive names are also essential. Use consistent naming conventions for fixtures. For example, prefixes like mock_ or test_ quickly clarify the fixture's purpose, improving readability and team collaboration.

The following table breaks down each fixture scope, with a description, common use cases, and a practical example.

Pytest Fixture Scope Levels

| Scope | Description | When to Use | Example |
|---|---|---|---|
| function | Runs once per test function | Default scope; suitable for most cases where a fresh instance is needed for each test | Creating a temporary file |
| class | Runs once per test class | When a resource is shared amongst all tests in a class | Setting up a class-level database connection |
| module | Runs once per module | When setup is required at the module level | Initializing module-level configurations |
| package | Runs once per package | When a shared resource is used across multiple modules within a package | Setting up package-wide external dependencies |
| session | Runs once per test session | Ideal for expensive resources like database connections that can be reused across multiple tests | Initializing a database connection for the entire test suite |

This table highlights the key distinctions between each fixture scope, allowing for more effective fixture utilization in your test suite. Choosing the appropriate scope is essential for optimizing test performance.

Transforming Existing Tests With Pytest Fixtures

Refactoring current tests to utilize fixtures might seem like a big task, but the improvements are worth the effort. Begin by identifying common setup code in your tests. These parts are ideal candidates for fixture extraction. By gradually moving these blocks into dedicated fixtures, you'll steadily enhance the organization and maintainability of your test suite.

This process, while potentially requiring some initial time investment, leads to a more robust and efficient testing framework. Your code becomes easier to understand, debug, and extend, significantly improving the overall quality of your testing process.

Beyond Basics: Advanced Pytest Fixture Techniques

Building on the fundamentals of pytest fixtures, let's delve into techniques that significantly improve your testing process. These advanced strategies empower you to create dynamic test environments and tackle complex scenarios, leading to efficient and maintainable test suites. Automated testing has become a cornerstone of modern software development, with pytest fixtures playing a crucial role. The World Quality Report 2023 indicates that 77% of organizations utilize automated testing. Pytest fixtures are a common practice, especially within Python projects. Learn more at the pytest fixtures documentation.

Parametrized Fixtures: Testing Multiple Scenarios

Repeatedly testing the same logic with different scenarios can lead to redundant code. Parametrized fixtures offer a solution by enabling a single test function to run with various inputs. This is invaluable for testing diverse configurations, datasets, or boundary conditions.

For instance, consider validating email addresses. A parametrized fixture could supply a list of valid and invalid email formats. This allows you to execute the same validation test against all emails without rewriting the test logic, simplifying maintenance and updates.
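The email scenario could be sketched roughly as follows. The is_valid_email function and its deliberately simple pattern are hypothetical stand-ins for the code under test; the params argument and request.param are the parts that pytest itself provides.

```python
import re

import pytest

# Deliberately simplified pattern for illustration only; real-world email
# validation is considerably more involved.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def is_valid_email(address):
    """Hypothetical function under test."""
    return EMAIL_PATTERN.match(address) is not None


EMAIL_CASES = [
    ("user@example.com", True),
    ("first.last@example.org", True),
    ("missing-at-sign.example.com", False),
    ("user@no-dot", False),
]


@pytest.fixture(params=EMAIL_CASES, ids=[address for address, _ in EMAIL_CASES])
def email_case(request):
    # request.param holds the current (address, expected) pair.
    return request.param


def test_email_validation(email_case):
    address, expected = email_case
    assert is_valid_email(address) is expected
```

Pytest runs test_email_validation once per entry in EMAIL_CASES, so adding a new case means adding one tuple rather than a new test.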

Fixture Factories: Dynamic Test Environments

Sometimes, tests need objects created on demand. A fixture factory is a fixture that returns a function rather than a finished object; the test calls that function, possibly several times and with different arguments, to build what it needs during execution. This is particularly helpful for managing complex configurations or when tests require slightly modified versions of the same resource.

Imagine testing API interactions with varying user roles. A fixture factory could create users with specific permissions tailored to each test, ensuring a flexible and adaptable testing environment.
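One way to sketch the user-role scenario is a fixture that yields a factory function. The user dictionaries, the role names, and can_delete are hypothetical and exist only to give the factory something to produce.

```python
import pytest


@pytest.fixture
def make_user():
    created = []

    def _make_user(name, role="viewer"):
        user = {"name": name, "role": role}
        created.append(user)
        return user

    yield _make_user
    # Teardown: a real factory would delete the created records here.
    created.clear()


def can_delete(user):
    """Hypothetical permission check under test."""
    return user["role"] == "admin"


def test_only_admin_can_delete(make_user):
    admin = make_user("alice", role="admin")
    viewer = make_user("bob")
    assert can_delete(admin)
    assert not can_delete(viewer)
```

Tracking the created objects inside the fixture lets a single teardown step clean up however many users a test asked for.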

Fixture Composition: Building Complex Setups Efficiently

Fixture composition involves creating fixtures that rely on other fixtures, mirroring your application's structure. This is especially powerful for complex setups requiring multiple interconnected resources. By structuring these dependencies, you build sophisticated test environments efficiently.

A database fixture might depend on a configuration fixture, which in turn relies on a logging fixture. This layered approach simplifies the code and clarifies each fixture's responsibility.
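The layered chain described above might look something like this sketch. The settings dictionary and the in-memory SQLite database are assumptions made to keep the example self-contained.

```python
import logging
import sqlite3

import pytest


@pytest.fixture
def logger():
    return logging.getLogger("tests")


@pytest.fixture
def config(logger):
    # Depends on logger; the settings shown are illustrative.
    settings = {"database_path": ":memory:"}
    logger.debug("Loaded test settings: %s", settings)
    return settings


@pytest.fixture
def database(config):
    # Depends on config, and therefore indirectly on logger.
    connection = sqlite3.connect(config["database_path"])
    yield connection
    connection.close()


def test_database_is_usable(database):
    assert database.execute("SELECT 1").fetchone() == (1,)
```

The test only names database; pytest resolves config and logger on its own by following the fixture parameters.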

Autouse Fixtures and Yield Fixtures

Autouse fixtures execute automatically for every test within their scope without explicit declaration. They're ideal for background processes, logging, or operations requiring consistent execution. However, use them carefully to avoid unintended consequences.

Yield fixtures elegantly handle both setup and teardown actions. Code before the yield statement performs setup, while the code after handles teardown. This ensures a clean test environment, regardless of test outcomes, and is essential for proper resource management, such as closing database connections.
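Both ideas can be combined in one small sketch: an autouse fixture that uses yield to set an environment variable and restore it afterwards. The variable name APP_ENV is an assumption for illustration; pytest's built-in monkeypatch fixture offers setenv for the same purpose, and the manual version here simply makes the setup and teardown halves visible.

```python
import os

import pytest


@pytest.fixture(autouse=True)
def app_env():
    # Setup: runs before every test in this module, with no explicit request.
    previous = os.environ.get("APP_ENV")
    os.environ["APP_ENV"] = "test"
    yield
    # Teardown: runs after the test, whether it passed or failed.
    if previous is None:
        os.environ.pop("APP_ENV", None)
    else:
        os.environ["APP_ENV"] = previous


def test_runs_in_test_environment():
    # app_env is not listed as a parameter, yet it has already run.
    assert os.environ["APP_ENV"] == "test"
```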

Practical Application: Streamlining Your Test Suite

These advanced techniques are crucial for effectively testing complex Python applications. By applying them strategically, you can create pytest fixtures that are:

- Reusable: Write once, use many times, saving development time.

- Maintainable: Centralized logic makes updates and modifications easier.

- Scalable: Adapt to expanding test suites without sacrificing efficiency.

- Robust: Reliable setup and teardown contribute to accurate and consistent testing.

Integrating these techniques results in a pytest-powered test suite that enhances reliability and developer productivity, leading to higher-quality code and faster development cycles.

Conquering Database and API Testing With Fixtures

Integration tests, especially those involving databases and APIs, can be notoriously tricky. They're often flaky and hard to manage. Luckily, pytest fixtures offer powerful tools to make these essential tests more robust and reliable. Many leading development teams now rely on pytest fixtures to create consistent and isolated testing environments.

This shift has resulted in some significant improvements. Teams see increased reliability and better developer productivity. One report indicated a 30% reduction in test failures after adopting fixtures due to the improved isolation they offer. Accurate bug identification and fixes rely on this kind of stability. You can learn more about Python testing through resources like this Python testing tutorial.

Database Testing With Pytest Fixtures

Database testing is simplified with pytest fixtures. They handle the sometimes complex setup and teardown of database instances for each test. This guarantees a clean slate for every test, eliminating unwanted side effects and boosting the accuracy of results. Fixtures also streamline database migrations, making sure the schema is correct for every single test.

- Setup: Fixtures can create fresh database instances, apply migrations, and seed any required data before a test runs.

- Testing: Test functions can then interact with this pre-configured database instance, confident in its state.

- Teardown: Once the test is complete, the fixture cleans up. It might drop the database or revert it to a known good state, preventing resource leaks and maintaining a tidy test environment.

This isolation ensures that database changes from one test don't affect others, creating consistent and dependable outcomes.
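The setup, testing, and teardown steps above can be sketched with an in-memory SQLite database. The users table and the seed row are hypothetical, and a real project would typically run its migration tool where the schema statement appears.

```python
import sqlite3

import pytest

SCHEMA = "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"


def create_seeded_database():
    """Build a fresh in-memory database with the schema and one seed row."""
    connection = sqlite3.connect(":memory:")
    connection.execute(SCHEMA)
    connection.execute("INSERT INTO users (name) VALUES ('alice')")
    connection.commit()
    return connection


@pytest.fixture
def db():
    connection = create_seeded_database()  # setup
    yield connection                       # the test runs here
    connection.close()                     # teardown discards the in-memory data


def test_insert_user(db):
    db.execute("INSERT INTO users (name) VALUES ('bob')")
    assert db.execute("SELECT COUNT(*) FROM users").fetchone() == (2,)


def test_starts_with_seed_data_only(db):
    # Unaffected by test_insert_user: each test gets its own database.
    assert db.execute("SELECT name FROM users").fetchall() == [("alice",)]
```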

API Testing With Pytest Fixtures

API integrations are a critical piece of most applications. Thoroughly testing these integrations is vital, but it can present real challenges. Pytest fixtures help to manage the complexity of API testing in a structured and manageable way. You can mock external API responses, simulating a range of situations, from successful requests to errors and timeouts.

- Mocking Responses: Fixtures can mock different API responses, simulating success, error codes, and specific data formats.

- Authentication: They can handle the setup of authentication, ensuring your tests use the correct credentials to access the API.

- Complex Scenarios: Fixtures allow you to simulate complex scenarios such as rate limiting, network issues, or particular server behaviors.

Building Reliable Test Environments

Imagine an API that provides user data. A fixture could be set up to:

- Mock a Successful Response: This would return a predefined JSON structure containing user details.

- Mock an Error Response: This would simulate a server error or an invalid request.

- Mock a Timeout: This tests how your application handles API delays.

Using fixtures for these scenarios offers precise control over the testing environment. This granular level of control allows you to validate how your application behaves under various conditions, leading to more reliable API interactions.
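The three scenarios could be sketched as three small fixtures. To stay self-contained, this example passes the HTTP callable into a hypothetical fetch_user_name function instead of patching a real HTTP library; the function, the URL, and the response shape are all assumptions for illustration.

```python
from unittest.mock import Mock

import pytest


def fetch_user_name(http_get, url):
    """Hypothetical client code under test; http_get mimics an HTTP GET call."""
    try:
        response = http_get(url, timeout=5)
    except TimeoutError:
        return None
    if response.status_code != 200:
        return None
    return response.json()["name"]


@pytest.fixture
def api_success():
    response = Mock(status_code=200)
    response.json.return_value = {"id": 1, "name": "alice"}
    return Mock(return_value=response)


@pytest.fixture
def api_server_error():
    return Mock(return_value=Mock(status_code=500))


@pytest.fixture
def api_timeout():
    # Calling this mock raises instead of returning a response.
    return Mock(side_effect=TimeoutError("simulated timeout"))


def test_success(api_success):
    assert fetch_user_name(api_success, "https://example.com/users/1") == "alice"


def test_server_error(api_server_error):
    assert fetch_user_name(api_server_error, "https://example.com/users/1") is None


def test_timeout(api_timeout):
    assert fetch_user_name(api_timeout, "https://example.com/users/1") is None
```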

Consistent and Trustworthy Validation

Pytest fixtures transform unpredictable integration tests into a predictable and dependable process. Instead of worrying about setup and teardown, developers can focus on writing effective test logic. This is especially valuable in continuous integration and continuous deployment (CI/CD) pipelines, ultimately accelerating development velocity and time-to-market for new features. By adding pytest fixtures to your testing strategy, you empower your team to manage complex database and API integrations with greater efficiency, resulting in a stronger and more maintainable codebase.

Turbocharging Test Performance With Smart Fixtures

Is your test suite starting to feel sluggish? As projects expand, test suites can become unwieldy and slow, a real roadblock to rapid development. Optimizing your pytest fixtures can significantly reduce these execution times. This section explores identifying performance bottlenecks, implementing efficient caching strategies, and leveraging session-scoped fixtures for a substantial performance boost.

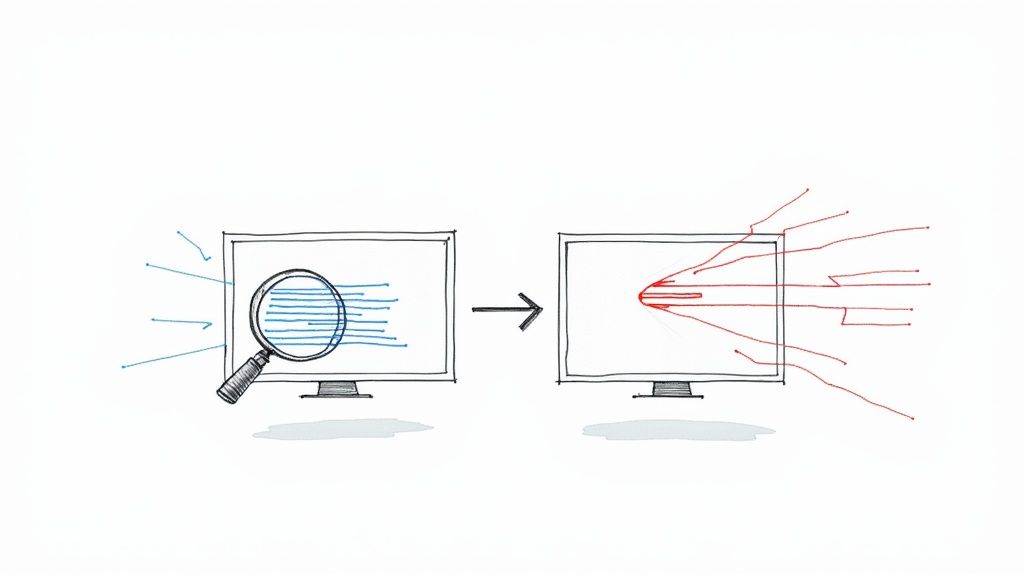

Identifying Performance Bottlenecks

Before jumping into optimization, it's crucial to pinpoint what's actually slowing down your tests. Profiling tools are invaluable for this. They offer insights into the time spent on different parts of your suite, highlighting which fixtures consume the most resources. For example, if a database connection fixture takes a long time, it’s a prime candidate for optimization. This focused approach lets you prioritize the most impactful improvements.

Caching Strategies for Fixture Optimization

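Fixture caching is a powerful way to reduce setup times. Pytest evaluates a fixture once per scope and reuses the result within that scope, so a broader scope means fewer setups; test isolation is preserved as long as tests do not modify the shared value. Imagine a fixture that loads a large dataset. Instead of reloading this data for each test, you can cache it after the first load. Subsequent tests then reuse the cached data, dramatically reducing I/O operations and processing time.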

- Function-scoped fixtures: These are helpful if you need fresh data for every single test.

- Module-scoped fixtures: These cache data for all the tests within a module. This scope is ideal when tests share a common dataset.

- Session-scoped fixtures: These cache data for the entire test session. This is very effective for resource-intensive operations like database connections or loading external resources. These fixtures are invoked only once per session, which minimizes overhead.

This strategic caching helps maintain speed without sacrificing the isolation or accuracy of individual tests.
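One possible way to combine caching with isolation is to pair a module-scoped fixture with a function-scoped one that hands out copies. The load_dataset function and its contents are hypothetical placeholders for an expensive load.

```python
import copy

import pytest


def load_dataset():
    """Stand-in for an expensive load such as reading a large file."""
    return {"rows": [1, 2, 3]}


@pytest.fixture(scope="module")
def cached_dataset():
    # Evaluated once per module; pytest reuses the returned value.
    return load_dataset()


@pytest.fixture
def dataset(cached_dataset):
    # Each test gets its own deep copy, so mutations do not leak between tests.
    return copy.deepcopy(cached_dataset)


def test_can_mutate_copy(dataset):
    dataset["rows"].append(4)
    assert dataset["rows"] == [1, 2, 3, 4]


def test_sees_original_rows(dataset):
    assert dataset["rows"] == [1, 2, 3]
```

The copy costs far less than the original load in most cases, though for very large datasets treating the cached value as read-only may be the better choice.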

Leveraging Session-Scoped Fixtures

Fixtures are also valuable for setting up and tearing down test environments, particularly in API testing. You can explore this further in this API testing tutorial. Session-scoped fixtures are particularly effective. Heavy operations like database connections, usually a major performance drain, become negligible when executed only once per session.

To illustrate the impact, let's consider some comparative benchmarks. A test suite involving many database interactions could see a 50-75% reduction in execution time by simply moving the database connection to a session scope. This kind of optimization is particularly crucial in CI/CD pipelines where speed is paramount.

Profiling and Targeted Optimizations

Profiling helps you pinpoint exactly where time is being wasted within your fixtures, enabling highly targeted optimization efforts. Perhaps a fixture performs complex calculations that could be simplified or optimized with more efficient algorithms. Profiling will expose these areas, allowing you to focus your efforts where they’ll have the greatest impact. This helps maintain fast feedback cycles even as your test suite grows. Continuous monitoring and optimization of fixture performance is essential to maintaining a rapid development pace, even with a very large number of tests.

To illustrate potential performance gains, let’s take a look at the following table:

Fixture Performance Comparison: Illustrative figures showing how execution time can differ between fixture optimization strategies

| Optimization Strategy | Setup Time | Test Execution Speed | Memory Usage | Best For |
|---|---|---|---|---|
| No Optimization | High (e.g., 5s per test) | Slow | High | N/A (avoid) |
| Function-Scoped Caching | Moderate (e.g., 2s per test) | Moderate | Moderate | Tests needing fresh data |
| Module-Scoped Caching | Low (e.g., 1s initial, then negligible) | Fast | Moderate | Tests sharing common data within a module |
| Session-Scoped Caching | Very Low (e.g., 5s once per session) | Very Fast | High | Expensive operations used across many tests |
| Algorithmic Optimization | Variable (dependent on algorithm) | Fast | Variable | Fixtures with complex calculations |

This table showcases a few common strategies and their potential impact. The actual performance gains will vary based on the specifics of your tests and fixtures. However, even small improvements add up across a large suite. By strategically applying these techniques, you can significantly boost your test performance and keep your development cycles fast and efficient.

Fixture Best Practices From the Trenches

Building upon the basics of pytest fixtures, let's explore practical strategies used by teams managing large test suites. These best practices focus on maintainability, clarity, and efficiency, helping you build a robust fixture foundation that scales with your project. Teams using fixtures often report a 50% reduction in debugging time, thanks to the improved clarity and organization they offer.

Organizing Fixtures for Scalability

As your project grows, the number of fixtures inevitably increases. Effective organization is crucial to prevent fixture sprawl and maintain a clear overview.

- Modular Design: Group related fixtures into separate files within a fixtures directory. This modularity enhances discoverability and allows for focused maintenance. For example, database-related fixtures could live in fixtures/database.py, while API fixtures could reside in fixtures/api.py.

- Conftest.py for Shared Fixtures: Use conftest.py files strategically to share fixtures across multiple test files within a directory or package. Avoid overusing conftest.py for every fixture. Large conftest.py files can become difficult to navigate and maintain.

Naming Conventions for Clarity

Well-chosen fixture names significantly improve code readability and team collaboration.

- Descriptive Names: Choose names that clearly explain the fixture's purpose. For example, db_connection is better than conn.

- Consistent Prefixes: Use prefixes to categorize fixtures. mock_api_response immediately indicates a mocked API response, while test_user suggests a fixture providing a test user object. This consistency makes it easy to understand fixture roles.

Avoiding Common Pitfalls

Fixtures, while powerful, can present challenges if not used carefully. Being aware of these pitfalls can prevent debugging headaches.

- Circular Dependencies: Fixtures depending on each other can create unsolvable cycles. Ensure a clear hierarchy and avoid scenarios where fixture A relies on B, and B relies on A.

- Scope Conflicts: Overusing broad scopes like session can lead to unexpected side effects if fixtures modify shared state. Favor narrower scopes when possible and use yield fixtures to ensure proper cleanup, reducing the risk of test interference.

- Overuse of Autouse: While convenient, autouse fixtures can obscure test dependencies and make debugging more challenging. Use them sparingly for truly global setup and consider explicit fixture injection for better clarity.

Building a Future-Proof Fixture Strategy

Implementing these best practices creates a solid foundation that supports your team’s current needs and adapts to future growth. This structured approach improves maintainability, readability, and scalability, resulting in a more efficient and reliable testing process. These practices contribute to a well-organized, easy-to-understand, and adaptable testing structure, reducing friction and maximizing the value of your tests. A robust testing strategy directly contributes to increased developer productivity and more dependable software.

Pytest Fixtures in CI/CD: From Local to Production

Moving tests smoothly from local development to a robust CI/CD pipeline can be tricky. Used strategically, however, pytest fixtures can bridge this gap, ensuring consistent testing across development stages. Let's explore how effective teams use pytest fixtures to maintain test integrity and efficiency, from developer machines to production deployments.

Handling Environment-Specific Dependencies

A key challenge in CI/CD is managing environment-specific dependencies. Your local machine and your CI server may have different libraries or configurations. Pytest fixtures help manage these variations without needing multiple fixture sets.

- Configuration Files: Keep environment-specific configurations (like database credentials) in separate files (e.g., config_local.py, config_ci.py).

- Conditional Fixture Logic: Use environment variables to choose the right configuration file within your fixtures. This allows your fixture to adapt to the environment. For example:

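```python
import importlib
import os

import pytest


@pytest.fixture
def db_connection():
    env = os.environ.get("ENVIRONMENT", "local")  # Default to local
    # Load config_ci.py or config_local.py as a regular Python module;
    # this assumes both files are importable from the test directory.
    if env == "ci":
        config = importlib.import_module("config_ci")
    else:
        config = importlib.import_module("config_local")
    # ... use config to establish the connection
```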

This ensures the correct settings are used without adding environment-specific logic to your tests. It also allows for consistent test setup across different environments, reducing inconsistencies between local and CI test results. Reports show teams using pytest fixtures streamline testing and increase test coverage by an average of 25%. Higher test coverage usually means better software quality and fewer production bugs. Learn more at the pytest fixtures documentation.

Parallelizing Fixture-Based Tests

CI environments often support parallel test execution for quicker feedback. Fixtures can be complex when running tests concurrently. Pytest handles this with fixture scopes and dependency management.

- Session-Scoped Fixtures: For resources shared across tests (like a database connection), use the session scope. This initializes the resource once per test session, minimizing overhead. Keep in mind that plugins such as pytest-xdist run a separate session in each worker process, so a session-scoped fixture is set up once per worker rather than once for the whole run.

- Proper Scope Selection: Choose the narrowest scope for other fixtures (function, class, module) to maximize test isolation and prevent interference during parallel runs. Structuring fixture dependencies carefully avoids race conditions and ensures test isolation.
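If the parallel runner is pytest-xdist (an assumption; the text above does not name a specific plugin), one way to keep workers from colliding is to give each its own resource name. The sketch below relies on the worker_id fixture that pytest-xdist provides; the test_db naming scheme is hypothetical.

```python
import pytest


def database_name_for(worker_id):
    """Derive a per-worker database name (the naming scheme is hypothetical)."""
    # pytest-xdist reports "master" when tests are not being distributed.
    if worker_id == "master":
        return "test_db"
    return f"test_db_{worker_id}"


@pytest.fixture(scope="session")
def database_name(worker_id):
    # worker_id is a fixture supplied by the pytest-xdist plugin, with values
    # such as "gw0" or "gw1"; this fixture requires the plugin to be installed.
    return database_name_for(worker_id)
```

Each worker then creates and tears down its own database, so parallel tests never write to the same tables.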

Managing External Service Dependencies in CI

Many applications rely on external services. Testing these in CI requires careful planning. Pytest fixtures, with mocking and containerization, can manage these dependencies effectively.

- Containerized Dependencies: Use Docker to containerize dependencies (like databases or message queues). Fixtures can start and stop containers, providing consistent test environments in CI. This prevents test contamination and accurately reflects deployment conditions.

- Effective Mocking: For services difficult or expensive to containerize, mocking is essential. Fixtures create mock objects simulating external services, letting you test interactions without depending on the actual service during CI runs.

- Example - Mocking an API Response:

```python
from unittest.mock import patch

import pytest
import requests


@pytest.fixture
def mock_api_response():
    with patch("requests.get") as mock_get:
        mock_response = mock_get.return_value
        mock_response.status_code = 200
        mock_response.json.return_value = {"data": "mocked_data"}
        yield mock_response


def test_api_integration(mock_api_response):
    # Use requests.get; it will be patched to return the mock response
    response = requests.get("https://example.com/api")
    assert response.json()["data"] == "mocked_data"
```

This makes tests robust and less prone to failures from external service issues, improving your CI/CD pipeline's reliability.
