Master Pytest Fixtures: Boost Python Testing

The Power and Purpose of Pytest Fixtures

Pytest fixtures are a powerful mechanism for streamlining setup and teardown processes in software testing. They address a common developer pain point: managing resources like database connections, temporary files, or network mocks. Without a structured approach, this management can become unwieldy. Fixtures bring order to this potential chaos, providing a reusable and maintainable solution.

Why Use Pytest Fixtures?

Traditional test setup often involves redundant code within each test function. This repetition bloats the test suite and makes maintenance difficult. Imagine changing database connection parameters – you'd have to modify numerous test functions individually. Fixtures centralize these setup steps into reusable functions, drastically reducing code duplication and improving maintainability and readability.

For example, if several tests require a connection to a test database, a fixture can establish this connection. Each test needing this connection can then simply request the fixture, avoiding repeated connection setup code. Pytest fixtures are also inherently modular. They can be as simple or complex as needed, handling resource setup, data loading, or even more sophisticated test-specific actions.

This modularity fosters code reusability. A fixture created for one test suite can be reused in another, maximizing efficiency. If you have a standard setup procedure for all database tests, you can encapsulate that logic in a fixture and share it across projects (for example, by packaging it as a pytest plugin). This reusability saves significant time and reduces the risk of inconsistencies between different test setups. Pytest fixtures are crucial for Python testing, especially for setting up and tearing down resources. Interest in the topic is high: as of February 2025, a detailed pytest tutorial on LambdaTest had more than 251,000 views, highlighting pytest's popularity in automated testing.

Benefits of Using Pytest Fixtures

Fixtures offer several key advantages for more efficient and reliable testing:

- Improved Code Organization: Fixtures encapsulate setup and teardown logic, making test code cleaner and more focused on the actual test logic.

- Reduced Code Duplication: Centralized setup and teardown procedures eliminate redundant code, shrinking the overall test suite size and improving maintainability.

- Increased Test Reliability: Standardized fixtures create consistent and predictable test environments, reducing the likelihood of unexpected test failures due to inconsistent setups.

- Enhanced Test Reusability: Fixtures can be shared and reused across multiple tests and even different test suites.

- Improved Test Scalability: As your project grows, fixtures make managing increasingly complex test environments easier.

By using these features, pytest fixtures help developers build robust, maintainable, and scalable test suites. They contribute to faster development cycles, lower testing costs, and ultimately, higher quality software. These benefits highlight why fixtures have become essential to modern Python testing.

Creating Your First Pytest Fixture That Actually Works

Building effective Pytest fixtures doesn't have to be intimidating. Let's move beyond the theoretical and dive into practical construction. We'll begin with basic function fixtures, the most common type, and gradually explore more advanced techniques employed by professional developers. This practical approach will equip you to create fixtures that tackle real-world testing scenarios.

Structuring Fixture Functions

A Pytest fixture is essentially a Python function decorated with @pytest.fixture. This decorator indicates to Pytest that this function provides resources or performs setup/teardown actions for tests. For instance, a simple fixture might establish a database connection:

```python
import sqlite3

import pytest


@pytest.fixture
def db_connection():
    # Code to establish database connection
    # (an in-memory SQLite database stands in for a real server here)
    connection = sqlite3.connect(":memory:")

    # Provide the connection to tests
    yield connection

    # Code to close the connection
    connection.close()
```

Notice the use of yield. This keyword hands the connection object to any test that requests the fixture. Critically, the code following yield runs after the test finishes, even if the test fails, so the connection is always closed. This structure is vital for maintaining a clean and predictable test environment. The same pattern works for preparing other kinds of test environments, such as setting the stage for API tests.
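To make the yield mechanics concrete, here is a plain-Python sketch (no pytest needed) that drives an undecorated generator roughly the way pytest consumes a yield fixture: one next() call runs the setup and hands over the value, and resuming the generator after the test runs the teardown. The run_with_fixture helper and the dict standing in for a connection are illustrative assumptions, not pytest internals.

```python
events = []  # records the order in which setup, test, and teardown happen


def db_connection_gen():
    """Same shape as a yield fixture, but undecorated so we can drive it by hand."""
    events.append("setup")
    connection = {"open": True}  # stand-in for a real connection object
    yield connection
    connection["open"] = False  # teardown: "close" the connection
    events.append("teardown")


def run_with_fixture(test_func):
    """Rough sketch of how a test runner consumes a yield fixture."""
    gen = db_connection_gen()
    value = next(gen)  # run setup and receive the yielded value
    try:
        events.append("test")
        test_func(value)
    finally:
        # Resume the generator so the code after `yield` runs, even on failure.
        try:
            next(gen)
        except StopIteration:
            pass
    return value


def passing_test(conn):
    assert conn["open"]


def failing_test(conn):
    raise AssertionError("simulated test failure")


conn = run_with_fixture(passing_test)
print(conn["open"], events)  # False ['setup', 'test', 'teardown']

try:
    run_with_fixture(failing_test)
except AssertionError:
    pass
print(events[-3:])  # ['setup', 'test', 'teardown']
```

The second run shows why the try/finally matters: the test raised, yet the teardown step still executed.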

Utilizing the @pytest.fixture Decorator

The @pytest.fixture decorator sits at the core of Pytest fixtures, and it accepts several options that customize fixture behavior. The following table summarizes the key parameters you can use to tune your fixtures.

Key Pytest Fixture Decorator Parameters

| Parameter | Default Value | Description | Example Usage |
|---|---|---|---|
| scope | "function" | Determines how often the fixture is invoked ("function", "class", "module", "package", or "session") | @pytest.fixture(scope="module") |
| autouse | False | Automatically activates the fixture for every test that can see it, without the test requesting it | @pytest.fixture(autouse=True) |
| params | None | List of values to parametrize the fixture; dependent tests run once per value | @pytest.fixture(params=[1, 2, 3]) |
| name | the function name | Overrides the default fixture name (the function name) | @pytest.fixture(name="my_fixture") |

These options offer fine-grained control over fixture execution and resource management, crucial for optimizing larger test suites. For example, a fixture with scope="module" will execute once per module, not once per test function. This provides performance benefits when setting up shared resources.
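The sketch below combines three of the options from the table. The names REGISTRY, make_settings, fixture_settings, and clean_registry are hypothetical. Naming the function fixture_<name> and passing name= is a convention the pytest documentation suggests for avoiding shadowing between the fixture function and the test argument.

```python
import pytest

REGISTRY: dict[str, int] = {}  # hypothetical module-level state that tests mutate


def make_settings() -> dict:
    """Hypothetical (potentially expensive) configuration loader."""
    return {"debug": False, "timeout": 5}


@pytest.fixture(scope="module", name="settings")
def fixture_settings():
    # scope="module": built once and shared by every test in this file.
    # name="settings": tests request `settings`, not `fixture_settings`.
    return make_settings()


@pytest.fixture(autouse=True)
def clean_registry():
    # autouse=True: wraps every test in this module without being requested.
    REGISTRY.clear()
    yield
    REGISTRY.clear()


def test_timeout(settings):
    assert settings["timeout"] == 5


def test_registry_starts_empty():
    assert REGISTRY == {}
    REGISTRY["hits"] = 1  # cleared again before the next test runs
```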

Avoiding Common Pitfalls

Even seasoned developers occasionally encounter issues with fixture implementation. A common oversight is handing over a resource with return when it needs cleanup: without yield (or a finalizer), there is nowhere for teardown code to run, which can leak resources or let state bleed between tests. Another frequent mistake is overusing autouse fixtures, which run for every test that can see them, whether or not the test needs them, and can cause unintended side effects.
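Here is the first pitfall side by side, sketched with a temporary file as the resource; make_temp_file is a hypothetical helper. In real suites pytest's built-in tmp_path fixture manages temporary files for you, so treat this purely as an illustration of return versus yield.

```python
import os
import tempfile

import pytest


def make_temp_file(content: str) -> str:
    """Hypothetical helper: write `content` to a new temp file and return its path."""
    fd, path = tempfile.mkstemp(suffix=".txt")
    with os.fdopen(fd, "w") as f:
        f.write(content)
    return path


# Pitfall: `return` hands the path over, but there is nowhere to put cleanup,
# so every test that uses this fixture leaves a file behind.
@pytest.fixture
def leaky_temp_file():
    return make_temp_file("hello")


# Fix: `yield` the path, then remove the file once the test has finished.
@pytest.fixture
def temp_file():
    path = make_temp_file("hello")
    yield path
    os.remove(path)


def test_reads_content(temp_file):
    with open(temp_file) as f:
        assert f.read() == "hello"
```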

By grasping the core concepts of fixture structure and the @pytest.fixture decorator options, you can construct robust and reliable fixtures. This, in turn, significantly improves the quality and maintainability of your test suite. Understanding these parameters will help you write cleaner, more efficient tests.

Mastering Fixture Scopes for Optimal Performance

The secret to efficient Pytest test suites lies in understanding fixture scopes. Choosing the right scope drastically impacts execution time and resource usage. This section demystifies the five available scope levels and demonstrates how to choose the optimal scope for your testing scenarios.

Understanding Pytest Fixture Scopes

Pytest offers five distinct fixture scopes:

- function: (Default) The fixture is invoked for each test function.

- class: The fixture is invoked once per test class.

- module: The fixture is invoked once per module.

- session: The fixture is invoked once per test session.

- package: The fixture is invoked once per package.

Selecting the correct scope depends on the resource's lifecycle and how your tests use it. For instance, a temporary file needs function scope to keep tests isolated from one another, whereas a database connection might use session scope to avoid the overhead of reconnecting for every test.
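One common way to get both benefits is to pair a session-scoped connection with a function-scoped fixture that rolls back each test's changes. This sketch uses an in-memory SQLite database from the standard library as a stand-in for a real server; the users table and the fixture names are hypothetical.

```python
import sqlite3

import pytest


def create_schema(conn: sqlite3.Connection) -> None:
    # sqlite3 opens implicit transactions only for INSERT/UPDATE/DELETE/REPLACE,
    # so this DDL statement takes effect immediately.
    conn.execute("CREATE TABLE IF NOT EXISTS users (name TEXT)")


@pytest.fixture(scope="session")
def db():
    # Expensive setup paid once for the whole test run.
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    yield conn
    conn.close()


@pytest.fixture
def db_tx(db):
    # Cheap per-test isolation: undo whatever the test wrote.
    yield db
    db.rollback()


def count_users(conn: sqlite3.Connection) -> int:
    return conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


def test_insert_user(db_tx):
    db_tx.execute("INSERT INTO users VALUES ('alice')")
    assert count_users(db_tx) == 1


def test_table_starts_empty(db_tx):
    assert count_users(db_tx) == 0
```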

Impact of Scope on Performance

Fixture scope is directly related to performance. A function-scoped fixture provides isolation but can add overhead if its setup is complex. A session-scoped fixture minimizes setup overhead, but it can create state-management issues if tests modify the shared resource.

Consider an API client. A session-scoped fixture could initialize it once, improving performance across multiple tests. But if tests change the client's state (such as authentication tokens), those changes can affect other tests. Understanding this trade-off between performance and isolation is crucial.
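A sketch of that trade-off: the client is built once per session, and an autouse, function-scoped fixture clears its authentication state after every test so one test's login cannot leak into the next. ApiClient, login, logout, and token are hypothetical names, not a real library.

```python
from typing import Optional

import pytest


class ApiClient:
    """Hypothetical API client whose auth token is mutable shared state."""

    def __init__(self, base_url: str) -> None:
        self.base_url = base_url
        self.token: Optional[str] = None

    def login(self, user: str) -> None:
        self.token = f"token-for-{user}"

    def logout(self) -> None:
        self.token = None


@pytest.fixture(scope="session")
def api_client():
    # Built once: avoids repeating (potentially slow) client setup.
    return ApiClient("https://api.example.test")


@pytest.fixture(autouse=True)
def reset_auth(api_client):
    # Function scope (the default): runs around every test in this module.
    yield
    api_client.logout()  # restore isolation after each test


def test_login_sets_token(api_client):
    api_client.login("alice")
    assert api_client.token == "token-for-alice"


def test_starts_logged_out(api_client):
    assert api_client.token is None
```

The expensive object is shared, while only the cheap, mutable part of its state is reset per test.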

The following table, "Comparison of Pytest Fixture Scopes", summarizes how each scope affects setup timing, teardown, and overall performance.

| Scope | When Setup Occurs | When Teardown Occurs | Best Used For | Performance Impact |
|---|---|---|---|---|
| function | Before each test function | After each test function | Test-specific resources, ensuring isolation | Highest setup overhead |
| class | Before the first test in a class | After the last test in a class | Resources shared within a class | Moderate setup overhead |
| module | Before the first test in a module | After the last test in a module | Resources shared across tests within a module | Low setup overhead |
| session | Before the entire test session | After the entire test session | Resources shared across all tests | Lowest setup overhead |
| package | Before the first test in a package | After the last test in a package | Resources shared across tests within a package | Low setup overhead |

As you can see, selecting the appropriate scope depends on the specific needs of your testing scenario. Balancing performance with isolation is a critical decision.

Proper Teardown with yield and Finalizers

Pytest fixtures use the yield keyword for streamlined teardown. Code after yield runs after a test, even if it fails. This ensures resources are cleaned up, preventing issues such as open files or database connections.

Pytest also supports finalizers, registered through request.addfinalizer(), for more complex teardown logic. A finalizer runs regardless of the test outcome, and because it can be registered right after each resource is acquired, it complements yield when cleanup involves several steps.
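A sketch of request.addfinalizer, part of pytest's FixtureRequest API. Registering the finalizer immediately after the resource is acquired means pytest still releases it if a later setup step raises; when several finalizers are registered, pytest runs them in reverse order of registration. The workspace fixture and the config.ini contents are hypothetical.

```python
import os
import shutil
import tempfile

import pytest


@pytest.fixture
def workspace(request):
    root = tempfile.mkdtemp()
    # Registered before any further setup, so the directory is removed
    # even if writing the config file below fails.
    request.addfinalizer(lambda: shutil.rmtree(root, ignore_errors=True))

    config_path = os.path.join(root, "config.ini")
    with open(config_path, "w") as f:
        f.write("[app]\ndebug = true\n")
    return root


def test_config_written(workspace):
    with open(os.path.join(workspace, "config.ini")) as f:
        assert "debug = true" in f.read()
```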

Fixtures have become widely adopted largely because of their adaptability. The pytest documentation presents them as a major improvement over classic xUnit-style setup and teardown functions, in part because they are modular and reusable. Mastering fixture scopes and employing robust teardown methods builds efficient and reliable test suites, ensuring the quality of your Python code.

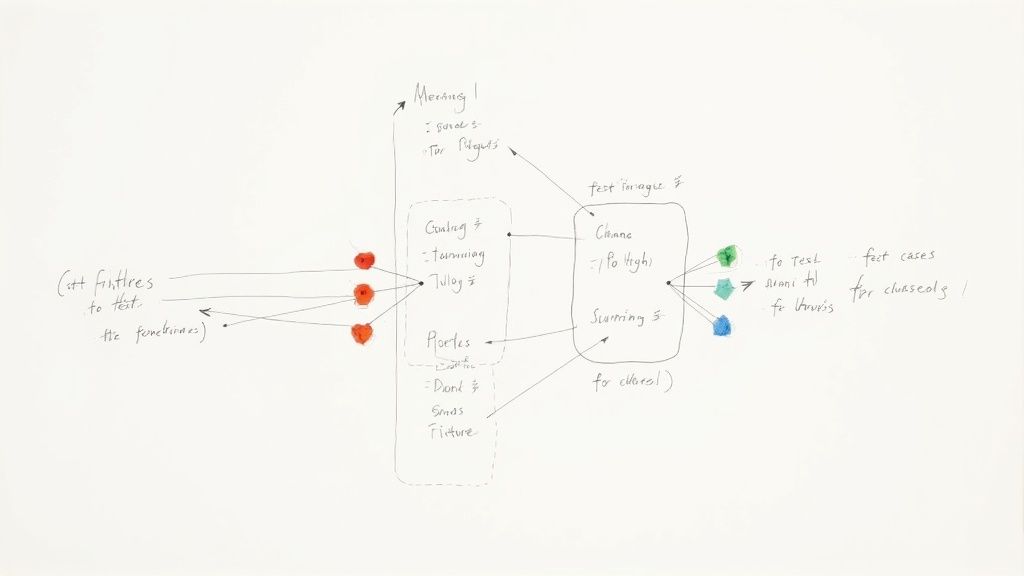

Supercharging Tests With Fixture Parameterization

Pytest fixtures are invaluable for setting up the preconditions for your tests. Their utility increases significantly when combined with parameterization. This technique allows you to execute the same test logic against a range of different inputs, greatly expanding your test coverage without writing excessive amounts of repetitive code. This helps guarantee consistent behavior across various input types.

Basic Parameterization

The simplest method for parameterizing a pytest fixture is using the params argument within the @pytest.fixture decorator. Imagine you have a fixture that establishes a database connection. You could parameterize it to test against different database engines:

```python
import pytest


@pytest.fixture(params=["sqlite", "postgres", "mysql"])
def db_connection(request):
    engine = request.param  # "sqlite", then "postgres", then "mysql"

    # Code to establish database connection based on 'engine'
    # ...
    connection = ...  # placeholder: replace with an engine-specific connection

    yield connection

    # ... teardown code (for example, connection.close())
```

Every test that requests this fixture runs three times, once for each database engine listed in params. request.param provides access to the current parameter's value, which lets you build versatile testing scenarios without redundant fixtures.
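Here is a self-contained variant you can run as-is. Instead of real database engines, the parameters are input strings for a hypothetical parse_bool helper, and the ids argument gives each generated test a readable name in the report (for example test_truthy_values[yes]).

```python
import pytest


def parse_bool(text: str) -> bool:
    """Hypothetical function under test: map common truthy/falsy strings to bool."""
    normalized = text.strip().lower()
    if normalized in {"true", "yes", "1"}:
        return True
    if normalized in {"false", "no", "0"}:
        return False
    raise ValueError(f"not a boolean: {text!r}")


@pytest.fixture(params=["true", "YES", " 1 "], ids=["true", "yes", "one"])
def truthy_text(request):
    # request.param holds the value for the current run of the fixture.
    return request.param


def test_truthy_values(truthy_text):
    # Collected three times, once per entry in params.
    assert parse_bool(truthy_text) is True
```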

Indirect Parameterization

When direct parameterization within the fixture is too limiting, pytest offers indirect parameterization. This method passes parameters from the test function to the fixture:

```python
from dataclasses import dataclass

import pytest


@dataclass
class User:  # minimal stand-in for your real user model
    name: str


@pytest.fixture
def user(request):
    data = request.param  # the dict supplied by @pytest.mark.parametrize
    # Code to create a user based on 'data'
    return User(**data)


@pytest.mark.parametrize(
    "user",
    [{"name": "Alice"}, {"name": "Bob"}],
    indirect=True,  # route each value through the `user` fixture
)
def test_user_creation(user):
    assert user.name in {"Alice", "Bob"}
```

In this example, the user fixture receives its parameters indirectly from the @pytest.mark.parametrize decorator. This is particularly useful when the parameters require complex setup. It decouples the fixture from the test data, enabling more dynamic configurations.
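indirect also accepts a list of argument names, so some values pass through fixtures while others reach the test unchanged. In this sketch only user is routed through its fixture; expected goes straight to the test. User and greet are hypothetical.

```python
from dataclasses import dataclass

import pytest


@dataclass
class User:
    name: str


def greet(user: User) -> str:
    """Hypothetical function under test."""
    return f"Hello, {user.name}!"


@pytest.fixture
def user(request):
    # Receives only the "user" values, because of indirect=["user"] below.
    return User(**request.param)


@pytest.mark.parametrize(
    ("user", "expected"),
    [({"name": "Alice"}, "Hello, Alice!"), ({"name": "Bob"}, "Hello, Bob!")],
    indirect=["user"],
)
def test_greet(user, expected):
    assert greet(user) == expected
```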

Dynamic Parameter Generation

In some cases, you may need to generate parameters dynamically based on runtime conditions. This can be accomplished using factory functions or external libraries like factory_boy:

```python
from dataclasses import dataclass

import pytest
from factory import Factory, Sequence  # provided by the factory_boy package


@dataclass
class User:  # stand-in model; use your actual model class
    username: str


class UserFactory(Factory):
    class Meta:
        model = User  # the class factory_boy instantiates

    # Each generated user gets a unique numbered username (user0, user1, ...)
    username = Sequence(lambda n: f"user{n}")


@pytest.fixture
def dynamic_user():
    return UserFactory()


def test_user_creation(dynamic_user):
    assert dynamic_user.username.startswith("user")
```

This approach offers significant flexibility, especially for complex test scenarios. It generates data tailored to specific tests without manual intervention. Dynamic parameterization allows you to efficiently create a wide array of test cases, increasing test coverage.
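The factory above varies the data inside a single test. When the number of generated test cases should itself depend on runtime information, pytest's pytest_generate_tests hook with metafunc.parametrize is the standard tool. This is a different technique from factory_boy, shown as a sketch; the USER_COUNT environment variable and the build_usernames helper are hypothetical.

```python
import os


def build_usernames(count: int) -> list[str]:
    """Hypothetical data source: produce `count` usernames at collection time."""
    return [f"user{n}" for n in range(count)]


def pytest_generate_tests(metafunc):
    # Called by pytest for each test function collected from this module
    # (or from every module, if the hook lives in conftest.py).
    if "username" in metafunc.fixturenames:
        count = int(os.environ.get("USER_COUNT", "3"))
        metafunc.parametrize("username", build_usernames(count))


def test_username_format(username):
    assert username.startswith("user")
```

Running with USER_COUNT=10 would collect ten copies of test_username_format instead of three.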

By mastering fixture parameterization, you can develop robust pytest test suites that are efficient, concise, and capable of handling diverse scenarios. This ultimately increases confidence in your code's stability and robustness. This approach also reduces code duplication and improves the maintainability of your test suite.

Building Complex Test Environments With Fixture Chains

The true power of pytest fixtures shines when you combine them. This approach, known as fixture chaining, allows developers to build intricate test environments efficiently. By creating modular, reusable fixture components, you can use pytest's dependency injection to assemble the exact environment your tests need. This section explains how to design robust, maintainable test setups using fixture chains.

Creating Modular Fixture Components

Fixture chaining involves defining fixtures that depend on other fixtures. Think of it like building with LEGO bricks: individual bricks (fixtures) combine to create complex structures (test environments). For example, imagine testing a web application:

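```python
import sqlite3

import pytest


class ApiClient:
    """Minimal stand-in for your application's API client."""

    def __init__(self, connection):
        self.connection = connection


@pytest.fixture
def database_connection():
    # Code to establish database connection
    connection = sqlite3.connect(":memory:")
    yield connection
    # Teardown code
    connection.close()


@pytest.fixture
def api_client(database_connection):
    # Initialize API client using the database connection
    client = ApiClient(database_connection)
    return client
```

Here, the api_client fixture depends on the database_connection fixture. Pytest automatically resolves this dependency, ensuring the database connection is established before the API client is initialized. This modular approach improves code organization and reduces redundancy.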
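A consequence of this dependency injection worth knowing: within one test, each fixture is created once and the same object is handed to everything that requests it. In this sketch the test asks for both fixtures and receives the very connection the client was built with. The SimpleNamespace client is a hypothetical stand-in, and sqlite3 stands in for a real database.

```python
import sqlite3
from types import SimpleNamespace

import pytest


@pytest.fixture
def database_connection():
    connection = sqlite3.connect(":memory:")
    yield connection
    connection.close()


@pytest.fixture
def api_client(database_connection):
    # SimpleNamespace stands in for a real API client object.
    return SimpleNamespace(connection=database_connection)


def test_client_shares_connection(api_client, database_connection):
    # pytest caches database_connection for this test, so both names
    # refer to the same object rather than two separate connections.
    assert api_client.connection is database_connection
```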

Mapping Fixtures To Application Architecture

Fixture hierarchies can mirror your application's architecture. This improves test clarity and maintainability by directly mapping the test setup to the system under test. Imagine testing an application that interacts with an external service:

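```python
from unittest import mock

import pytest


@pytest.fixture
def external_service_mock():
    # Mock the external service
    service_mock = mock.Mock()
    yield service_mock
    # Teardown (for example, clear the calls recorded during the test)
    service_mock.reset_mock()


@pytest.fixture
def application_instance(database_connection, external_service_mock):
    # `database_connection` is the fixture defined earlier;
    # `Application` is your application's class under test.
    return Application(database_connection, external_service_mock)
```

Structuring fixtures this way encapsulates dependencies, making it clear how different components interact within your application. This makes tests easier to read, debug, and maintain.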
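Because the mock is injected rather than patched globally, a test can both program its responses and verify how the application used it. A sketch with unittest.mock from the standard library; Application, get_rate, and convert are hypothetical names for the system under test and its external service.

```python
from unittest import mock

import pytest


class Application:
    """Hypothetical system under test that depends on an external rate service."""

    def __init__(self, rate_service) -> None:
        self.rate_service = rate_service

    def convert(self, amount: float, currency: str) -> float:
        return amount * self.rate_service.get_rate(currency)


@pytest.fixture
def external_service_mock():
    service = mock.Mock()
    service.get_rate.return_value = 2.0  # canned response instead of a network call
    return service


@pytest.fixture
def application_instance(external_service_mock):
    return Application(external_service_mock)


def test_convert_uses_service(application_instance, external_service_mock):
    assert application_instance.convert(10, "EUR") == 20.0
    external_service_mock.get_rate.assert_called_once_with("EUR")
```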

Avoiding Dependency Pitfalls

Fixture chaining is powerful, but it can be challenging. Circular dependencies, where Fixture A depends on Fixture B, and Fixture B depends on Fixture A, are a common problem. Pytest will detect and report these, but debugging them can be tricky.

Another pitfall is creating excessively long fixture chains. A few levels of dependency are usually manageable, but deeply nested fixture chains can obscure test logic and make the overall environment hard to understand.

```python
import pytest


@pytest.fixture
def component_a():
    pass  # ...


@pytest.fixture
def component_b(component_a):
    pass  # ...


@pytest.fixture
def component_c(component_b):
    pass  # ...

# And so on...
```

Long chains like this often signal tightly coupled components and might suggest a need to refactor your application or testing approach. Keep fixture dependencies minimal and focused. If a fixture has many dependencies, consider refactoring it into smaller, more manageable units. This simplifies the structure and improves the testability of individual components. Choosing the correct scope for each fixture significantly impacts performance and clarity. By understanding how fixture scope interacts with fixture chaining, you can create tests that are both efficient and easy to understand.
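One concrete interaction between scope and chaining: a fixture may only request fixtures with the same or a broader scope. In this sketch a function-scoped fixture depends on a session-scoped one, which is allowed; the commented-out reverse direction would make pytest report a ScopeMismatch error for any test that requests it. The fixture names and the make_config helper are hypothetical.

```python
import pytest


def make_config() -> dict:
    """Hypothetical configuration shared by the whole run."""
    return {"db_url": "sqlite:///:memory:"}


@pytest.fixture(scope="session")
def config():
    return make_config()


@pytest.fixture  # function scope may depend on session scope: allowed
def request_context(config):
    return {"db_url": config["db_url"], "user": None}


# Not allowed: a session-scoped fixture cannot depend on a function-scoped one.
# pytest reports a ScopeMismatch error for any test that requests it.
#
# @pytest.fixture(scope="session")
# def broken(request_context):
#     ...


def test_context_uses_config(request_context):
    assert request_context["db_url"].startswith("sqlite")
```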

Pytest Fixture Patterns That Scale With Your Project

As your project expands, managing pytest fixtures can become increasingly intricate. Well-structured fixtures are fundamental for creating maintainable tests. This section explores advanced techniques gathered from experience across numerous projects, encompassing naming conventions, organizational strategies, and best practices for documentation. These strategies will empower you to build a resilient and adaptable test suite.

Effective Naming Conventions for Clarity

Clear fixture names are vital for instantly understanding their function. A consistent naming system enables quick identification of the resource a fixture offers. Consider using prefixes like mock_, stub_, or fixture_ to differentiate fixtures from standard functions. For instance, fixture_database_connection clearly signifies a fixture providing a database connection. Furthermore, suffixes such as _factory can denote fixtures that create objects, like user_factory. Well-named fixtures improve readability and promote self-documenting test code.
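A _factory suffix usually marks the "factories as fixtures" pattern: the fixture returns a function, so one test can create as many objects as it needs while the fixture still owns the cleanup. A sketch; User and build_user_factory are hypothetical.

```python
import itertools
from dataclasses import dataclass
from typing import Optional

import pytest


@dataclass
class User:
    username: str
    is_admin: bool = False


def build_user_factory(created: list):
    """Return a callable that creates users and records them for cleanup."""
    counter = itertools.count()

    def make_user(username: Optional[str] = None, is_admin: bool = False) -> User:
        user = User(username or f"user{next(counter)}", is_admin)
        created.append(user)
        return user

    return make_user


@pytest.fixture
def user_factory():
    created: list = []
    yield build_user_factory(created)
    # Teardown: delete each user in `created` from your datastore here.
    created.clear()


def test_admin_and_regular_user(user_factory):
    admin = user_factory(is_admin=True)
    regular = user_factory()
    assert admin.is_admin and not regular.is_admin
    assert admin.username != regular.username
```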

Organizing Fixtures for Maintainability

As your test suite grows, organizing fixtures is essential to prevent code sprawl. Consider grouping fixtures by functionality or module into separate files within a dedicated fixtures directory. This method keeps related fixtures together, simplifying their location. Employing a conftest.py file within each test directory to define area-specific fixtures is also beneficial. However, be cautious of excessive conftest.py nesting, which can complicate tracing fixture origins.

Documenting Fixtures for Collaboration

Thorough fixture documentation is key for effective team collaboration. Utilize docstrings to clarify each fixture's purpose, parameters, scope, and any return values. This practice enables new team members to quickly grasp how to use existing fixtures. Tools like Sphinx can automatically create documentation from these docstrings, further enhancing maintainability. Well-documented fixtures facilitate understanding of their roles and dependencies.
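A sketch of a documented fixture. Running pytest --fixtures lists every available fixture together with its docstring, so this text is also what teammates see from the command line. The API_BASE_URL environment variable and the default URL are hypothetical.

```python
import os

import pytest


@pytest.fixture(scope="session")
def api_base_url() -> str:
    """Base URL for the API under test.

    Scope: session (resolved once per test run).
    Returns: the value of the API_BASE_URL environment variable, or
    http://localhost:8000 when it is not set.
    """
    return os.environ.get("API_BASE_URL", "http://localhost:8000")


def test_base_url_has_scheme(api_base_url):
    assert api_base_url.startswith(("http://", "https://"))
```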

Managing Stateful Fixtures in Parallel Execution

Stateful fixtures, which alter their internal state during testing, can cause problems in parallel execution. To avoid conflicts between concurrent tests, use scope="function". This guarantees each test receives a new fixture instance. Alternatively, build a reset mechanism into the fixture so its state is restored after each test. Either approach makes parallel execution safer.
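If you run tests in parallel with the pytest-xdist plugin (an assumption; the text above does not name a tool), each worker is a separate process, so a session-scoped fixture runs once per worker. Its worker_id fixture ("gw0", "gw1", ..., or "master" when tests are not being distributed) lets each worker claim its own copy of shared external state. The database_name_for helper and its naming scheme are hypothetical.

```python
import pytest


def database_name_for(worker_id: str, base: str = "test_db") -> str:
    """Hypothetical naming scheme: one database per xdist worker."""
    # "master" means tests are not being distributed across workers.
    return base if worker_id == "master" else f"{base}_{worker_id}"


@pytest.fixture(scope="session")
def database_name(worker_id):  # `worker_id` is provided by pytest-xdist
    # Each worker process runs this once, so workers never share a database.
    return database_name_for(worker_id)


@pytest.fixture
def shopping_cart():
    # Function scope: stateful objects start fresh for every test.
    return []


def test_cart_starts_empty(shopping_cart):
    assert shopping_cart == []
    shopping_cart.append("apple")
```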

Handling External Dependencies Reliably

Fixtures can interact with external resources, such as databases or APIs. These dependencies might be unavailable during testing. Implement fallback systems within your fixtures to address such situations. For example, a fixture could employ a mock database connection if the actual database is offline. This strengthens tests and allows them to run even with unavailable external systems.
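A sketch of such a fallback, assuming a database reachable over TCP. open_database only opens a socket as a placeholder for your real driver call, and DB_HOST, DB_PORT, and connect_with_fallback are hypothetical. If a fake would give false confidence, calling pytest.skip("database offline") inside the fixture is an alternative that skips the dependent tests instead.

```python
import socket
from unittest import mock

import pytest

DB_HOST, DB_PORT = "localhost", 5432  # hypothetical database location


def open_database():
    """Placeholder for a real driver call; here we only open a TCP socket."""
    return socket.create_connection((DB_HOST, DB_PORT), timeout=0.5)


def connect_with_fallback(connect, make_fallback):
    """Return (connection, is_real), using the fallback if `connect` fails."""
    try:
        return connect(), True
    except OSError:  # refused, timed out, unknown host, ...
        return make_fallback(), False


@pytest.fixture
def database():
    conn, is_real = connect_with_fallback(
        open_database, lambda: mock.MagicMock(name="offline_database")
    )
    if not is_real:
        print("database offline: using a mock connection")
    yield conn
    conn.close()  # real sockets close; the MagicMock simply records the call
```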

Optimizing Fixture Performance

As your test suite scales, fixture performance becomes increasingly critical. Choose the broadest scope that still keeps your tests isolated to minimize setup overhead. If a resource is required by every test in a module, use scope="module" rather than scope="function". Moreover, optimize fixture code for efficiency by avoiding redundant calculations or I/O operations during setup and teardown.

These strategies will aid you in effectively structuring pytest fixtures, improving the maintainability, scalability, and reliability of your test suite. Adhering to these guidelines allows you to build a robust testing environment that supports your project's growth.
