Essential Pytest Example: Quick & Efficient Testing

Your First Pytest Example: From Installation to Execution

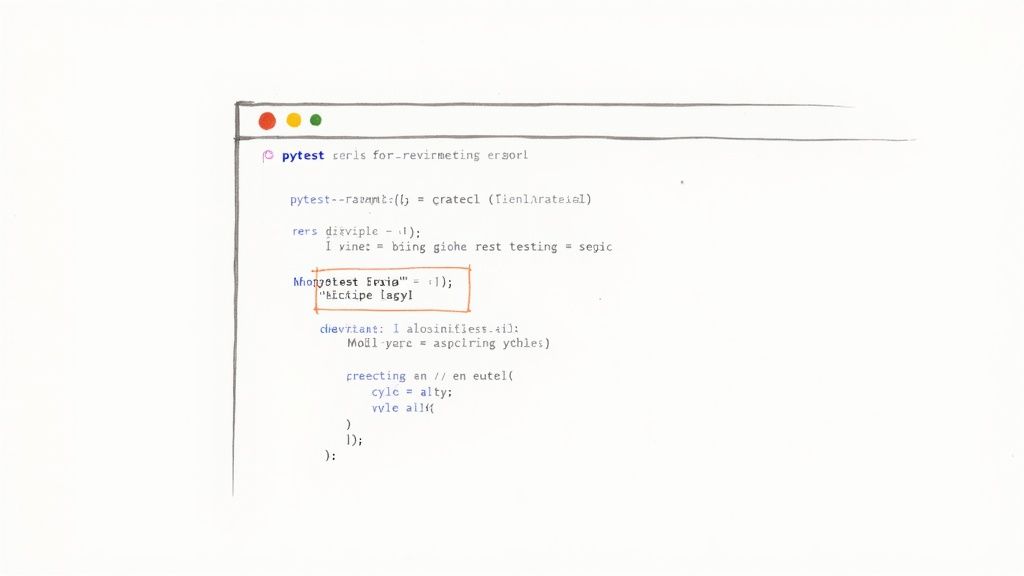

Getting started with pytest is remarkably straightforward. Begin by setting up your environment. This usually involves a quick installation using pip install pytest.

Once installed, verify everything is working correctly with pytest --version. This command confirms pytest is ready to go and displays the installed version. Now you’re all set to craft your first pytest example.

Writing Your First Test

A basic pytest example often involves testing a simple function. Let’s imagine a function called add that, well, adds two numbers. You can create a corresponding test function to check its behavior. By convention, this test function starts with test_:

```python
def add(x, y):
    return x + y


def test_add():
    assert add(2, 3) == 5
```

This straightforward pytest example demonstrates the essence of pytest: using the assert statement for validation.

When you run pytest, it automatically discovers test files (by default, those named test_*.py or *_test.py) and runs every function in them whose name starts with test_. A passing assertion means the test passes. If an assertion fails, pytest reports the values involved, helping you quickly identify the source of the error.

Executing Your Test and Interpreting Results

Running your tests is as simple as typing pytest in a terminal from your project directory. Pytest's output is designed for clarity: each passing test is shown as a period (.), followed by a summary of the run.

A failing test is marked with an F and accompanied by details of the failed assertion. This clear feedback loop makes errors quick to find and fix.

Wider adoption of testing frameworks like pytest correlates strongly with improved software quality and faster development cycles. Over 7,387 companies in 81 countries use pytest, a testament to its global reach within the Python ecosystem and to its ability to improve testing processes and overall development efficiency.
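To see these markers without setting up a project, you can also drive pytest from Python with pytest.main, which accepts the same arguments as the command line and returns an exit code. The sketch below writes a deliberately broken test into a temporary directory; the file name test_add_demo.py and the helper run_failing_example are illustrative, not part of pytest.

```python
import pathlib
import sys
import tempfile

import pytest

# A deliberately buggy add() so the test fails and pytest reports an F
TEST_SOURCE = """
def add(x, y):
    return x - y  # bug: should be x + y


def test_add():
    assert add(2, 3) == 5
"""


def run_failing_example() -> int:
    """Write the test to a temporary file, run pytest on it, return the exit code."""
    with tempfile.TemporaryDirectory() as tmp:
        test_file = pathlib.Path(tmp) / "test_add_demo.py"
        test_file.write_text(TEST_SOURCE)
        # -q: compact output; -p no:cacheprovider: don't create a .pytest_cache dir
        exit_code = pytest.main(["-q", "-p", "no:cacheprovider", str(test_file)])
    # Forget the imported demo module so the helper can be called again
    sys.modules.pop("test_add_demo", None)
    return int(exit_code)
```

Calling run_failing_example() prints the F marker along with the failed assertion and the value add(2, 3) actually returned, then returns 1, pytest's exit code for "tests ran and at least one failed".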

Basic Pytest Commands

Beyond basic execution, pytest provides a rich set of commands for controlling tests and generating reports. These commands offer significant flexibility, allowing you to focus on specific tests or adjust the level of detail in the output.

To help you get started, here's a comparison of essential pytest commands with their equivalents in Python's built-in unittest framework.

Basic Pytest Commands Comparison:

| Command | Pytest Syntax | Unittest Equivalent | Description |
|---|---|---|---|
| Run all tests | pytest | python -m unittest | Discovers and runs all tests in the current directory and its subdirectories. |
| Run specific file | pytest test_file.py | python -m unittest test_file.py | Runs only the tests in the specified file. |
| Run specific test | pytest test_file.py::test_specific_function | python -m unittest test_file.TestClass.test_specific_function | Runs only the specified test (unittest tests are methods of a TestCase class). |
| Verbose output | pytest -v | python -m unittest -v | Shows the name and result of each test. |
| Help | pytest --help | python -m unittest --help | Lists the available command-line options and their usage. |

This table provides a quick reference for common testing scenarios. These commands give you a strong foundation for running and managing your tests, and the control they offer lets you tailor your approach to the size and requirements of each project. From running the whole suite at once to zeroing in on a single function, pytest delivers the feedback and control needed to build and maintain a robust, high-quality codebase.
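The selectors in the table depend on how tests are organized: pytest can address a plain module-level function, while unittest expects test methods on a unittest.TestCase subclass. A minimal sketch of the same check written both ways, assuming it is saved as test_file.py (the names mirror the table and are placeholders):

```python
import unittest


def add(x, y):
    return x + y


# pytest style: a plain function, selected with
#   pytest test_file.py::test_specific_function
def test_specific_function():
    assert add(2, 3) == 5


# unittest style: a method on a TestCase subclass, selected with
#   python -m unittest test_file.TestClass.test_specific_function
class TestClass(unittest.TestCase):
    def test_specific_function(self):
        self.assertEqual(add(2, 3), 5)
```

pytest collects both styles from this file, so pytest test_file.py::TestClass::test_specific_function selects the unittest method as well.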

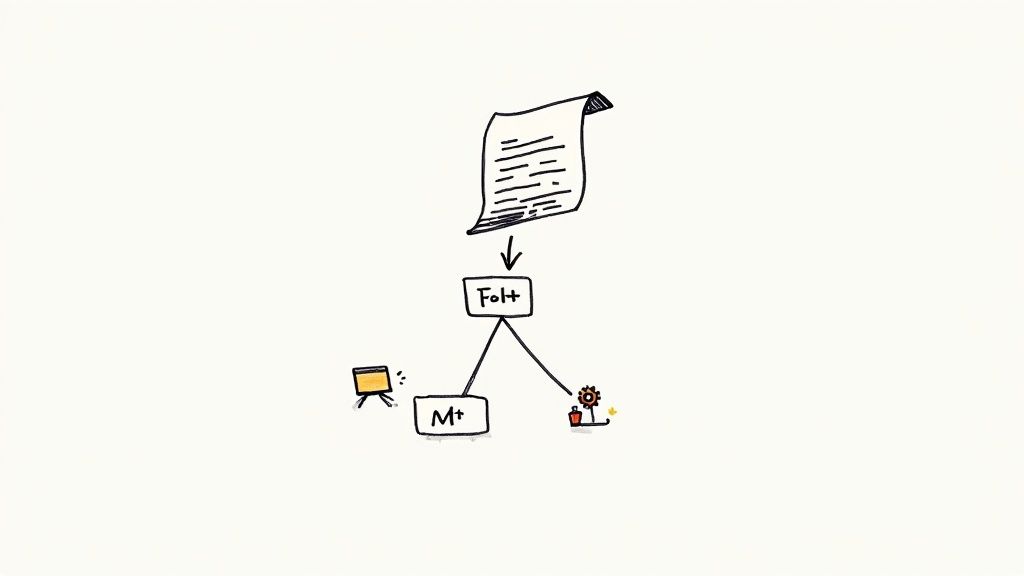

Fixture Magic: Transforming How You Manage Test States

Fixtures are a core part of pytest, offering a robust way to manage setup and teardown for your tests. Think of them as reusable building blocks that a test requests simply by naming them as parameters, giving you a clean, organized way to handle resources, state, and configuration.
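A fixture is an ordinary function decorated with @pytest.fixture; code before yield runs as setup and code after it runs as teardown. A minimal sketch, where the resource dictionary is a hypothetical stand-in for something like a connection or a temporary service:

```python
import pytest


@pytest.fixture
def resource():
    # Setup: runs before each test that lists "resource" as a parameter
    state = {"connected": True, "items": []}
    yield state  # the yielded value is what the test receives
    # Teardown: runs after the test finishes, whether it passed or failed
    state["connected"] = False
    state["items"].clear()


def test_append(resource):
    resource["items"].append("x")
    assert resource["items"] == ["x"]
    assert resource["connected"]
```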

Understanding Fixture Scopes and Their Impact

One of the biggest advantages of fixtures is their ability to efficiently manage resources using scopes. A fixture's scope defines how often it's called during your testing session. This has a significant impact on both test performance and resource usage. Common scopes include function, class, module, and session.

A function-scoped fixture runs for each test function, while a session-scoped fixture runs only once per session. Choosing the right scope is crucial for optimizing your test execution time. For instance, initializing a database connection once per session, rather than for every single test, can drastically speed up your test suite.

Implementing Parameterized Fixtures for Diverse Test Scenarios

Parameterized fixtures are another powerful feature of pytest, letting you run the same test logic with different input values. This is invaluable for testing functions or modules against various scenarios, edge cases, and input combinations. You create these fixtures with the @pytest.fixture(params=[...]) decorator. Pytest calls the fixture once for each value in params, exposes the current value as request.param, and runs every test that uses the fixture once per value.

Here's a quick example showing how parameterized fixtures can help you write concise and easily maintainable tests covering a broad spectrum of input values:

```python
import pytest


@pytest.fixture(params=[1, 2, 3])
def number(request):
    return request.param


def test_positive_number(number):
    assert number > 0
```

Pytest runs test_positive_number three times, once per parameter, and reports each run separately (as test_positive_number[1], test_positive_number[2], and test_positive_number[3]). This dramatically reduces code duplication and makes maintenance much simpler.

Real-World Patterns for Fixture Usage

Fixtures are highly versatile and have a wide range of real-world applications: managing database connections, setting up API clients, initializing complex objects, or controlling application state. This consistent, reusable approach improves the maintainability of your tests. It also matters more as Python spreads across domains: in 2022, Python had a 46% usage rate in web development and 54% in data analysis, which highlights the growing importance of testing tools like pytest for code quality and reliability.
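As one example of controlling application state, a fixture can prepare a configuration file in pytest's built-in tmp_path directory, so every test starts from a known setup and never touches real files. The settings file and the load_settings function are hypothetical:

```python
import json

import pytest


def load_settings(path):
    # Hypothetical application code under test
    return json.loads(path.read_text())


@pytest.fixture
def config_file(tmp_path):
    # tmp_path is a built-in fixture: a fresh pathlib.Path directory per test
    path = tmp_path / "settings.json"
    path.write_text(json.dumps({"debug": True, "retries": 3}))
    return path


def test_load_settings(config_file):
    settings = load_settings(config_file)
    assert settings == {"debug": True, "retries": 3}
```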

Organizing and Managing Fixtures in Growing Codebases

As your project scales, organizing your fixtures becomes crucial for keeping test code clean and manageable. Consider grouping shared fixtures in dedicated files (pytest automatically loads fixtures defined in conftest.py for the tests in that directory and below), letting fixtures request other fixtures to express the dependencies between them, and adopting naming conventions that reflect each fixture's purpose. A well-structured fixture setup makes tests easier to maintain and eases team collaboration, leaving developers free to concentrate on writing effective tests that protect code quality.
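A sketch of that layout, with a hypothetical api_client fixture that depends on a base_url fixture. The two files are shown together in one listing, separated by comments:

```python
# --- conftest.py (hypothetical) ---
# Fixtures here are visible to tests in this directory and below, with no import.
import pytest


@pytest.fixture
def base_url():
    return "https://api.example.com"  # placeholder URL


@pytest.fixture
def api_client(base_url):
    # Requesting base_url as a parameter makes pytest build it first:
    # fixtures declare their dependencies the same way tests do.
    return {"base_url": base_url, "headers": {"Accept": "application/json"}}


# --- test_client.py (hypothetical) ---
def test_client_uses_base_url(api_client):
    assert api_client["base_url"].startswith("https://")
    assert api_client["headers"]["Accept"] == "application/json"
```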

Parameterized Testing: Write Once, Test Many Scenarios

Building upon the concept of fixtures, let's delve into parameterized testing within Pytest. This powerful technique lets you execute a single test function with varying inputs, minimizing redundant code and boosting test coverage. The @pytest.mark.parametrize decorator is central to this process.

Introduction to @pytest.mark.parametrize

The @pytest.mark.parametrize decorator is the cornerstone of efficient parameterized tests. It accepts two primary arguments: a comma-separated string of parameter names and a list of values, where each entry is a tuple when there are several names. Pytest generates one test case per entry. These values can range from basic data types to complex objects, accommodating diverse testing scenarios.

You can define various inputs, including integers, strings, or combinations thereof, to thoroughly examine your function's behavior. This systematic approach simplifies edge case testing and ensures comprehensive validation.
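A small illustration of the argument format: the first argument names the parameters, and each tuple in the list supplies one test case. Python's built-in int() serves as the function under test here, and the cases mix ordinary values with edge cases:

```python
import pytest


@pytest.mark.parametrize("raw, expected", [
    ("42", 42),     # plain integer string
    ("  7 ", 7),    # int() ignores surrounding whitespace
    ("-3", -3),     # negative numbers
    ("0", 0),       # zero as an edge case
])
def test_int_parsing(raw, expected):
    assert int(raw) == expected
```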

Practical Pytest Example of Parameterization

Imagine a function designed to validate email addresses. Parameterized testing allows you to evaluate various valid and invalid email formats without creating individual test functions for each.

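```python
import re

import pytest

EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_email(email):
    # Hypothetical, deliberately simple validator used only for this example
    return EMAIL_PATTERN.match(email) is not None


@pytest.mark.parametrize("email, expected_result", [
    ("test@example.com", True),
    ("invalid_email", False),
    ("another.test@sub.domain.com", True),
])
def test_validate_email(email, expected_result):
    assert validate_email(email) == expected_result
```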

This example demonstrates how @pytest.mark.parametrize efficiently tests three distinct email formats within a single function, considerably reducing code volume.

Advanced Parameterization Techniques

Parameterized testing extends beyond basic applications. You can combine multiple parameters, apply parameters to fixtures, and construct complex test matrices for intricate scenarios.

Consider a function influenced by both an input string and a boolean flag. Stacking two @pytest.mark.parametrize decorators, one per argument, makes pytest generate every combination of their values for comprehensive coverage. Furthermore, combining parameterization with fixtures creates reusable test setups for different scenarios, enhancing test efficiency and maintainability. For example, parameterizing a fixture responsible for database connections lets the same tests run against several database configurations.
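Both ideas fit in a short sketch. Here format_name is hypothetical, and two SQLite setups (in-memory and on-disk, via the standard sqlite3 module) stand in for different database configurations:

```python
import sqlite3

import pytest


def format_name(name, shout):
    # Hypothetical function whose output depends on a string and a boolean flag
    return name.upper() if shout else name


# Two stacked decorators: 3 names x 2 flags = 6 generated test cases
@pytest.mark.parametrize("shout", [True, False])
@pytest.mark.parametrize("name", ["ada", "Grace", ""])
def test_format_name(name, shout):
    result = format_name(name, shout)
    assert result.lower() == name.lower()
    if shout:
        assert result == result.upper()


@pytest.fixture(params=["memory", "file"])
def db(request, tmp_path):
    # One connection per configuration: every test using db runs twice
    target = ":memory:" if request.param == "memory" else str(tmp_path / "test.db")
    connection = sqlite3.connect(target)
    yield connection
    connection.close()


def test_roundtrip(db):
    db.execute("CREATE TABLE items (x INTEGER)")
    db.execute("INSERT INTO items VALUES (1)")
    assert db.execute("SELECT x FROM items").fetchall() == [(1,)]
```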

Parameterization in Real-World Scenarios

This versatile mechanism excels in API endpoint testing, data validation routines, and edge case verification. Think of a user authentication API returning different response codes based on the provided credentials.

Parameterization allows you to define a list of credentials and expected responses, then verify the API's behavior under each condition. This strengthens your tests and simplifies maintenance. That scalability matters as Python keeps growing: the Python market is projected to expand from USD 3.6 million in 2021 to USD 100.6 million by 2030, a CAGR of 44.8%. This growth fuels demand for robust testing tools like pytest, making proficiency in features such as parameterized testing increasingly valuable.
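The credential matrix described above can be sketched with a hypothetical authenticate function that returns HTTP-style status codes in place of a real endpoint; the credential store and status codes are illustrative assumptions:

```python
import pytest

VALID_USERS = {"alice": "s3cret"}  # hypothetical credential store


def authenticate(username, password):
    # Hypothetical stand-in for an auth endpoint: returns an HTTP-style status code
    if not username or not password:
        return 400  # bad request: missing credentials
    if VALID_USERS.get(username) == password:
        return 200  # success
    return 401  # unauthorized


@pytest.mark.parametrize("username, password, expected_status", [
    ("alice", "s3cret", 200),
    ("alice", "wrong", 401),
    ("mallory", "s3cret", 401),
    ("", "s3cret", 400),
])
def test_authenticate(username, password, expected_status):
    assert authenticate(username, password) == expected_status
```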

Benefits of Parameterized Testing

Parameterized testing reduces code, improves test coverage, and streamlines maintenance. This leads to more efficient testing and higher-quality software. As your codebase evolves, parameterized tests adapt readily to new scenarios, ensuring continued comprehensive coverage. These advantages make parameterized testing indispensable for any development team striving to build robust and reliable applications.

Mock and Patch: Isolate Your Code for Reliable Testing

Writing reliable tests often means isolating the code under scrutiny from its external dependencies. This is where mocking and patching come into play as essential tools within your pytest toolkit. These techniques let you simulate the behavior of external systems, ensuring your tests focus solely on your code's logic. This is particularly crucial when working with databases, APIs, and other external services. Let's explore how pytest's built-in monkeypatch fixture and the standard library's unittest.mock help you achieve this isolation.

Mocking With unittest.mock

The unittest.mock library provides a powerful mechanism for creating mock objects. A mock object stands in for a real object, letting you control its behavior and verify interactions. This simplifies testing complex scenarios like network failures or unusual edge cases. Consider a pytest example that tests a function making an API call:

```python
from unittest.mock import patch

import requests


def fetch_data(url):
    response = requests.get(url)
    return response.json()


@patch('requests.get')
def test_fetch_data(mock_get):
    mock_response = mock_get.return_value
    mock_response.json.return_value = {'key': 'value'}
    data = fetch_data('https://example.com/api')
    assert data == {'key': 'value'}
```

This pytest example demonstrates how patch replaces the actual requests.get with a mock. You can then define the mock's behavior, ensuring the test receives the expected data without making a real API call.
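Besides supplying return values, a mock records how it was called, so a test can verify the interaction itself. A sketch using unittest.mock.Mock, with a hypothetical notify function whose client is passed in as a parameter:

```python
from unittest.mock import Mock


def notify(client, user_id):
    # Hypothetical code under test: sends one message through an injected client
    client.send(f"user:{user_id}", "Your report is ready")
    return True


def test_notify_sends_one_message():
    client = Mock()
    assert notify(client, 42) is True
    # Verify the interaction, not just the return value
    client.send.assert_called_once_with("user:42", "Your report is ready")
```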

Patching With monkeypatch

The monkeypatch fixture, built into pytest, offers a handy way to temporarily modify attributes, dictionaries, or environment variables during testing. This is particularly useful for simulating specific conditions or isolating parts of your code. For example, consider testing your code's behavior when a specific environment variable is set:

```python
import os


def get_config():
    return os.environ.get("CONFIG_VALUE")


def test_get_config(monkeypatch):
    monkeypatch.setenv("CONFIG_VALUE", "test_value")
    config = get_config()
    assert config == "test_value"
```

Here, monkeypatch.setenv temporarily sets the environment variable, ensuring the test remains independent of the actual environment setup.
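monkeypatch can also remove variables and replace attributes. A sketch that reuses get_config and adds a hypothetical time-dependent elapsed_label function; every change is undone automatically when the test ends:

```python
import os
import time


def get_config():
    return os.environ.get("CONFIG_VALUE")


def elapsed_label(start):
    # Hypothetical code under test that depends on the current time
    return f"{time.time() - start:.0f}s"


def test_get_config_when_unset(monkeypatch):
    # raising=False: no error if the variable is not set to begin with
    monkeypatch.delenv("CONFIG_VALUE", raising=False)
    assert get_config() is None


def test_elapsed_label(monkeypatch):
    # Replace time.time with a fixed clock for this test only
    monkeypatch.setattr(time, "time", lambda: 100.0)
    assert elapsed_label(40.0) == "60s"
```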

Practical Applications of Mocking and Patching

Mocking and patching are versatile techniques in a pytest suite. They are particularly helpful when dealing with:

- API Responses: Simulate various API responses, including both successes and failures, to thoroughly test your code's handling of different outcomes.

- Database Connections: Replace real database interactions with mocks, avoiding test dependencies on a live database and guaranteeing consistent test data.

- File Operations: Mock file system calls to prevent tests from affecting your actual file system, improving test reliability and repeatability.

- Third-Party Services: Isolate your code from external services by mocking their behavior, ensuring your tests run quickly and reliably, regardless of external service downtime or unexpected changes.
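For the file-operations case, the standard library's mock_open builds a fake file handle, and patching builtins.open routes the code's open() call to it. read_first_line is a hypothetical function under test:

```python
from unittest.mock import mock_open, patch


def read_first_line(path):
    # Hypothetical code under test that touches the file system
    with open(path) as handle:
        return handle.readline().strip()


def test_read_first_line_without_disk():
    fake = mock_open(read_data="header\nrow1\n")
    # Any open() call inside the block returns the fake handle instead of a real file
    with patch("builtins.open", fake):
        assert read_first_line("any/path.csv") == "header"
    fake.assert_called_once_with("any/path.csv")
```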

Effective Techniques for Mocking Edge Cases and Failures

Mocking and patching shine when simulating edge cases and failures that are difficult or impossible to reproduce in a real environment. This allows you to test scenarios like:

- Network Failures: Simulate connection timeouts or other network problems to verify the resilience of your code's error handling.

- Exception Scenarios: Test how your code gracefully handles exceptions by configuring mock objects to raise specific exceptions.
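Both scenarios rely on a mock's side_effect: when it is set to an exception, calling the mock raises it. A sketch with a hypothetical fetch_with_fallback function that should survive timeouts but let other errors propagate:

```python
from unittest.mock import Mock

import pytest


def fetch_with_fallback(client, url):
    # Hypothetical code under test: turns a timeout into None instead of crashing
    try:
        return client.get(url)
    except TimeoutError:
        return None


def test_timeout_returns_none():
    client = Mock()
    client.get.side_effect = TimeoutError("simulated network timeout")
    assert fetch_with_fallback(client, "https://example.com") is None
    client.get.assert_called_once_with("https://example.com")


def test_other_errors_propagate():
    client = Mock()
    client.get.side_effect = ValueError("malformed payload")
    with pytest.raises(ValueError, match="malformed payload"):
        fetch_with_fallback(client, "https://example.com")
```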

By mastering these techniques, you gain precise control over your test environment, leading to more robust and thorough testing. This isolation builds confidence in your application's ability to handle both expected behavior and unexpected situations. These tools are essential for building and maintaining complex applications, ultimately ensuring the quality and reliability of your code.

Beyond Assert: Powerful Validation Techniques That Matter

Moving beyond simple assert statements is crucial for writing effective tests with pytest. This section explores powerful validation techniques that experienced developers use to handle a variety of testing scenarios. These methods not only make your pytest examples more robust, but also improve the clarity and maintainability of your test suite.

Testing For Exceptions With pytest.raises

Sometimes, you expect your code to raise an exception under specific conditions. pytest.raises allows you to verify this behavior, ensuring your code handles errors as intended.

For example, consider a function that divides two numbers:

```python
import pytest


def divide(x, y):
    if y == 0:
        raise ZeroDivisionError("Cannot divide by zero")
    return x / y


def test_divide_by_zero():
    with pytest.raises(ZeroDivisionError):
        divide(10, 0)
```

This pytest example showcases pytest.raises: the test passes only if divide(10, 0) raises ZeroDivisionError, and fails if no exception (or a different one) is raised. This makes your tests more explicit and is particularly helpful for validating error-handling logic within your functions.
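pytest.raises can also check the exception message and hand you the exception object for further assertions:

```python
import pytest


def divide(x, y):
    if y == 0:
        raise ZeroDivisionError("Cannot divide by zero")
    return x / y


def test_divide_by_zero_message():
    # match= runs re.search against the string form of the exception
    with pytest.raises(ZeroDivisionError, match="divide by zero") as excinfo:
        divide(10, 0)
    # excinfo.value is the exception instance that was raised
    assert str(excinfo.value) == "Cannot divide by zero"
```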

Validating Warnings With pytest.warns

Similar to exceptions, warnings can indicate potential issues. pytest.warns enables you to test for expected warnings, promoting proactive error detection.

```python
import warnings

import pytest


def deprecated_function():
    warnings.warn("This function is deprecated", DeprecationWarning)


def test_deprecated_function():
    with pytest.warns(DeprecationWarning):
        deprecated_function()
```

Testing for warnings confirms that deprecation notices actually reach callers, which helps you manage technical debt.
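Like pytest.raises, pytest.warns accepts a match pattern and can capture what was emitted. A sketch that also checks the function still returns its result while warning; the return value is an addition for illustration:

```python
import warnings

import pytest


def deprecated_function():
    warnings.warn("This function is deprecated", DeprecationWarning)
    return "still works"


def test_deprecated_function_message():
    # match= checks the warning text; "as record" collects every warning emitted
    with pytest.warns(DeprecationWarning, match="deprecated") as record:
        result = deprecated_function()
    assert result == "still works"
    assert len(record) == 1
```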

Approximate Equality For Floating-Point Numbers

Directly comparing floating-point numbers with assert can lead to unexpected failures due to precision limitations. Pytest offers pytest.approx to address this:

```python
import pytest


def test_floating_point_calculation():
    result = 0.1 + 0.2  # 0.30000000000000004 in binary floating point
    assert result == pytest.approx(0.3)
```

pytest.approx compares values within a small tolerance (by default, a relative tolerance of 1e-6), so tests are not tripped up by floating-point rounding error such as 0.1 + 0.2 evaluating to 0.30000000000000004.
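pytest.approx also works element-wise on sequences and dictionaries, and accepts explicit tolerances through its rel and abs arguments:

```python
import pytest


def test_approx_variants():
    # Default tolerance is relative 1e-6 of the expected value
    assert 0.1 + 0.2 == pytest.approx(0.3)
    # Sequences and dicts are compared element by element
    assert [0.1 + 0.2, 0.2 + 0.4] == pytest.approx([0.3, 0.6])
    assert {"a": 0.1 + 0.2} == pytest.approx({"a": 0.3})
    # Explicit tolerances for noisier values
    assert 10.4 == pytest.approx(10.0, abs=0.5)
    assert 101 == pytest.approx(100, rel=0.02)
```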

Validating Complex Data Structures

For complex data structures like dictionaries or lists, writing element-by-element assertions quickly becomes cumbersome. With pytest you can compare whole structures directly with ==, and when the comparison fails, pytest's assertion introspection reports which items or keys differ. This clarity speeds up debugging, especially with nested data.
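A sketch of a whole-structure comparison, using a hypothetical build_user function that returns nested data:

```python
def build_user(name):
    # Hypothetical function returning nested data
    return {"name": name, "roles": ["reader"], "settings": {"theme": "dark", "beta": False}}


def test_build_user_structure():
    expected = {
        "name": "ada",
        "roles": ["reader"],
        "settings": {"theme": "dark", "beta": False},
    }
    # One == compares the entire nested structure; on failure pytest
    # shows a diff that points at the differing keys or items.
    assert build_user("ada") == expected
```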

Creating Custom Assertions

For complex validation logic, custom assertions are often necessary. These make your tests more readable and maintainable, improving the overall quality of your test suite. A practical example is validating the structure of API responses, where you check that mandatory fields are present and have the correct types.
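A custom assertion can be a plain helper function. This sketch checks required fields and types against a hypothetical schema; setting __tracebackhide__ tells pytest to leave the helper's own frame out of failure tracebacks, so the report points at the calling test:

```python
REQUIRED_FIELDS = {"id": int, "email": str, "active": bool}  # hypothetical schema


def assert_valid_user_payload(payload):
    __tracebackhide__ = True  # pytest hides this frame in failure reports
    for field, expected_type in REQUIRED_FIELDS.items():
        assert field in payload, f"missing field: {field}"
        assert isinstance(payload[field], expected_type), (
            f"{field} should be {expected_type.__name__}, "
            f"got {type(payload[field]).__name__}"
        )


def test_user_payload():
    assert_valid_user_payload({"id": 1, "email": "a@example.com", "active": True})
```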

To help you choose the right assertion method for your tests, let's look at a table summarizing common pytest assertions categorized by data type.

Pytest Assertion Methods by Data Type: common assertion techniques for different types of data validation in pytest

| Data Type | Assertion Method | Example Code | Common Use Case |
|---|---|---|---|
| Numbers | ==, !=, >, <, >=, <=, pytest.approx | assert value == 5 | Verifying numerical results |
| Strings | ==, !=, in, startswith, endswith | assert string.startswith("Hello") | Checking string content and formatting |
| Booleans | is True, is False | assert condition is True | Validating logical operations |
| Lists/Tuples | ==, in, len | assert item in my_list | Verifying list/tuple elements & sizes |
| Dictionaries | ==, in, len, .get | assert my_dict.get("key") == "value" | Checking dictionary key-value pairs |
| Exceptions | pytest.raises | with pytest.raises(ValueError): | Ensuring proper exception handling |
| Warnings | pytest.warns | with pytest.warns(UserWarning): | Checking for anticipated warnings |

This table provides a quick reference for common assertion methods, helping you select the most effective technique for each data type you’re working with. By mastering these techniques, you can create more robust and expressive tests, effectively handling various validation scenarios in Python development.

Supercharge Your Workflow: Essential Pytest Plugins

Extending pytest's core functionality with plugins is a common practice for experienced Python developers. These plugins can tackle specific testing issues, improve reporting, and boost overall workflow efficiency. This section explores some must-have pytest plugins that can significantly improve your testing process. We'll cover installation, common uses, and situations where these tools truly excel.

Pytest-Cov: Measure and Improve Your Test Coverage

The pytest-cov plugin delivers detailed test coverage reports, showing which parts of your code are covered by your tests and, importantly, which parts aren't. This helps you find gaps in your testing strategy, guiding you to build more comprehensive test suites. Installing pytest-cov is easy: pip install pytest-cov. After installation, run pytest --cov=my_project to get a report showing the percentage of your code covered by tests, broken down by file; adding --cov-report=term-missing also lists the line numbers that were never executed.

- Actionable Insights: pytest-cov provides more than just numbers. It highlights untested code blocks, giving you clear direction.

- Targeted Testing: This focused approach lets you zero in on areas that need more testing, optimizing your time and effort.

- Improved Quality: By steadily increasing your test coverage, you minimize the chance of hidden bugs and improve the overall quality of your code.

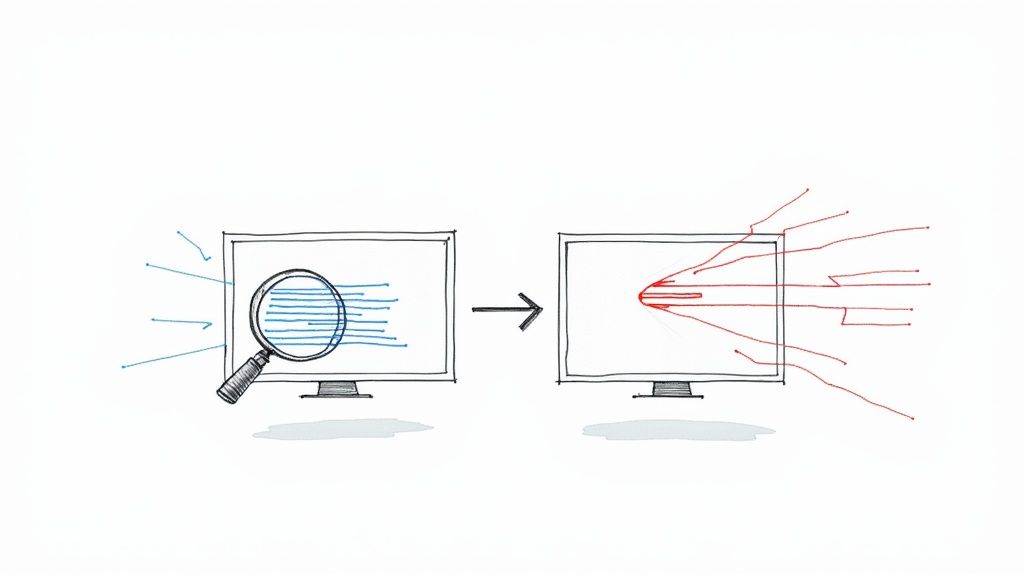

Pytest-Xdist: Parallelize Your Tests for Faster Feedback

Running tests one after another can be slow, especially with larger projects. pytest-xdist lets you run tests in parallel across multiple CPU cores, significantly cutting down test execution time. This is a real benefit for projects with extensive test suites. Install pytest-xdist using pip install pytest-xdist. Parallel testing is then as simple as pytest -n NUM_CORES, where NUM_CORES is the number of worker processes to start (typically one per CPU core), or pytest -n auto to let xdist choose based on the available CPUs.

- Reduced Test Times: Running tests concurrently drastically cuts down the feedback loop, allowing for faster iteration during development.

- Improved Productivity: This speed boost translates directly to improved developer productivity, as you spend less time waiting for tests to finish.

- Efficient CI/CD: pytest-xdist is extremely valuable in CI/CD pipelines, where fast feedback is crucial.

Pytest-Mock: Simplify Mocking for Enhanced Readability

Mocking is a vital testing technique, and pytest-mock makes it more elegant and readable. This plugin offers a mocker fixture, simplifying the creation and use of mock objects in your tests. Install it using pip install pytest-mock. The mocker fixture offers a clean, efficient way to mock objects or functions, improving the readability and maintainability of your test code.

- Streamlined Mocking: pytest-mock provides a straightforward interface for creating mocks, avoiding verbose setups needed with unittest.mock.

- Improved Test Clarity: The concise syntax makes test code easier to read and the intended behavior of each mock easier to follow.

- Simplified Management: The plugin automatically cleans up mocks, reducing the chance of tests interfering with each other.

Web Framework Specific Plugins: Pytest-Django and Pytest-Flask

For developers working with popular web frameworks like Django and Flask, dedicated plugins like pytest-django and pytest-flask offer specialized tools for web application testing. These plugins simplify testing database interactions, handling requests and responses, and other framework-specific tasks. They offer features like database fixtures and test clients tailored to the framework, which greatly streamlines the testing workflow.

Pytest-Asyncio: Simplify Testing of Asynchronous Code

With the increasing use of asynchronous programming in Python, testing asynchronous code presents unique challenges. pytest-asyncio addresses this by providing tools that make writing and running tests for asynchronous functions and coroutines easier. Install it with pip install pytest-asyncio, then write tests as async def functions marked with @pytest.mark.asyncio. This plugin makes testing asynchronous code as straightforward as testing synchronous code, helping you maintain consistent test quality across your application.
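A minimal sketch, assuming pytest-asyncio is installed and used in its default strict mode, where each async test needs the marker; fetch_greeting is a hypothetical coroutine:

```python
import asyncio

import pytest


async def fetch_greeting(name):
    # Hypothetical coroutine under test; the sleep stands in for real I/O
    await asyncio.sleep(0)
    return f"hello, {name}"


@pytest.mark.asyncio
async def test_fetch_greeting():
    # pytest-asyncio runs this coroutine in an event loop it manages
    assert await fetch_greeting("ada") == "hello, ada"
```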

By adding these important pytest plugins to your toolkit, you can drastically improve your testing workflow, shorten test times, and enhance the overall reliability and maintainability of your test suite.

Real-World Pytest Examples: Complete Testing Patterns

This section combines everything we've discussed about Pytest by exploring complete testing implementations for common Python applications. We'll see how seasoned developers use various Pytest features to create maintainable test suites for different project types.

Testing REST APIs With Authentication

Testing REST APIs often requires handling authentication and different HTTP methods. Let's look at an API endpoint requiring a bearer token:

```python
import pytest
import requests


def get_user_data(api_url, token):
    headers = {"Authorization": f"Bearer {token}"}
    response = requests.get(api_url, headers=headers, timeout=10)
    # Turn 4xx/5xx responses (e.g. 401 Unauthorized) into requests.exceptions.HTTPError
    response.raise_for_status()
    return response.json()


@pytest.fixture
def valid_token():
    # Replace with your token retrieval logic
    return "your_test_token"


@pytest.fixture
def api_endpoint():
    # Assumes a reachable test API at this placeholder URL
    return "https://api.example.com/user"


def test_get_user_data_authenticated(api_endpoint, valid_token):
    data = get_user_data(api_endpoint, valid_token)
    assert data["username"] == "testuser"


@pytest.mark.parametrize("invalid_token", ["", "expired_token"])
def test_get_user_data_unauthenticated(api_endpoint, invalid_token):
    with pytest.raises(requests.exceptions.HTTPError):
        get_user_data(api_endpoint, invalid_token)
```

This example uses fixtures to manage the API endpoint and authentication tokens. It also shows how to handle successful and unauthorized requests using pytest.raises.

Data Processing Pipelines With Large Datasets

Testing data processing pipelines often means handling large datasets and potential edge cases. Here's how to test a function processing CSV data:

```python
import pandas as pd
import pytest


def process_data(csv_file):
    df = pd.read_csv(csv_file)
    # Perform data processing steps
    return df


@pytest.fixture
def sample_csv_file(tmp_path):
    # Create a temporary CSV file with sample data (tmp_path is a pathlib.Path)
    file_path = tmp_path / "data.csv"
    data = {"col1": [1, 2, 3], "col2": [4, 5, 6]}
    df = pd.DataFrame(data)
    df.to_csv(file_path, index=False)
    return file_path


def test_process_data(sample_csv_file):
    processed_df = process_data(sample_csv_file)
    assert len(processed_df) == 3
```

This example uses a fixture to create a temporary CSV file for testing, ensuring a clean dataset for each test. The test then verifies the processed dataframe's length.

CLI Applications With Complex User Interactions

CLI applications often require testing complex user interactions and command-line arguments. Consider testing a command with various options:

```python
import subprocess

import pytest


def run_command(command):
    result = subprocess.run(command, capture_output=True, text=True)
    return result


@pytest.mark.parametrize("option", ["-v", "-h", "-q"])
def test_cli_options(option):
    result = run_command(["my_cli_app", option])
    assert result.returncode == 0


def test_cli_invalid_option():
    result = run_command(["my_cli_app", "-x"])
    assert result.returncode != 0
```

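Here, parameterization runs the same check against several valid options (assuming my_cli_app, a placeholder name, exits with status 0 for each of them), while a separate test confirms that an invalid option produces a non-zero exit code.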
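Spawning a subprocess requires the CLI to be installed and on PATH. When the CLI is written in Python, a common alternative is to call its entry point in-process and capture output with pytest's built-in capsys fixture. A sketch with a hypothetical argparse-based main():

```python
import argparse

import pytest


def main(argv=None):
    # Hypothetical CLI entry point
    parser = argparse.ArgumentParser(prog="my_cli_app")
    parser.add_argument("-q", "--quiet", action="store_true")
    args = parser.parse_args(argv)
    if not args.quiet:
        print("working...")
    return 0


@pytest.mark.parametrize("argv, expected_output", [([], "working...\n"), (["-q"], "")])
def test_main_output(argv, expected_output, capsys):
    assert main(argv) == 0
    # capsys.readouterr() returns everything printed to stdout/stderr so far
    assert capsys.readouterr().out == expected_output


def test_main_invalid_option():
    # argparse exits with status 2 on unrecognized arguments
    with pytest.raises(SystemExit) as excinfo:
        main(["-x"])
    assert excinfo.value.code == 2
```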

Web Applications With Database Dependencies

For web applications that use databases, mocking database connections is important. Let's see how to test a web app route interacting with a database:

```python
from unittest.mock import patch

import pytest
from my_web_app import get_user


@patch('my_web_app.db.get_user')
def test_get_user_route(mock_db_get_user):
    mock_user = {'id': 1, 'name': 'Test User'}
    mock_db_get_user.return_value = mock_user
    user = get_user(1)
    assert user['name'] == 'Test User'
```

This example uses unittest.mock to patch the database interaction, enabling route logic testing without a real database connection.

These examples show how Pytest's features can be combined to address real-world testing challenges across diverse application types. By understanding these patterns, you can build robust, maintainable test suites that grow with your project.

Ready to improve your merge workflow and manage your CI process? Check out Mergify.