Unleash pytest: Boost Your Python Testing

Why pytest Is Revolutionizing Python Testing

Testing is crucial for software development, ensuring quality and reliability. Traditional testing methods can be cumbersome. pytest, a popular Python testing framework, simplifies this process, making testing more efficient.

The Simplicity and Flexibility of pytest

pytest's intuitive design is its key strength. Unlike frameworks requiring extensive boilerplate code, pytest uses simple functions for tests. This reduces overhead, letting developers focus on test logic instead of complex syntax.

This ease of use has led to widespread adoption. Simple assert statements make testing straightforward, improving readability and maintainability. This streamlined approach contributes to pytest's growing popularity.

pytest's flexibility is another advantage. It integrates with other testing tools and supports various testing styles, from unit to integration testing. This adaptability makes it suitable for projects of all sizes. Thorough testing contributes to software quality and stability, increasing confidence and reducing production issues.

Pytest is widely adopted for its simplicity and flexibility. As of 2023, many Python developers use it. It simplifies workflows compared to the built-in unittest module. For example, pytest uses simple functions instead of classes, reducing boilerplate code.
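To make the boilerplate difference concrete, here is a minimal sketch of the same check written both ways. The `add` function is a hypothetical stand-in for your own code, not part of either framework.

```python
import unittest


def add(a, b):
    """Hypothetical function under test."""
    return a + b


# unittest style: a TestCase subclass and a dedicated assertion method.
class TestAddUnittest(unittest.TestCase):
    def test_add(self):
        self.assertEqual(add(1, 1), 2)


# pytest style: a plain function and a plain assert statement.
def test_add():
    assert add(1, 1) == 2
```

pytest collects both forms, so the two styles can live side by side in one file.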

This ease of use has made pytest very popular, and many projects have migrated from unittest to pytest for better test management and reporting. The pytest ecosystem also includes plugins such as pytest-system-statistics, which tracks system resource usage during tests.

pytest vs. Other Python Testing Frameworks

pytest’s rich plugin ecosystem enhances functionality. Plugins like pytest-cov measure code coverage, providing insights into test thoroughness. pytest-xdist enables parallel test execution, reducing runtime for large test suites.

To illustrate the key differences between pytest and other popular Python testing frameworks, let's examine the following comparison table:

| Feature | pytest | Unittest | Nose |
|---|---|---|---|
| Test Discovery | Automatic | Explicit | Automatic |
| Fixtures | Built-in | Requires setup | Limited support |
| Assertions | assert statements | Specific methods | assert statements |
| Plugins | Extensive ecosystem | Limited | Moderate |
| Reporting | Customizable | Basic | Customizable |

As shown in the table, pytest offers a compelling combination of automatic test discovery, built-in fixtures, a simple assertion style, a rich plugin ecosystem, and customizable reporting. These features significantly enhance the testing process compared to more traditional frameworks like Unittest and Nose.

A Growing Ecosystem and Community Support

The pytest ecosystem constantly evolves with new plugins addressing specific testing needs. This vibrant community keeps pytest up-to-date. Active community support and resources facilitate adoption and effective utilization. This collaborative environment ensures pytest's robustness and reliability.

The combination of simplicity, flexibility, and community support sets pytest apart. Leading development teams are increasingly using pytest to streamline workflows and build higher-quality software. This shift recognizes pytest’s ability to simplify and improve testing, leading to more reliable and maintainable code.

From Zero to pytest Hero: Getting Started Right

Starting with pytest can seem intimidating, but with a practical approach, it's surprisingly simple. This guide walks you through building a robust pytest environment, employing techniques used by successful development teams to ensure your testing process is efficient and effective.

Setting Up Your pytest Environment

The first step is installation. Use pip install pytest – a single command that lays the groundwork for writing and executing your tests. Next, think about how you want to structure your test files.

It's common practice to dedicate a tests directory for this purpose. Name your test files within this directory following the test_*.py or *_test.py convention. This naming is essential for pytest's automatic test discovery.

Writing Your First pytest Test

With your environment ready, let's create a simple test. Inside your tests directory, make a file called test_example.py. In this file, define a function that begins with test_:

    def test_addition():
        assert 1 + 1 == 2

This basic example demonstrates pytest's assertion system. The assert statement verifies a condition, and if it's false, pytest reports the error. This straightforward syntax makes tests easy to read and understand.
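Plain assert covers most checks, but two helpers are worth knowing early: `pytest.raises` for expected exceptions and `pytest.approx` for floating-point comparisons. The sketch below uses a hypothetical `divide` function purely for illustration.

```python
import pytest


def divide(a, b):
    """Hypothetical function under test."""
    return a / b


def test_divide():
    # An ordinary comparison; on failure pytest shows both sides.
    assert divide(1, 4) == 0.25


def test_float_sum():
    # 0.1 + 0.2 is not exactly 0.3 in binary floating point,
    # so compare with a tolerance instead of strict equality.
    assert 0.1 + 0.2 == pytest.approx(0.3)


def test_divide_by_zero():
    # The test passes only if the block raises ZeroDivisionError.
    with pytest.raises(ZeroDivisionError):
        divide(1, 0)
```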

Now, run your test from your terminal using pytest. Pytest will automatically find and run all test functions located in your tests directory. You should see a passing test result, confirming your setup is working.

Command-Line Tricks for Efficient Debugging

Pytest offers helpful command-line options to improve your testing workflow. The -v flag generates verbose output, displaying each test's status individually. This detail is invaluable when debugging.

Another useful flag is -k. This lets you run specific tests based on keywords. For example, pytest -k "addition" would only run tests with "addition" in their names. This targeted execution saves time when working on specific areas of your code.
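As a rough illustration of how these flags select tests, consider the small file below. The file name and test names are made up for the example; the commands in the comments are run from a terminal.

```python
# test_calculator.py (hypothetical file name)


def test_addition():
    assert 2 + 3 == 5


def test_addition_negative():
    assert -2 + -3 == -5


def test_subtraction():
    assert 5 - 3 == 2


# pytest -v
#     lists every test with its own PASSED/FAILED line
# pytest -k "addition"
#     runs test_addition and test_addition_negative
# pytest -k "addition and not negative"
#     runs only test_addition
```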

Organizing Tests for Scalability

As your project expands, good organization becomes crucial. Pytest supports grouping tests into classes, enhancing structure and readability.

For example, you can group all tests for a particular feature into a single class. This makes it easier to find and run tests relevant to a specific part of your application. This structured approach is key for managing large test suites. Using pytest effectively builds a foundation for scalable and maintainable testing, ensuring code quality as your projects grow.
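A minimal sketch of class-based grouping might look like this. The `cart_total` function and the class name are hypothetical; the only requirements from pytest are that the class name starts with `Test` and that the class does not define an `__init__` method.

```python
def cart_total(prices):
    """Hypothetical function under test: sum the item prices in a cart."""
    return sum(prices)


class TestShoppingCart:
    # No base class is needed; pytest collects classes whose names
    # start with "Test" and runs their test_* methods.

    def test_empty_cart_totals_zero(self):
        assert cart_total([]) == 0

    def test_total_sums_all_prices(self):
        assert cart_total([5, 10, 20]) == 35


# Run only this group (assuming the file is tests/test_cart.py):
#     pytest tests/test_cart.py::TestShoppingCart
```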

Mastering pytest Fixtures: The Testing Superpower

Building upon the basics of pytest tests, let's explore how fixtures can significantly improve your testing approach. Fixtures are a core component of pytest, offering a robust mechanism for managing test dependencies and establishing preconditions. They provide a way to encapsulate setup and teardown logic, leading to cleaner, more modular, and more effective tests. This is especially helpful when working with complex environments or shared resources.

The Power of pytest Fixtures

Imagine needing a database connection for multiple tests. Instead of repeatedly setting up the connection in each test, you can create a fixture to handle it. This fixture becomes a reusable element, injected directly into your test functions. This not only minimizes code duplication but also guarantees consistency across tests.

Another common scenario is setting up test data. A fixture can generate and populate a database table with the specific data your tests require. This eliminates the need for individual tests to manage their own data, leading to more focused test logic. This is especially beneficial for intricate test cases.

Defining and Using Fixtures

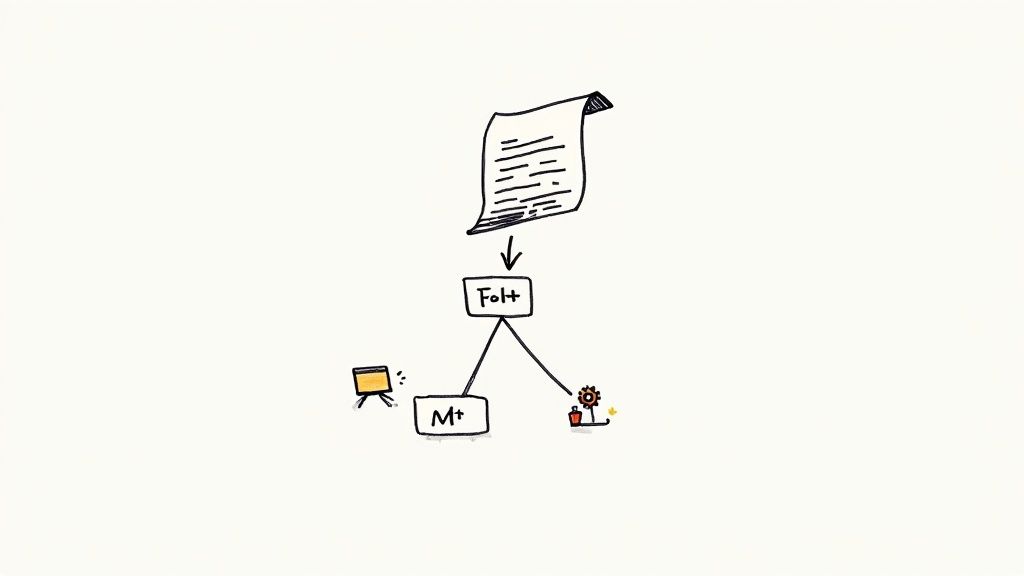

Defining a fixture is simple. Use the @pytest.fixture decorator above a function, transforming it into your fixture. Inside the function, you define the setup steps, such as creating a database connection, preparing data, or configuring a testing environment. This encapsulated logic helps maintain a consistent approach to test preconditions.

To use a fixture, simply include its name as an argument to your test function. Pytest automatically recognizes the fixture and injects its return value into your test function.

    import pytest


    @pytest.fixture
    def database_connection():
        # Logic to establish a database connection
        connection = ...
        return connection


    def test_database_query(database_connection):
        # Use the injected database connection
        result = database_connection.query(...)
        assert ...

This example demonstrates the clean injection of dependencies by fixtures, enhancing code reusability and maintainability.
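The snippet above leaves the connection logic elided. For a version you can actually run, here is a sketch that assumes an in-memory SQLite database from the standard library; the `open_test_db` helper, the `users` table, and its contents are all invented for the example. It also shows teardown: code after `yield` runs once the test has finished.

```python
import sqlite3

import pytest


def open_test_db():
    """Hypothetical helper: an in-memory database with one sample row."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice')")
    return conn


@pytest.fixture
def db():
    conn = open_test_db()  # setup
    yield conn             # this value is injected into the test
    conn.close()           # teardown, runs after the test finishes


def test_user_count(db):
    count = db.execute("SELECT COUNT(*) FROM users").fetchone()[0]
    assert count == 1
```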

Leveraging Fixture Scopes

Pytest offers different scopes for fixtures, enabling you to control how often a fixture executes. This allows for efficient resource management. The function scope (the default) executes the fixture once for each test function that requests it. The module scope runs it once per test module, so expensive setup is shared by all tests in that file.

Additional scopes include class (once per test class), package (once per package), and session (once per test run). Choosing the right scope is a trade-off between speed and isolation: broader scopes avoid repeated setup, but any state the fixture holds is then shared by every test that uses it. Plugins such as pytest-mock build on the same mechanism by exposing their functionality as fixtures that are injected into tests.

Fixture Factories for Dynamic Data

Fixture factories enhance fixture flexibility. Instead of returning a single ready-made value, a factory fixture returns a function that the test calls to create objects on demand, with different arguments each time if needed. For example, a fixture factory could create user accounts with different roles, providing tailored test data for specific scenarios. This dynamism adds significant versatility to your testing strategy.

Using fixture factories can dramatically streamline your testing process. This allows for a more adaptable and responsive approach to handling test data. By parameterizing your fixtures, you can maximize test coverage without unnecessary code duplication, ultimately increasing the effectiveness of your test suite. Using fixtures strategically simplifies testing, allowing you to test complex situations with greater efficiency and maintainability.
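The user-account scenario could be sketched as follows. The `new_user` helper and the dictionary shape are assumptions made for illustration; the pattern itself (a fixture that hands the test a function) is the part that matters.

```python
import pytest


def new_user(name, role="viewer"):
    """Hypothetical builder for a user record."""
    return {"name": name, "role": role}


@pytest.fixture
def make_user():
    created = []

    def _make_user(name, role="viewer"):
        user = new_user(name, role)
        created.append(user)
        return user

    yield _make_user  # the test receives the factory function itself
    created.clear()   # teardown: forget every user the test created


def test_roles_are_independent(make_user):
    admin = make_user("ada", role="admin")
    guest = make_user("bob")
    assert admin["role"] == "admin"
    assert guest["role"] == "viewer"
```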

Essential Pytest Plugins That Transform Your Workflow

Pytest is a powerful testing framework, but its true potential lies in its extensive plugin ecosystem. These community-driven extensions expand Pytest's core functionality, offering tailored solutions for a variety of testing needs. From streamlined mocking to comprehensive reporting, these plugins can significantly enhance your development workflow.

Boosting Efficiency and Readability

Effective testing relies on clean, understandable code. The pytest-mock plugin simplifies the process of mocking dependencies, making your tests easier to read and maintain. It provides a simplified interface to the unittest.mock library within your Pytest tests.

Another crucial aspect of testing is understanding how much of your code is actually being tested. The pytest-cov plugin measures code coverage, providing insights into which parts of your codebase are exercised by your tests. This helps identify gaps in testing and improve overall test quality.

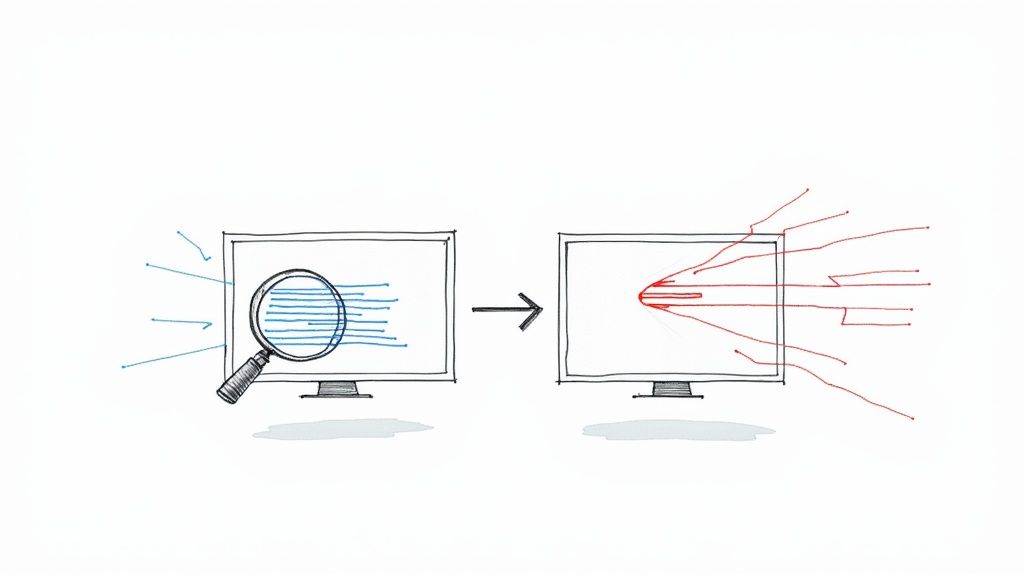

For larger projects with extensive test suites, pytest-xdist is invaluable. This plugin enables parallel test execution, drastically reducing testing time. Faster feedback cycles lead to quicker identification and resolution of potential issues.

Enhancing Reporting and Analysis

Clear and informative test reports are essential for effective analysis. The pytest-html plugin generates detailed HTML reports that are easy to navigate and understand. This simplifies the process of analyzing results and identifying trends, especially when sharing results with stakeholders.

The pytest-metadata plugin adds valuable context to your test reports, including information about the testing environment. This additional data can greatly assist in diagnosing test failures and reproducing issues, providing a richer understanding of test behavior over time.

Addressing Specific Testing Challenges

Some plugins target specific testing challenges. pytest-rerunfailures, for instance, automatically reruns failed tests, a helpful feature for identifying intermittent failures. This can save valuable debugging time. pytest-timeout prevents tests from hanging by automatically aborting them if they exceed a specified time limit, keeping your CI/CD pipeline running smoothly.
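Both plugins can also be configured per test through markers. The sketch below assumes pytest-rerunfailures and pytest-timeout are installed; `fetch_status` is a hypothetical stand-in for a call that occasionally fails.

```python
import time

import pytest


def fetch_status():
    """Hypothetical stand-in for a call to a service that is sometimes flaky."""
    return "ok"


# pytest-rerunfailures: retry this test up to 2 more times,
# waiting 1 second between attempts, before reporting a failure.
@pytest.mark.flaky(reruns=2, reruns_delay=1)
def test_service_status():
    assert fetch_status() == "ok"


# pytest-timeout: fail the test if it runs longer than 5 seconds.
@pytest.mark.timeout(5)
def test_finishes_quickly():
    time.sleep(0.01)
```

Reruns are best treated as a diagnostic aid: a test that only passes on retry usually points to a real ordering or timing problem.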

For projects with a large number of tests, pytest-split can significantly optimize test execution. It divides the test suite into smaller, manageable sub-suites that can be run in parallel or distributed across multiple machines, further improving efficiency.

Optimizing Resource Usage and Performance

Monitoring resource consumption during testing is crucial for performance optimization. The pytest-system-statistics plugin tracks CPU and memory usage, helping pinpoint resource-intensive tests. You can install this plugin using python -m pip install pytest-system-statistics. Identifying these bottlenecks allows you to optimize tests for better performance.

The Pytest ecosystem offers a wide array of plugins tailored to various testing needs. By selecting and integrating the right plugins, you can customize your Pytest workflow to improve efficiency, enhance reporting, and address specific testing challenges. Choosing the right tools empowers your team to build a robust and effective testing process.

Pytest Performance Testing: Beyond Basic Assertions

Moving beyond simple pass/fail results, performance testing becomes crucial for code optimization, especially in applications that demand significant resources. Pytest offers robust performance measurement and analysis capabilities through its extensible architecture. This allows teams to base decisions on data, leading to more efficient and scalable applications.

Benchmarking With Pytest

Pytest benchmarking helps developers measure execution times and other performance metrics, offering valuable insights into code behavior. The pytest-benchmark plugin helps establish performance baselines and detect regressions early in the development cycle. This proactive approach to performance management helps prevent slowdowns in production.

Suppose you're comparing algorithms for sorting large datasets. pytest-benchmark can automatically run each algorithm multiple times, collecting statistics like minimum, maximum, and average execution times. This data-driven approach guides informed decisions about the best-performing option.

The reported metrics include the minimum, maximum, mean, and standard deviation of the execution time. This is especially useful in scientific computing and data analysis, where optimizing code for speed is critical. For example, when comparing sorting algorithms such as bubble sort and quicksort, pytest-benchmark shows how each performs under the same conditions, helping you determine the best implementation for production. See the pytest-benchmark documentation for details.

Let's illustrate this with a practical example. The following table presents sample benchmark data, showcasing how pytest-benchmark presents performance metrics for comparison.

pytest Benchmark Results Example: Sample benchmark data comparing different algorithm implementations to demonstrate how pytest-benchmark presents performance metrics.

| Algorithm | Min (ms) | Max (ms) | Mean (ms) | StdDev (ms) | Median (ms) |
|---|---|---|---|---|---|
| Algorithm A | 10 | 15 | 12 | 1.5 | 12 |
| Algorithm B | 15 | 25 | 20 | 3.0 | 20 |
| Algorithm C | 8 | 12 | 10 | 1.2 | 10 |

As you can see, Algorithm C exhibits the lowest minimum, mean, and median execution times, suggesting it's the most efficient of the three. The standard deviation also shows its performance is the most consistent.

Visualizing Performance Trends

Visualizations are key to understanding performance trends beyond raw numbers. pytest-benchmark can save the results of each run (--benchmark-autosave), compare a run against previously saved results (--benchmark-compare), and render the timings as histograms (--benchmark-histogram), letting you see performance changes over time or across code revisions. These visuals help identify patterns and potential bottlenecks, simplifying the process of pinpointing areas for improvement.

Testing Under Varying Load Conditions

Real-world applications often face fluctuating load conditions. Simulating these during testing is crucial to ensure consistent performance under stress. Pytest, along with tools like Locust, allows simulation of high-traffic scenarios, yielding valuable performance data under pressure.

Ensuring Consistent Performance Across Environments

Consistent performance across different environments (development, testing, production) is essential. Pytest's fixture system enables environment-specific settings and dependencies, ensuring consistent test execution and reducing environment-specific performance issues. Addressing these factors contributes to a more robust and dependable application.

Transitioning to pytest: A Practical Migration Path

Moving from the familiar unittest framework to pytest can feel like a big leap, but the journey doesn't have to be disruptive. Many teams have successfully made the switch, providing a practical roadmap for a smoother experience. This involves careful planning, incremental conversion, and tackling common hurdles along the way. This allows teams to gradually enjoy pytest's advantages without sacrificing their current test coverage.

Gradual Conversion for Continuous Integration

A core strategy is gradual conversion. Rather than attempting a complete overhaul, begin by running your existing unittest tests using pytest. This is possible due to pytest's compatibility with unittest test suites. This compatibility minimizes initial disruption and allows you to maintain continuous integration. As you develop new tests, leverage pytest's simpler syntax and powerful features. Over time, convert older tests to the pytest style as needed, rather than all at once.

Transforming unittest to pytest

The transition involves transforming unittest's often verbose boilerplate into cleaner pytest code. For example, setUp and tearDown methods in unittest are replaced with pytest fixtures. Fixtures provide a more modular and reusable approach for managing test setup and teardown logic. This modularity dramatically enhances code organization and maintainability. Similarly, complex assertEqual assertions in unittest become more straightforward assert statements in pytest.
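As an illustration of that conversion, here is the same test before and after, assuming a test that needs a scratch directory. The class, fixture, and test names are invented for the example.

```python
import shutil
import tempfile
import unittest
from pathlib import Path

import pytest


# Before: unittest with setUp/tearDown and assertEqual.
class TestReportUnittest(unittest.TestCase):
    def setUp(self):
        self.workdir = Path(tempfile.mkdtemp())

    def tearDown(self):
        shutil.rmtree(self.workdir)

    def test_write_report(self):
        target = self.workdir / "report.txt"
        target.write_text("ok")
        self.assertEqual(target.read_text(), "ok")


# After: the setup and teardown move into a fixture.
@pytest.fixture
def workdir():
    path = Path(tempfile.mkdtemp())  # was setUp
    yield path
    shutil.rmtree(path)              # was tearDown


def test_write_report(workdir):
    target = workdir / "report.txt"
    target.write_text("ok")
    assert target.read_text() == "ok"
```

For this particular case pytest already ships a built-in `tmp_path` fixture, so the hand-written `workdir` fixture is shown only to make the setUp/tearDown mapping visible.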

pytest's compatibility with existing unittest tests also eases the transition: it collects and runs unittest.TestCase classes without major modifications. This, coupled with pytest's rich plugin ecosystem, makes it an attractive framework. pytest also integrates with doctest: running pytest --doctest-modules executes the examples embedded in docstrings, so documentation examples are tested along with the rest of the suite. This flexibility contributes to its widespread adoption across diverse Python projects. For a deeper look into the world of pytest, check out Paul Rougieux's Pytest Overview.
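To show what a doctest-checked function looks like, here is a small sketch. The `slugify` function is hypothetical; the lines starting with `>>>` in its docstring are the examples that pytest would execute when run with the `--doctest-modules` option.

```python
def slugify(title):
    """Turn a title into a URL-friendly slug.

    >>> slugify("Hello World")
    'hello-world'
    >>> slugify("  pytest  at   scale ")
    'pytest-at-scale'
    """
    # split() with no arguments drops leading, trailing, and repeated spaces.
    return "-".join(title.lower().split())
```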

Overcoming Common Migration Challenges

Migration isn’t always straightforward. One common challenge is managing dependencies between tests. pytest’s fixture system addresses this by providing a centralized method to define and share test resources. This centralized management avoids conflicts and boosts test reliability. Another hurdle is adapting to pytest’s test discovery mechanisms, which differ from unittest. Understanding how pytest locates and executes tests is crucial for a smooth transition.

Hybrid Environments and Long-Term Strategies

During the transition, you’ll likely operate in a hybrid environment, running both unittest and pytest tests. This is perfectly normal. Concentrate on progressively increasing the number of pytest tests while ensuring the integrity of your current test suite. For extensive codebases, consider tools that automate the conversion process, minimizing manual effort and potential errors.

A successful transition to pytest hinges on a well-planned, incremental approach. By leveraging pytest's compatibility with unittest, concentrating on gradual conversion, and utilizing fixtures effectively, teams can realize pytest's advantages without disrupting their workflow. In the long run, this migration improves code maintainability, simplifies testing, and boosts the overall quality of your Python projects. Investing in this transition yields a more efficient and resilient testing process.

Pytest at Scale: Enterprise Best Practices

Implementing pytest effectively across large teams presents unique challenges. Maintaining a robust test suite alongside a growing codebase requires careful planning and a strategic approach. This involves addressing test isolation, dependency management, and configuration for different environments.

Structuring Tests for Scalability

One key practice is structuring test suites for scalability. As your codebase expands, a well-organized test structure is crucial. Organize tests by functionality, mirroring your application’s architecture. This makes it easier to locate and maintain tests related to specific features. This modular approach also encourages better code reusability and simpler debugging.

Managing Test Dependencies and Configurations

Large projects often have complex dependencies. Test isolation is essential to prevent unintended side effects between tests. Use pytest's fixture system to manage these dependencies effectively. Fixtures enable you to create reusable components for setting up and tearing down test environments. This ensures each test runs in a clean state, minimizing interference and improving the reliability of individual test results.

Implementing Tagging Systems for Selective Execution

Running every test for every code change becomes inefficient in large projects. Implement a tagging system to categorize tests based on functionality or criticality. pytest supports this through markers: decorate tests with @pytest.mark.<name> and select them on the command line with the -m option. This enables selective test execution, allowing you to run only the relevant tests for specific changes. For instance, you might tag tests related to user authentication and run only those when modifying the login system. This targeted approach significantly reduces testing time and streamlines the developer workflow.
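A tagging scheme for the authentication example could be sketched like this. The marker names `auth` and `slow` and the `is_valid_password` function are made up for illustration; custom markers should be registered under the `markers` option in your pytest configuration, otherwise pytest emits a warning for each unknown marker.

```python
import pytest


def is_valid_password(password):
    """Hypothetical rule under test: at least 8 characters."""
    return len(password) >= 8


@pytest.mark.auth
def test_short_password_rejected():
    assert not is_valid_password("abc")


@pytest.mark.auth
@pytest.mark.slow
def test_long_password_accepted():
    assert is_valid_password("correct horse battery staple")


def test_unrelated_feature():
    assert sum([1, 2, 3]) == 6


# pytest -m auth
#     runs the two tests marked "auth"
# pytest -m "auth and not slow"
#     runs only test_short_password_rejected
```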

Designing Custom Reporting for Meaningful Insights

Standard pytest reports might not be sufficient for complex enterprise needs. Consider creating custom reports that provide more detailed information tailored to your specific requirements. This could involve integrating with reporting tools like ReportPortal, dashboards, or generating custom metrics relevant to your business goals. These specialized reports provide a more practical way to monitor test performance and identify trends.

Integrating pytest with CI/CD Pipelines

Integrating pytest into your CI/CD pipeline is vital for automated testing. Configure your CI system, such as Jenkins or GitHub Actions, to run the appropriate tagged tests for each commit or pull request. This ensures consistent testing and helps catch regressions early in the development cycle. For instance, use the pytest --junitxml=report.xml command to generate reports compatible with most CI systems. This integration provides faster feedback and smoother deployments.

Handling Environment-Specific Testing Challenges

Testing across multiple environments (development, staging, production) is common in enterprise settings. Pytest's fixture system allows you to handle environment-specific configurations. This ensures your tests run correctly in each environment by setting up environment-specific dependencies, database connections, or configuration values. Addressing these variations improves the robustness and reliability of your tests in different deployment scenarios.
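One possible way to wire this up is a session-scoped fixture that picks its settings from an environment variable. Everything specific here is an assumption for the sake of the sketch: the `APP_ENV` variable name, the `SETTINGS` table, and the connection strings.

```python
import os

import pytest

# Hypothetical per-environment settings.
SETTINGS = {
    "development": {"db_url": "sqlite:///:memory:"},
    "staging": {"db_url": "postgresql://staging-db/app"},
}


def settings_for(env_name):
    """Look up the settings for one environment."""
    return SETTINGS[env_name]


@pytest.fixture(scope="session")
def settings():
    # Read the (assumed) APP_ENV variable once per test run,
    # falling back to the development settings.
    return settings_for(os.environ.get("APP_ENV", "development"))


def test_database_url_is_configured(settings):
    assert settings["db_url"]
```

With this in place, a CI job could run the same suite against staging by setting `APP_ENV=staging` before invoking pytest.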

By adopting these best practices, organizations can effectively use pytest for enterprise-level testing. These strategies help teams scale their testing efforts, maintain code quality, and improve development workflows, even in complex projects. A well-implemented pytest strategy leads to more robust, maintainable, and reliable software.
