Pull Request Review Best Practices: A Step-by-Step Guide to Code Excellence

Mastering the Art of Small Pull Requests

Want to improve your code review process? Start by making your pull requests smaller. This simple change can make reviews faster and more effective. Many successful engineering teams keep their pull requests under 50 lines of code when possible - a practice that leads to better feedback and quicker turnaround times.

Why Smaller Pull Requests Are Better

Think of reviewing code like proofreading text. It's much easier to catch errors in a single paragraph than in a lengthy chapter. The same principle applies to code reviews - with fewer lines to analyze, reviewers can better understand the context and changes. This focused attention helps them spot potential issues and suggest meaningful improvements.

Small pull requests also make testing and debugging more manageable. Writing unit tests becomes less overwhelming when you're working with limited chunks of code. When problems crop up, finding their source is straightforward since you're dealing with a smaller scope. And if something goes wrong, rolling back a focused change is simple and causes minimal disruption. Compare this to large pull requests where tracking down bugs often feels like searching for a needle in a haystack.

Implementing the Small Pull Request Strategy

To break down large features into smaller pieces, start by changing how you plan your work. Rather than implementing an entire feature at once, identify independent components that can stand alone. This requires careful thought about how different parts will work together and what depends on what.

Within each pull request, make each commit represent one clear change. This approach helps others follow your thought process and makes it easier to undo specific changes if needed. Tools like Mergify can help by handling routine tasks automatically, letting developers focus on writing good code instead of managing pull request logistics.

Creating a team environment that values step-by-step development is key to making this work. Talk openly about how to split up features and make sure everyone understands why smaller pull requests matter. When teams consistently apply these practices, they often find their development process becomes smoother and more efficient, leading to better code quality overall.

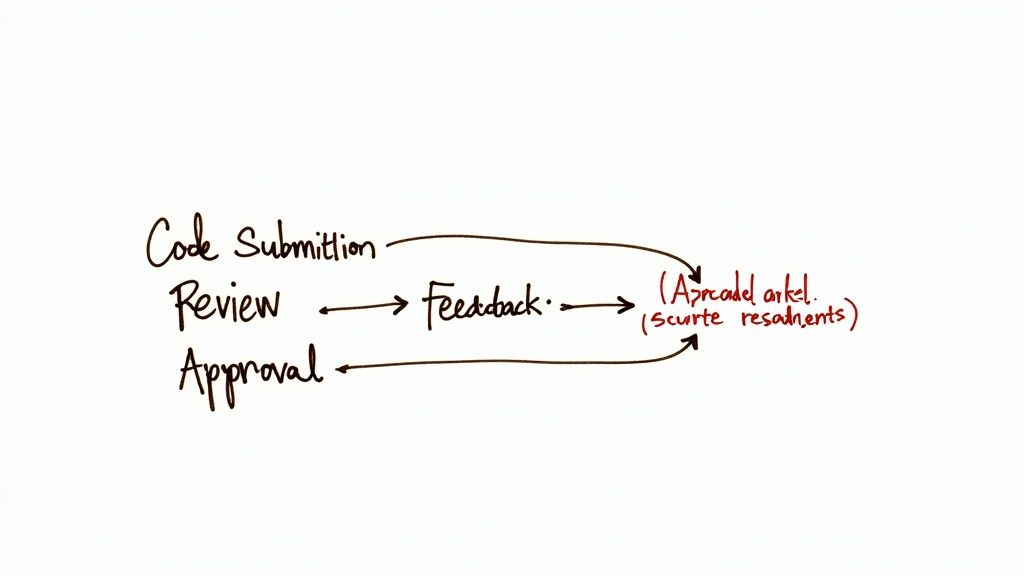

Creating Review Workflows That Actually Work

Making smaller pull requests work well depends heavily on having good review practices. Many teams struggle with basic issues like unclear response expectations or overwhelming notification systems. Let's explore how successful teams build review processes that help rather than hinder their development work.

Establishing Realistic Response Times

Clear timing expectations are essential for smooth code reviews, but what counts as "timely" varies by team and project. While some companies like Google aim for next-day reviews, that pace isn't realistic for everyone. Instead of strict deadlines, consider creating flexible guidelines based on your team's needs. Account for factors like PR complexity, submission timing, and reviewer availability. The goal is maintaining steady progress rather than racing to meet arbitrary targets.

Optimizing Notifications and Avoiding Overload

Good notification systems keep reviewers informed without drowning them in alerts. Too many notifications leads to tuning them out entirely. The key is setting up smart filters - for example, only getting alerts for specific repos or types of changes that matter most to you. This helps reviewers stay focused and give better feedback by reducing unnecessary interruptions.

Adapting Workflows to Team Size and Methodology

The best review process looks different depending on your team size and development approach. Small teams often work well with informal reviews, while larger groups need more structure. Teams using Agile methods typically want quick, frequent feedback, but those following Waterfall may prefer formal review phases.

Here's how workflows typically vary:

| Team Size/Methodology | Workflow Characteristics |

|---|---|

| Small Teams | Peer reviews, flexible timelines |

| Large Teams | Formalized stages, assigned roles (e.g., primary and secondary reviewers) |

| Agile | Continuous integration, frequent reviews |

| Waterfall | Scheduled reviews at specific milestones |

Tools like Mergify can help by handling routine PR tasks automatically, freeing up reviewers to focus on code quality. The key is matching your workflow to your specific needs and team dynamics. This creates a sustainable process that maintains high standards without creating bottlenecks.

"Building a Sustainable Review Culture" in a more natural, blog-like style:

Building a Sustainable Review Culture

Great code review processes don't just happen - they need to be actively cultivated and maintained. While having clear guidelines is important, the real challenge lies in creating an environment where thorough code reviews become second nature to your team. This means thinking carefully about how to handle common challenges like reviewer fatigue and shifting workloads, especially during busy periods.

Balancing Reviewer Workload and Preventing Burnout

Code review requires the same level of focus and mental energy as writing code. When team members get overloaded with review requests, the quality of feedback suffers and burnout becomes a real risk. One effective approach is setting up a rotation system where review responsibilities are shared across the team. This not only spreads out the work but has the added benefit of helping developers become familiar with different parts of the codebase.

Having backup reviewers is also essential. People take time off, get sick, or become temporarily swamped with other work. By designating backup reviewers upfront, you prevent pull requests from getting stuck waiting for feedback. Tools like Mergify can help by automatically managing reviewer assignments based on team availability and workload rules.

Cultivating a Culture of Quality Code Review

Building sustainable review practices means creating an environment where thoughtful code review is seen as valuable, not just another item on a checklist. The goal is to make code review a natural opportunity for learning from each other and improving code quality together.

Setting clear expectations helps everyone get on the same page. Teams should agree on what makes a good review - like checking not just if the code works, but if it's easy to read and maintain. Having shared standards for what reviewers should look for helps make feedback more consistent and constructive.

Adapting and Scaling Your Review Process

As your team grows and projects evolve, your review process needs to grow with them. What works for a small team often needs adjustment at a larger scale. For example, a casual peer review system might work well initially, but larger teams may need more structure, like having primary and secondary reviewers for each pull request.

Similarly, expectations around review timing may need to shift based on team capacity and the complexity of work being reviewed. A simple bug fix might get quick feedback, while major architectural changes need more thorough review time.

Measuring key metrics helps gauge if your process is working. By tracking things like how long it takes to get initial feedback on pull requests and how many revision cycles are typically needed, you can spot bottlenecks and areas for improvement. This data helps you refine your approach over time, ensuring code review continues to add value rather than create friction. Regular check-ins with the team about what's working and what isn't allow you to keep evolving your practices to match your team's needs.

Crafting Feedback That Drives Improvement

Good code review feedback does more than point out errors - it creates opportunities for learning and growth. By focusing on collaboration and constructive dialogue, teams can use the review process to improve code quality while building stronger relationships. Here's how to provide feedback that makes a real difference.

Focusing on the Code, Not the Coder

When reviewing code, keep comments focused on the changes themselves rather than making assumptions about the author. Instead of saying "You did this wrong," try "This approach could lead to performance issues - what do you think about using X instead?" This small shift in language keeps the discussion collaborative and solution-focused. Remember that reviews are about improving the codebase together, not finding fault.

Providing Specific and Actionable Feedback

Generic comments like "needs improvement" don't help authors understand what to change. Be clear about what isn't working and suggest concrete fixes. For example, rather than just saying "This function is too complex," explain: "This function handles multiple tasks at once. Breaking it into smaller, single-purpose functions would make it easier to read, maintain and test. Here's one way we could split it up..." This gives the author a clear path forward.

Balancing Positive and Negative Feedback

While identifying issues is important, taking time to point out what's working well reinforces good practices and keeps morale high. Start review threads by acknowledging strengths before suggesting improvements. For instance: "The error handling here is really solid. One small suggestion to make it even better..." This balanced approach creates a more constructive review experience and encourages open dialogue.

Handling Disagreements Constructively

Different opinions are normal in code reviews, but how we handle them matters. Skip dismissive responses in favor of curiosity and collaboration. Try phrases like "I see your point about X. I'm wondering if Y might help address the performance concerns?" or "Here's another option to consider - what are your thoughts on this approach?" This turns potential conflicts into chances to find better solutions together.

The Power of Examples

Sometimes the best way to explain an idea is to show it in action. When suggesting different approaches or highlighting concerns, include code samples that demonstrate your points. If you recommend refactoring a function, share a quick example of how you envision the improved version. Concrete examples make feedback clearer and help authors implement changes more effectively.

By applying these review practices thoughtfully, teams can transform code reviews from a basic quality check into a powerful tool for collective growth. Tools like Mergify can help automate parts of the process, letting reviewers focus their energy on providing meaningful, constructive feedback that helps everyone improve.

Using Automation to Enhance Code Reviews

Well-designed automation complements human code reviews rather than replacing them. By handling routine checks automatically, reviewers can focus on what they do best - evaluating code design, architecture, and complex logic. This leads to more thorough reviews and better quality code.

Automated Basic Checks for Better Human Reviews

Think about how much time reviewers spend commenting on simple formatting issues or style violations. These mechanical checks are perfect candidates for automation. By adding tools like linters, formatters, and static analyzers to your continuous integration pipeline, you free up reviewers to focus on more valuable aspects like logic, security, and design decisions. For example, automated tools can instantly catch problems like unused variables, inconsistent spacing, or style guide violations - eliminating the need for humans to point these out.

Setting Up Quality Gates for Consistent Standards

Quality gates in your CI pipeline provide automated enforcement of code standards. These gates verify that pull requests meet specific criteria before they can be merged, such as minimum test coverage levels, acceptable complexity scores, and passing static analysis checks. Think of it as an initial review pass that ensures code meets your team's baseline requirements. For instance, you might require 80% test coverage and zero critical security findings before allowing a merge.

Creating Smooth Workflows with CI/CD Integration

When you integrate automated checks directly into your CI/CD pipeline, you create a seamless review process. As soon as a developer opens a pull request, the pipeline runs all configured checks automatically. Results appear right in the pull request interface, giving immediate feedback to both the author and reviewers. This helps catch issues early when they're easiest to fix. Tools like Mergify can further streamline things by automatically assigning reviewers, adding labels, and even merging approved pull requests that pass all checks.

Selecting and Configuring the Right Tools

The success of automation depends heavily on choosing appropriate tools and configuring them thoughtfully. While excellent options like SonarQube, ESLint, and Prettier exist, pick ones that match your team's specific needs and tech stack. Tools that are too strict or poorly configured can slow development instead of helping it. For example, configuring linters to auto-fix minor style issues can reduce time spent on formatting discussions in reviews.

Using automation effectively shifts code reviews from finding basic errors to improving overall code quality through thoughtful design discussions. This helps teams work faster while maintaining high standards. The result is better code with less technical debt and a team culture focused on continuous improvement.

Measuring and Improving Review Performance

Your pull request review process needs regular assessment and fine-tuning to remain strong. Like tracking code quality metrics helps improve your codebase, evaluating your review workflow helps identify what's working well and what needs improvement. By carefully measuring key indicators and acting on that data, you can spot problems early, make targeted improvements, and help your team deliver better code faster.

Key Metrics for Evaluating Review Effectiveness

Smart teams know that their review process must adapt as their needs change. By tracking specific metrics over time, they can spot patterns and make data-backed decisions about what to improve. Here are some of the most useful metrics to monitor:

- Time to First Review: How quickly does a pull request get its first reviewer feedback? Long delays here often reveal problems that need fixing. For example, if PRs regularly sit for days without initial comments, you may need to adjust reviewer workloads, clarify who should review what, or make reviews a higher priority task.

- Total Review Time: This covers the full span from when a PR opens until it merges. When reviews drag on too long, it usually points to specific issues - maybe PRs are too big, communication is slow, or the back-and-forth process needs streamlining. Watching this metric helps pinpoint where time gets lost.

- Review Depth: While quick reviews matter, thorough reviews matter more. You can gauge review quality by looking at metrics like comments per changed line or issues found per review. This shows if reviewers are truly engaging with the code. Too few comments might mean surface-level reviews, while too many could indicate unclear coding standards.

- Revision Cycles: How many rounds of changes does it take to get a PR ready to merge? Multiple revision cycles often stem from unclear requirements, insufficient testing before review, or communication gaps. Tracking this helps identify where better upfront clarity could speed things up.

Gathering Meaningful Feedback and Identifying Bottlenecks

Numbers tell only part of the story. Getting real input from your team reveals the full picture of how your review process works day-to-day. Regular check-ins about review experiences help uncover hidden problems and point to practical solutions. Simple questions like "What slows down your reviews most?" or "What would make reviewing easier?" often lead to valuable insights.

For example, if multiple reviewers say they feel swamped by too many PRs, you might need to spread the review load more evenly or adjust how much new code comes in at once. Similarly, complaints about inconsistent feedback can show where better guidelines or training would help.

Implementing Improvements That Stick

Making your review process better takes patience and experimentation. The key is to try new approaches thoughtfully, measure if they help, and keep adjusting based on real results. You might test different tools to automate parts of the process, update your review guidelines, or try new ways to assign reviewers.

For instance, if you notice first reviews taking too long, you could try automated reviewer assignment or instant notifications for new PRs. Track whether these changes actually speed up initial reviews and adjust your approach based on what you learn.

This cycle of measuring, testing improvements, and refining your approach helps your review process stay effective as your team grows and changes. It turns code review from a simple quality check into a powerful way to share knowledge and help everyone write better code. Tools like Mergify can handle many routine review tasks automatically, letting your team focus on giving valuable feedback that improves code quality.

Ready to make your code reviews more efficient and effective? See how Mergify can help streamline your workflow and help your team deliver better code.