10 Proven Automated Testing Best Practices: A Complete Guide to Test Automation Excellence

Testing in the Modern Software Development World

Software teams are rapidly moving toward automated testing to meet growing demands for faster releases and higher quality. Survey data illustrates the shift: by 2020, 44% of IT organizations had automated at least half of their testing. This widespread adoption shows that companies now treat automation as essential to effective quality assurance. But succeeding with test automation takes more than picking tools; it requires thoughtful integration throughout the development process.

Combining Human Skills with Automation

The goal isn't to replace manual testers with automated tools. Instead, think of it as a partnership in which each side contributes its strengths. Automated tests excel at repetitive work such as regression testing, and taking that work off testers' plates frees them to focus on tasks that need human insight: exploring edge cases, evaluating usability, and understanding how real users interact with the software. For example, while automated tests efficiently verify that core features still work after changes, human testers can spot subtle usability issues and unexpected scenarios that scripted checks might miss.

Assessing Your Testing Progress

Before expanding your automated testing, take time to understand where your testing stands today. This means looking honestly at your current processes, tools, and team capabilities.

Key areas to examine include:

- Current Automation Coverage: How much of your testing runs automatically? Are you still mostly testing manually, or have you already automated certain test types?

- Tools and Setup: Which testing tools do you use now? Is your testing infrastructure ready to support automated test runs and results tracking?

- Team Knowledge: Does your team know how to create and maintain automated tests? Are they comfortable with the programming languages and frameworks needed for your testing tools?

- Test Scope: How well do your current tests check important features? Which critical areas need better test coverage through automation?

Learning from Others' Experiences

One of the best ways to improve your automated testing is to study how other teams have handled it. Both success stories and cautionary tales offer valuable lessons. For instance, you might learn how one team successfully connected their automated tests to their continuous integration pipeline, helping them catch issues faster. Or you could discover how another group solved the challenge of maintaining complex test suites as their application grew. These real examples help you avoid common problems and apply proven solutions as you build your own automated testing approach. By learning from others while following testing best practices, teams can develop strong testing strategies that improve software quality and speed up delivery.

Building Your Automation Foundation for Success

Creating effective test automation requires more than just picking tools and writing scripts. Like constructing a solid building, you need a strong foundation that can support both your current testing needs and future growth. While learning from others' experiences provides valuable insights, developing your own robust automation strategy means carefully considering your framework choices and best practices.

Choosing the Right Framework Architecture

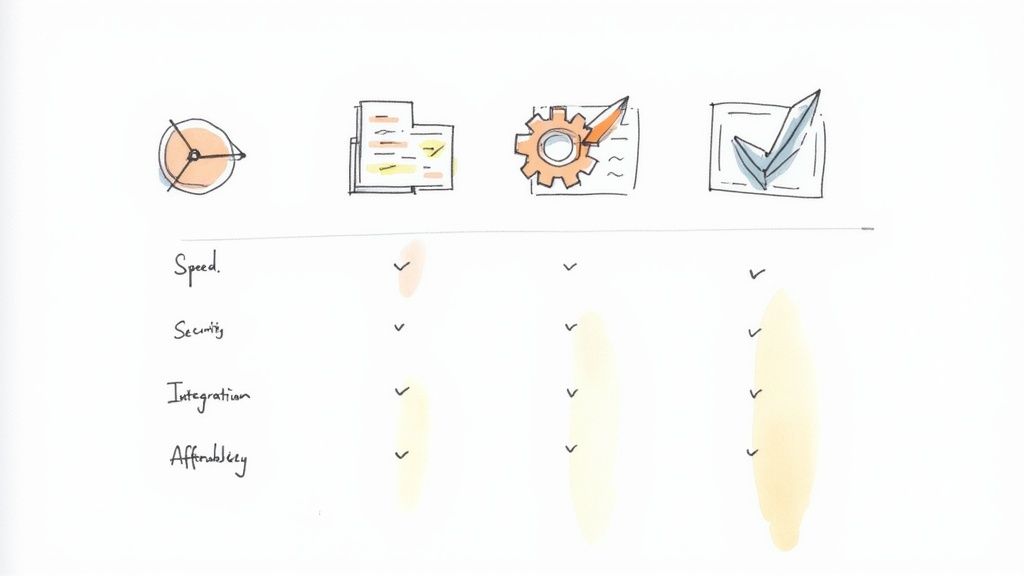

The framework architecture you select shapes how you'll organize tests, handle test data, and interact with your applications. Here are the main approaches to consider, each with its own strengths:

- Linear Scripting: This basic approach uses individual scripts for each test case. While it's simple to start with, it's like building without blueprints - fine for small projects but difficult to scale as your test suite grows.

- Modular Framework: By breaking tests into reusable components, this approach reduces duplicate code and makes maintenance easier. Think of it as using standard building blocks that you can mix and match for different testing needs.

- Data-Driven Framework: This method separates test logic from test data, letting you run the same tests with different inputs. It's similar to having a flexible template that you can use repeatedly with various configurations.

- Keyword-Driven Framework: Using keywords to represent test actions makes scripts more readable for the whole team. It creates a common language that both technical and non-technical team members can understand and work with.

- Hybrid Framework: This approach combines elements from different frameworks to match your specific needs. Like using multiple construction techniques to build the ideal structure, you can pick the best parts of each framework type.

Your choice depends on factors like project scope, team skills, and application complexity. Making the right decision early on helps set up your automation efforts for long-term success.
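To make the data-driven and keyword-driven ideas concrete, here is a minimal hybrid sketch in Python. It assumes a hypothetical shopping-cart object (`FakeCart`) standing in for the real application; the keyword names and data rows are illustrative only and not part of any particular tool. Readable keywords map to reusable functions, and a single scenario runs once per data row.

```python
# Minimal hybrid-framework sketch: keyword-driven steps fed by data-driven rows.
# FakeCart, the keyword names, and the data rows are all hypothetical.


class FakeCart:
    """Stand-in for the application under test."""

    def __init__(self):
        self.items = {}

    def add(self, sku, qty):
        self.items[sku] = self.items.get(sku, 0) + qty

    def remove(self, sku):
        self.items.pop(sku, None)

    def count(self):
        return sum(self.items.values())


# Keyword layer: readable action names mapped to reusable functions.
KEYWORDS = {
    "add item": lambda cart, sku, qty: cart.add(sku, int(qty)),
    "remove item": lambda cart, sku: cart.remove(sku),
}

# One keyword-driven scenario; "{sku}" and "{qty}" are filled from each data row.
CART_SCENARIO = [
    ("add item", "{sku}", "{qty}"),
    ("add item", "BONUS", "1"),
    ("remove item", "BONUS"),
]

# Data layer: the same scenario runs once per row.
DATA_ROWS = [
    {"sku": "A100", "qty": "2", "expected": 2},
    {"sku": "B200", "qty": "5", "expected": 5},
]


def run_scenario(steps, row):
    """Execute each keyword step with placeholders resolved from the data row."""
    cart = FakeCart()
    for keyword, *args in steps:
        resolved = [arg.format(**row) for arg in args]
        KEYWORDS[keyword](cart, *resolved)
    return cart.count()


def run_all():
    """Return (row, passed) pairs for every data row."""
    return [(row, run_scenario(CART_SCENARIO, row) == row["expected"]) for row in DATA_ROWS]


if __name__ == "__main__":
    for row, passed in run_all():
        print(row["sku"], "PASS" if passed else "FAIL")
```

Adding a new input is a one-line change to `DATA_ROWS`, and a new action is one entry in `KEYWORDS`, which is where hybrid designs tend to pay off as a suite grows.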

Implementing Maintainable Practices

After choosing your framework, focus on practices that keep your test suite stable and reliable as it grows. Here are key approaches that make a real difference:

- Clear Naming Conventions: Use consistent, descriptive names for test cases, variables, and functions. Good naming helps everyone understand the purpose and structure of your tests at a glance.

- Version Control: Using Git helps track changes and enables smooth team collaboration. It's like having a detailed project history that shows how your test suite has evolved over time.

- Code Reviews: Regular peer reviews catch potential issues early and maintain high standards. Just as building inspections ensure quality construction, code reviews help ensure your tests are well-built and reliable.

- Documentation: Keep clear records of your framework design, test cases, and processes. Good documentation helps new team members get up to speed quickly and serves as a valuable reference for the whole team.

These practices help reduce flaky tests, lower maintenance costs, and ensure your automated testing continues to provide reliable feedback throughout development. By investing time in these foundational elements, you create a testing framework that serves your needs today and adapts well to future changes.

Maximizing Coverage Without Sacrificing Speed

Teams often struggle to balance thorough testing with fast feedback cycles. While some successful teams achieve 73% automation coverage, getting there requires careful planning and smart choices about what and how to automate. Let's explore practical ways to expand test coverage while keeping execution times quick.

Prioritizing Your Testing Efforts

The key is understanding that not every test carries equal weight. Critical user flows deserve more attention than edge cases that rarely affect users. Take a banking app as an example - testing money transfers should take priority over verifying statement formatting. By focusing on business-critical features first, teams can make the most of their testing resources and ensure core functionality works reliably.
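One lightweight way to apply this is to tag each test with a priority tier and choose which tiers run at each stage. The sketch below assumes three hypothetical tiers and borrows the banking example for its test names; in a real suite, tiers would usually be expressed through your test runner's own tagging mechanism.

```python
from dataclasses import dataclass

# Hypothetical tiers: a lower number means more business-critical.
PRIORITY = {"critical": 0, "high": 1, "low": 2}


@dataclass
class PlannedTest:
    name: str
    tier: str  # must be one of the keys in PRIORITY


SUITE = [
    PlannedTest("statement_pdf_formatting", "low"),
    PlannedTest("transfer_between_own_accounts", "critical"),
    PlannedTest("login_with_valid_credentials", "critical"),
    PlannedTest("update_notification_preferences", "high"),
]


def select_tests(suite, max_tier):
    """Return tests at least as critical as max_tier, most critical first."""
    limit = PRIORITY[max_tier]
    chosen = [t for t in suite if PRIORITY[t.tier] <= limit]
    # sorted() is stable, so tests within the same tier keep their original order.
    return sorted(chosen, key=lambda t: PRIORITY[t.tier])


if __name__ == "__main__":
    print([t.name for t in select_tests(SUITE, "critical")])
```

A pipeline might run `select_tests(SUITE, "critical")` on every commit and the full `"low"` selection nightly; where to draw that line is a judgment call for each team.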

Identifying High-Value Automation Candidates

Choosing what to automate is just as important as writing the tests themselves. The best targets are tests that are repetitive, time-consuming, or prone to human error when done manually. Regression testing is a perfect example - automating these checks ensures code changes don't break existing features. This strategic approach frees up manual testers to focus on exploratory testing and other tasks where human judgment adds the most value.
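A rough break-even estimate can help decide whether a repetitive check is worth automating. The model below is a simplifying assumption: it counts only hours, treats the build cost as one-off, and ignores benefits such as fewer human errors. Automation becomes cheaper once the number of runs n satisfies n × (manual hours per run − maintenance hours per run) > build hours.

```python
import math
from fractions import Fraction


def break_even_runs(build_hours, maintenance_hours_per_run, manual_hours_per_run):
    """Smallest number of runs after which automation is cheaper than manual testing.

    Simplified, hours-only model (an assumption):
      automated cost after n runs = build_hours + n * maintenance_hours_per_run
      manual cost after n runs    = n * manual_hours_per_run
    """
    # Fractions avoid float rounding flipping the answer at exact boundaries.
    build = Fraction(build_hours)
    saving_per_run = Fraction(manual_hours_per_run) - Fraction(maintenance_hours_per_run)
    if saving_per_run <= 0:
        return None  # under this model, automation never pays off
    # First integer n strictly greater than build / saving_per_run.
    return math.floor(build / saving_per_run) + 1


if __name__ == "__main__":
    # Hypothetical figures: 16 h to build, 15 min upkeep per run, 2 h per manual run.
    print(break_even_runs(16, 0.25, 2))  # 10
```

The more often a check repeats, the sooner it crosses this threshold, which is why frequently rerun regression checks tend to be the first candidates.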

Maintaining Speed and Efficiency in Automated Testing

Speed matters as much as coverage. Running tests in parallel can dramatically cut execution time. Well-designed tests also help - keep them focused on specific features and avoid unnecessary dependencies. It's like tuning a race car - extra weight only slows you down. By staying lean and efficient, tests can provide quick feedback without sacrificing thoroughness.
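The sketch below shows the basic idea with Python's standard `concurrent.futures` module. The tests are hypothetical placeholders that sleep to imitate I/O-bound work such as waiting on a browser or an API; because they share no state, they can safely run side by side. Threads suit I/O-bound tests like these, while CPU-heavy suites usually need separate processes or machines.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def make_test(name, seconds):
    """Create a hypothetical independent test that waits, imitating I/O-bound work."""
    def test():
        time.sleep(seconds)
        return name, True  # (test name, passed)
    return test


TESTS = [make_test(f"test_{i}", 0.1) for i in range(8)]


def run_serial(tests):
    """Run tests one after another."""
    return [test() for test in tests]


def run_parallel(tests, workers=4):
    """Run tests concurrently; pool.map returns results in the original order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda test: test(), tests))


if __name__ == "__main__":
    for runner in (run_serial, run_parallel):
        start = time.perf_counter()
        results = runner(TESTS)
        elapsed = time.perf_counter() - start
        print(f"{runner.__name__}: {elapsed:.2f}s, all passed: {all(ok for _, ok in results)}")
```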

Measuring Testing Effectiveness

Regular monitoring helps keep testing on track. Key metrics like execution speed, coverage levels, and bug detection rates show if your strategy is working. For example, if automated tests keep missing important bugs, that's a clear sign to adjust your approach. Track the business impact too - looking at reduced manual testing needs and fewer production issues helps justify automation investments. This creates a cycle of continuous improvement, helping you refine coverage while maintaining quick feedback loops.

The Role of AI in Test Automation

Strong test automation needs more than just solid foundations and good coverage - it requires staying current with new technology. Many teams are now exploring how artificial intelligence can make their testing more efficient and effective. Let's look at how AI is changing key aspects of test automation.

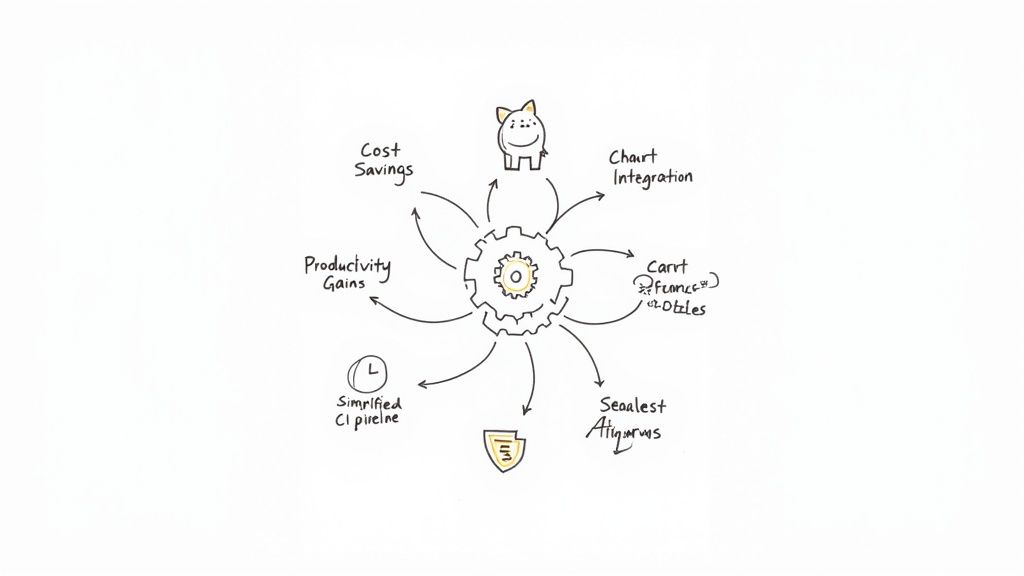

How AI Helps Maintain Tests

Test maintenance can be a major headache as applications evolve. AI helps solve this by automating many maintenance tasks. For instance, self-healing tests can automatically detect and fix broken element locators when the UI changes. This means less time spent on manual updates and more reliable tests, even as the application continues to change. AI can also monitor code changes to spot potential test impacts early, helping teams address issues proactively.
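Self-healing tools typically weigh many element attributes, often with machine learning, to pick a replacement locator, so the following is only a simplified, non-AI illustration of the underlying fallback idea. It assumes a hypothetical page represented as a dictionary from locator strings to elements; a real implementation would query the DOM through a browser driver. When the primary locator breaks, a fallback is used and the substitution is logged for review.

```python
class ElementNotFound(Exception):
    """Raised when no locator in the candidate list matches."""


def find_element(page, locators, healing_log):
    """Return the element for the first matching locator.

    page: hypothetical mapping of locator string -> element (stands in for a DOM query).
    locators: primary locator first, then fallbacks in order of preference.
    healing_log: receives (broken_primary, replacement) whenever a fallback is used.
    """
    primary = locators[0]
    for locator in locators:
        if locator in page:
            if locator != primary:
                healing_log.append((primary, locator))
            return page[locator]
    raise ElementNotFound(f"no locator matched: {locators}")


# After a redesign the button's id changed, but its test attribute and text did not.
PAGE_AFTER_REDESIGN = {
    "[data-testid='submit']": "<button id='place-order'>",
    "text=Submit order": "<button id='place-order'>",
}
SUBMIT_LOCATORS = ["#submit-btn", "[data-testid='submit']", "text=Submit order"]

if __name__ == "__main__":
    log = []
    print(find_element(PAGE_AFTER_REDESIGN, SUBMIT_LOCATORS, log))
    print(log)  # [('#submit-btn', "[data-testid='submit']")]
```

Reviewing the healing log still matters: a healed locator keeps the run green, but the test source should be updated so the fallback does not quietly mask a real UI regression.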

Smarter Test Execution

AI brings intelligence to how tests are run. By studying past test results and code changes, AI tools can identify which tests to run first based on risk level. This targeted approach helps catch important bugs faster while reducing overall test runtime. This is especially helpful for teams using Jenkins or other CI/CD tools where quick feedback matters. AI can also help optimize test environments for more consistent results.
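As a simplified stand-in for what AI-driven tools learn from data, the heuristic below ranks tests by historical failure rate plus a bonus when a test covers a file changed in the current commit. The scoring formula, the weight, and the `HistoryEntry` fields are assumptions for illustration; learning-based tools infer these relationships rather than hard-coding them.

```python
from dataclasses import dataclass, field


@dataclass
class HistoryEntry:
    name: str
    runs: int
    failures: int
    covered_files: set = field(default_factory=set)  # source files this test exercises


def risk_score(entry, changed_files, change_weight=1.0):
    """Hypothetical heuristic: failure rate plus a bonus for touching changed code."""
    failure_rate = entry.failures / entry.runs if entry.runs else 0.0
    touches_change = 1.0 if entry.covered_files & changed_files else 0.0
    return failure_rate + change_weight * touches_change


def prioritize(history, changed_files):
    """Order tests so the riskiest run first."""
    return sorted(history, key=lambda e: risk_score(e, changed_files), reverse=True)


HISTORY = [
    HistoryEntry("test_transfer", runs=100, failures=10, covered_files={"payments.py", "ledger.py"}),
    HistoryEntry("test_profile_page", runs=50, failures=0, covered_files={"profile.py"}),
    HistoryEntry("test_login", runs=100, failures=2, covered_files={"auth.py"}),
]

if __name__ == "__main__":
    print([e.name for e in prioritize(HISTORY, changed_files={"auth.py"})])
    # ['test_login', 'test_transfer', 'test_profile_page']
```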

Better Test Result Analysis

AI shines at finding patterns in test data that humans might miss. Machine learning algorithms can process large amounts of test results to identify unstable tests, regression-prone areas, and potential future issues based on historical trends. This helps teams make smarter decisions about where to focus their testing efforts. Rather than just tracking pass/fail rates, teams get deeper insights to guide quality improvements.
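A basic signal behind many flaky-test detectors is a test that both passes and fails on the same commit: the code did not change, yet the outcome did. The sketch below applies that single rule to a hypothetical list of result tuples; machine learning approaches combine many more signals, but this shows the kind of pattern they look for.

```python
from collections import defaultdict


def find_flaky_tests(results):
    """Return names of tests that both passed and failed on the same commit.

    results: iterable of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for name, commit, passed in results:
        outcomes[(name, commit)].add(passed)
    return sorted({name for (name, _commit), seen in outcomes.items() if len(seen) > 1})


RESULTS = [
    ("test_checkout", "a1b2c3", True),
    ("test_checkout", "a1b2c3", False),  # same commit, different outcome
    ("test_login", "a1b2c3", True),
    ("test_login", "d4e5f6", False),     # failed only after a new commit
    ("test_search", "d4e5f6", True),
]

if __name__ == "__main__":
    print(find_flaky_tests(RESULTS))  # ['test_checkout']
```

Here `test_login` is not flagged: it failed on a different commit, which points to a possible real regression rather than flakiness.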

Setting Up for AI Testing Success

To make the most of AI in testing, you need the right foundation. Key things to focus on include:

- Data Collection: Gather detailed test data that AI can learn from - including logs, results, and execution metrics

- Tool Selection: Choose AI testing tools that fit your needs and work well with your current testing setup

- Team Training: Help your team build the skills to effectively use and maintain AI-powered testing tools
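For data collection, a consistent per-run record is a practical starting point. The sketch below assumes a hypothetical schema (`RunRecord` and its field names are illustrative, not a standard) and stores one JSON object per line, a format that is easy to append to from CI jobs and to load later for analysis.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class RunRecord:
    """One test execution; the field names are an illustrative schema."""
    test_name: str
    commit_sha: str
    passed: bool
    duration_seconds: float
    log_excerpt: str = ""


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in records)


def from_jsonl(text):
    """Parse JSON Lines back into RunRecord objects, skipping blank lines."""
    return [RunRecord(**json.loads(line)) for line in text.splitlines() if line.strip()]


if __name__ == "__main__":
    records = [
        RunRecord("test_checkout", "a1b2c3", False, 4.2, "TimeoutError waiting for #pay"),
        RunRecord("test_login", "a1b2c3", True, 1.1),
    ]
    print(to_jsonl(records))
```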

While AI can significantly improve test automation, it works best as a complement to human expertise rather than a replacement. The key is using AI strategically to enhance your testing process while still relying on your team's knowledge and judgment. When implemented thoughtfully, AI can help teams test more efficiently and deliver better quality software.

Measuring Success Through Meaningful Metrics

Understanding the real impact of your automated testing requires more than just tracking basic pass/fail rates. While building a strong foundation and maximizing test coverage are crucial first steps, you need concrete data to optimize your testing strategy effectively. The right metrics help demonstrate value to stakeholders and guide continuous improvement of your automation efforts.

Key Performance Indicators for Automated Testing

Let's explore the essential metrics that reveal how well your automated testing strategy is performing. These key performance indicators (KPIs) provide clear data to identify areas for improvement and showcase the business benefits of your automation investments.

- Test Coverage: This basic but vital metric shows what percentage of your codebase has automated test coverage. While many teams aim for high overall coverage (some achieve 73% or more), it's smarter to focus first on thoroughly testing your core features and critical user paths. Quality coverage of key functionality matters more than hitting an arbitrary percentage target.

- Test Execution Time: The speed of your test runs directly affects development velocity. Track how long your test suites take to complete, because slow runs create development bottlenecks and delay releases. Techniques such as running tests in parallel can dramatically cut execution time and keep feedback fast for your team.

- Defect Detection Rate: This metric reveals how effectively your automated tests catch bugs before they reach production. When your tests consistently find issues early (like an 80% detection rate), it shows you're properly covering critical functionality. Early bug detection means fewer customer-facing issues and reduced costs from production problems.

- Maintenance Cost: As your codebase evolves, automated tests need ongoing updates. Track the time your team spends maintaining existing tests versus creating new ones. Lower maintenance overhead leaves more resources for expanding test coverage, and pairing this figure with the manual testing effort automation saves helps justify continued investment.

- Automation ROI: The ultimate measure is business impact - faster releases, fewer production issues, and reduced manual testing effort. Tracking these outcomes proves the value of your automation strategy to leadership and guides future investments.
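Several of these KPIs reduce to simple ratios. The sketch below assumes hypothetical per-release counts: defect detection rate is computed as defects caught by tests divided by all defects (caught plus escaped to production), and maintenance share as maintenance hours over all test-authoring hours. Teams define these slightly differently, so treat the formulas as one reasonable convention.

```python
import statistics
from dataclasses import dataclass


@dataclass
class ReleaseStats:
    defects_caught_by_tests: int    # found by automated tests before release
    defects_escaped: int            # first reported in production
    maintenance_hours: float        # time spent fixing or updating existing tests
    new_test_hours: float           # time spent writing new tests
    suite_durations_seconds: list   # recent full-suite run times


def defect_detection_rate(s):
    """Caught / (caught + escaped); 0.0 when no defects were recorded."""
    total = s.defects_caught_by_tests + s.defects_escaped
    return s.defects_caught_by_tests / total if total else 0.0


def maintenance_share(s):
    """Fraction of test-authoring time spent maintaining existing tests."""
    total = s.maintenance_hours + s.new_test_hours
    return s.maintenance_hours / total if total else 0.0


def median_suite_time(s):
    """The median is less sensitive than the mean to one unusually slow run."""
    return statistics.median(s.suite_durations_seconds)


if __name__ == "__main__":
    stats = ReleaseStats(40, 10, 12.0, 28.0, [610, 580, 655])  # hypothetical figures
    print(f"defect detection rate: {defect_detection_rate(stats):.0%}")  # 80%
    print(f"maintenance share: {maintenance_share(stats):.0%}")          # 30%
    print(f"median suite time: {median_suite_time(stats)} s")            # 610 s
```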

Establishing a Measurement Framework

To build an effective measurement system, start by defining clear goals for your automation program. Choose metrics that align with those objectives and consistently track them over time. Use dashboards to visualize trends and share progress with stakeholders.

Meet regularly with your team to review the data and spot areas needing attention. For instance, if bug detection rates drop, you may need to update existing tests or add coverage for new features. This ongoing cycle of measurement and improvement helps you maximize the benefits of your automated testing investment. Regular metric reviews ensure your strategy stays effective and continues delivering value to the business.

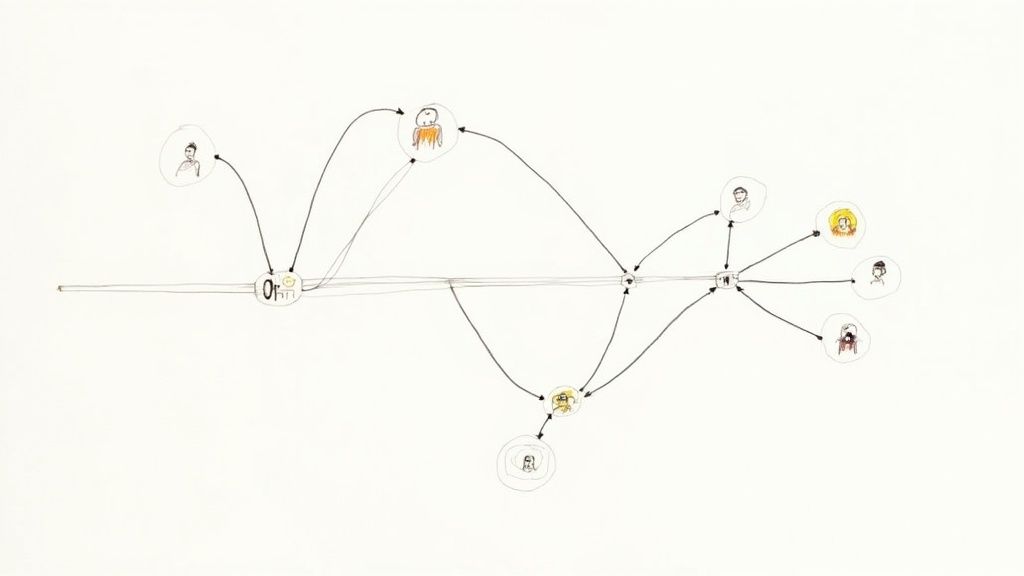

Integrating Automation Into Your Development Pipeline

Automated testing is essential for any modern development pipeline. When properly integrated into Continuous Integration/Continuous Delivery (CI/CD) workflows, it provides quick feedback and helps maintain quality standards. Rather than waiting to catch issues in production, teams can identify and fix bugs early in the development cycle when they're less expensive and time-consuming to address. The data supports this approach - companies that effectively integrate automated testing typically catch up to 80% of defects before code reaches production environments.

Strategies for Seamless Integration

To smoothly integrate automated testing into your workflow:

- Test Parallelization: Running multiple tests simultaneously dramatically speeds up the testing process. Think of it like assembly line manufacturing versus building one product at a time - parallel testing lets you process more tests faster. Tools like Jenkins make it easy to split testing across different machines.

- Environment Management: Just as scientific experiments need controlled conditions, automated tests require consistent environments to produce reliable results. Creating test environments automatically from a shared definition (for example, with container images) prevents environment-specific issues from skewing your test results.

- Centralized Reporting: Having all your test results in one place makes it simple to spot problems quickly. Rather than hunting through different systems and reports, a single dashboard gives you an immediate view of your software's health and testing status.
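Splitting tests across machines works best when each machine gets a similar amount of work. The sketch below uses a common greedy heuristic (longest tests first, each assigned to the currently lightest shard) over hypothetical historical durations. How each CI agent learns which shard to run depends on your CI tool and is not shown here.

```python
import heapq


def shard_by_duration(durations, shard_count):
    """Greedy longest-first assignment of tests to shards.

    durations: mapping of test name -> historical runtime in seconds (hypothetical data).
    Returns a list of (total_seconds, [test names]) tuples, one per shard.
    """
    heap = [(0.0, index) for index in range(shard_count)]  # (current load, shard index)
    shards = [[] for _ in range(shard_count)]
    totals = [0.0] * shard_count
    for name, seconds in sorted(durations.items(), key=lambda item: item[1], reverse=True):
        load, index = heapq.heappop(heap)  # currently lightest shard
        shards[index].append(name)
        totals[index] = load + seconds
        heapq.heappush(heap, (totals[index], index))
    return list(zip(totals, shards))


DURATIONS = {
    "test_checkout": 120, "test_search": 90, "test_login": 60,
    "test_profile": 45, "test_logout": 30, "test_settings": 15,
}

if __name__ == "__main__":
    for total, names in shard_by_duration(DURATIONS, 2):
        print(f"{total:.0f}s: {names}")
```

With these sample durations both shards total 180 seconds, so wall-clock time roughly halves compared with running all 360 seconds on one machine.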

Optimizing Execution Efficiency

Beyond basic integration, you'll want to optimize how your tests run:

- Prioritize Business-Critical Tests: Start by automating tests for your core features and critical user paths. While high overall test coverage is great (some teams achieve 73% or more), focusing first on the most important functionality ensures you're testing what matters most.

- Optimize Test Suite Design: Build tests that are focused and independent, following the same principles you use for clean code. This approach makes tests run faster and makes it easier to find and fix issues when they occur.

- Leverage Caching Mechanisms: Speed up test runs by caching frequently used data and dependencies. Similar to how browsers cache web pages, your testing tools can save time by reusing common resources instead of loading them repeatedly.
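For dependency caching, a common pattern is to derive the cache key from a hash of the dependency lockfile, so the cache is reused until dependencies actually change. The sketch below assumes an in-memory dictionary as a stand-in for a CI cache service; the key format and the `DependencyCache` class are hypothetical.

```python
import hashlib


def dependency_cache_key(lockfile_bytes, os_name, prefix="deps"):
    """Cache key that changes only when the lockfile contents (or the OS) change."""
    digest = hashlib.sha256(lockfile_bytes).hexdigest()[:16]
    return f"{prefix}-{os_name}-{digest}"


class DependencyCache:
    """In-memory stand-in for a CI cache service (hypothetical)."""

    def __init__(self):
        self._store = {}
        self.installs = 0  # how many times the slow install step actually ran

    def get_or_install(self, key, install):
        """Return the cached environment for key, running install() only on a miss."""
        if key not in self._store:
            self._store[key] = install()
            self.installs += 1
        return self._store[key]


if __name__ == "__main__":
    cache = DependencyCache()
    lockfile = b"example-package==1.2.3\n"
    for _ in range(3):  # three CI runs with unchanged dependencies
        key = dependency_cache_key(lockfile, "linux")
        cache.get_or_install(key, lambda: "installed environment")
    print(cache.installs)  # 1
```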

Addressing Integration Challenges

Common challenges when integrating automated testing include:

- Complex Dependencies: When different services and systems need to work together, testing becomes more complicated. Use service virtualization and mocking to isolate components and maintain stable tests.

- Legacy Systems: Older systems often resist automation efforts. Focus on API testing or create wrapper code around legacy components to make them more testable.
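The two techniques can work together: wrap a legacy component behind a small adapter, then replace the legacy dependency with a mock in tests. The sketch below uses Python's standard `unittest.mock`; `LegacyBillingClient`, its pipe-delimited record format, and the `"OK"` reply convention are all hypothetical.

```python
from unittest.mock import Mock


class LegacyBillingClient:
    """Hypothetical legacy component; its unusual method name is kept as-is."""

    def SUBMIT_TXN(self, record):
        raise RuntimeError("requires the production billing host")


class BillingAdapter:
    """Thin wrapper that gives the legacy client a small, testable interface."""

    def __init__(self, legacy_client):
        self._legacy = legacy_client

    def charge(self, account_id, amount_cents):
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        # Assumed legacy protocol: pipe-delimited record in, "OK" or an error code out.
        reply = self._legacy.SUBMIT_TXN(f"{account_id}|{amount_cents}")
        return reply == "OK"


def test_charge_sends_formatted_record():
    legacy = Mock(spec=LegacyBillingClient)  # spec rejects misspelled attribute names
    legacy.SUBMIT_TXN.return_value = "OK"
    assert BillingAdapter(legacy).charge("acct-7", 1250) is True
    legacy.SUBMIT_TXN.assert_called_once_with("acct-7|1250")


def test_charge_reports_legacy_error_code():
    legacy = Mock(spec=LegacyBillingClient)
    legacy.SUBMIT_TXN.return_value = "E42"
    assert BillingAdapter(legacy).charge("acct-7", 1250) is False
```

A runner such as pytest collects the two `test_` functions automatically, and the real legacy client is never called.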

Following these guidelines helps teams successfully add automated testing to their development pipeline while maintaining quick feedback cycles and high quality standards.

Ready to improve your development workflow? Mergify helps teams reduce CI costs and enhance code quality through automated merge queue management, CI monitoring, and smart merge scheduling.