Performance Testing Strategies That Actually Work: A Modern Guide to Application Success

Building a Foundation for Performance Success

A solid performance testing approach needs to be woven into your development process from the start. Just as a house needs strong structural support before adding rooms and features, your application requires ongoing performance validation to stay stable as it grows. Taking this proactive stance, rather than scrambling to fix issues later, is what helps applications thrive under real-world usage.

Why Early Testing Is Crucial

Starting performance testing early saves significant time and money down the road. The economics are compelling: according to the Standish Group's research, fixing performance problems after release can cost up to 100 times more than addressing them during development. Think of it like maintaining your car: regular oil changes and tune-ups prevent expensive engine repairs later. Small, early investments in testing can prevent major headaches and budget overruns once your application is live and serving real users.

Implementing Automated Testing Frameworks

Manual performance testing quickly becomes impossible at scale. Consider trying to simulate thousands of concurrent users hitting your application: you simply can't replicate that by hand. This is where automated testing frameworks prove essential. By running performance checks automatically on every code change, your team can catch regressions as soon as they are introduced, well before they reach production. The time saved through automation lets developers focus on building features rather than running tests by hand. But automation only works when the tests are thoughtfully designed and consistently maintained.
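To make this concrete, here is a minimal Python sketch of an automated check that a CI job could run after each load test. It assumes the load tool's latencies (in milliseconds) and error counts have already been exported; the `gate` function and both thresholds are hypothetical placeholders, not part of any specific tool.

```python
import statistics
import sys

# Hypothetical thresholds: derive real ones from your own benchmarks.
P95_LIMIT_MS = 800.0
MAX_ERROR_RATE = 0.01


def p95(latencies_ms: list[float]) -> float:
    """95th percentile: quantiles(n=20) returns 19 cut points, index 18 is the 95th."""
    return statistics.quantiles(latencies_ms, n=20, method="inclusive")[18]


def gate(latencies_ms: list[float], errors: int, total: int) -> int:
    """Return a process exit code: 0 if the run meets the thresholds, 1 otherwise."""
    failures = []
    observed = p95(latencies_ms)
    if observed > P95_LIMIT_MS:
        failures.append(f"p95 {observed:.0f} ms exceeds {P95_LIMIT_MS:.0f} ms")
    error_rate = errors / total
    if error_rate > MAX_ERROR_RATE:
        failures.append(f"error rate {error_rate:.2%} exceeds {MAX_ERROR_RATE:.2%}")
    for message in failures:
        print(f"performance gate failed: {message}", file=sys.stderr)
    return 1 if failures else 0


# Example run with made-up latencies; a CI job would call sys.exit(gate(...)).
sample = [120.0, 135.0, 150.0, 180.0, 210.0] * 20
print("exit code:", gate(sample, errors=0, total=len(sample)))
```

Returning an exit code rather than raising keeps the check usable from any CI system, since a nonzero exit status is what most pipelines treat as a failed step.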

Choosing the Right Tools and Techniques

Finding the right performance testing tools makes a big difference in your testing success. Popular open-source options like JMeter, Gatling, and k6 each have different strengths: JMeter provides a GUI for building test plans and supports a wide range of protocols, while Gatling and k6 define tests as code (Gatling in Scala, Java, or Kotlin; k6 in JavaScript), which makes them easy to version alongside your application. The key is matching the tool to your application's needs and your team's skills. These tools help track critical indicators like response times, throughput, and error rates, and integrating them into your continuous integration pipeline helps catch performance regressions early.
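Response times, throughput, and error rates can all be derived from a tool's raw per-request results. The sketch below assumes a hypothetical record format (start time, latency, HTTP status) and counts any status of 400 or above as an error; real tools export richer data and may define errors differently.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One request from a load test (hypothetical export format)."""
    start_s: float     # seconds since the test began
    latency_ms: float  # response time in milliseconds
    status: int        # HTTP status code


def summarize(samples: list[Sample]) -> dict[str, float]:
    """Throughput, error rate, and mean response time for one test run."""
    # Duration is approximated by the span between the first and last request start.
    duration_s = max(s.start_s for s in samples) - min(s.start_s for s in samples)
    errors = sum(1 for s in samples if s.status >= 400)
    return {
        "throughput_rps": len(samples) / duration_s if duration_s > 0 else float("nan"),
        "error_rate": errors / len(samples),
        "mean_latency_ms": sum(s.latency_ms for s in samples) / len(samples),
    }


run = [Sample(0.0, 100.0, 200), Sample(0.5, 200.0, 200),
       Sample(1.0, 300.0, 500), Sample(2.0, 400.0, 200)]
print(summarize(run))
```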

Avoiding Common Pitfalls

Building effective performance testing takes more than running basic load tests. Many teams focus solely on normal traffic levels while neglecting stress tests for traffic spikes and endurance tests for sustained high usage. Creating realistic test scenarios based on actual user behavior patterns helps avoid misleading results. Addressing these gaps directly helps keep your system responsive even under unexpected conditions.

Choosing Metrics That Drive Real Results

With your performance testing foundation in place, it's time to focus on the metrics that truly matter. Raw data collection alone won't cut it - you need to zero in on measurements that directly reflect how users experience your application and how that impacts your business goals. Let's explore how to move past surface-level metrics to ones that drive real improvements.

Identifying Key Performance Indicators (KPIs)

The right KPIs act as a compass for your testing efforts by connecting technical measurements to business outcomes. Take an e-commerce site, for example - tracking the average checkout completion time reveals more than just speed. A sluggish payment process often leads to abandoned carts and missed sales opportunities. For social platforms, metrics like session length and interaction rates may matter more than pure server response times. The key is understanding which indicators best capture user satisfaction and business success in your specific context.

Setting Meaningful Benchmarks

After selecting your KPIs, you'll need clear targets to measure against. These benchmarks should come from three key sources: proven industry standards, analysis of your competitors, and, most crucially, what your users expect. A commonly cited rule of thumb from user-experience research is that pages should load in about two seconds or less; missing that mark risks losing visitors and damaging trust in your brand. Strong benchmarks keep your testing grounded in the real needs of your users rather than arbitrary goals.

Avoiding Common Measurement Traps

Watch out for common pitfalls as you develop your testing approach. One frequent mistake is getting lost in technical metrics like CPU usage without connecting them to the user's actual experience. High server load doesn't automatically mean poor performance if users still see quick response times. Another oversight is forgetting to account for different user segments. Mobile users often face different constraints than desktop users due to network limitations. Build your testing to reflect these varied scenarios to get an accurate picture of how your application performs across all use cases.
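Segmenting results is straightforward once each sample is tagged. Below is a minimal sketch assuming page-load samples labeled with a hypothetical segment name; it reports each segment's 90th percentile against the two-second target using Python's `statistics.quantiles`. The sample values are invented for illustration.

```python
import statistics
from collections import defaultdict

PAGE_LOAD_BUDGET_MS = 2000.0  # assumed target, based on the two-second guideline


def p90_by_segment(samples: list[tuple[str, float]]) -> dict[str, float]:
    """Group (segment, load_ms) samples and return each segment's 90th percentile."""
    groups: dict[str, list[float]] = defaultdict(list)
    for segment, load_ms in samples:
        groups[segment].append(load_ms)
    # quantiles(n=10) yields 9 cut points; index 8 is the 90th percentile.
    # method="inclusive" keeps estimates within the observed range.
    return {
        segment: statistics.quantiles(values, n=10, method="inclusive")[8]
        for segment, values in groups.items()
    }


samples = [("desktop", ms) for ms in (800, 900, 1000, 1100, 1200)] + [
    ("mobile", ms) for ms in (1500, 1800, 2100, 2600, 3200)
]
for segment, p90 in p90_by_segment(samples).items():
    verdict = "OK" if p90 <= PAGE_LOAD_BUDGET_MS else "OVER BUDGET"
    print(f"{segment}: p90 = {p90:.0f} ms ({verdict})")
```

A blended percentile over both groups would hide the fact that only the mobile segment misses the target, which is exactly the blind spot described above.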

Presenting Results to Stakeholders

The final piece is communicating your findings effectively to drive action. Raw numbers rarely inspire change on their own - you need to present data in ways that resonate with both technical and business stakeholders. Visual tools like trend graphs can powerfully illustrate progress, such as showing how optimization work has steadily improved load times across releases. Clear, compelling communication helps build support for performance initiatives and creates momentum for continued improvements. When everyone understands the impact of performance testing, it becomes a natural part of the development culture.

Mastering the Three Pillars of Performance Testing

Once you've set clear performance metrics and benchmarks, it's time to dive into the three essential testing strategies that work together to fully evaluate your application's capabilities. Load testing, stress testing, and endurance testing each play a unique role in uncovering different aspects of performance. Understanding how these approaches complement each other helps build applications that consistently perform well under real conditions. Let's explore how each type of testing contributes to a complete performance evaluation.

Load Testing: Simulating Real-World Usage

Load testing recreates typical daily usage patterns to verify that your application performs well under normal conditions. Start with how users actually interact with your application: How many people use it simultaneously? What actions do they take, and how often? For instance, an e-commerce site might test hundreds of concurrent users browsing products, adding items to carts, and completing purchases. This reveals whether the application can smoothly handle everyday traffic levels and helps identify where bottlenecks might form during regular use.
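Dedicated tools handle this at scale, but the core idea of a load test (many virtual users running a journey concurrently while latencies are recorded) fits in a short Python sketch. `fake_request` is a hypothetical stand-in that sleeps instead of calling a real server, so the numbers it produces are not meaningful on their own.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(action: str) -> float:
    """Hypothetical stand-in for an HTTP call; returns the observed latency in ms."""
    start = time.perf_counter()
    time.sleep(random.uniform(0.001, 0.005))  # simulated server work for `action`
    return (time.perf_counter() - start) * 1000


def virtual_user(journey: list[str]) -> list[float]:
    """One simulated user performing each step of a journey in order."""
    return [fake_request(step) for step in journey]


def run_load_test(concurrent_users: int, journey: list[str]) -> list[float]:
    """Run `concurrent_users` virtual users at once and collect every latency."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        futures = [pool.submit(virtual_user, journey) for _ in range(concurrent_users)]
        return [latency for future in futures for latency in future.result()]


latencies = run_load_test(50, ["browse", "add_to_cart", "checkout"])
print(f"{len(latencies)} requests, slowest {max(latencies):.1f} ms")
```

Threads suit this I/O-bound simulation; a real test would replace `fake_request` with an HTTP client call and add realistic pauses between steps.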

Stress Testing: Pushing the Limits

While load testing examines normal conditions, stress testing deliberately overloads your application to find its breaking point. This process steadily increases traffic and usage beyond expected levels, similar to testing a bridge's maximum weight capacity. For example, a social media platform might simulate the sudden spike in activity that occurs when content goes viral. This helps reveal how the system behaves under extreme pressure and where it starts to fail. The insights gained from stress testing guide decisions about infrastructure scaling and help teams prepare for unexpected traffic surges.
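A stress test is often run as a step ramp: increase the load in fixed increments and stop at the first level where errors exceed an acceptable rate. The sketch below shows that control loop against a toy capacity model; `simulated_error_rate` and its 450 requests-per-second capacity are hypothetical, and in practice each step would be a real test run.

```python
from typing import Optional

CAPACITY_RPS = 450  # hypothetical capacity of the simulated system


def simulated_error_rate(rps: int) -> float:
    """Toy model: every request beyond capacity fails. Replace with a real test run."""
    if rps <= CAPACITY_RPS:
        return 0.0
    return (rps - CAPACITY_RPS) / rps


def find_breaking_point(start_rps: int, step_rps: int, max_rps: int,
                        max_error_rate: float = 0.05) -> Optional[int]:
    """Raise load step by step; return the first level whose error rate exceeds the limit."""
    rps = start_rps
    while rps <= max_rps:
        error_rate = simulated_error_rate(rps)
        print(f"{rps:>5} rps -> error rate {error_rate:.1%}")
        if error_rate > max_error_rate:
            return rps
        rps += step_rps
    return None  # no breaking point found within the tested range


print("breaking point:", find_breaking_point(100, 50, 1000))
```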

Endurance Testing: The Long Haul

Endurance testing, also called soak testing, examines how your application performs during extended periods of continuous use. Like a marathon runner who needs to maintain steady performance over many hours, your application needs to stay stable during long periods of activity. This testing approach uncovers issues that only surface over time, such as memory leaks or gradual slowdowns. A financial system, for example, might run several days of non-stop simulated transactions to verify its long-term stability. This helps ensure the application remains reliable during extended periods of use - especially critical for systems that need to run 24/7.
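One simple way to flag gradual degradation during a soak test is to fit a least-squares line to a metric sampled over time and check its slope. The sketch assumes hourly median latencies (memory usage could be checked the same way); the 2 ms-per-hour threshold is an arbitrary placeholder, and a real analysis would also need to account for noise.

```python
def slope_per_hour(hours: list[float], values: list[float]) -> float:
    """Ordinary least-squares slope of `values` against `hours`."""
    n = len(hours)
    mean_x = sum(hours) / n
    mean_y = sum(values) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(hours, values))
    variance = sum((x - mean_x) ** 2 for x in hours)
    return covariance / variance


MAX_DRIFT_MS_PER_HOUR = 2.0  # hypothetical tolerance

hours = [0, 1, 2, 3, 4, 5]
stable = [200, 198, 201, 199, 200, 202]    # noisy but flat
leaking = [200, 204, 208, 212, 216, 220]   # creeping upward, as a memory leak might cause

for name, series in (("stable", stable), ("leaking", leaking)):
    drift = slope_per_hour(hours, series)
    flag = "DRIFTING" if drift > MAX_DRIFT_MS_PER_HOUR else "ok"
    print(f"{name}: {drift:+.2f} ms/hour ({flag})")
```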

By combining these three testing approaches, you create a thorough evaluation that examines your application from multiple angles. Each type of testing reveals different potential issues, from everyday performance bottlenecks to long-term stability concerns. Together, they provide the insights needed to build an application that delivers consistent performance across all usage scenarios.

Making Sense of Your Performance Data

After running performance tests, you'll end up with lots of raw data that needs careful analysis to be truly useful. Raw numbers alone don't tell the full story - you need to analyze patterns, visualize results, and share insights in ways that drive real improvements. Here's how to transform your performance data into clear, actionable insights that help optimize your application.

Utilizing Statistical Methods for Analysis

Going beyond basic averages reveals important patterns in your data. Take response times, for instance: an average of 2 seconds might look fine on paper but can mask serious problems for some users. That's where percentiles come in handy. The 90th percentile (p90) is the response time that 90% of requests meet or beat, so the slowest 10% of requests take at least that long, which exposes problems that averages hide. Standard deviation is another key measure: a high value means response times vary widely, and that inconsistency frustrates users. Pick statistical methods that match your application type and specific goals.
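The effect is easy to reproduce. In this sketch the latencies are invented so that the mean comes out at exactly 2 seconds while one request in five takes 6 seconds. `percentile` implements the nearest-rank method, one of several common percentile definitions, so other tools may report slightly different values.

```python
import math
import statistics


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Invented run: 80 fast requests and 20 slow ones.
latencies_s = [1.0] * 80 + [6.0] * 20

print(f"mean  = {statistics.mean(latencies_s):.2f} s")     # looks acceptable
print(f"p50   = {percentile(latencies_s, 50):.2f} s")      # typical request
print(f"p90   = {percentile(latencies_s, 90):.2f} s")      # the slow tail appears
print(f"stdev = {statistics.pstdev(latencies_s):.2f} s")   # population std dev of this run
```

Here the mean (2.00 s) and median (1.00 s) both look healthy, while p90 (6.00 s) and a standard deviation as large as the mean reveal the slow minority.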

Visualizing Data for Clear Insights

With thousands of data points collected during testing, charts and graphs help spot important patterns quickly. A simple line graph tracking response times across 24 hours clearly shows peak usage periods and potential slowdowns. Histograms show how response times are distributed, making outliers and clusters of slow requests easy to spot. While Apache JMeter includes built-in visualization (its listeners and its generated HTML dashboard report), dedicated analytics platforms can provide deeper analysis when needed.
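For a quick look at a distribution without a charting tool, a text histogram is often enough. This sketch buckets latencies into fixed-width bins (100 ms by default, an arbitrary choice) and prints one bar per bin, including empty bins so gaps stay visible; the sample data is made up to show a second cluster of slow responses.

```python
from collections import Counter


def histogram(latencies_ms: list[float], bucket_ms: int = 100) -> dict[int, int]:
    """Count samples per fixed-width bucket, keyed by the bucket's lower bound."""
    counts = Counter(int(ms // bucket_ms) * bucket_ms for ms in latencies_ms)
    return dict(sorted(counts.items()))


def print_histogram(latencies_ms: list[float], bucket_ms: int = 100, width: int = 30) -> None:
    """Print one bar per bucket from the lowest to the highest, including empty buckets."""
    buckets = histogram(latencies_ms, bucket_ms)
    peak = max(buckets.values())
    for lower in range(min(buckets), max(buckets) + bucket_ms, bucket_ms):
        count = buckets.get(lower, 0)
        bar = "#" * round(count / peak * width)
        print(f"{lower:>5}-{lower + bucket_ms - 1:<5} ms | {bar} {count}")


# Made-up data: most requests are fast, but a second cluster sits near 900 ms.
data = [120, 130, 150, 180, 210, 220, 250, 900, 950]
print_histogram(data)
```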

Communicating Findings and Driving Action

The best analysis matters only if it leads to improvements. Present your findings in ways that make sense to both technical teams and business stakeholders: instead of drowning people in numbers, use clear visuals and focused summaries. A dashboard showing key metrics like response time, error rate, and throughput gives everyone a quick view of application health. Connect those metrics to business goals, such as how slower load times affect sales or user satisfaction, so that non-technical stakeholders understand why performance matters and support optimization work. Close by explaining what the data means for the business and its users, then outline specific steps to address the issues you found.

Creating Test Scenarios That Actually Matter

Building a solid performance testing plan means moving beyond simple tests to capture how your application really behaves. Performance tests need to reflect actual user behavior, traffic spikes, and complex interactions in the real world to find true bottlenecks and maintain reliability.

Designing Tests for Real User Behavior

The best performance tests match how people actually use your application. This starts with mapping out common user journeys - for example, an e-commerce site might simulate customers browsing products, adding items to carts, and checking out. Tests should account for different devices, browsers, and interaction patterns like quick clicks versus slower browsing to create realistic scenarios.
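A journey mix like this can be expressed as weighted choices plus randomized think times. In the sketch below, the journey names, their 60/30/10 weights, and the think-time ranges are all hypothetical; in practice they would come from production analytics.

```python
import random
from collections import Counter

# Hypothetical journey mix; in practice, derive the weights from production analytics.
JOURNEYS = {
    "browse_only": (["home", "search", "product"], 0.60),
    "add_to_cart": (["home", "product", "add_to_cart"], 0.30),
    "checkout": (["home", "product", "add_to_cart", "checkout", "pay"], 0.10),
}
# Hypothetical think-time ranges (seconds) for quick versus slower browsers.
THINK_TIME_S = {"quick": (0.5, 2.0), "leisurely": (3.0, 10.0)}


def plan_session(rng: random.Random) -> tuple[str, list[tuple[str, float]]]:
    """Pick a journey by weight and attach a randomized pause after each step."""
    names = list(JOURNEYS)
    weights = [JOURNEYS[name][1] for name in names]
    name = rng.choices(names, weights=weights, k=1)[0]
    low, high = THINK_TIME_S[rng.choice(list(THINK_TIME_S))]
    return name, [(step, rng.uniform(low, high)) for step in JOURNEYS[name][0]]


rng = random.Random(42)  # fixed seed so a test run is reproducible
sessions = [plan_session(rng) for _ in range(1000)]
print(Counter(name for name, _ in sessions))
```

Seeding the generator makes two test runs replay the same traffic mix, which keeps results comparable across builds.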

Simulating Realistic Load Patterns

Just testing average traffic isn't enough - your tests need to cover all kinds of load variations. Think peak hours, seasonal spikes, and sudden surges. For instance, a news site might get flooded with visitors during breaking news. Using tools like Apache JMeter or k6, you can simulate these scenarios and verify that your infrastructure can handle the demand. Missing these variations leads to blind spots that can cause problems in production.
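Load patterns like these are usually described as a series of stages, each ramping to a target level over a set duration (k6, for example, exposes this idea through its `stages` option). The Python sketch below expands such a stage list into a per-minute requests-per-second schedule with linear ramps; the news-site numbers are invented for illustration.

```python
def expand_stages(start: float, stages: list[tuple[int, float]]) -> list[float]:
    """Expand (duration_minutes, target_rps) stages into one target value per minute."""
    schedule = []
    current = start
    for duration_min, target in stages:
        for minute in range(1, duration_min + 1):
            # Linear interpolation from the previous level to this stage's target.
            schedule.append(current + (target - current) * minute / duration_min)
        current = target
    return schedule


# Invented news-site profile: normal traffic, an evening peak, then a breaking-news spike.
stages = [
    (5, 200),    # ramp up to normal traffic
    (10, 200),   # hold
    (5, 600),    # evening peak
    (1, 3000),   # breaking news: 5x the peak within a minute
    (5, 3000),   # sustain the spike
    (5, 200),    # recover
]
schedule = expand_stages(0, stages)
print(f"{len(schedule)} minutes, peak {max(schedule):.0f} rps, final {schedule[-1]:.0f} rps")
```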

Replicating Complex Interactions and Data

Modern apps handle many complex interactions and data scenarios that impact performance. A social platform, for example, needs to manage users posting updates, liking content, and sharing posts all at once. Your tests should also work with different types and amounts of data to find issues in how your system processes and retrieves information.

Examples of Effective Test Scenarios

Here are some examples of effective test scenarios:

- E-commerce Platform: Testing a big sale event with many users adding items, processing payments, and using discount codes at the same time

- Gaming Application: Simulating hundreds of players in real-time gameplay, including movement, combat, and purchases

- SaaS Application: Testing different user roles accessing various features with different data loads, matching actual usage patterns

Creating test scenarios that mirror real usage gives you clear insights into how your application performs under pressure. This helps you spot current issues and prepare for future challenges as your user base grows. The result is an application that stays reliable even during intense usage spikes.

Putting It All Together: Your Performance Testing Playbook

Building an effective performance testing strategy requires careful planning, clear objectives, and the right tools. This guide lays out the key elements needed to build performance testing capabilities that deliver real results. Let's walk through the essential components step by step.

Defining Your Performance Testing Goals

Clear goals provide the foundation for any successful performance testing effort. Start by identifying what you want to achieve - whether that's finding system bottlenecks, validating scalability, or measuring stability under load. Your specific objectives will guide your testing approach. For instance, if you're preparing for a major product launch, focus on peak traffic simulation. For applications requiring high availability, prioritize long-term stability testing through endurance runs. Well-defined goals ensure your testing activities align with what matters most for your business.

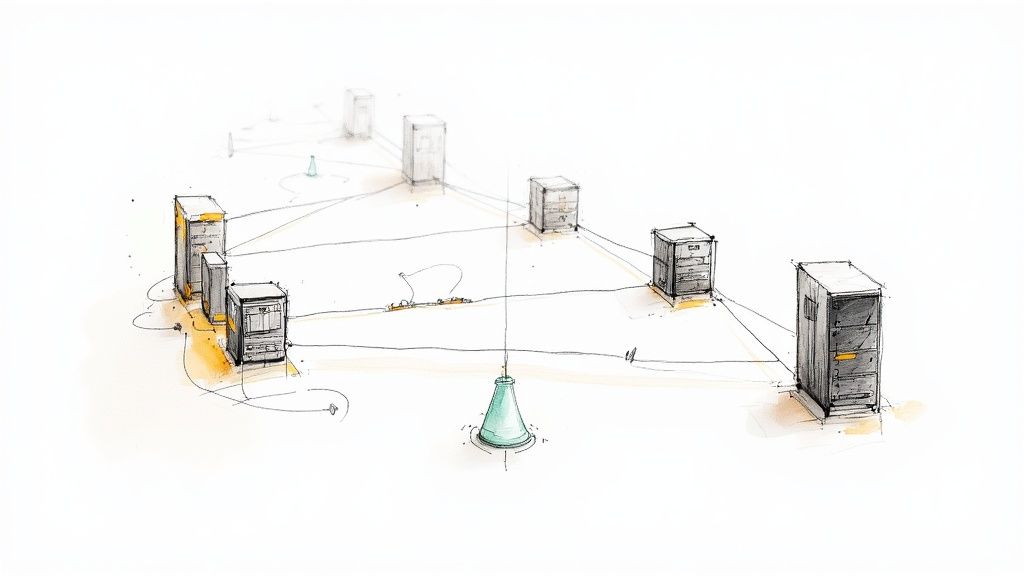

Building Your Performance Testing Toolkit

The right tools make all the difference in performance testing. Popular options like Apache JMeter, Gatling, and k6 each have unique strengths. JMeter works well for simulating heavy loads across many protocols, Gatling pairs a code-based scenario DSL with detailed HTML reports, and k6 shines with developer-friendly JavaScript scripts that fit easily into CI/CD pipelines. Choose tools that match your application architecture, team skills, and testing needs. Adding monitoring helps track system behavior in real time during test runs.

Implementing Your Performance Testing Strategy

Once you've set goals and selected tools, implementation involves several key phases:

- Creating Realistic Test Scenarios: Model actual user behaviors in your tests. Study common user journeys, identify busy periods, and build test scenarios that reflect real usage patterns.

- Establishing Baselines: Measure current performance before making changes. Like taking initial fitness measurements, baseline metrics let you track improvements and spot problems over time.

- Executing Tests and Analyzing Results: Run tests consistently and dig into the data. Look for patterns, find bottlenecks, and use statistical analysis to understand performance variations. Raw numbers only tell part of the story - focus on extracting actionable insights.

- Iterating and Refining: Keep evolving your testing approach as your application changes. Gather feedback from development teams, operations staff, and users to continuously improve your test coverage and value.
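Comparing each run against the stored baseline is the step most worth automating. This sketch flags any metric that worsened by more than a relative tolerance; the metric names, the 10% tolerance, and the set of higher-is-better metrics are all assumptions to adapt to your own reports.

```python
# Metrics where a larger value is an improvement; all others are treated as lower-is-better.
HIGHER_IS_BETTER = {"throughput_rps"}


def find_regressions(baseline: dict[str, float], current: dict[str, float],
                     tolerance: float = 0.10) -> dict[str, float]:
    """Return {metric: relative worsening} for metrics that degraded beyond `tolerance`."""
    regressions = {}
    for metric, base in baseline.items():
        # Skip metrics missing from this run, or with a zero baseline (relative change undefined).
        if metric not in current or base == 0:
            continue
        now = current[metric]
        if metric in HIGHER_IS_BETTER:
            worsening = (base - now) / base
        else:
            worsening = (now - base) / base
        if worsening > tolerance:
            regressions[metric] = worsening
    return regressions


baseline = {"p95_ms": 400.0, "error_rate": 0.005, "throughput_rps": 250.0}
current = {"p95_ms": 470.0, "error_rate": 0.005, "throughput_rps": 240.0}
print(find_regressions(baseline, current))
```

A relative tolerance avoids hard-coding absolute limits per metric, though very small baselines (such as near-zero error rates) may be better compared in absolute terms.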

Maintaining Testing Consistency

Regular, automated testing keeps performance in check over time. Build performance tests into your CI/CD pipeline to catch issues early with each code change. Think of it like routine car maintenance - consistent testing prevents bigger problems down the road. Keep test scripts current as your application evolves to ensure they remain effective.

By following these practices, teams can spot and fix performance issues before they impact users. Making performance testing a core part of development creates a foundation for stable, responsive applications that keep users happy. Focus on building these capabilities systematically and maintaining them over time.

Streamline your team's code integration process and improve performance testing efficiency with Mergify.