Mastering Flaky Unit Tests: A Developer's Guide to Reliable Testing

The Hidden Cost of Flaky Tests in Modern Development

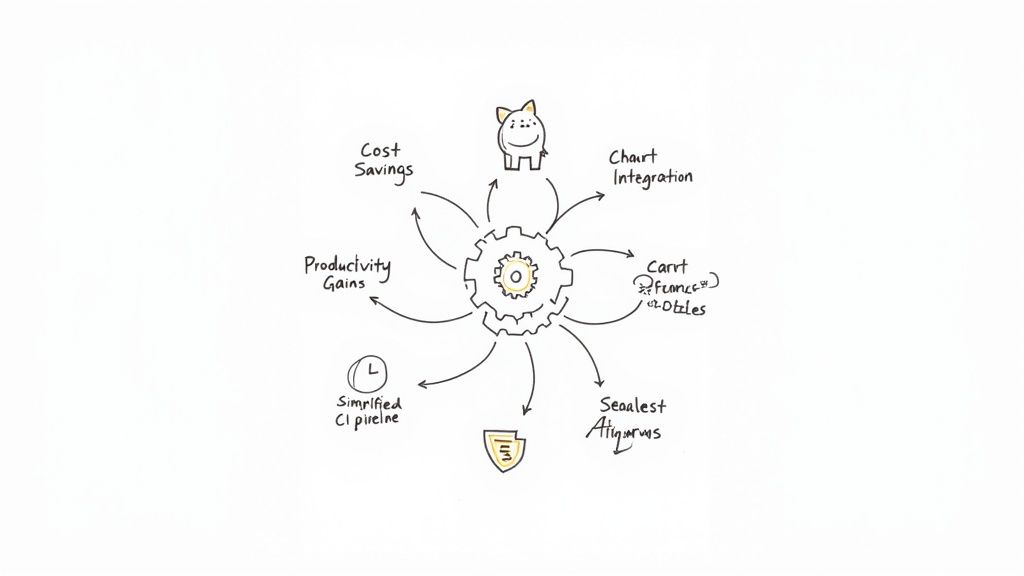

When unit tests pass and fail randomly without code changes, they create more than just frustration. These "flaky" tests silently drain development resources and team energy in ways that often go unnoticed. Even small inconsistencies in test results can cascade into major disruptions across development workflows, schedules, and team dynamics.

Quantifying the Impact of Flaky Unit Tests

While it's tempting to brush off occasional test failures as minor glitches, the cumulative impact tells a different story. Picture a developer spending half an hour investigating a failed test, only to find it was flaky all along. This scenario plays out frequently - 15% of developers encounter flaky tests daily, while another 24% face them weekly. When you multiply those lost hours across an entire development team, the toll becomes clear. Teams are now measuring this drain in concrete terms: delayed releases, wasted developer hours, and real financial costs that directly impact the bottom line.

The Erosion of Trust and Its Consequences

The damage from flaky tests goes deeper than lost time. These unreliable results slowly chip away at developers' confidence in the entire testing process. When team members regularly face false alarms, they start doubting test results altogether. This leads to dangerous shortcuts - developers might start habitually rerunning failed tests or, worse, ignoring them completely. Real bugs can slip through these cracks and surface in production, affecting end users. The constant uncertainty also weighs heavily on team morale, making testing feel like a burden rather than a safety net.

The Ripple Effect on Development Workflows

Flaky tests create bottlenecks throughout the development process. They can bring continuous integration pipelines to a halt, pushing back builds and releases. Developers hesitate to merge their code when they can't tell whether a test failure points to a real problem or another false alarm. Flaky failures also undermine techniques such as automated fault localization, which assume that a failing test points to faulty code. The result? A slower, more cautious development cycle that holds back the entire team.

Communicating the Cost of Flaky Tests

Solving the flaky test problem requires more than technical fixes - it needs clear communication about the real impact of flaky tests. Teams must show stakeholders exactly how these inconsistent tests affect development speed, release timing, and project costs. By putting concrete numbers behind these impacts, development teams can make a strong case for investing in test reliability. This shift in focus does more than improve code quality - it creates an environment where developers can work confidently and efficiently, knowing they can trust their testing tools to guide them toward better software.

Spotting Patterns in Test Instability

When unit tests fail inconsistently, finding the root cause requires careful observation and analysis. The subtle patterns in test behavior often hold the key to understanding why tests that should be reliable sometimes pass and sometimes fail. By learning to spot these patterns, development teams can build more stable test suites and catch issues earlier.

Common Causes of Flaky Unit Tests

Analyzing flaky tests across many projects reveals clear patterns that explain why tests become unreliable. Here are the main culprits that make tests unpredictable:

- Test Order Dependency: Some tests only fail when run in a specific sequence because they affect shared resources that other tests depend on. For instance, if one test changes a database record or global variable without cleaning up properly, it can cause later tests to fail unexpectedly.

- Asynchronous Operations: Tests involving network calls, file operations, or other async tasks often become flaky due to timing issues. A test might expect an operation to complete within 100ms, but network latency or system load could make it take longer sometimes. This leads to seemingly random failures when the timing assumptions don't hold.

- Resource Leaks: When tests don't properly release resources like memory, file handles, or database connections, problems build up over time. While a single leaked resource may not cause immediate issues, the cumulative effect eventually leads to sporadic failures as resources get exhausted.

- External Dependencies: Tests that rely on external services introduce uncertainty since those services may experience slowdowns or outages. For example, a test calling a third-party API might work fine most of the time but fail during brief API downtimes or when network connectivity is poor.

Recognizing the Telltale Signs

To effectively debug flaky tests, you need to identify specific signs that point to different types of problems:

- Intermittent Failures: When a test passes 9 times out of 10 with no code changes between runs, that's a clear sign of flakiness. These random-seeming failures often hide timing or resource issues that only manifest occasionally.

- Environment-Specific Failures: Tests that work on developers' machines but fail in continuous integration usually indicate environmental differences. The root cause might be variations in operating systems, installed dependencies, resource constraints, or network settings between environments.

- Timing-Dependent Failures: Tests that rely on specific timing, like waiting for operations to complete, are prone to flakiness. For example, a test using a fixed sleep duration might fail when the system is under heavy load and operations take longer than expected.
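The first sign is easy to measure: rerun a test many times with no code changes and count the passes. The sketch below assumes tests are zero-argument Python callables that signal failure by raising `AssertionError`. The helpers `measure_pass_rate` and `classify` are hypothetical, and the seeded random "flaky" test only simulates intermittent behavior.

```python
import random
from typing import Callable


def measure_pass_rate(test: Callable[[], None], runs: int = 10) -> float:
    """Run `test` `runs` times with no code changes and return the fraction that passed."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    passes = 0
    for _ in range(runs):
        try:
            test()
        except AssertionError:
            continue  # this run failed
        passes += 1
    return passes / runs


def classify(pass_rate: float) -> str:
    """Any rate strictly between 0 and 1 means mixed results on identical code: flaky."""
    if pass_rate == 1.0:
        return "stable pass"
    if pass_rate == 0.0:
        return "consistent failure"
    return "flaky"


# Simulated tests, for illustration only.
_rng = random.Random(42)  # seeded so the simulation is reproducible


def simulated_flaky_test() -> None:
    # Fails roughly 1 run in 10, like the "passes 9 times out of 10" example.
    assert _rng.random() < 0.9, "simulated intermittent failure"


def stable_test() -> None:
    assert sum([1, 2, 3]) == 6
```

A result such as `classify(measure_pass_rate(simulated_flaky_test, runs=200))` returning `"flaky"` is the signal to investigate, whereas a test that fails every run is more likely exposing a genuine bug.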

By learning these patterns and signs, teams can more quickly diagnose what's causing their flaky tests. This knowledge helps focus debugging efforts on the most likely causes rather than investigating randomly. The result is more stable test suites that teams can trust, leading to faster development cycles and fewer false alarms.

Building Your Flaky Test Detection Strategy

A solid flaky test detection strategy is essential for catching unreliable tests before they disrupt your workflow. By implementing the right automated tools and processes early, you can spot problematic tests quickly and maintain confidence in your test suite.

Automated Detection: Your First Line of Defense

Automation forms the foundation of effective flaky test detection. Rather than depending on developers to spot intermittent failures, automated systems can continuously monitor test results and flag inconsistencies. One straightforward approach is implementing a retry mechanism - if a test fails, the system automatically runs it again several times. For example, if a test passes 4 out of 5 runs, this pattern signals potential flakiness. This method works well for catching non-deterministic, order-independent (NDOI) flaky tests that fail randomly regardless of execution order.
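The retry mechanism itself fits in a few lines. This hypothetical `run_with_retries` helper, again assuming tests are callables that raise `AssertionError` on failure, reruns a failing test and separates three outcomes: a first-try pass, a pass only after a retry (flaky), and failure on every attempt. `make_test_failing_first` is a made-up helper that simulates a test failing its first few runs.

```python
from enum import Enum
from typing import Callable


class Verdict(Enum):
    PASSED = "passed"  # passed on the first attempt
    FLAKY = "flaky"    # failed at least once, then passed on a retry
    FAILED = "failed"  # failed every attempt: likely a genuine failure


def run_with_retries(test: Callable[[], None], max_retries: int = 3) -> Verdict:
    """Run `test`, retrying up to `max_retries` times after a failure."""
    for attempt in range(1 + max_retries):
        try:
            test()
        except AssertionError:
            continue  # this attempt failed; retry if any attempts remain
        return Verdict.PASSED if attempt == 0 else Verdict.FLAKY
    return Verdict.FAILED


def make_test_failing_first(n: int) -> Callable[[], None]:
    """Build a simulated test that fails on its first `n` calls, then passes."""
    calls = 0

    def test() -> None:
        nonlocal calls
        calls += 1
        assert calls > n, "simulated intermittent failure"

    return test
```

With the default of three retries, a test that fails twice and then passes is reported as `Verdict.FLAKY` rather than silently counted as a pass, which is what makes retries useful for detection instead of just hiding failures.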

However, retry mechanisms alone aren't enough for all scenarios. Order-dependent flaky tests (both deterministic and non-deterministic) pass or fail depending on which tests ran before them, so a retry that repeats the same execution order tends to repeat the same result. Detecting them means deliberately varying the order and analyzing the outcomes, which calls for more in-depth tools. These tools examine test histories, execution patterns, and environmental factors to identify root causes. For instance, Mergify can integrate with your CI pipeline to automatically detect and report flaky tests alongside its other CI automation.

Integrating Detection into Your CI Pipeline

Adding flaky test detection directly into your CI pipeline is key for consistent monitoring. Here are practical ways to implement this:

- Set Up Retry Rules: Configure your CI system to automatically retry failed tests 3-5 times

- Create Monitoring Dashboards: Build dashboards showing flaky test trends and patterns over time. Track metrics like flakiness rate (flaky tests divided by total tests) to gauge overall health. For instance, you might discover that 10% of tests show flaky behavior.

- Generate Detailed Reports: Create reports for each flaky test showing failure rates, failing conditions, and links to relevant code to speed up debugging
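The flakiness rate and the per-test reports described above can both be computed from stored CI results. This sketch assumes a hypothetical history of `Run(test, commit, passed)` records and treats a test as flaky when it has both passed and failed on the same commit, that is, with no code change. The test names and commit IDs are made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    test: str
    commit: str
    passed: bool


def flaky_report(runs):
    """Per-test run count, failure rate, and whether it flip-flopped on one commit."""
    by_test = defaultdict(list)
    for run in runs:
        by_test[run.test].append(run)

    report = {}
    for test, test_runs in by_test.items():
        outcomes_per_commit = defaultdict(set)
        for run in test_runs:
            outcomes_per_commit[run.commit].add(run.passed)
        failures = sum(1 for run in test_runs if not run.passed)
        report[test] = {
            "runs": len(test_runs),
            "failure_rate": failures / len(test_runs),
            # Both True and False recorded for the same commit => flaky.
            "flaky": any(len(seen) == 2 for seen in outcomes_per_commit.values()),
        }
    return report


def flakiness_rate(report):
    """Number of flaky tests divided by the total number of tests."""
    if not report:
        return 0.0
    return sum(1 for entry in report.values() if entry["flaky"]) / len(report)


# Hypothetical CI history.
history = [
    Run("test_login", "abc123", True),
    Run("test_login", "abc123", False),
    Run("test_login", "abc123", True),
    Run("test_checkout", "abc123", True),
    Run("test_checkout", "def456", False),  # failed after a code change: not flaky
]
```

For this history, `test_login` is flagged as flaky with a one-in-three failure rate, while `test_checkout` is not, because its failure coincides with a new commit; the overall flakiness rate is therefore 0.5.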

Balancing Accuracy and Build Time

While thorough detection matters, you also need to consider practical build time limitations. Running multiple retries for every test can significantly slow down your CI pipeline, and because flaky tests are so common (studies show 59% of developers deal with them regularly), that cost recurs across many builds. To handle this trade-off effectively:

- Focus on Critical Tests: Run more retries on core functionality tests while reducing retries for less essential ones

- Use Parallel Execution: Run tests simultaneously to minimize the time impact of retries

- Implement Smart Retries: Create systems that adjust retry counts based on each test's history. Tests that often show flaky behavior may need more retries than stable ones.
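A smart retry budget can be derived from each test's history. The sketch below assumes each run of a test fails independently with probability `p`, its historical flake rate (an assumption that does not hold for order-dependent tests). It picks the smallest retry count `k` for which the chance of every attempt failing, `p ** (k + 1)`, drops to a target such as 1%, capped at a maximum. The function name and default values are illustrative, not taken from any particular CI tool.

```python
def retries_for(flake_rate: float, target: float = 0.01,
                min_retries: int = 0, max_retries: int = 5) -> int:
    """Smallest k with flake_rate ** (k + 1) <= target, clamped to [min_retries, max_retries].

    Assumes each attempt fails independently with probability `flake_rate`.
    """
    if not 0.0 <= flake_rate <= 1.0:
        raise ValueError("flake_rate must be between 0 and 1")
    retries = min_retries
    # flake_rate ** (retries + 1): chance that the first run and every retry all fail.
    while flake_rate ** (retries + 1) > target and retries < max_retries:
        retries += 1
    return retries
```

Under these defaults, a test with no history of flakiness gets no retries, a test that flakes 5% of the time gets one, and one that flakes 20% of the time gets two. A test that hits the cap is flaky enough that it needs fixing rather than more retries.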

A strong flaky test detection approach combines automated tools, smart CI integration, and careful performance optimization. Investing in these areas helps build reliable tests, keeps developers productive, and maintains a healthy codebase. In the next section, we'll explore how to create strong test foundations.

Creating Rock-Solid Test Foundations

Just like a building needs solid groundwork, software tests require careful planning and execution to deliver reliable results. The key is taking a thoughtful approach to test design from the start, focusing on proper isolation between tests, smart handling of dependencies, and careful management of timing-sensitive operations. When you get these fundamentals right, you end up with tests that consistently give accurate results you can trust.

Designing for Isolation: Preventing Test Interference

Tests that step on each other's toes are a common headache that leads to unreliable results. This happens when tests accidentally share resources or state, causing failures that depend on which test runs first. For example, if one test changes a global variable that another test needs in its original state, you've got trouble. That's why keeping tests truly separate is so important.

Think of each test as living in its own protective bubble. Here's how to maintain that isolation:

- Dependency Injection: Pass dependencies into the code under test (for example, through constructor or function parameters) instead of hardcoding them. This gives each test control over test conditions and lets it supply a fresh instance every time.

- Test Doubles: Use mocks, stubs and spies to simulate external systems and keep tests focused only on the code you're testing. When testing database interactions, for instance, mock those calls instead of hitting a real database.

- Setup and Teardown Methods: Use setup code to prepare a clean test environment and teardown code to clean up afterward. This maintains a consistent starting point for every test.
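Here is a minimal Python `unittest` sketch combining the three techniques. The hypothetical `OrderService` receives its repository through its constructor (dependency injection), each test gets a fresh `unittest.mock.Mock` as a test double in place of a real database, and `setUp`/`tearDown` create and remove a temporary directory so no files leak between tests.

```python
import os
import tempfile
import unittest
from unittest import mock


class OrderService:
    """Hypothetical code under test; its repository is injected, not hardcoded."""

    def __init__(self, repository):
        self._repository = repository

    def total_for(self, customer_id):
        return sum(order["amount"] for order in self._repository.orders_for(customer_id))

    def export_total(self, customer_id, directory):
        path = os.path.join(directory, f"{customer_id}.txt")
        with open(path, "w", encoding="utf-8") as out:
            out.write(str(self.total_for(customer_id)))
        return path


class OrderServiceTest(unittest.TestCase):
    def setUp(self):
        # A fresh test double and scratch directory for every single test.
        self.repository = mock.Mock()
        self.service = OrderService(self.repository)
        self.tmp = tempfile.TemporaryDirectory()

    def tearDown(self):
        # Remove the scratch directory so no files leak into later tests.
        self.tmp.cleanup()

    def test_total_sums_order_amounts(self):
        self.repository.orders_for.return_value = [{"amount": 5}, {"amount": 7}]
        self.assertEqual(self.service.total_for("c1"), 12)
        self.repository.orders_for.assert_called_once_with("c1")

    def test_total_is_zero_without_orders(self):
        self.repository.orders_for.return_value = []
        self.assertEqual(self.service.total_for("c2"), 0)

    def test_export_writes_total_to_file(self):
        self.repository.orders_for.return_value = [{"amount": 3}]
        path = self.service.export_total("c3", self.tmp.name)
        with open(path, encoding="utf-8") as written:
            self.assertEqual(written.read(), "3")
```

Because nothing is shared between test methods, these tests give the same result in any order, which you can confirm with `python -m unittest`.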

Managing External Dependencies: Reducing Uncertainty

When tests rely on databases, network services or third-party APIs, they become vulnerable to problems like slow networks, service outages and varying response times. Here's how to keep those external factors from causing test failures:

- Network Mocking: Tools like WireMock or Nock let you simulate network requests without depending on real connections.

- Service Virtualization: Create fake versions of external services that behave predictably during tests.

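- Contract Testing: Define and verify the contract (requests and expected responses) between a consuming service and the service it calls, so the mocks your tests rely on stay accurate and a change in one service is caught before it breaks tests in another.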
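Tools like WireMock and Nock work at the HTTP level. In a Python suite, a lighter in-process alternative is to patch the function that makes the request. This sketch uses `unittest.mock.patch` on `urllib.request.urlopen` so the hypothetical `service_is_up` function can be tested against canned responses and a simulated outage without touching the network; the endpoint URL is a placeholder.

```python
import json
import unittest
import urllib.error
import urllib.request
from unittest import mock

STATUS_URL = "https://api.example.com/status"  # placeholder endpoint


def service_is_up(url=STATUS_URL, timeout=2.0):
    """Hypothetical code under test: asks a third-party API whether it is healthy."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            payload = json.loads(response.read().decode("utf-8"))
    except urllib.error.URLError:
        return False  # treat network errors and outages as "down"
    return payload.get("status") == "ok"


class ServiceIsUpTest(unittest.TestCase):
    @mock.patch("urllib.request.urlopen")
    def test_up_when_api_reports_ok(self, fake_urlopen):
        # urlopen is used as a context manager, so configure what __enter__ returns.
        fake_urlopen.return_value.__enter__.return_value.read.return_value = b'{"status": "ok"}'
        self.assertTrue(service_is_up())
        fake_urlopen.assert_called_once_with(STATUS_URL, timeout=2.0)

    @mock.patch("urllib.request.urlopen")
    def test_down_when_api_reports_degraded(self, fake_urlopen):
        fake_urlopen.return_value.__enter__.return_value.read.return_value = b'{"status": "degraded"}'
        self.assertFalse(service_is_up())

    @mock.patch("urllib.request.urlopen")
    def test_down_during_outage(self, fake_urlopen):
        # Simulate the outage deterministically instead of waiting for a real one.
        fake_urlopen.side_effect = urllib.error.URLError("simulated outage")
        self.assertFalse(service_is_up())
```

The outage test is the important one: a failure mode that a real API exhibits only occasionally becomes a case the suite exercises on every run.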

Taming Asynchronous Operations: Ensuring Predictability

Modern apps often use asynchronous operations that make timing tricky in tests. A test might expect something to happen quickly, but network delays or system load can cause intermittent failures. Here's how to handle those timing challenges:

- Explicit Waits: Rather than using fixed delays, wait for specific conditions like elements appearing or requests completing.

- Asynchronous Test Frameworks: Choose testing tools that handle promises, callbacks and other async patterns naturally.

- Timeouts: Set reasonable time limits to catch problems rather than letting tests hang indefinitely.
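An explicit wait with a timeout can be as simple as a polling loop. The hypothetical `wait_until` helper below checks a condition repeatedly, returns as soon as it holds, and raises `TimeoutError` once the deadline passes. A slow run therefore takes a little longer instead of failing, and a broken run fails instead of hanging. `start_background_job` is a made-up stand-in for any asynchronous operation.

```python
import threading
import time
from typing import Callable, List


def wait_until(condition: Callable[[], bool], timeout: float = 5.0,
               interval: float = 0.01) -> None:
    """Poll `condition` until it returns True; raise TimeoutError after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while not condition():
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


def start_background_job(results: List[str], delay: float) -> threading.Thread:
    """Hypothetical asynchronous operation that finishes after `delay` seconds."""

    def work() -> None:
        time.sleep(delay)
        results.append("done")

    worker = threading.Thread(target=work)
    worker.start()
    return worker
```

In a test, `wait_until(lambda: results == ["done"], timeout=2.0)` replaces a fixed `time.sleep(0.1)` that would fail whenever the job runs slower than expected. For `asyncio` code, `asyncio.wait_for` plays the same timeout role.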

Getting these basics right - test isolation, dependency handling, and async operation management - creates a strong foundation for reliable tests. Taking time to design robust tests pays off by reducing flaky results, building team confidence, and helping projects move faster while maintaining quality. Recent studies show 59% of developers regularly struggle with unreliable tests, highlighting how important it is to tackle these issues head-on with smart testing practices.

Implementing Effective Test Maintenance Workflows

Like any critical system, test suites need regular upkeep and care. When teams neglect test maintenance, they often face an increasing number of flaky tests, reduced confidence in results, and more bugs making their way to production. Creating sustainable maintenance workflows helps teams stay ahead of these issues and maintain a healthy test suite over time.

Triaging Flaky Unit Tests: A Systematic Approach

When you encounter a flaky test, resist the urge to simply rerun it and move on. Instead, treat it like any other bug by following a structured investigation process. Start by documenting key details about the test's behavior - how often does it fail? Under what conditions? Are there patterns, like failures on specific operating systems or after certain other tests run? This information provides the foundation for effective debugging.

Once documented, prioritize fixes based on impact. Tests covering core functionality need immediate attention, while less critical tests can be temporarily quarantined (while still tracking their flaky status). This focused approach helps teams tackle the most disruptive issues first.
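Quarantining can be as simple as a list of known-flaky tests whose failures are recorded but do not block the build. This is a sketch under assumptions: the `evaluate_build` function, the `BuildDecision` type, and the test names are hypothetical, and many CI systems offer quarantine features of their own.

```python
from typing import Iterable, List, NamedTuple, Set


class BuildDecision(NamedTuple):
    passed: bool          # False if any non-quarantined test failed
    blocking: List[str]   # failures that should fail the build
    tracked: List[str]    # quarantined failures: recorded for follow-up, not blocking


def evaluate_build(failed_tests: Iterable[str], quarantined: Set[str]) -> BuildDecision:
    """Split failures into blocking ones and quarantined ones that are only tracked."""
    failed = sorted(set(failed_tests))
    blocking = [name for name in failed if name not in quarantined]
    tracked = [name for name in failed if name in quarantined]
    return BuildDecision(passed=not blocking, blocking=blocking, tracked=tracked)


QUARANTINED = {"test_report_export_timing"}  # hypothetical known-flaky test
```

The `tracked` list is what keeps quarantine honest: those failures should still feed the flaky-test report so the test is eventually fixed and removed from quarantine.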

Setting Appropriate Retry Policies: A Balancing Act

While retry mechanisms in your CI/CD pipeline can help manage flaky tests, finding the right balance is key. Too many retries slow down builds significantly and can hide genuine intermittent bugs, while too few let flaky failures block builds or go unrecognized. Many teams start with 3-5 automatic retries for failed tests. If a test fails every one of these retries, it is more likely a genuine failure than flakiness and needs investigation.

Consider implementing dynamic retry policies based on test history. Tests with a track record of flakiness might get more retry attempts than historically stable ones. This targeted approach helps maintain efficiency while catching real issues.

Maintaining Test Health Metrics: Data-Driven Improvement

Just as you track application metrics, monitoring test suite health reveals important patterns. Pay attention to key indicators like the flakiness rate - what percentage of your tests are unstable? Set clear thresholds (like keeping flakiness below 5%) and take action when metrics trend in the wrong direction.
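A threshold like this is easy to enforce as a CI step. The hypothetical script below takes the number of flaky tests and the total test count (however your tooling produces them), computes the flakiness rate, and returns a non-zero status when the rate is not below the threshold (5% by default, matching the example above).

```python
import argparse
from typing import List, Optional

FLAKINESS_THRESHOLD = 0.05  # "keep flakiness below 5%"


def within_threshold(flaky_tests: int, total_tests: int,
                     threshold: float = FLAKINESS_THRESHOLD) -> bool:
    """Return True if flaky_tests / total_tests is strictly below `threshold`."""
    if total_tests <= 0:
        raise ValueError("total_tests must be positive")
    if not 0 <= flaky_tests <= total_tests:
        raise ValueError("flaky_tests must be between 0 and total_tests")
    return flaky_tests / total_tests < threshold


def main(argv: Optional[List[str]] = None) -> int:
    parser = argparse.ArgumentParser(description="Fail CI when the flakiness rate is too high.")
    parser.add_argument("flaky", type=int, help="number of tests flagged as flaky")
    parser.add_argument("total", type=int, help="total number of tests")
    parser.add_argument("--threshold", type=float, default=FLAKINESS_THRESHOLD)
    args = parser.parse_args(argv)
    ok = within_threshold(args.flaky, args.total, args.threshold)
    rate = args.flaky / args.total
    print(f"flakiness rate {rate:.1%} (threshold {args.threshold:.0%}): "
          f"{'ok' if ok else 'too high'}")
    return 0 if ok else 1
```

A CI step could call `sys.exit(main())` so that a non-zero return fails the job; for example, 4 flaky tests out of 100 pass the gate, while 12 out of 200 (6%) do not.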

Track the time spent investigating and fixing flaky tests too. This data helps demonstrate the real cost of unstable tests to stakeholders and justify investing in test infrastructure improvements.

Collaboration and Knowledge Sharing: The Human Factor

Strong test maintenance depends heavily on good teamwork between developers and QA. Set up clear channels for reporting and tracking flaky tests, and make test review a regular part of team meetings. This shared approach prevents duplicate work and helps maintain consistent testing practices across the team.

Build a culture where everyone feels responsible for test quality. When developers treat tests with the same care as production code, you create a foundation for long-term reliability. This mindset shift, combined with good processes, helps teams move from constantly fighting fires to preventing issues before they start. The result? Better code quality, faster development cycles, and a more satisfied team.

Building a Culture of Test Quality

Great tests require more than just technical know-how - they need a supportive team culture. When teams treat testing as a shared responsibility rather than a chore, quality improves naturally. Let's explore how to build this culture through practical changes in how teams work together and think about testing.

Shared Ownership: Everyone Owns Quality

Getting rid of flaky unit tests starts with everyone understanding that quality matters. This means developers write tests alongside their code from the start, rather than treating them as an afterthought. Code reviews should examine test quality just as closely as the code itself. For instance, when reviewing a pull request, teams can discuss both the implementation and its test coverage. This collaborative approach helps maintain high standards across the entire codebase.

Meaningful Metrics: Measuring What Matters

Good metrics help teams track progress and show the value of investing in test quality. Keep an eye on your flakiness rate - what percentage of tests are unreliable? Set concrete goals, like keeping flaky tests under 5% of your test suite. Time spent fixing flaky tests is another key metric. When stakeholders see how many hours go into dealing with unstable tests, they better understand why investing in reliable testing pays off.

Maintaining Momentum: Celebrating Success and Overcoming Challenges

Building better testing habits takes ongoing effort. Take time to recognize team members who consistently write solid tests or help fix flaky ones. These small wins add up and keep everyone motivated.

Of course, you'll face some resistance. Some developers might see improved testing as extra work without clear benefits. Address these concerns openly - provide training, share success stories, and demonstrate how reliable tests save time in the long run. For example, if someone worries about spending more time on testing upfront, show how it reduces time spent debugging issues later.

Practical Steps for Cultural Change

Turn good intentions into real change with these concrete steps:

- Integrate Test Quality into Performance Reviews: Make reliable testing part of how you evaluate individual contributions

- Establish Clear Testing Guidelines: Create and maintain documentation that helps everyone write consistent, high-quality tests

- Dedicated "Test Improvement" Sprints: Set aside specific time periods focused purely on cleaning up and strengthening existing tests

By getting everyone involved, measuring what counts, and working through challenges together, teams can build a culture where quality testing becomes second nature. This leads to more stable software, faster development, and happier developers.

Ready to improve how your team handles flaky tests? Mergify helps teams detect and manage unstable tests right in their workflow, letting developers focus on building great software. See how Mergify can help your team today: https://mergify.com