Mastering Automated Integration Testing: A Strategic Guide for Modern Development Teams

Breaking Down the Automated Testing Journey

Shifting from manual to automated testing is a major adjustment for development teams. While the long-term benefits are clear, making this transition effectively requires thoughtful planning and persistence. Let's look at how successful engineering teams have made this change work for them.

From Manual To Automated: A Transformation Story

Manual testing is often where teams start, but it becomes increasingly difficult to maintain as software grows more complex and release cycles speed up. The solution? Automated integration testing. Teams that make this switch typically see dramatic improvements - what used to take days of manual testing can often be completed in just a few hours with automation. This frees up developers to focus on building new features instead of running repetitive tests by hand.

Overcoming the Hurdles

The path to automated testing isn't always smooth. Teams commonly face several key challenges:

- Initial Investment: Getting started requires dedicating time to set up the automation framework and create the first test suites

- Resistance to Change: Team members who are comfortable with manual testing may hesitate to learn new tools and methods

- Maintaining Test Suites: Tests need regular updates as code changes, which takes ongoing effort

But with the right approach, teams can successfully work through these obstacles.

Addressing Common Fears

When introducing automation, it's essential to tackle concerns head-on. Many team members worry about the complexity of automation tools or wonder if their jobs are at risk. The solution is two-fold: provide thorough training so everyone can confidently work with automated tests, and communicate openly about how automation will complement rather than replace human expertise. This helps create an environment where team members embrace automation as a helpful tool.

Maintaining Momentum

For automated testing to deliver lasting value, teams need clear processes for creating, running and maintaining tests. Mergify can help by automating pull request updates and using strategic CI batching to keep codebases stable. Regular monitoring of test results and quick responses to failures help keep the testing process running smoothly as projects grow. When maintained well, automated tests become an invaluable part of delivering reliable software consistently.

Building Test Suites That Actually Work

The success of automated integration testing depends heavily on creating test suites that are both effective and maintainable. Many teams struggle with unreliable tests, complex dependencies between systems, and data management challenges that can quickly undermine automation efforts. Building robust test suites requires careful planning and attention to key principles.

Structuring Tests for Long-Term Value

Think of automated integration tests as small-scale versions of real user interactions with your system. Each test should verify a specific flow between components and assert on the behavior you expect. For example, when testing an e-commerce system, you might verify that submitting a new order updates inventory levels and processes the payment transaction. Tying tests to real flows keeps them relevant and meaningful as your application grows.

Breaking tests into clear setup, execution, and cleanup phases makes them easier to understand and maintain over time. This clear structure helps developers quickly debug issues when tests fail and allows common setup code to be shared across multiple test cases.
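As a minimal sketch of the setup, execution, and cleanup phases, the example below uses Python's built-in unittest and an in-memory SQLite database. The `place_order` function, the table names, and the `widget` SKU are hypothetical stand-ins for a real order flow, not part of any particular product.

```python
import sqlite3
import unittest


def place_order(conn, sku, quantity):
    """Hypothetical order flow: decrement stock and record a payment."""
    (stock,) = conn.execute(
        "SELECT stock FROM inventory WHERE sku = ?", (sku,)
    ).fetchone()
    if stock < quantity:
        raise ValueError("insufficient stock")
    conn.execute(
        "UPDATE inventory SET stock = stock - ? WHERE sku = ?", (quantity, sku)
    )
    conn.execute(
        "INSERT INTO payments (sku, quantity, status) VALUES (?, ?, 'captured')",
        (sku, quantity),
    )
    conn.commit()


class OrderFlowTest(unittest.TestCase):
    def setUp(self):
        # Setup: every test gets a fresh in-memory database.
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute(
            "CREATE TABLE inventory (sku TEXT PRIMARY KEY, stock INTEGER)"
        )
        self.conn.execute(
            "CREATE TABLE payments (sku TEXT, quantity INTEGER, status TEXT)"
        )
        self.conn.execute("INSERT INTO inventory VALUES ('widget', 5)")
        self.conn.commit()

    def tearDown(self):
        # Cleanup: drop the database so no state leaks into other tests.
        self.conn.close()

    def test_order_updates_inventory_and_payment(self):
        # Execution: run the flow under test.
        place_order(self.conn, "widget", 2)
        # Verification: both components reflect the order.
        stock = self.conn.execute(
            "SELECT stock FROM inventory WHERE sku = 'widget'"
        ).fetchone()[0]
        self.assertEqual(stock, 3)
        payments = self.conn.execute("SELECT status FROM payments").fetchall()
        self.assertEqual(payments, [("captured",)])

    def test_order_rejected_when_stock_is_short(self):
        with self.assertRaises(ValueError):
            place_order(self.conn, "widget", 9)
```

Because `setUp` and `tearDown` run around every test method, the shared setup lives in one place and a failing test points directly at the execution or verification step.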

Handling Test Data and Dependencies

Good test data management is essential for reliable integration testing. Setting up dedicated test environments with controlled datasets helps prevent tests from interfering with each other. Using data factories or builders to generate consistent test data reduces duplication and makes tests more stable. This becomes especially important when testing interactions between multiple systems that need to share consistent data.
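A data factory can be as small as a function that fills in sensible defaults and lets each test override only the fields it cares about. The sketch below is one possible shape. The `Customer` and `Order` types, their fields, and the `example.test` email domain are illustrative assumptions.

```python
import itertools
from dataclasses import dataclass, field

# Shared counter so every generated record gets a unique id.
_ids = itertools.count(1)


@dataclass
class Customer:
    id: int
    email: str
    country: str = "US"


@dataclass
class Order:
    id: int
    customer: Customer
    items: list = field(default_factory=list)
    status: str = "pending"


def make_customer(**overrides):
    """Build a Customer with unique defaults; tests override what matters."""
    n = next(_ids)
    values = {"id": n, "email": f"customer{n}@example.test", "country": "US"}
    values.update(overrides)
    return Customer(**values)


def make_order(**overrides):
    """Build an Order, creating a customer only if the test did not pass one."""
    customer = overrides.pop("customer", None) or make_customer()
    values = {
        "id": next(_ids),
        "customer": customer,
        "items": [("widget", 1)],
        "status": "pending",
    }
    values.update(overrides)
    return Order(**values)
```

A test that only cares about paid orders can call `make_order(status="paid")` and ignore everything else, which keeps the intent of each test visible.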

System dependencies often make integration tests brittle and complex. One effective solution is using mocks or stubs to simulate dependency behavior. For instance, if your test needs to interact with a payment API, you can mock the API responses to create predictable test conditions without relying on the actual network service.
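To illustrate, the following sketch replaces a payment client with a `Mock` from Python's standard `unittest.mock` module. The `checkout` function, the `charge` method, and the response fields are hypothetical. A real payment API will have its own client and response format.

```python
from unittest.mock import Mock


class PaymentDeclined(Exception):
    pass


def checkout(payment_client, order_id, amount_cents):
    """Hypothetical checkout step that depends on an external payment API."""
    response = payment_client.charge(order_id=order_id, amount_cents=amount_cents)
    if response["status"] != "approved":
        raise PaymentDeclined(response.get("reason", "unknown"))
    return response["transaction_id"]


def test_checkout_returns_transaction_id():
    # The mock stands in for the network service and returns a fixed response.
    client = Mock()
    client.charge.return_value = {"status": "approved", "transaction_id": "txn_123"}

    assert checkout(client, order_id=42, amount_cents=1999) == "txn_123"
    client.charge.assert_called_once_with(order_id=42, amount_cents=1999)


def test_checkout_raises_on_decline():
    # The same mock can simulate failure paths that are hard to trigger for real.
    client = Mock()
    client.charge.return_value = {"status": "declined", "reason": "card_expired"}

    try:
        checkout(client, order_id=42, amount_cents=1999)
    except PaymentDeclined as exc:
        assert str(exc) == "card_expired"
    else:
        raise AssertionError("expected PaymentDeclined")
```

Mocks trade realism for predictability, so many teams pair tests like these with a smaller number of runs against a real sandbox of the dependency.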

Building a Resilient Automation Framework

A solid test automation framework forms the foundation for successful integration testing. Picking appropriate testing tools and following coding best practices like descriptive naming and modular design keeps your test code clean and maintainable. Running tests automatically in your CI/CD pipeline provides quick feedback on changes. Tools like Mergify can help by efficiently managing CI runs, creating a more stable testing process. This integrated approach ensures tests run consistently as part of development.

Test flakiness remains a common challenge that requires active management. Regularly reviewing test logs, identifying patterns in intermittent failures, and adding targeted retry logic all help maintain reliable test execution. While this ongoing maintenance takes effort, it's crucial for building trust in your automated tests. One commonly cited figure is that 73% of testing teams now rely on automation for functional and regression testing, which underlines the value of investing in strong test infrastructure.
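One way to keep retries from hiding real bugs is to retry only on errors that are plausibly transient and to cap the number of attempts. The decorator below is a sketch under that assumption. The name `retry`, its parameters, and the choice of `ConnectionError` and `TimeoutError` as transient errors are illustrative.

```python
import functools
import time


def retry(times=3, delay=0.0, exceptions=(ConnectionError, TimeoutError)):
    """Retry a callable on transient errors only, up to `times` attempts."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, times + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions:
                    # Give up after the final attempt so the failure is visible.
                    if attempt == times:
                        raise
                    # Wait a little longer after each failed attempt.
                    time.sleep(delay * attempt)

        return wrapper

    return decorator
```

An `AssertionError` is not in the retried set, so a genuinely failing assertion surfaces on the first attempt instead of being masked by a lucky second run.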

Smart Test Selection and Execution Strategies

After setting up your test suite and managing dependencies, the next challenge is deciding which tests to run and when to run them. Running every single test after each code change quickly becomes impractical as your project grows. The key is being selective and strategic about test execution.

Prioritizing Tests for Optimal Feedback

Some tests matter more than others. Tests covering core user flows like login or checkout deserve more attention than those for rarely-used features. A practical approach is to group tests based on their importance - considering factors like business impact, usage patterns, and recent code changes. This helps catch critical issues early while still maintaining good overall coverage.

One effective method is risk-based testing, where you focus on code sections that tend to break more often or could cause serious problems if they fail. By analyzing past bugs and complex areas of your codebase, you can identify these high-risk spots and test them more thoroughly.
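A simple scoring function can make this prioritization explicit. The sketch below is an assumption-laden illustration: the `SuiteGroup` fields, the weights, and the cap on past failures are arbitrary choices that a team would tune against its own bug history.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuiteGroup:
    name: str
    business_impact: int    # 1 (low) to 5 (critical), assigned by the team
    past_failures: int      # defects traced to this area in the review window
    recently_changed: bool  # whether the covered code changed recently


def risk_score(group):
    """Combine impact, defect history, and recent change into one number."""
    score = group.business_impact * 2 + min(group.past_failures, 5)
    if group.recently_changed:
        score += 3
    return score


def prioritize(groups):
    """Return groups ordered from highest to lowest risk."""
    return sorted(groups, key=risk_score, reverse=True)
```

Running the highest-scoring groups first means a failure in a critical flow such as checkout shows up early in the run rather than at the end.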

Parallel Execution: Speeding Up the Process

As test suites grow larger, running tests one after another becomes too slow. The solution? Run multiple tests at the same time across different environments. While this makes tests finish faster, it requires careful planning. You'll need proper infrastructure to handle parallel runs and smart test design to prevent tests from interfering with each other, especially when they work with shared data.
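The interference problem is easiest to avoid when each parallel test owns its data. The following sketch runs hypothetical tests on a thread pool and gives each one a private temporary SQLite file. The `run_isolated` body is a placeholder for real test logic, and a real suite would more likely rely on its test runner's parallel mode.

```python
import sqlite3
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def run_isolated(test_name):
    """Run one hypothetical test against its own throwaway database file."""
    with tempfile.TemporaryDirectory() as workdir:
        conn = sqlite3.connect(Path(workdir) / "test.db")
        try:
            conn.execute("CREATE TABLE orders (name TEXT)")
            conn.execute("INSERT INTO orders VALUES (?)", (test_name,))
            conn.commit()
            rows = conn.execute("SELECT name FROM orders").fetchall()
            # Passes only if the database contains this test's row and nothing else.
            return test_name, rows == [(test_name,)]
        finally:
            conn.close()


def run_in_parallel(test_names, workers=4):
    """Run all tests concurrently and return a name -> passed mapping."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(run_isolated, test_names))
```

Since no two tests share a database file, the order and timing of execution cannot change the results, which is the property parallel runs depend on.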

Minimizing Test Execution Time Without Compromising Coverage

Making tests run faster isn't just about raw speed - it's about running the most relevant tests efficiently. Here are proven ways to cut down testing time while keeping good coverage:

- Change-Based Testing: Only run tests related to code that actually changed. This needs tools that can spot which tests are affected by specific code changes.

- Test Impact Analysis: Use tools that map how code and tests are connected to figure out exactly which tests need to run for each change.

- Optimized Test Environments: Slow environments make tests take longer than necessary. Keep your test setup well-configured with enough resources to run smoothly.
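Change-based selection can be sketched as a lookup from changed files to the tests that depend on them. The example below assumes a hand-maintained dependency map and hypothetical file paths. Dedicated test impact analysis tools derive this mapping automatically, typically from coverage data or the build graph.

```python
def select_tests(changed_files, test_dependencies, always_run=()):
    """Pick the tests affected by a change.

    test_dependencies maps each test file to the source files it exercises.
    """
    changed = set(changed_files)
    known_sources = set()
    for sources in test_dependencies.values():
        known_sources.update(sources)

    # A changed file the map does not know about could affect anything,
    # so fall back to the full suite rather than risk skipping a test.
    if changed - known_sources:
        return sorted(set(test_dependencies) | set(always_run))

    selected = set(always_run)
    for test, sources in test_dependencies.items():
        if changed & set(sources):
            selected.add(test)
    return sorted(selected)
```

The fallback to a full run is a deliberately conservative choice: an incomplete map costs time, while a wrongly skipped test costs a missed regression.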

Google's experience shows why this matters - their research found that most code changes only affect a small part of the system. In fact, 96% of changes don't require running all tests. Teams that switched to smart test selection have seen dramatic improvements, turning hours-long test runs into minutes-long ones. This means they can test more often and get feedback faster without sacrificing quality.

By being strategic about which tests you run and when you run them, you can make your testing process much more efficient. This leads to faster development cycles and better software quality - the ultimate goal of automated testing.

Integrating Tests Into Your Development Workflow

The key to quality software development lies in making automated integration testing a natural part of how teams work. Rather than treating testing as a final checkpoint, smart teams weave it throughout their development process. This approach catches issues early when they're easier and less expensive to fix. Let's explore practical ways to incorporate automated testing into your daily workflow to build better software faster.

Balancing Speed and Reliability With Automated Integration Testing

Many developers worry that thorough testing will slow them down. But in practice, automated integration testing actually helps teams work faster. Quick feedback from automated tests lets developers spot and fix integration problems right away, rather than wrestling with complex bugs later. Consider how a team running tests after each code commit can immediately catch and fix issues before they affect other parts of the system. This prevents small problems from growing into major headaches.

Pre-Commit Checks: Ensuring Code Quality From the Start

Pre-commit checks act as an early warning system by running automated tests on a developer's machine before a change is committed, well before the code gets merged into the main codebase. This is especially helpful for remote teams working across different time zones. For example, if a developer accidentally breaks an integration with an external API, pre-commit checks can catch this immediately. The developer can then fix the issue before it impacts their colleagues' work.

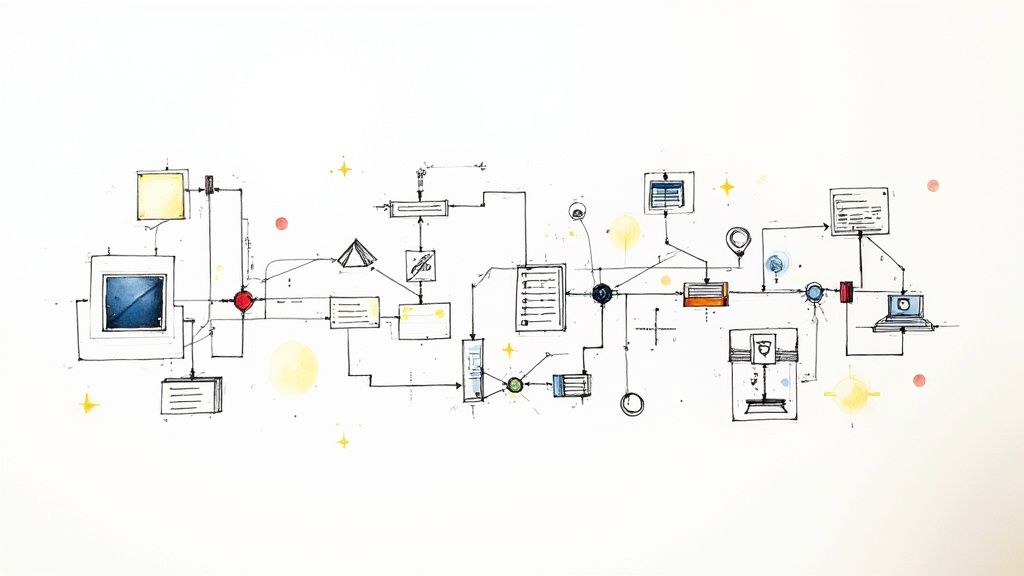

Building Confidence With CI/CD Pipelines

Automated integration tests are essential for reliable Continuous Integration/Continuous Delivery (CI/CD) pipelines. Running tests automatically within CI/CD gives teams quick insights into how code changes affect the whole system. This builds trust in deployments and helps prevent integration problems from reaching production. Tools like Mergify can improve this process by handling pull request updates and CI runs smoothly, making the entire pipeline more stable.

Managing Test Environments for Seamless Integration

Good test environments are crucial for effective automated integration testing. These environments should be separate from one another and closely match production settings so tests run against realistic scenarios. The separation prevents tests from interfering with each other, and the realism ensures results accurately show how the system works together. Using tools to create consistent test data makes automated integration testing even more reliable. When tests use the same data patterns, it's easier to spot the real effects of code changes and trust the test results.

This practical approach to testing, along with careful attention to environments and test data, helps teams deliver quality software confidently. When automated integration testing becomes a natural part of daily work, it supports rather than slows down development.

Making AI Work for Your Testing Strategy

AI is opening up new possibilities in automated integration testing that go beyond basic test automation. Teams are finding that AI-powered tools can help solve longstanding testing challenges in ways that weren't possible before. The impact is especially noticeable in how much faster and easier it becomes to create and maintain complex integration tests.

AI-Powered Test Generation

One of the biggest benefits of AI in testing is its ability to automatically generate test cases. Rather than writing every test by hand, AI can analyze your system requirements, code, and user behavior patterns to create relevant test scenarios. This is particularly helpful for integration testing, where you need to verify many different component interactions. AI can spot potential edge cases that human testers might miss, leading to better test coverage and earlier bug detection.

Optimizing Test Execution With AI

AI also makes test execution more efficient. As we discussed earlier, running all integration tests after every code change isn't practical for larger projects. AI tools can look at your code changes and past test results to figure out which tests are most likely to catch problems. For example, if you change one module, AI can identify just the integration tests that work with that module, skipping tests that aren't relevant. This focused approach saves significant time in your CI/CD pipeline.

AI-Driven Failure Analysis

When tests fail, finding the root cause can eat up a lot of time. AI speeds this up by automatically sorting failures into categories, finding similar issues, and suggesting likely causes. This helps developers zero in on problems and fix them faster. AI can also spot patterns in test failures over time - for instance, if tests consistently fail when interacting with a particular dependency, it might mean you need better error handling there.
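The grouping step can be approximated without any machine learning, which is useful as a baseline before adopting an AI tool. The sketch below is a plain rule-based heuristic, not an AI model: it normalizes the variable parts of failure messages so that similar failures fall into the same bucket. The function names and sample messages are hypothetical.

```python
import re
from collections import defaultdict


def signature(message):
    """Reduce a failure message to a stable signature by masking numbers."""
    sig = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)
    sig = re.sub(r"\d+", "<n>", sig)
    return sig.strip().lower()


def group_failures(failures):
    """Group (test_name, message) pairs by signature, largest group first."""
    groups = defaultdict(list)
    for test_name, message in failures:
        groups[signature(message)].append(test_name)
    return dict(sorted(groups.items(), key=lambda item: len(item[1]), reverse=True))
```

If the largest bucket is dominated by timeouts against a single dependency, that is the kind of pattern that points to missing error handling or an unstable test environment rather than a bug in each individual test.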

The Future of AI in Testing and Its Challenges

While AI brings major improvements to automated integration testing, it's not without its challenges. AI tools need lots of training data to work well and may struggle with situations they haven't seen before. Adding AI tools to your existing workflow often means adjusting your processes and tools. Still, AI's role in testing keeps growing. With a reported 73% of testing teams already using automation for functional and regression testing, much of the groundwork for smarter, more efficient testing approaches is in place. Tools like Mergify can help by automating parts of the CI/CD pipeline, making AI-driven testing workflows more reliable. Even with current limitations, AI is changing how teams handle automated integration testing, helping them ship better software faster.

Measuring Success and Driving Continuous Improvement

A successful automated testing strategy requires more than just implementation - you need to measure results and consistently refine your approach. By focusing on meaningful metrics and analysis, you can identify what's working, what needs improvement, and how to maximize the impact on code quality and delivery speed.

Key Metrics for Automated Integration Testing

While basic metrics like test counts provide a starting point, truly effective measurement requires tracking more insightful data points:

- Test Execution Time: The runtime of your complete integration test suite directly impacts feedback cycles and testing frequency. This is especially important for integration tests that coordinate multiple systems and can be time-intensive.

- Test Coverage: While 100% coverage isn't always feasible, aim to thoroughly test critical system interactions where integration issues commonly occur. Focus coverage on key component interfaces and data flows.

- Defect Escape Rate: Track how many bugs make it to production that integration tests should have caught. This helps evaluate if your tests effectively prevent issues from reaching users.

- Test Stability: Monitor how often tests fail due to environmental issues rather than actual code problems. Unreliable "flaky" tests reduce confidence and make it harder to spot real defects. Maintaining test stability is essential for reliable results.
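Two of these metrics are straightforward to compute from data most teams already have. The sketch below assumes one common definition of defect escape rate (escaped defects divided by all defects found) and treats a test as flaky when it both passed and failed on the same commit. Both definitions and the input shapes are assumptions to adapt.

```python
def defect_escape_rate(escaped_to_production, caught_by_tests):
    """Share of all known defects that reached production."""
    total = escaped_to_production + caught_by_tests
    return escaped_to_production / total if total else 0.0


def flaky_tests(history):
    """Find tests with mixed results on identical code.

    history maps a test name to a list of (commit_sha, passed) results.
    """
    flaky = []
    for test, runs in history.items():
        outcomes_by_commit = {}
        for sha, passed in runs:
            outcomes_by_commit.setdefault(sha, set()).add(passed)
        # Both True and False for one commit means the code did not change
        # between runs, so the difference came from somewhere else.
        if any(len(outcomes) > 1 for outcomes in outcomes_by_commit.values()):
            flaky.append(test)
    return sorted(flaky)
```

Tracking these numbers per release makes it possible to see whether changes to the suite are moving quality in the right direction.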

Analyzing Test Results and Identifying Improvement Opportunities

Raw metrics only become valuable through thoughtful analysis that reveals opportunities to improve. For example, if many defects escape to production, you may need to expand test coverage or redesign test cases. Long execution times could point to inefficient test code or resource constraints. Regular review of test failures and patterns helps identify and fix unstable tests.

This data-driven approach enables targeted improvements. You might optimize slow test scripts after analyzing execution bottlenecks. Understanding which types of defects slip through helps focus testing on higher-risk areas.

Building a Culture of Testing Excellence

Creating lasting improvement requires more than tools and numbers - it needs cultural change. Teams must view testing as a core part of development, not just a final checkbox. Foster an environment of continuous learning where teams regularly evaluate practices, share knowledge, and strive to improve.

Recognize and celebrate testing achievements to reinforce its importance and motivate ongoing enhancement. Seamlessly integrate automated tests into your CI/CD workflow using tools like Mergify to ensure consistent execution and feedback. Even small gains in stability or speed compound over time into major improvements in quality and delivery.

Driving Continuous Improvement With Mergify

An efficient CI/CD pipeline forms the foundation for effective automated testing. Mergify helps teams reduce CI costs, strengthen code security, and eliminate developer friction. By automating pull request updates and strategically batching CI runs, Mergify minimizes conflicts and maintains code stability. This lets teams focus on improving test quality rather than managing CI/CD complexity. Visit https://mergify.com to learn how Mergify can help your testing efforts succeed.