Integration Tests vs Unit Tests: The Complete Guide to Modern Testing

Software teams often debate the right mix of testing types for their projects. While the classic testing pyramid suggests focusing heavily on unit tests (70%), moderately on integration tests (20%), and lightly on end-to-end tests (10%), real-world applications demand a more flexible approach. Let's explore how to build an effective testing strategy that matches your team's actual needs.

Why the Traditional Pyramid Needs a Modern Update

The original testing pyramid made sense when applications were simpler - unit tests were fast, cheap, and caught bugs early. But today's software landscape looks very different. Modern apps often involve multiple services, external APIs, and complex user interfaces that can't be properly tested through unit tests alone.

Take a typical e-commerce site that integrates with payment providers and shipping services. Unit tests might verify that your checkout logic works, but they won't catch issues with API timeouts or data sync problems between services. This is where integration tests prove invaluable. Similarly, with rich web applications becoming the norm, end-to-end tests play a bigger role in ensuring features work as users expect them to.

Finding the Right Balance: Unit Tests vs. Integration Tests

The key is finding the right mix of test types for your specific application. Think of it like building a house - unit tests check individual components like electrical wiring and plumbing, while integration tests verify that rooms function together as living spaces. End-to-end tests ensure the whole house meets the owners' needs.

Unit tests excel at quickly catching bugs in isolated pieces of code. They're great for core business logic and utility functions. But they can't replicate how components interact in production. Integration tests fill this gap by verifying that different parts of your system work together correctly. While they take longer to run, they catch issues that unit tests miss.

Practical Frameworks for Assessment and Improvement

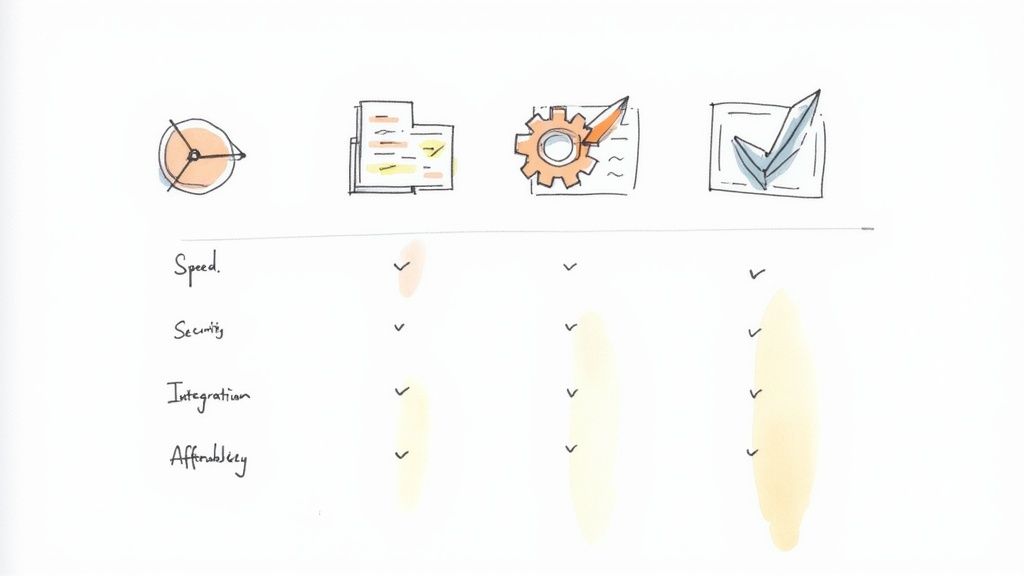

When evaluating your testing approach, consider these key factors:

- Application Complexity: More moving parts and integrations usually require more integration testing

- Risk Tolerance: Critical systems may need extensive end-to-end testing despite higher costs

- Team Experience: Teams with strong unit testing skills can often rely more heavily on that approach

- Development Speed: Find a balance that catches bugs without slowing down releases

Tools like Mergify can help optimize your testing pipeline by automating test runs and providing insights into test performance. With smart automation handling routine merges, developers can focus on writing meaningful tests at all levels. This allows teams to adapt their testing mix based on real needs rather than following rigid rules. The goal is thorough test coverage that keeps development moving smoothly.

Mastering Unit Tests: Beyond the Basics

A strong testing strategy starts with effective unit tests. Passing tests alone don't guarantee bug-free code, so the goal is not just tests that pass but tests that actively improve code quality and speed up development. Let's explore how to make unit tests truly valuable.

Avoiding Common Pitfalls: Over-Mocking and Brittle Tests

One of the biggest challenges developers face is finding the right balance with mocking. While mocks help isolate code for testing, too much mocking can be counterproductive. Consider a user authentication service test - if you mock the database to always return successful logins, you'll miss real issues in the database interaction logic.
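One way to avoid the "always succeeds" mock is to use a small in-memory fake that keeps real lookup behavior. The sketch below is only an illustration: AuthService, InMemoryUserStore, and hash_password are hypothetical names, and the plain SHA-256 hash is a simplification for the example.

```python
import hashlib
import unittest


def hash_password(password: str) -> str:
    # Illustration only; real systems should use a salted, slow hash.
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


class InMemoryUserStore:
    """Fake store that keeps real lookup semantics instead of a canned answer."""

    def __init__(self):
        self._hashes = {}

    def add_user(self, username, password):
        self._hashes[username] = hash_password(password)

    def get_password_hash(self, username):
        return self._hashes.get(username)  # None for unknown users


class AuthService:
    def __init__(self, store):
        self._store = store

    def login(self, username, password):
        stored = self._store.get_password_hash(username)
        return stored is not None and stored == hash_password(password)


class AuthServiceTest(unittest.TestCase):
    def setUp(self):
        store = InMemoryUserStore()
        store.add_user("alice", "s3cret")
        self.auth = AuthService(store)

    def test_valid_credentials_log_in(self):
        self.assertTrue(self.auth.login("alice", "s3cret"))

    def test_wrong_password_is_rejected(self):
        self.assertFalse(self.auth.login("alice", "wrong"))

    def test_unknown_user_is_rejected(self):
        self.assertFalse(self.auth.login("bob", "s3cret"))
```

Because the fake can return "no such user", the failure paths get exercised too, which a mock hard-wired to succeed would hide.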

Tests that are too closely tied to implementation details create another problem. These "brittle" tests break whenever you change the code's internal workings, even if the core functionality stays the same. For example, a test might fail just because you changed how you store data internally, though the data processing still works perfectly. This constant need to update tests slows down development significantly.
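To make the difference concrete, here is a minimal sketch with a hypothetical Cart class. The test asserts only on what callers can observe (the total), so the internal storage can change without breaking it.

```python
import unittest


class Cart:
    def __init__(self):
        # Internal detail: this could become a list or a database row later.
        self._quantities = {}
        self._prices = {}

    def add(self, sku, unit_price_cents, quantity=1):
        self._prices[sku] = unit_price_cents
        self._quantities[sku] = self._quantities.get(sku, 0) + quantity

    def total_cents(self):
        return sum(self._prices[sku] * qty for sku, qty in self._quantities.items())


class CartTest(unittest.TestCase):
    def test_total_sums_price_times_quantity(self):
        cart = Cart()
        cart.add("pen", 200, quantity=3)
        cart.add("book", 1000)
        # Asserts on observable behavior only.
        self.assertEqual(cart.total_cents(), 1600)
        # A brittle version would assert on cart._quantities directly and
        # break as soon as the storage layout changed.
```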

Techniques for Effective Unit Testing: Edge Cases and Test Data

The best unit tests focus on the challenging scenarios where bugs like to hide - the edge cases and boundary conditions. Take a discount calculation function - you'll want to test it with zeros, negative numbers, and very large values to ensure it handles unexpected inputs correctly. These thorough checks help catch subtle bugs before they reach production.
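As a rough sketch of what those edge-case checks might look like, assume a hypothetical apply_discount function that works in integer cents and rejects invalid input. The rules it enforces are assumptions made for the example, not a prescribed design.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Return the discounted price in cents, rounded down."""
    if price_cents < 0:
        raise ValueError("price_cents must not be negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


class ApplyDiscountEdgeCases(unittest.TestCase):
    def test_zero_price_stays_zero(self):
        self.assertEqual(apply_discount(0, 25), 0)

    def test_zero_percent_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(1999, 0), 1999)

    def test_full_discount_is_free(self):
        self.assertEqual(apply_discount(1999, 100), 0)

    def test_negative_price_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(-1, 10)

    def test_percent_above_100_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(1000, 101)

    def test_very_large_price(self):
        self.assertEqual(apply_discount(10**18, 50), 5 * 10**17)
```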

Smart test data management makes a big difference too. Instead of embedding test data directly in your test code, use data-driven testing approaches that separate test cases from test logic. This makes it much easier to add or modify test scenarios without touching the core test code. Tools like parameterized tests work especially well for handling multiple test cases, which is common in real applications.
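Here is one possible shape for this, using unittest's subTest so that each row of a data table is reported separately. The loyalty_tier function and its thresholds are made up for the example.

```python
import unittest


def loyalty_tier(points: int) -> str:
    if points < 0:
        raise ValueError("points must not be negative")
    if points < 100:
        return "bronze"
    if points < 500:
        return "silver"
    return "gold"


# Test data lives in one table, separate from the test logic below.
TIER_CASES = [
    (0, "bronze"),
    (99, "bronze"),
    (100, "silver"),
    (499, "silver"),
    (500, "gold"),
]


class LoyaltyTierTest(unittest.TestCase):
    def test_tier_boundaries(self):
        for points, expected in TIER_CASES:
            with self.subTest(points=points):
                self.assertEqual(loyalty_tier(points), expected)
```

Adding a scenario now means adding one row to TIER_CASES. Teams using pytest can get a similar effect with pytest.mark.parametrize.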

Writing Tests as Living Documentation

Unit tests can serve as excellent documentation when written thoughtfully. Clear, well-structured tests show new team members exactly how different parts of the system should behave. A test named test_invalid_user_login_returns_error immediately tells you what should happen when someone tries to log in with bad credentials. Amazon Web Services notes that unit tests typically make up about 70% of testing efforts, so they are often the largest body of executable examples a team has for understanding code behavior.

Taking this detailed approach to unit testing does more than just catch bugs - it helps teams develop faster and with more confidence. By writing meaningful tests that cover edge cases and avoid common traps, developers can make changes boldly while minimizing regression risks. This foundation of solid unit tests sets the stage perfectly for effective integration testing, which we'll cover next.

Integration Testing That Actually Works

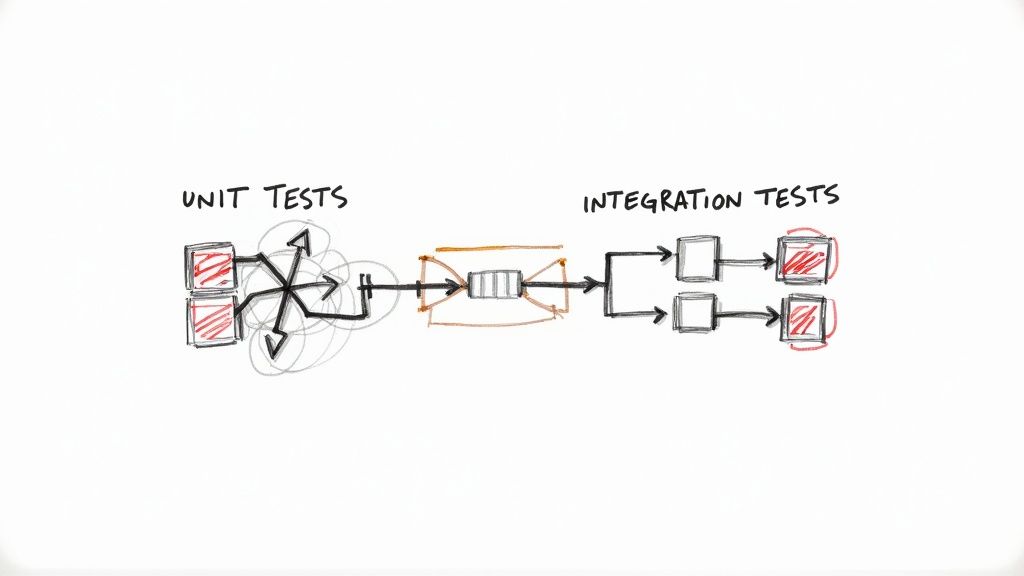

While unit tests verify individual code components, integration testing ensures these pieces work together smoothly as a complete system. This additional layer of testing becomes especially important when building complex applications that rely on multiple services, APIs, and microservices. Let's explore how to make integration testing practical and effective.

Managing Test Environments and Dependencies

One of the biggest challenges in integration testing is handling test environments and external dependencies effectively. For instance, if your application processes payments through a third-party gateway, you'll need to simulate those interactions during testing. Service virtualization offers a solution by creating mock versions of external services that mirror real behavior. Tools like Docker help create consistent test environments that minimize setup headaches and let developers focus on writing meaningful tests rather than fighting configuration issues.
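Dedicated service virtualization tools exist, but the core idea can be sketched with the standard library alone: start a local stub that answers like the external service, then point the client at it. Everything here is an assumption for illustration, including the /charge endpoint, the JSON fields, and the rule that large amounts are declined.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeGatewayHandler(BaseHTTPRequestHandler):
    """Stub for a hypothetical payment gateway's POST /charge endpoint."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        # Assumed stub rule: amounts above 100000 cents are declined.
        approved = payload.get("amount_cents", 0) <= 100_000
        body = json.dumps({"status": "approved" if approved else "declined"}).encode()
        self.send_response(200 if approved else 402)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep test output quiet


def charge(base_url, amount_cents):
    """Client code under test: returns the gateway's status string."""
    request = urllib.request.Request(
        f"{base_url}/charge",
        data=json.dumps({"amount_cents": amount_cents}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # An empty ProxyHandler bypasses any configured proxy for the local stub.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(request, timeout=5) as response:
            return json.loads(response.read())["status"]
    except urllib.error.HTTPError as error:
        return json.loads(error.read())["status"]


def run_with_fake_gateway(amount_cents):
    server = HTTPServer(("127.0.0.1", 0), FakeGatewayHandler)  # port 0 picks a free port
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        return charge(f"http://127.0.0.1:{server.server_port}", amount_cents)
    finally:
        server.shutdown()
        server.server_close()
```

Because the client only receives a base URL, the same charge function can talk to the stub in tests and to the real provider in production.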

Building Reliable Test Suites

Good integration tests should catch real problems that unit tests miss, but they come with their own challenges. Since integration tests cover more complex interactions between components, pinpointing the exact cause of failures can be tricky. The key is writing focused tests that target specific interaction points between components. Including detailed logging in your tests makes debugging much easier when issues arise.

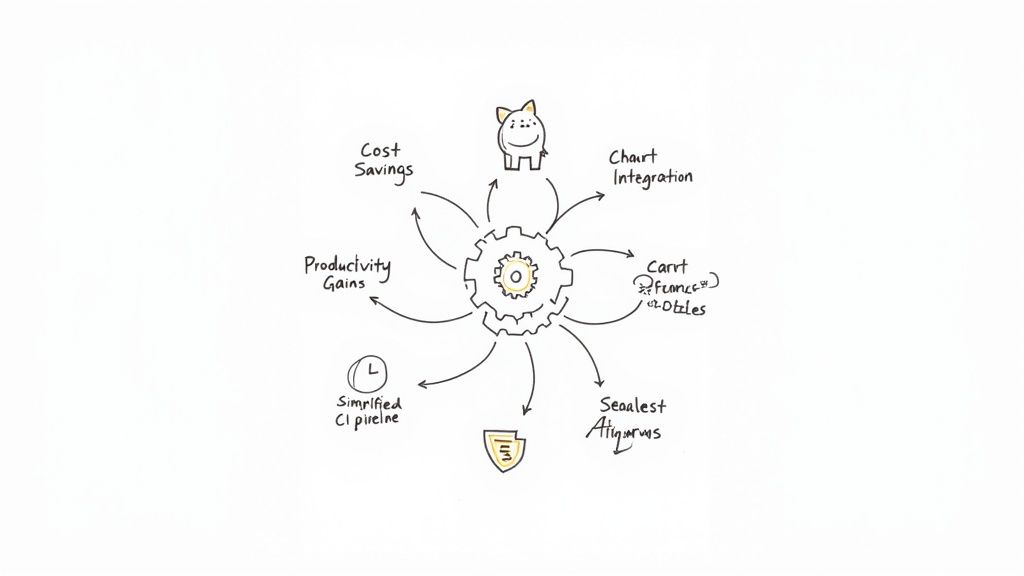

Integration tests also tend to run slower than unit tests, especially in larger systems. This can slow down development if you try to run all integration tests frequently. To address this, identify your most critical integration points and prioritize testing those areas first. Mergify and similar tools can help automate test runs and provide insights into test performance, allowing teams to optimize their continuous integration pipeline for efficient testing.

Implementing Integration Tests for Real Confidence

Think of integration testing like assembling a car - unit tests confirm individual parts work, while integration tests verify everything functions correctly when put together. These tests provide real confidence that your system's components interact properly.

Following AWS guidelines, integration tests typically make up 10-20% of a testing strategy, compared to unit tests at 70%. While fewer in number, integration tests play a crucial role in verifying system behavior. The smaller share reflects their higher cost to write and run, and aiming them at key interaction points maximizes their value. Well-structured integration tests combined with solid unit testing give you valuable insights into how your application performs in realistic scenarios. Together, they create a strong foundation for comprehensive end-to-end testing that ensures your complete system works as intended.

Smart Resource Allocation in Testing

Testing requires careful planning and resource allocation, just like any other business investment. Development teams need to make strategic decisions about where to focus their testing efforts to get the best results. This means weighing the benefits of thorough testing coverage against practical constraints like time, budget, and ongoing maintenance costs. Let's examine how successful teams approach these decisions.

Balancing Coverage and Maintenance Burden

Every testing team faces tough choices between maximizing test coverage and keeping the test suite manageable. While complete test coverage sounds great in theory, maintaining an extensive set of tests requires significant ongoing work. Tests that are too closely tied to implementation specifics often break when the code changes, creating extra maintenance overhead. But testing too little leaves room for serious bugs to slip through. The solution is to concentrate testing efforts on the most critical and complex parts of your system - areas where failures would have the biggest impact.

Managing Test Execution Time in CI/CD Pipelines

The time it takes to run tests becomes crucial when using continuous integration and delivery. Lengthy test suites can slow down the entire development process and delay releases. Tools like Mergify help optimize testing workflows by automating test execution and tracking performance metrics. This helps teams spot slow tests that need optimization. Finding the right mix of test types also affects speed - unit tests run quickly and should make up most of your suite (around 70% according to AWS), while slower but valuable integration tests typically cover 10-20% of testing.

Identifying Which Parts of Your Codebase Need Which Types of Tests

Different parts of your codebase need different testing approaches. Core business logic, utility functions, and individual components work well with unit tests since these tests are quick to create and maintain while catching bugs early. Integration tests make more sense for checking how different parts of the system work together, like testing database and business logic interactions. The key is matching the test type to what you're trying to verify.
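A database-plus-business-logic check might look like the sketch below, which runs a rule against a real SQLite engine held in memory. The stock table and the reserve function are hypothetical, and SQLite stands in for whatever database your application actually uses.

```python
import sqlite3
import unittest


def create_schema(conn):
    conn.execute("CREATE TABLE stock (sku TEXT PRIMARY KEY, quantity INTEGER NOT NULL)")


def reserve(conn, sku, quantity):
    """Business rule: reserve stock only if enough is available."""
    with conn:  # commits on success, rolls back on exception
        cursor = conn.execute(
            "UPDATE stock SET quantity = quantity - ? WHERE sku = ? AND quantity >= ?",
            (quantity, sku, quantity),
        )
        return cursor.rowcount == 1


class ReserveStockIntegrationTest(unittest.TestCase):
    def setUp(self):
        # A fresh in-memory database per test keeps tests independent.
        self.conn = sqlite3.connect(":memory:")
        create_schema(self.conn)
        self.conn.execute("INSERT INTO stock VALUES ('pen', 5)")
        self.conn.commit()

    def tearDown(self):
        self.conn.close()

    def quantity(self, sku):
        row = self.conn.execute("SELECT quantity FROM stock WHERE sku = ?", (sku,)).fetchone()
        return row[0]

    def test_reserve_decrements_stock(self):
        self.assertTrue(reserve(self.conn, "pen", 3))
        self.assertEqual(self.quantity("pen"), 2)

    def test_reserve_fails_when_stock_is_short(self):
        self.assertFalse(reserve(self.conn, "pen", 6))
        self.assertEqual(self.quantity("pen"), 5)
```

A unit test with a mocked connection could not tell you whether the SQL itself is right. This test can, at the cost of a slightly slower run.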

Measuring Testing ROI and Making Data-Driven Decisions

Your testing strategy should be guided by real data and metrics. By tracking things like bug rates, test coverage levels, and execution times, you can see which testing approaches are most effective. This helps you make better decisions about where to invest testing resources. For example, if integration tests consistently catch important issues, it may be worth dedicating more time there even if it increases total test runtime. Regular evaluation of these metrics helps you improve your testing approach over time and shows whether your tests are actually improving code quality and development speed.

Building Your Testing Strategy From the Ground Up

An effective testing strategy goes beyond simply following prescribed testing ratios. Success comes from understanding the distinct value that unit tests and integration tests provide, then crafting an approach that maps to your specific project needs. This section explores practical ways to enhance your testing practices while maintaining development velocity.

Laying the Foundation: Gradual Improvement

Creating better testing practices doesn't require a complete overhaul of your current approach. Begin by evaluating your existing tests - are your unit tests properly isolating components? Do your integration tests effectively validate system interactions? Map out areas that need attention and rank them based on potential impact. For example, if your app heavily depends on third-party APIs, you might start by strengthening integration tests around those external connections.

Mergify can help enable this incremental progress by automating test execution and delivering insights about test performance in your CI/CD pipeline. When routine tasks are automated, developers can focus their energy on writing meaningful tests that add real value.

Establishing Effective Testing Patterns

After identifying key improvement areas, create clear testing guidelines for your team. This includes setting unit test coverage goals, determining critical integration points, and deciding on the right amount of end-to-end testing. For instance, let unit tests make up the bulk of your suite (around 70% of tests according to AWS) and concentrate them on core business logic and utility functions to verify that components work correctly in isolation.

For integration tests, focus on testing essential component interactions like database operations and API calls. This targeted strategy helps maximize the value of integration tests (which typically make up 10-20% of testing according to AWS) without unnecessarily extending test runtime. Clear patterns help maintain consistency across your entire test suite.

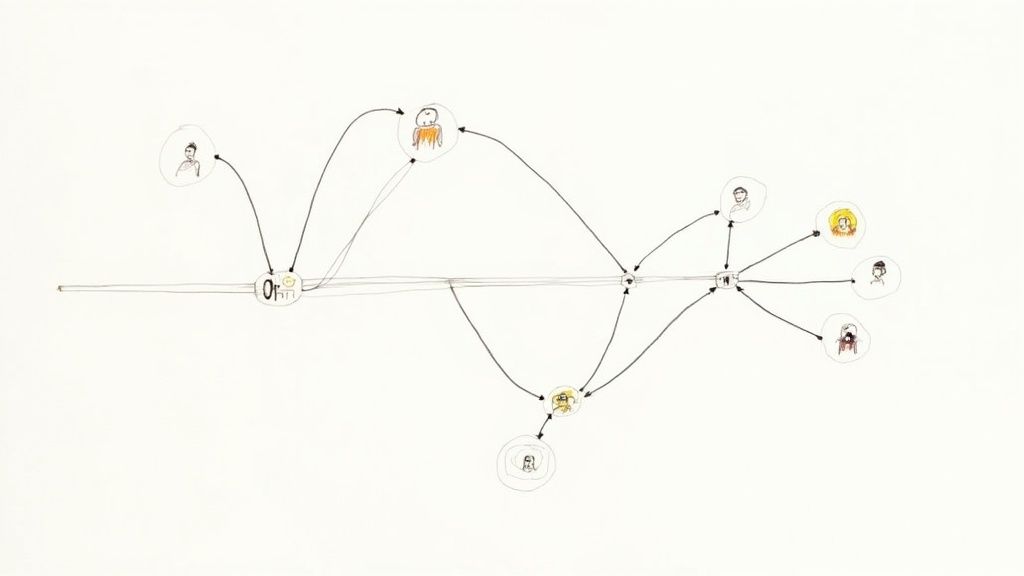

Building Buy-in and a Culture of Quality

For a testing strategy to succeed, the whole team needs to be on board. Create an environment where quality is a shared responsibility, not just relegated to QA. Help developers understand the benefits of different test types and give them the skills to write effective tests. Make testing practices a regular discussion topic in team meetings to encourage ongoing feedback and improvements.

Show concrete examples of how thorough testing has caught issues before they reached users - this demonstrates the direct value of testing and motivates team members to actively participate in quality efforts.

Scaling Your Testing Strategy

Your testing approach needs to evolve as your application grows. What works for a small project may not scale for enterprise needs. Regularly assess and adjust your strategy based on your project's changing requirements. This might mean adopting new testing tools or refining existing processes.

For instance, as your application becomes more complex, you may need to invest in service virtualization or similar advanced testing approaches to properly handle dependencies and simulate production scenarios. By proactively adapting your testing strategy, you maintain high quality standards throughout your application's growth. This ensures your tests continue providing value by increasing confidence in your codebase and enabling reliable releases.

Overcoming Common Testing Roadblocks

Teams regularly face key challenges when building comprehensive testing strategies that include both unit and integration tests. From inconsistent test results to data management complexities and scaling issues, testing can feel like navigating an obstacle course. Let's examine practical approaches that development teams use to tackle these common testing hurdles.

Taming Flaky Tests: Strategies for Stability

Tests that pass and fail randomly without code changes quickly become a major headache for development teams. A primary culprit is shared state between tests - for example, when multiple tests modify the same database table, causing results to vary based on execution order. To prevent this, isolate each test by resetting environments between runs or using separate data sets. Asynchronous code poses another challenge that can destabilize tests. Waiting explicitly for an async operation to finish, or polling for the expected result with a timeout instead of sleeping for a fixed interval, helps prevent race conditions that lead to inconsistent results.
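For the async case, one common pattern is a small polling helper that waits for a condition with a deadline. The sketch below is an assumption-laden illustration: wait_until and start_background_job are hypothetical helpers, and the background thread stands in for any asynchronous work.

```python
import threading
import time


def wait_until(condition, timeout=2.0, interval=0.01):
    """Poll `condition` until it returns True or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    return condition()  # one last check at the deadline


def start_background_job(results):
    """Hypothetical async work: appends a result after a short delay."""
    def work():
        time.sleep(0.05)
        results.append("done")

    thread = threading.Thread(target=work, daemon=True)
    thread.start()
    return thread


def test_job_eventually_reports_done():
    results = []  # fresh state for this test, nothing shared with others
    start_background_job(results)
    assert wait_until(lambda: results == ["done"])
```

The test finishes as soon as the condition holds and fails only after the full timeout, so it is both faster and more stable than a fixed sleep.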

Mastering Test Data: Effective Management Techniques

Good test data management is essential for reliable testing but often proves tricky to get right. Hard-coding test data directly in tests makes them fragile and hard to maintain over time. A better approach is data-driven testing, which keeps test logic separate from test data. This lets you run identical test logic against multiple data scenarios - for instance, testing a registration form with valid emails, invalid emails, and missing emails stored in external files. Test data factories and builders also help by programmatically generating realistic test data for complex objects, making test setup cleaner and maintenance easier.
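Both ideas can be sketched together: cases loaded from data, and a factory that supplies valid defaults so each test states only what it cares about. The Registration type, the validation rule, and the JSON layout are all assumptions, and the inline JSON string stands in for an external file.

```python
import json
import unittest
from dataclasses import dataclass

# Stand-in for the contents of an external test data file.
EMAIL_CASES_JSON = """
[
  {"email": "user@example.com", "valid": true},
  {"email": "not-an-email", "valid": false},
  {"email": "", "valid": false}
]
"""


@dataclass
class Registration:
    name: str
    email: str
    accepted_terms: bool


def make_registration(**overrides):
    """Factory: valid defaults, with per-test overrides."""
    fields = {"name": "Test User", "email": "user@example.com", "accepted_terms": True}
    fields.update(overrides)
    return Registration(**fields)


def is_valid_registration(reg):
    # Deliberately simple email check, for illustration only.
    local, sep, domain = reg.email.partition("@")
    email_ok = bool(local) and sep == "@" and "." in domain
    return bool(reg.name) and email_ok and reg.accepted_terms


class RegistrationTest(unittest.TestCase):
    def test_email_cases_from_data(self):
        for case in json.loads(EMAIL_CASES_JSON):
            with self.subTest(email=case["email"]):
                reg = make_registration(email=case["email"])
                self.assertEqual(is_valid_registration(reg), case["valid"])

    def test_terms_must_be_accepted(self):
        reg = make_registration(accepted_terms=False)
        self.assertFalse(is_valid_registration(reg))
```

If Registration later gains a field, only make_registration needs updating rather than every test that builds one.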

Maintaining Quality at Scale: Long-Term Strategies

As codebases grow, keeping test suites effective becomes increasingly difficult. One key strategy is test prioritization - focus first on thoroughly testing the most business-critical features and core functionality with both unit and integration tests. This targeted approach helps teams get the most value from limited testing resources. For example, prioritize testing main user workflows and core business logic ahead of less critical features. Regular test suite maintenance is also crucial - removing duplicate tests, improving test clarity, and addressing technical debt helps preserve the long-term value of tests as documentation of intended system behavior.

Automating for Efficiency: Tools and Techniques

Test automation is essential for testing effectively at scale. Tools like Mergify can significantly improve testing workflows by automating test runs and code merging and by providing visibility into test performance. This frees developers to focus on writing valuable tests rather than managing testing infrastructure. The automation helps catch integration issues early, even before code merges, and ensures smooth test execution. Mergify's automated merge process gives developers more time for creating robust tests, leading to better code quality and faster releases.