7 Essential Infrastructure as Code Best Practices for 2025

The era of manual server configuration and deployment uncertainty is over. Infrastructure as Code (IaC) has transformed how we build, manage, and scale digital environments by treating infrastructure with the same rigor and discipline as application code. Adopting an IaC tool like Terraform, Pulumi, or CloudFormation is a critical first step, but true mastery comes from implementing a consistent, battle-tested methodology. Without a solid foundation of best practices, IaC can create more problems than it solves, leading to brittle, insecure, and unmanageable systems.

This guide provides a comprehensive roadmap for success, diving deep into seven crucial infrastructure as code best practices. We move beyond generic advice to deliver actionable strategies, real-world code snippets, and practical tips for integration into your CI/CD pipelines. You will learn not just what to do, but how and why each practice is essential for building a robust, scalable, and secure foundation for your applications.

Each section is designed to be a self-contained guide to a specific principle, covering core concepts and implementation details for:

- Version Control Everything: Treating infrastructure definitions as a single source of truth.

- Modularize and Reuse Code: Building composable, reusable infrastructure components.

- Implement Proper State Management: Protecting and managing your infrastructure's state file.

- Environment Consistency and Isolation: Creating predictable and isolated environments.

- Automated Testing and Validation: Integrating quality gates into your IaC workflow.

- Secrets and Sensitive Data Management: Handling credentials and secrets securely.

- Immutable Infrastructure Patterns: Minimizing configuration drift and ensuring reliability.

By mastering these techniques, your team can transform infrastructure management from a reactive bottleneck into a proactive, strategic advantage.

1. Version Control Everything

The absolute cornerstone of modern infrastructure as code best practices is to version control everything. This means treating all your infrastructure definitions, configuration files, scripts, and even related documentation with the same rigor and discipline as your application source code. By storing these assets in a Version Control System (VCS) like Git, you create a single source of truth that is auditable, collaborative, and reversible.

This foundational practice transforms infrastructure management from an opaque, manual process into a transparent, programmatic one. Every change, whether it's provisioning a new server, modifying a firewall rule, or updating a Kubernetes deployment, is captured as a commit. This historical record is invaluable for debugging, auditing security compliance, and understanding the evolution of your environment over time.

Why This Practice is Essential

Placing your infrastructure code under version control unlocks several critical capabilities. It enables peer review through pull requests (PRs), ensuring that changes are vetted for errors and adhere to team standards before being applied. If a change introduces an issue, you can quickly revert to a previously known good state, minimizing downtime and business impact.

Companies like Netflix and Airbnb have pioneered this approach. Netflix manages its massive global infrastructure using Git repositories for all its Terraform configurations, while Airbnb enforces strict PR review processes on GitHub to maintain stability and security. This discipline is not just for tech giants; any organization can adopt it to achieve greater control and reliability.

Key Insight: Version control is the prerequisite for automation. Without a reliable, versioned source of truth, building robust CI/CD pipelines for infrastructure is nearly impossible.

Actionable Implementation Tips

To effectively implement version control for your infrastructure, follow these specific strategies:

- Establish Clear Branching Strategies: Adopt a branching model like GitFlow or a simpler trunk-based development model. Define clear rules for feature branches, bug fixes, and releases to keep the repository organized and prevent conflicting changes.

- Enforce Meaningful Commit Messages: Implement a commit message convention (e.g., Conventional Commits) that clearly explains the why behind a change, not just the what. A message like `feat(networking): add egress rule for payment processor API` is far more useful than `updated firewall`.

- Utilize `.gitignore` Diligently: Your repository should only contain code, not state files, temporary credentials, or sensitive data. Use a `.gitignore` file to explicitly exclude files like `terraform.tfstate`, `.tfvars` secrets files, and provider-specific cache directories.

- Protect Key Branches: Use branch protection rules in your VCS (e.g., GitHub, GitLab) to enforce required status checks and PR reviews before any code can be merged into critical branches like `main` or `production`.

- Integrate Pre-Commit Hooks: Use tools like `pre-commit` to automatically lint, format, and validate your IaC code before it's even committed. This catches simple errors early and maintains a consistent codebase.
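To make the commit message tip concrete, here is a minimal sketch of a check that could run from a Git `commit-msg` hook. The list of allowed types and the scope format are assumptions chosen for illustration, not rules taken from any particular team or tool.

```python
import re

# Assumed set of commit types; teams usually tailor this list.
ALLOWED_TYPES = ("feat", "fix", "docs", "chore", "refactor", "test", "ci")

# Header shape: type, optional (scope), optional "!" for breaking changes,
# then ": " followed by a non-empty description.
HEADER_RE = re.compile(
    r"^(?P<type>" + "|".join(ALLOWED_TYPES) + r")"
    r"(\((?P<scope>[a-z0-9-]+)\))?"
    r"(?P<breaking>!)?: (?P<description>\S.*)$"
)


def is_conventional(message: str) -> bool:
    """Return True if the first line of the message matches the header shape."""
    lines = message.splitlines()
    return bool(lines) and HEADER_RE.match(lines[0]) is not None
```

With this sketch, the message `feat(networking): add egress rule for payment processor API` passes and `updated firewall` is rejected. A hook script would read the message file that Git passes as its first argument and exit non-zero when the check fails.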

By treating infrastructure as code with this level of discipline, you lay the groundwork for a scalable, secure, and highly automated environment. For a deeper dive into the fundamentals of version control, you can explore this comprehensive developer's guide to Git.

2. Modularize and Reuse Code

A core tenet of effective infrastructure as code best practices is to modularize and reuse your code. Instead of writing monolithic configurations for your entire infrastructure, break it down into smaller, reusable, and composable modules. Each module should encapsulate a specific piece of functionality, like a virtual network, a database cluster, or a Kubernetes node pool, creating a standardized building block that can be deployed repeatedly.

This approach transforms your infrastructure code from a single-use script into a catalog of reliable, well-defined components. By abstracting away complexity, you can provision complex environments with confidence, knowing that each underlying piece has been tested and validated. This dramatically improves maintainability, reduces code duplication, and accelerates the deployment of new services and environments.

Why This Practice is Essential

Modularization prevents the "copy-paste" anti-pattern that leads to configuration drift and maintenance nightmares. When you need to update a component, you modify the source module once, and the change propagates to every environment that uses it. This ensures consistency and makes it easier to enforce security policies, naming conventions, and architectural standards across your organization.

Companies like Gruntwork built their entire business around this concept, providing a library of production-grade, reusable Terraform modules. Similarly, Google's Cloud Foundation Toolkit and Microsoft's Azure Landing Zones leverage modularity to help organizations deploy secure and scalable cloud foundations. This practice enables teams to focus on application logic rather than reinventing infrastructure primitives.

Key Insight: Modules are the functions of infrastructure. They accept inputs (variables), perform an action (create resources), and return outputs, enabling you to build complex systems from simple, testable units.
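The analogy can be shown with a small Python sketch. It is not Terraform code: `network_module`, its parameters, and the output keys are hypothetical names, and the function only computes values instead of creating resources. The point is the shape: declared inputs, one encapsulated responsibility, and explicit outputs that other components can consume.

```python
import ipaddress
from itertools import islice
from typing import Dict, List, Union


def network_module(
    name: str, cidr: str, subnet_count: int, subnet_prefix: int = 24
) -> Dict[str, Union[str, List[str]]]:
    """Hypothetical 'module': carve subnets out of a network CIDR."""
    network = ipaddress.ip_network(cidr)
    # Take only as many subnets as requested from the generator.
    subnets = list(islice(network.subnets(new_prefix=subnet_prefix), subnet_count))
    if len(subnets) < subnet_count:
        raise ValueError(f"{cidr} cannot hold {subnet_count} /{subnet_prefix} subnets")
    # Outputs: the only values callers are allowed to depend on.
    return {
        "network_name": f"{name}-vpc",
        "subnet_cidrs": [str(subnet) for subnet in subnets],
    }
```

Because the unit has a narrow interface, it can be tested in isolation, which is the same property that makes real IaC modules safe to reuse.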

Actionable Implementation Tips

To effectively modularize your infrastructure code, consider these strategies:

- Start Simple and Abstract Later: Begin by building a working configuration, then identify logical groupings of resources that can be refactored into a module. Don't over-engineer from the start; let patterns emerge naturally.

- Establish a Private Module Registry: For organizational use, set up a private registry (like Terraform Cloud/Enterprise, GitLab, or Artifactory) to host and version your internal modules. This makes discovery and consumption easy for all teams.

- Use Semantic Versioning: Treat your modules like software artifacts. Use semantic versioning (e.g., `v1.2.0`) to signal breaking changes, new features, and bug fixes, allowing consumers to adopt updates safely.

- Document Inputs, Outputs, and Examples: Every module should have a `README.md` that clearly documents all input variables, outputs, and provides at least one clear usage example. This is crucial for adoption and long-term maintenance.

- Implement Automated Module Testing: Use tools like `Terratest` or `Kitchen-Terraform` to write automated tests for your modules. This validates their functionality and ensures that changes don't introduce regressions before they are published.
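The semantic versioning tip can be illustrated with a short sketch that classifies a module upgrade by which part of the version changed. It assumes plain `MAJOR.MINOR.PATCH` tags with an optional leading `v`; pre-release and build suffixes are deliberately not handled, and the function names are hypothetical.

```python
from typing import Tuple


def parse_version(tag: str) -> Tuple[int, int, int]:
    """Parse 'v1.2.0' or '1.2.0' into (major, minor, patch)."""
    major, minor, patch = tag.lstrip("v").split(".")
    return int(major), int(minor), int(patch)


def classify_upgrade(current: str, candidate: str) -> str:
    """Describe what moving from `current` to `candidate` signals to consumers."""
    cur, new = parse_version(current), parse_version(candidate)
    if new <= cur:
        return "not-an-upgrade"
    if new[0] != cur[0]:
        return "breaking"   # major bump: review before adopting
    if new[1] != cur[1]:
        return "feature"    # minor bump: backwards-compatible additions
    return "fix"            # patch bump: bug fixes only
```

A pipeline could use a check like this to auto-approve "fix" and "feature" upgrades while routing "breaking" ones to a human reviewer.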

By embracing a modular design, you create a more manageable, scalable, and resilient infrastructure codebase. For an excellent example of a public module ecosystem, you can explore the official Terraform Registry.

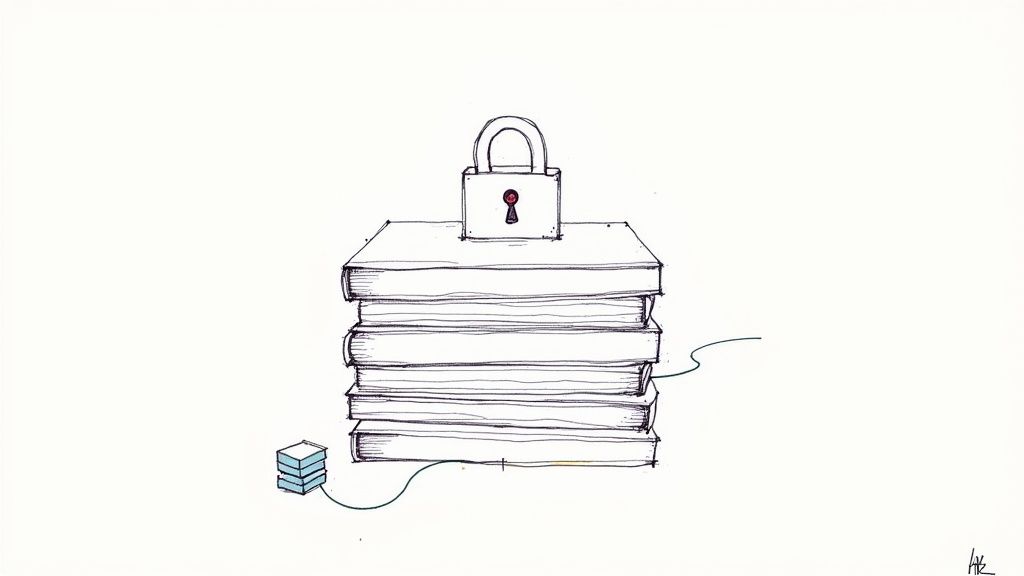

3. Implement Proper State Management

A critical aspect of infrastructure as code best practices is implementing proper state management. State is the mechanism IaC tools like Terraform use to map real-world resources to your configuration. It's a snapshot of your managed infrastructure, tracking resource metadata and dependencies. Neglecting state management can lead to resource drift, conflicting changes, and catastrophic data loss.

Effective state management means moving the state file from a local developer machine to a secure, remote, and shared location. This remote backend acts as the single source of truth for the infrastructure's current condition, preventing a scenario where multiple team members have different, outdated versions of the state. This practice is fundamental for collaborative, safe, and predictable infrastructure automation.

Why This Practice is Essential

Centralizing your infrastructure state is non-negotiable for any team. It enables state locking, a crucial feature that prevents multiple users from running commands like terraform apply at the same time. This locking mechanism stops "race conditions" that can corrupt the state file and leave your infrastructure in an inconsistent or broken condition.

Industry leaders rely on robust state management. Spotify famously uses AWS S3 with DynamoDB locking to manage Terraform state across hundreds of engineering teams, ensuring consistency and safety at scale. Similarly, Shopify leverages Terraform Cloud for its centralized state management, providing a collaborative and auditable platform for their global e-commerce infrastructure. These patterns, popularized by HashiCorp and major cloud providers, are essential for mature IaC workflows.

Key Insight: Treat your infrastructure state file as a critical, sensitive asset, equivalent to a production database. Its integrity is paramount to the stability and predictability of your entire environment.

Actionable Implementation Tips

To implement a secure and reliable state management strategy, follow these specific guidelines:

- Use Remote State Backends: Immediately configure a remote backend for your state files. Common choices include AWS S3 with a DynamoDB table for locking, Azure Blob Storage, or Google Cloud Storage. Never commit state files to your version control system.

- Enable State Locking: Always choose a backend that supports state locking. Most cloud storage backends provide it when configured correctly (e.g., pairing S3 with a DynamoDB table; recent Terraform releases can also lock natively in S3 through the backend's `use_lockfile` setting), and it is a core function of platforms like Terraform Cloud.

- Isolate State by Environment: Do not use a single, monolithic state file for all your infrastructure. Create separate state files for distinct environments (e.g., `dev`, `staging`, `prod`) and even for different application components. This reduces the blast radius of any potential errors.

- Implement Versioning and Backups: Enable versioning on your remote storage bucket (e.g., S3 Versioning). This allows you to roll back to a previous version of your state file in case of accidental corruption. Regularly test your state backup and recovery procedures.

- Encrypt State Data: Your state file can contain sensitive information. Ensure your remote backend is configured to encrypt data both at rest and in transit. This is a standard security control and a crucial part of a comprehensive IaC security posture.
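To show why locking prevents concurrent applies from corrupting state, here is a local analogy in Python. It is not how any Terraform backend implements locking; it only demonstrates the underlying idea of an atomic "create if absent" operation. The lock file name and the `alice`/`bob` owners are hypothetical.

```python
import os
import tempfile
from contextlib import contextmanager


class StateLockedError(RuntimeError):
    """Raised when another run already holds the lock."""


@contextmanager
def state_lock(lock_path: str, owner: str):
    try:
        # O_CREAT | O_EXCL is atomic: it fails if the lock file already exists.
        fd = os.open(lock_path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        raise StateLockedError(f"state is locked: {lock_path}") from None
    with os.fdopen(fd, "w") as handle:
        handle.write(owner)  # record who holds the lock, for diagnostics
    try:
        yield
    finally:
        os.remove(lock_path)  # always release, even if the apply fails


def demo() -> list:
    events = []
    with tempfile.TemporaryDirectory() as workdir:
        lock_path = os.path.join(workdir, "terraform.tfstate.lock")
        with state_lock(lock_path, "alice"):
            events.append("alice acquired")
            try:
                with state_lock(lock_path, "bob"):
                    events.append("bob acquired")
            except StateLockedError:
                events.append("bob rejected")
        with state_lock(lock_path, "bob"):
            events.append("bob acquired after release")
    return events
```

The second caller is refused while the first holds the lock and succeeds once it is released, which is the behavior a remote backend's locking gives a whole team.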

4. Environment Consistency and Isolation

One of the most critical infrastructure as code best practices is to maintain identical configurations across different environments while ensuring they are properly isolated. This principle, often called "environment parity," dictates that your development, staging, and production environments should be as similar as possible. By using the same IaC definitions to provision each environment, you drastically reduce the risk of unexpected failures when promoting code from one stage to the next.

This approach ensures that what works in staging will reliably work in production, eliminating the infrastructure version of the infamous "it worked on my machine" problem. Consistency removes environmental differences as a source of deployment-related bugs, allowing teams to test and validate changes with high confidence. Proper isolation, typically achieved through separate cloud accounts or network boundaries, prevents actions in lower environments from impacting production.

Why This Practice is Essential

Achieving environment parity is fundamental to building a reliable CI/CD pipeline. It allows for accurate testing of infrastructure and application changes in a production-like setting before they reach customers. This practice prevents configuration drift, where subtle differences between environments accumulate over time, leading to unpredictable behavior and hard-to-diagnose outages.

Companies like Uber and Slack are prime examples of this principle in action. Uber maintains identical Kubernetes cluster configurations across its dev, staging, and production environments using Terraform, ensuring application behavior is predictable. Similarly, Slack uses Pulumi with environment-specific parameter files to manage its AWS infrastructure, guaranteeing that each environment is a faithful replica of the others, just with different scale and security parameters.

Key Insight: The goal is not to have identical data or scale but identical configuration. Your staging environment doesn't need the same traffic as production, but it must have the same networking rules, service permissions, and software versions.
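One way to picture "identical configuration, different scale" is a shared base definition plus per-environment overrides that may only touch scale-related keys. The sketch below uses hypothetical keys and values; in a Terraform project the same role is usually played by variable files.

```python
from typing import Any, Dict

# Shared definition: every environment gets the same rules and versions.
BASE: Dict[str, Any] = {
    "allowed_ingress_ports": [443],
    "engine_version": "15",
    "instance_type": "t3.small",
    "instance_count": 1,
}

# Assumption for this sketch: only scale may differ between environments.
SCALE_KEYS = {"instance_type", "instance_count"}

ENV_OVERRIDES: Dict[str, Dict[str, Any]] = {
    "staging": {"instance_count": 2},
    "prod": {"instance_type": "m5.large", "instance_count": 6},
}


def render(env: str) -> Dict[str, Any]:
    """Merge the base with an environment's overrides, rejecting non-scale keys."""
    overrides = ENV_OVERRIDES[env]
    illegal = set(overrides) - SCALE_KEYS
    if illegal:
        raise ValueError(f"{env} overrides non-scale keys: {sorted(illegal)}")
    return {**BASE, **overrides, "environment": env}
```

Rejecting overrides outside `SCALE_KEYS` is a design choice that keeps networking rules and software versions from diverging silently between staging and production.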

Actionable Implementation Tips

To successfully achieve consistency and isolation across your environments, implement the following strategies:

- Use Environment-Specific Variable Files: Abstract environment-specific values (like instance sizes, domain names, or account IDs) into separate configuration files (e.g., `prod.tfvars`, `staging.tfvars`). Your core IaC modules should remain generic and reference these variables, ensuring the underlying infrastructure definition is identical.

- Implement Automated Environment Refresh: Periodically tear down and rebuild non-production environments from scratch using your IaC code. This practice, often called "phoenix environments," prevents configuration drift and validates that your infrastructure can be fully recovered automatically.

- Establish Clear Naming Conventions: Adopt a strict resource naming convention that includes an environment identifier (e.g., `prod-app-db`, `stg-app-db`). This makes resources easily identifiable and prevents accidental changes to the wrong environment.

- Use Infrastructure Templates with Overlays: Employ a templating or overlay tool (like Kustomize for Kubernetes) that allows you to define a common base configuration and then apply small, environment-specific patches or modifications. This keeps the core logic consistent while allowing for necessary differences.

- Implement Monitoring for Configuration Drift: Use tools like AWS Config or third-party solutions to continuously monitor your environments and alert you when a manually applied change causes a deviation from the IaC-defined state.
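Drift monitoring boils down to comparing what the code declares with what actually exists. The following sketch shows that comparison on plain dictionaries; the attribute names are hypothetical, and a real tool would obtain the "actual" side from the cloud provider's API.

```python
from typing import Any, Dict, Tuple


def detect_drift(
    desired: Dict[str, Any], actual: Dict[str, Any]
) -> Dict[str, Tuple[Any, Any]]:
    """Return {attribute: (desired_value, actual_value)} for every mismatch.

    Attributes present on only one side are reported with None on the other.
    """
    drift = {}
    for key in sorted(set(desired) | set(actual)):
        want, have = desired.get(key), actual.get(key)
        if want != have:
            drift[key] = (want, have)
    return drift
```

An empty result means the live resource still matches its definition; anything else is a candidate for an alert or for re-applying the code.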

By embracing environment parity, you build a foundation for safer, faster, and more reliable deployments. For more insights into creating effective pre-production environments, you can learn more about building a robust test environment strategy.

5. Automated Testing and Validation

Just as application code requires rigorous testing, so does your infrastructure code. Automated testing and validation is a critical practice that involves implementing a comprehensive suite of tests to ensure infrastructure changes are safe, compliant, and function as expected before they reach production. This moves beyond basic syntax checks to include security scanning, policy compliance, and functional integration tests.

This best practice shifts quality assurance left, catching potential issues early in the development lifecycle. By integrating automated validation into your CI/CD pipeline, you build a safety net that prevents misconfigurations, security vulnerabilities, and policy violations from being deployed. This transforms infrastructure changes from a high-risk activity into a reliable, predictable process.

Why This Practice is Essential

Automated testing provides confidence that your infrastructure code will behave as intended. It validates that a change to a network security group won't inadvertently expose a sensitive database or that a new VM configuration complies with corporate security standards. Without this, teams are left with manual reviews and risky "apply and see" deployment strategies.

Leading organizations build their reliability on this principle. Atlassian famously uses Terratest to write and run comprehensive integration tests for its Terraform modules in Go. Similarly, financial institutions like Goldman Sachs leverage policy-as-code frameworks like Open Policy Agent (OPA) to enforce strict regulatory and security compliance checks automatically within their pipelines.

Key Insight: Untested infrastructure code is a production incident waiting to happen. Automated validation is the only scalable way to ensure the safety, security, and compliance of your infrastructure as it evolves.

Actionable Implementation Tips

To effectively implement automated testing for your infrastructure as code, follow these specific strategies:

- Adopt the Testing Pyramid: Start with a broad base of fast, simple tests and build up to more complex ones. Begin with static analysis (linting, format checks), add unit/contract tests, and layer in a smaller number of comprehensive integration and end-to-end tests.

- Implement Policy as Code (PaC): Use tools like Open Policy Agent (OPA), HashiCorp Sentinel, or native cloud services like AWS Config to define and enforce rules. This allows you to check for things like mandatory tags, forbidden instance types, or overly permissive IAM policies automatically.

- Leverage IaC Testing Frameworks: Don't reinvent the wheel. Use established frameworks designed for IaC testing. Terratest is excellent for Go-based integration testing of Terraform, while Kitchen-Terraform and Pulumi's built-in testing framework offer robust alternatives.

- Create Ephemeral Test Environments: Configure your CI/CD pipeline to spin up a temporary, isolated environment to run your tests. The pipeline should apply the infrastructure change, run validation checks, and then automatically tear down the environment, ensuring a clean state for every test run.

- Integrate Security Scanning: Embed security tools like `tfsec`, `checkov`, or `terrascan` directly into your pre-commit hooks and CI pipeline. These tools scan your code for common security misconfigurations and vulnerabilities before they are ever deployed.
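As an illustration of the policy-as-code idea, the sketch below checks a plan for resources that are created or updated without mandatory tags. It is a simplified stand-in for tools like OPA or Sentinel, not a replacement. The input shape is modeled on the `resource_changes` list in Terraform's JSON plan output, the required tag names are assumptions, and resource types that do not support tags would need to be excluded in practice.

```python
from typing import Any, Dict, List

# Assumed organizational policy for this sketch.
REQUIRED_TAGS = {"owner", "environment"}


def missing_tag_violations(plan: Dict[str, Any]) -> List[str]:
    """List resources being created or updated that lack a required tag."""
    violations = []
    for rc in plan.get("resource_changes", []):
        change = rc.get("change", {})
        actions = set(change.get("actions", []))
        if not actions & {"create", "update"}:
            continue  # deletions and no-ops have nothing to validate
        after = change.get("after") or {}
        tags = after.get("tags") or {}
        missing = REQUIRED_TAGS - set(tags)
        if missing:
            violations.append(f"{rc['address']}: missing tags {sorted(missing)}")
    return violations
```

In a pipeline, a non-empty result would fail the job before `apply` runs, turning the policy into an automated quality gate.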

By integrating these validation steps, you ensure that every infrastructure change is thoroughly vetted, reducing risk and increasing deployment velocity. For a more in-depth look at building a robust testing strategy, you can explore this guide to automated testing best practices.

6. Secrets and Sensitive Data Management

A critical component of any secure infrastructure as code practice is how you handle secrets and sensitive data. This means implementing a robust strategy for managing passwords, API keys, TLS certificates, and other confidential information. Hardcoding these values directly into your IaC files is a severe security vulnerability that exposes your entire infrastructure to risk if the code is ever leaked or accessed by unauthorized users.

Effective secrets management involves externalizing sensitive data from your codebase and injecting it securely at runtime. Instead of storing a database password in a .tf or .yaml file, you store a reference to a secret stored in a dedicated, encrypted, and access-controlled system. This decouples the sensitive data from the infrastructure logic, making your code safer to share and store in version control.

Why This Practice is Essential

Failing to properly manage secrets is one of the most common and damaging security mistakes in modern infrastructure. Committing a single API key to a public Git repository can lead to immediate and widespread compromise. Using a dedicated secrets management tool provides a centralized, auditable, and secure location for all credentials, enforcing strict access controls and enabling automated rotation.

Tech leaders have built entire platforms around this principle. Netflix heavily integrates AWS Secrets Manager with its Spinnaker and Terraform pipelines to dynamically inject credentials into applications and infrastructure at deployment. Similarly, organizations using Kubernetes often leverage solutions like the External Secrets Operator to sync secrets from external stores like HashiCorp Vault or Google Secret Manager into their clusters as native Kubernetes Secret objects. The external store stays the source of truth, so secret values never need to be written into manifests or committed to Git.

Key Insight: Treat secrets as dynamic, ephemeral data that is injected at runtime, not as static configuration that is committed to version control. Your infrastructure code should define that a secret is needed, but never what the secret is.
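A small sketch of that separation: the code below declares that a database password is required and reads it from the process environment at runtime, failing loudly if the pipeline did not inject it. The variable name `DB_PASSWORD`, the host `db.internal`, and the helper names are hypothetical.

```python
import os
from typing import Mapping, Optional


class MissingSecretError(RuntimeError):
    """Raised when a required secret was not injected at runtime."""


def require_secret(name: str, environ: Optional[Mapping[str, str]] = None) -> str:
    """Fetch a secret by name; the value itself never appears in the codebase."""
    env = os.environ if environ is None else environ
    value = env.get(name, "")
    if not value:
        # The error names the secret but never echoes a value.
        raise MissingSecretError(f"secret {name!r} was not injected")
    return value


def database_url(environ: Optional[Mapping[str, str]] = None) -> str:
    password = require_secret("DB_PASSWORD", environ)
    return f"postgresql://app:{password}@db.internal:5432/app"
```

The optional `environ` parameter exists only so the function can be exercised in tests without touching the real environment.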

Actionable Implementation Tips

To build a secure secrets management workflow for your infrastructure, implement the following strategies:

- Never Commit Secrets to Version Control: This is the golden rule. Use tools like `git-secrets` or pre-commit hooks to scan for and block commits that contain anything resembling a secret. Even in private repositories, it is a dangerous practice.

- Utilize a Dedicated Secret Manager: Adopt a purpose-built tool like HashiCorp Vault, AWS Secrets Manager, Azure Key Vault, or Google Secret Manager. These services provide encryption at rest and in transit, fine-grained access policies, and detailed audit logs.

- Inject Secrets at Runtime: Configure your CI/CD pipeline to authenticate with the secret manager and fetch the necessary credentials during the deployment process. These can then be passed into your IaC tool as environment variables or injected directly into the target environment.

- Implement Secret Rotation: Your secrets management tool should be configured to automatically rotate credentials on a regular schedule. This limits the lifespan of any single secret, drastically reducing the window of opportunity for an attacker if a key is ever compromised.

- Enforce Least Privilege Access: Configure IAM policies in your secrets manager to ensure that a given service, application, or pipeline only has access to the specific secrets it absolutely needs to function. Regularly audit these permissions.
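The "never commit secrets" rule is usually enforced by pattern scanning. Here is a deliberately small sketch of such a scanner. The three patterns are illustrative assumptions and far from exhaustive, so a maintained tool remains the better choice for real repositories.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; real scanners ship many more.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    # A quoted literal assigned to "password"; interpolations ($) are skipped.
    "hardcoded_password": re.compile(r'(?i)\bpassword\s*=\s*"[^"$]+"'),
}


def find_secrets(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, pattern_name) for every suspicious line."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings
```

A pre-commit hook could run this over staged files and block the commit whenever the result is non-empty. A reference such as `password = var.db_password` is not flagged, because it names a variable instead of embedding a value.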

7. Immutable Infrastructure Patterns

A pivotal shift in modern cloud operations is the adoption of immutable infrastructure patterns. This approach mandates that once an infrastructure component like a server or container is deployed, it is never modified. To make a change, whether for an update, patch, or configuration adjustment, you replace the existing component with a new, updated instance built from a revised definition.

This practice fundamentally eliminates configuration drift, a common problem where manual, ad-hoc changes to live servers lead to inconsistencies and unpredictable behavior. By treating infrastructure components as disposable and replaceable artifacts, you create a more reliable, predictable, and easily managed environment. Every deployment starts from a known, version-controlled state, ensuring consistency across all environments, from development to production.

Why This Practice is Essential

Adopting immutability dramatically simplifies rollbacks and enhances system reliability. If a new deployment introduces a bug, the recovery process isn't a frantic effort to undo changes on a live server. Instead, you simply deploy the previous, known-good version of the infrastructure, a process that is often faster and far less risky. This is a core tenet of effective infrastructure as code best practices.

Pioneers like Netflix and Spotify have built their highly resilient global platforms on this principle. Netflix's "bake, don't fry" philosophy involves creating fully configured Amazon Machine Images (AMIs) that are deployed into Auto Scaling Groups. Spotify leverages immutable Docker containers orchestrated by Kubernetes, ensuring every service instance is identical and disposable. This approach moves the complexity from runtime configuration management to the build and deployment pipeline, where it can be tested and automated.

Key Insight: Immutability transforms infrastructure management from a process of state repair to a process of state replacement. This shift dramatically reduces complexity and enhances the predictability of your deployments.

Actionable Implementation Tips

To effectively implement immutable infrastructure patterns, integrate these strategies into your workflow:

- Utilize Image Building Tools: Employ tools like HashiCorp Packer, Docker, or native cloud services (e.g., AWS EC2 Image Builder) to create "golden images." These pre-configured machine or container images contain the operating system, dependencies, and application code, ensuring every instance is identical.

- Implement Blue-Green or Canary Deployments: Use deployment strategies that allow you to stand up new infrastructure alongside the old. A blue-green deployment directs traffic to the new "green" environment only after it passes all health checks, allowing for instant, zero-downtime rollbacks by simply redirecting traffic back to the "blue" environment.

- Design for Statelessness: Architect your applications to be as stateless as possible. Any persistent state, such as user sessions, databases, or file uploads, should be offloaded to external managed services like Amazon RDS, S3, or ElastiCache. This allows compute instances to be terminated and replaced without data loss.

- Automate Health Checks and Rollbacks: Your CI/CD pipeline must include robust, automated health checks that validate a new deployment before it receives production traffic. If these checks fail, the pipeline should be configured to automatically trigger a rollback to the previous stable version.

- Tag and Version Your Artifacts: Every immutable artifact (AMI, Docker image, etc.) must be tagged with a unique, traceable version identifier, such as a Git commit hash or a semantic version number. This makes it easy to identify exactly what is running in production and to select a specific version for rollback.
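The blue-green flow described in these tips can be sketched as a tiny state machine. The `Router` class, the version strings, and the `healthy` callback are hypothetical stand-ins for a load balancer, artifact tags, and a pipeline's health checks.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Router:
    """Tracks which environment ("blue" or "green") receives production traffic."""
    live: str = "blue"
    versions: Dict[str, str] = field(
        default_factory=lambda: {"blue": "v1", "green": ""}
    )

    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"


def deploy(router: Router, version: str, healthy: Callable[[str], bool]) -> bool:
    """Build the new version on the idle side; cut over only if it is healthy."""
    target = router.idle()
    router.versions[target] = version  # new stack stands up beside the live one
    if not healthy(version):
        return False                   # traffic never moved, nothing to undo
    router.live = target               # cutover: a single pointer change
    return True


def rollback(router: Router) -> None:
    """Point traffic back at the previous environment, which is still intact."""
    router.live = router.idle()
```

Because the old environment is replaced, not modified, rollback is the same cheap pointer change as the cutover.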

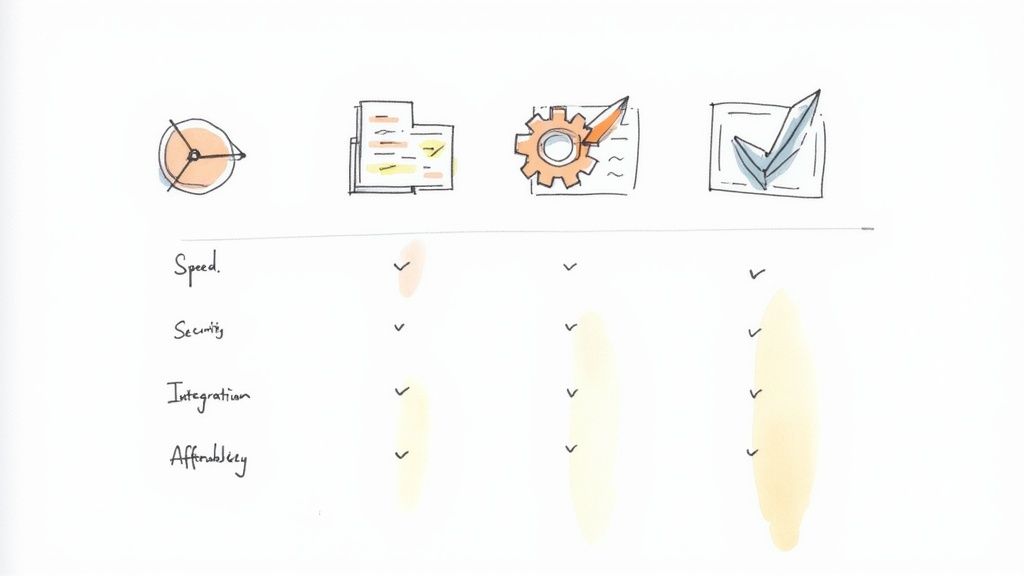

7 Best Practices Comparison Matrix

| Practice | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Version Control Everything | Medium - requires disciplined commits and workflow setup | Low to Medium - primarily software tools | Full traceability, auditability, rollback capability | Any organization needing reliable infrastructure change tracking and compliance | Strong audit trail, disaster recovery, collaboration |
| Modularize and Reuse Code | Medium to High - designing interfaces and managing versions | Medium - module registry and testing infrastructure | Reduced duplication, consistent infrastructure, easier maintenance | Teams building large, reusable infrastructure components | Improved reusability, maintainability, and consistency |
| Implement Proper State Management | Medium to High - setup remote state storage and locking | Medium - additional storage and infrastructure | Prevents state corruption, enables collaboration without conflicts | Teams managing shared infrastructure with multiple contributors | Centralized state, conflict prevention, disaster recovery |
| Environment Consistency and Isolation | Medium to High - managing multiple environments and configurations | High - duplicate environments increase costs | Reduced deployment risk, accurate testing, environment parity | Organizations with dev/staging/prod separation and strict testing | Deployment reliability, easier debugging, automated promotions |
| Automated Testing and Validation | High - requires setup of testing frameworks and policies | Medium to High - test infrastructure and tooling | Early error detection, compliance enforcement, reduced failures | Organizations emphasizing quality, security, and compliance | Improved code quality, security enforcement, reliable deployments |
| Secrets and Sensitive Data Management | High - integrating secret management systems and policies | Medium to High - secret management services | Secure handling of sensitive data, compliance adherence | Teams handling sensitive credentials and compliance requirements | Prevents leaks, enables rotation, centralized secret control |
| Immutable Infrastructure Patterns | High - requires redesigning deployment and rollback approaches | High - resource duplication during deployments | Eliminates drift, predictable deployments, simplified rollbacks | High-availability systems needing stability and zero-downtime deploys | Consistency, reliability, simplified debugging |

Building Your Future-Proof IaC Strategy

Navigating the landscape of modern infrastructure management requires more than just adopting a new tool; it demands a fundamental shift in mindset and methodology. Throughout this guide, we've explored the core pillars that support a robust, scalable, and secure system. These aren't just isolated tips; they are interconnected principles that form the foundation of a truly effective infrastructure as code (IaC) strategy. By embracing these infrastructure as code best practices, you empower your team to move beyond reactive firefighting and into a realm of proactive, strategic engineering.

The journey begins with treating your infrastructure with the same discipline you apply to application code. Versioning everything in Git provides an auditable history and a single source of truth, which makes configuration drift detectable and enables confident rollbacks. This foundational practice directly supports the shift towards immutable infrastructure, where servers are replaced, not patched, ensuring consistency and predictability across every environment.

From Principles to Production-Ready Pipelines

Adopting these practices is not an overnight switch but a continuous journey of refinement. The key is to start small and build momentum.

- Modularization: Breaking down monolithic configurations into reusable, composable modules is your first step toward efficiency and scalability. It reduces duplication, simplifies maintenance, and allows teams to build complex systems from standardized, pre-approved components.

- State Management: Proper state management is the bedrock of reliable IaC. Whether you use remote backends with state locking or more advanced techniques, protecting your state file prevents corruption and ensures that your team can collaborate on infrastructure changes without conflict.

- Automated Validation: The real power of IaC is unlocked when you integrate automated testing and validation into your CI/CD pipelines. Static analysis, unit tests, and integration tests catch errors long before they reach production, turning your pipeline into a powerful quality gate that enforces compliance and security policies automatically.

- Security by Design: Managing secrets and sensitive data must be a primary concern, not an afterthought. Integrating tools like HashiCorp Vault or cloud-native secret managers ensures that credentials are never hardcoded, access is tightly controlled, and your infrastructure remains secure by default.

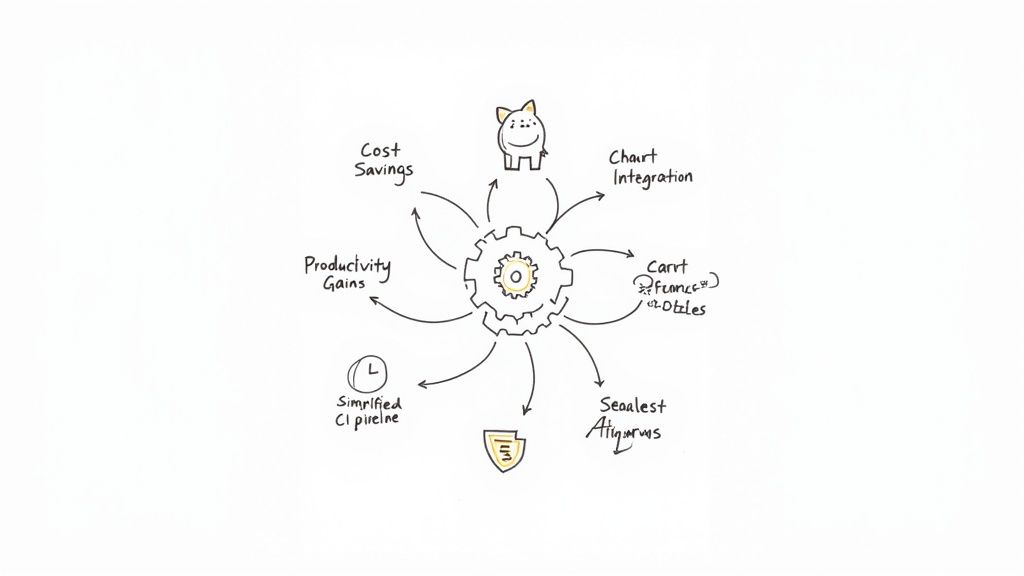

The True Value of Mastering IaC

Mastering these infrastructure as code best practices delivers a powerful competitive advantage. It translates directly into faster deployment cycles, reduced operational overhead, and significantly improved system reliability. When your infrastructure is defined as code, it becomes testable, repeatable, and self-documenting. This transparency fosters better collaboration between development, operations, and security teams, breaking down silos and creating a unified engineering culture.

Your IaC repository becomes a living blueprint of your entire digital estate, enabling you to replicate environments, perform disaster recovery, and scale resources on demand with unparalleled speed and confidence. The result is a resilient, agile system that can adapt to changing business needs without compromising on stability or security. The ultimate goal is to create a workflow where infrastructure changes are routine, predictable, and low-risk events, freeing your engineers to focus on innovation and delivering value to your customers.

Ready to automate the enforcement of these best practices directly within your pull request workflow? Mergify empowers you to build sophisticated, automated merge queues and CI/CD pipelines that ensure every IaC change is validated, secure, and compliant before it ever reaches your main branch. Stop manually checking and start building a truly automated, future-proof infrastructure workflow by visiting Mergify today.