How to Write Test Cases: A Field Guide to Modern Software Testing Excellence

Getting Started with Test Case Fundamentals

Creating good test cases is essential for any software testing effort. A well-written test case tells the whole team what to test, how to test it, and how to decide whether it passed or failed. The key is breaking software requirements down into clear, actionable test steps. Let's explore the building blocks that make test cases effective and easy to maintain.

Essential Elements of a Test Case

Just as a recipe needs specific ingredients and steps, a test case needs certain key components to work properly. Every test case should include these essential elements:

- Test Case ID: A unique identifier that makes it easy to find and reference specific tests, similar to how a library catalog number helps locate books

- Test Case Name: A brief description that clearly states what feature or scenario is being tested

- Pre-Conditions: The required system state before testing begins - for example, having a registered user account before testing login functionality

- Test Steps: Step-by-step instructions that anyone can follow, regardless of technical knowledge

- Test Data: The actual values used during testing, such as specific usernames, passwords or other inputs

- Expected Results: What should happen if the software works correctly - without this, you can't determine pass/fail

- Actual Results: What actually happened when following the test steps

- Status: Whether the test passed, failed or was blocked, which helps track progress and identify problems
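If you keep test cases in code rather than in a test management tool, the elements above map naturally onto a simple record type. The sketch below assumes that setup; the `TestCaseRecord` and `Status` names are illustrative, not a standard schema.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    NOT_RUN = "not run"
    PASSED = "passed"
    FAILED = "failed"
    BLOCKED = "blocked"


@dataclass
class TestCaseRecord:
    """One test case with the essential elements as fields (illustrative schema)."""
    case_id: str                         # unique identifier, e.g. "TC_Search_001"
    name: str                            # feature or scenario under test
    preconditions: list[str]             # required system state before testing
    steps: list[str]                     # ordered instructions anyone can follow
    test_data: dict[str, str]            # concrete input values
    expected_result: str                 # defines what "pass" means
    actual_result: Optional[str] = None  # filled in after execution
    status: Status = Status.NOT_RUN

    def record_run(self, actual: str, passed: bool) -> None:
        """Store what actually happened and mark the case passed or failed."""
        self.actual_result = actual
        self.status = Status.PASSED if passed else Status.FAILED


# Hypothetical example record for a login test.
login = TestCaseRecord(
    case_id="TC_Login_001",
    name="Login with valid credentials",
    preconditions=["A registered user account exists"],
    steps=["Open the login page", "Enter username and password", "Click 'Log in'"],
    test_data={"username": "demo_user", "password": "correct-horse"},
    expected_result="User lands on the account dashboard",
)
login.record_run("User lands on the account dashboard", passed=True)
```

Keeping `expected_result` mandatory (no default) mirrors the point above: without it, there is no way to decide pass or fail.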

Practical Example: Testing a Search Function

Here's an example test case for a website's product search feature, showing how the different elements work together:

| Test Case ID | TC_Search_001 |
|---|---|
| Name | Verify Search Functionality with Valid Keyword |
| Pre-Condition | User is on the website homepage |
| Steps | 1. Enter "Laptop" in the search bar. 2. Click the search button. |
| Test Data | "Laptop" |
| Expected Result | Search results page displays a list of laptops |
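The same case can also be expressed as an automated check. This is a sketch only: `CATALOG` and `search_products` are hypothetical stand-ins for the real search backend, and a real test would drive the UI or call the site's search API instead.

```python
# Hypothetical catalog and search function standing in for the real site.
CATALOG = [
    {"name": "Laptop Pro 14", "category": "laptops"},
    {"name": "Gaming Laptop 17", "category": "laptops"},
    {"name": "Wireless Mouse", "category": "accessories"},
]


def search_products(keyword: str) -> list[dict]:
    """Return catalog items whose name contains the keyword (case-insensitive)."""
    needle = keyword.strip().lower()
    return [item for item in CATALOG if needle in item["name"].lower()]


def test_tc_search_001_valid_keyword():
    """TC_Search_001: Verify search functionality with a valid keyword."""
    # Pre-condition: catalog is available (stands in for "user is on the homepage").
    assert CATALOG
    # Steps 1 and 2: enter "Laptop" and submit the search.
    results = search_products("Laptop")
    # Expected result: a non-empty list containing only laptops.
    assert results, "expected at least one result"
    assert all(item["category"] == "laptops" for item in results)
```

The test name and docstring carry the test case ID, so a failure report points straight back to the documented case.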

This structured approach to test cases becomes especially important as more companies move toward test automation. Recent data shows over 24% of companies now automate at least half their test cases. The trend toward "shift-left" testing, where testing starts earlier in development, also makes well-documented test cases critical. Having clear, detailed test cases helps teams catch issues sooner and manage both manual and automated testing more effectively.

Crafting Test Cases That Actually Work

While understanding test case basics is important, creating truly effective test cases requires careful thought and strategy. The key is going beyond basic templates to design tests that can find real issues. Let's explore how to write test cases that uncover bugs before they reach production.

Identifying Edge Cases and Subtle Issues

Take a standard login form as an example. Good test cases look beyond the obvious valid username/password combinations. What happens when someone enters an unusually long password? Or uses emojis in their username? These edge cases often reveal hidden problems. The testing scope should also consider ripple effects - does a failed login impact session timeouts or security logs? By thinking through these scenarios carefully, you can catch issues that basic testing might miss.
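A sketch of edge-case checks for the login example. `validate_login_input` is a hypothetical validator, and the 64-character password limit is an illustrative assumption; take real limits from your requirements. Testing at the limit and one past it is boundary value analysis. Whether emoji usernames should be rejected is a product decision; this sketch assumes they are.

```python
MAX_PASSWORD_LENGTH = 64  # illustrative limit, not from any real spec


def validate_login_input(username: str, password: str) -> list[str]:
    """Hypothetical validator: return error messages (empty list means valid)."""
    errors = []
    if not username.strip():
        errors.append("username is required")
    elif not username.isascii():
        errors.append("username must be ASCII")
    if not password:
        errors.append("password is required")
    elif len(password) > MAX_PASSWORD_LENGTH:
        errors.append("password too long")
    return errors


# Each row: (description, username, password, expected number of errors).
EDGE_CASES = [
    ("typical valid input", "alice", "s3cret!", 0),
    ("password exactly at the limit", "alice", "x" * MAX_PASSWORD_LENGTH, 0),
    ("password one over the limit", "alice", "x" * (MAX_PASSWORD_LENGTH + 1), 1),
    ("emoji in username", "alice\U0001F600", "s3cret!", 1),
    ("whitespace-only username", "   ", "s3cret!", 1),
    ("both fields empty", "", "", 2),
]


def test_login_edge_cases():
    for description, username, password, expected_errors in EDGE_CASES:
        errors = validate_login_input(username, password)
        assert len(errors) == expected_errors, f"{description}: got {errors}"
```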

Comprehensive Test Scenarios and Thorough Steps

Testing complex features like an e-commerce checkout requires understanding how real users interact with the system. Beyond basic order completion, you need to verify different payment types, shipping calculators, promo codes, and error handling for issues like declined cards or invalid addresses. For each scenario, write steps clear enough that anyone on the team can follow them exactly and get the same result every time.
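To see how quickly checkout scenarios multiply, the sketch below enumerates every combination with `itertools.product`. The dimensions and their values are illustrative assumptions, not a real store's options.

```python
from itertools import product

# Illustrative checkout dimensions; real values come from the requirements.
PAYMENT_TYPES = ["credit_card", "paypal", "gift_card"]
SHIPPING_OPTIONS = ["standard", "express", "pickup"]
PROMO_CODES = [None, "VALID10", "EXPIRED5"]
PAYMENT_OUTCOMES = ["approved", "declined"]


def checkout_scenarios():
    """Yield one scenario dict per combination of the dimensions above."""
    for payment, shipping, promo, outcome in product(
        PAYMENT_TYPES, SHIPPING_OPTIONS, PROMO_CODES, PAYMENT_OUTCOMES
    ):
        yield {"payment": payment, "shipping": shipping, "promo": promo, "outcome": outcome}


scenarios = list(checkout_scenarios())
print(len(scenarios))  # 3 * 3 * 3 * 2 = 54
```

Four small dimensions already yield 54 combinations, and each new option multiplies the total, which is why prioritizing matters more than exhaustive coverage.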

Balancing Coverage With Efficiency

As software gets more complex, the number of possible test cases grows quickly, and testing every possible scenario usually isn't practical. A better approach is to focus on the most important user workflows and highest-risk areas first. For a banking app, security-related tests deserve more attention than cosmetic features. This targeted strategy helps find critical bugs while keeping the test suite manageable. As more companies automate larger portions of their testing, it becomes even more important to write clear, repeatable test cases that automation tools can execute reliably.

Building Your Automation Strategy

A well-planned automation strategy is essential for successful software testing. Getting it right means carefully choosing which tests to automate and organizing them in a way that makes sense long-term. The key is incorporating automation considerations from the start when designing test cases.

Identifying Automation Candidates

Some tests suit automation better than others. Manual testing still makes sense for tests involving complex user interactions or visual checks. But tests that run frequently, use lots of data, or follow the same steps every time are strong automation candidates. Take regression testing, for example: these tests check whether new code changes broke existing features, which makes them ideal automation targets. By spotting good automation opportunities early in development, you can build your automated suite progressively rather than trying to automate everything at once later.
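The criteria above (run frequency, data volume, need for human judgment) can be turned into a rough screening heuristic. This is a sketch only: the `CandidateTest` fields and the thresholds are assumptions to adapt, and the output is a shortlist for a person to confirm, not a decision.

```python
from dataclasses import dataclass


@dataclass
class CandidateTest:
    name: str
    runs_per_month: int          # how often the test is executed
    data_variations: int         # how many input data sets it covers
    needs_visual_judgment: bool  # requires a human to judge look and feel


def is_automation_candidate(test: CandidateTest,
                            min_runs: int = 4,
                            min_data_sets: int = 5) -> bool:
    """Flag frequently run or data-heavy tests, unless they need human judgment."""
    if test.needs_visual_judgment:
        return False  # keep visual and usability checks manual
    return test.runs_per_month >= min_runs or test.data_variations >= min_data_sets


# Hypothetical inventory of existing manual tests.
tests = [
    CandidateTest("Regression: login", runs_per_month=20, data_variations=3, needs_visual_judgment=False),
    CandidateTest("Checkout layout review", runs_per_month=8, data_variations=1, needs_visual_judgment=True),
    CandidateTest("Annual tax report export", runs_per_month=0, data_variations=2, needs_visual_judgment=False),
]
shortlist = [t.name for t in tests if is_automation_candidate(t)]
print(shortlist)  # ['Regression: login']
```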

Writing Automation-Friendly Test Cases

Writing test cases for automation requires a different approach than manual test cases. Good automated tests are simple, focused, and work independently. Each test should check one specific thing and not depend on other tests running first. This makes it much easier to find and fix issues when tests fail. Test data management also needs careful thought. Instead of hardcoding data directly in tests, store it separately and feed it into the tests. This lets you easily test different scenarios by changing the input data, improving both coverage and efficiency.
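A minimal sketch of the "simple, focused, independent" rule using Python's standard `unittest`: `setUp` builds a fresh object before every test, so no test relies on state left behind by another, and each test checks one behavior. `ShoppingCart` is a hypothetical stand-in for the code under test.

```python
import unittest


class ShoppingCart:
    """Hypothetical system under test."""

    def __init__(self):
        self.items: dict[str, int] = {}

    def add(self, sku: str, quantity: int = 1) -> None:
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self.items[sku] = self.items.get(sku, 0) + quantity

    def remove(self, sku: str) -> None:
        self.items.pop(sku, None)

    def count(self) -> int:
        return sum(self.items.values())


class ShoppingCartTests(unittest.TestCase):
    def setUp(self):
        # A new cart for every test: no test depends on another having run first.
        self.cart = ShoppingCart()

    def test_add_increases_count(self):
        self.cart.add("SKU-1", 2)
        self.assertEqual(self.cart.count(), 2)

    def test_remove_deletes_item(self):
        self.cart.add("SKU-1")
        self.cart.remove("SKU-1")
        self.assertEqual(self.cart.count(), 0)

    def test_rejects_non_positive_quantity(self):
        with self.assertRaises(ValueError):
            self.cart.add("SKU-1", 0)
```

Saved as a module, this runs with `python -m unittest`. Because each test builds its own cart, the tests pass in any order, and a failure points to exactly one behavior.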

Structuring Test Cases for Long-Term Success

As your software grows, your test suite will too, and good organization becomes crucial for keeping tests manageable. Group related tests by what they check: for example, put all login tests in one group and profile management tests in another. Clear naming also helps; a name like "TC_Login_ValidCredentials" shows immediately what a test does. Brief comments explaining the test scenario and expected results save time when investigating problems later. As more teams automate large parts of their testing, these organizational practices are becoming standard.
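One way to apply this grouping and naming advice in a Python `unittest` module: one class per feature area, method names that mirror IDs like "TC_Login_ValidCredentials", and docstrings stating the scenario and expected result. `authenticate` and `update_display_name` are hypothetical stand-ins for the real system.

```python
import unittest

USERS = {"alice": "correct-password"}  # hypothetical user store


def authenticate(username: str, password: str) -> bool:
    """Hypothetical login check."""
    return USERS.get(username) == password


def update_display_name(profile: dict, new_name: str) -> dict:
    """Hypothetical profile update: rejects blank names, trims whitespace."""
    if not new_name.strip():
        raise ValueError("display name cannot be blank")
    return {**profile, "display_name": new_name.strip()}


class LoginTests(unittest.TestCase):
    """All login scenarios live together in this group."""

    def test_login_valid_credentials(self):
        """TC_Login_ValidCredentials: known user, correct password -> login succeeds."""
        self.assertTrue(authenticate("alice", "correct-password"))

    def test_login_wrong_password(self):
        """TC_Login_WrongPassword: known user, wrong password -> login rejected."""
        self.assertFalse(authenticate("alice", "wrong-password"))

    def test_login_unknown_user(self):
        """TC_Login_UnknownUser: unregistered username -> login rejected."""
        self.assertFalse(authenticate("mallory", "anything"))


class ProfileTests(unittest.TestCase):
    """Profile management scenarios form a separate group."""

    def test_profile_update_display_name(self):
        """TC_Profile_UpdateDisplayName: non-blank name -> saved without padding."""
        updated = update_display_name({"display_name": "Al"}, "  Alice ")
        self.assertEqual(updated["display_name"], "Alice")
```

With `python -m unittest -v`, the runner prints the first line of each docstring next to the test name, so the scenario description shows up directly in the report.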

Balancing Manual and Automated Testing

While automation brings huge benefits, it's not the answer for everything. Human judgment and intuition remain essential for certain types of testing. Exploratory testing helps uncover unexpected issues, usability testing needs an actual user's perspective, and complex interactions often require manual verification. The best approach combines automated and manual testing strategically, with each handling the tests it does best. This balanced strategy leads to more thorough and effective testing overall.

Maximizing Test Coverage Without the Bloat

Building strong test coverage requires a thoughtful, strategic approach. While it's tempting to create as many test cases as possible, this often leads to an unwieldy test suite that's difficult to maintain. The key is finding the right balance between comprehensive testing and practical maintainability. Let's explore how to create impactful test cases while avoiding unnecessary complexity.

Identifying Redundancies and Streamlining Your Suite

Test suites tend to grow organically over time, often accumulating duplicate tests that check similar functionality. For example, you might find multiple tests verifying the same user login flow with only minor input variations. This redundancy adds unnecessary complexity and slows down test execution. The solution? Regularly review your test cases to spot overlapping functionality. Look for tests that verify the same core behaviors and combine them into fewer, well-structured tests. This keeps your suite focused while maintaining solid coverage.
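Part of that review can be automated. The sketch below flags likely duplicates by normalizing each case's steps and expected result into a signature and grouping cases that share one. The dictionary format is an assumption. Because test data is left out of the signature, cases that run the same flow with different inputs land in the same group, which makes them candidates for merging into one data-driven test. A person still decides what to merge.

```python
from collections import defaultdict


def signature(case: dict) -> tuple:
    """Normalize steps and expected result so trivial wording differences don't hide duplicates."""
    def norm(text: str) -> str:
        return " ".join(text.lower().split())  # lowercase, collapse whitespace
    return (tuple(norm(step) for step in case["steps"]), norm(case["expected"]))


def find_duplicates(cases: list[dict]) -> list[list[str]]:
    """Return groups of test case IDs that share the same signature."""
    groups = defaultdict(list)
    for case in cases:
        groups[signature(case)].append(case["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


# Hypothetical excerpt of a test suite.
suite = [
    {"id": "TC_Login_001", "steps": ["Open login page", "Submit valid credentials"],
     "expected": "Dashboard is shown"},
    {"id": "TC_Login_014", "steps": ["open  login page", "Submit valid credentials"],
     "expected": "dashboard is shown"},
    {"id": "TC_Login_002", "steps": ["Open login page", "Submit wrong password"],
     "expected": "Error message is shown"},
]
print(find_duplicates(suite))  # [['TC_Login_001', 'TC_Login_014']]
```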

Prioritizing Critical Paths and Risk Assessment

Some features matter more than others to your users and business. Your testing strategy should reflect these priorities. Take an e-commerce site as an example - the checkout process demands more thorough testing than updating a user profile picture since it directly impacts revenue. Focus your testing efforts on these essential workflows first. Pay special attention to components that handle sensitive operations like security features, database interactions, or third-party integrations. These areas typically have a higher risk of issues and deserve extra testing attention.
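One common way to make these priorities explicit is a simple risk score: likelihood of failure multiplied by business impact, each rated on a small scale. The sketch uses illustrative 1 to 5 ratings; the areas and numbers are assumptions for the example, not measured data.

```python
from dataclasses import dataclass


@dataclass
class FeatureRisk:
    area: str
    likelihood: int  # 1 (rarely breaks) to 5 (complex or frequently changed)
    impact: int      # 1 (cosmetic) to 5 (revenue, security, or data loss)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


# Illustrative ratings for an e-commerce site.
areas = [
    FeatureRisk("Checkout and payment", likelihood=4, impact=5),
    FeatureRisk("Third-party shipping API", likelihood=4, impact=4),
    FeatureRisk("Login and session security", likelihood=3, impact=5),
    FeatureRisk("Profile picture upload", likelihood=2, impact=1),
]

# Highest risk first: spend test design effort in this order.
for risk in sorted(areas, key=lambda r: r.score, reverse=True):
    print(f"{risk.score:>2}  {risk.area}")
```

The ratings themselves are a team judgment; the value of the score is that it makes those judgments visible and easy to revisit.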

Data-Driven Approaches to Test Case Design

Data-driven testing helps you expand test coverage efficiently without creating tons of separate test cases. Instead of writing individual tests for each scenario, create a single test template that runs with different sets of test data. Consider a login form test - rather than writing separate tests for valid logins, invalid usernames, and wrong passwords, build one test that reads different credentials from a data source. This approach makes it easier to test edge cases and boundary conditions while keeping your test suite manageable.
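A minimal data-driven sketch of the login example using only the standard library: credentials come from CSV (inlined here as a string; in practice a separate file), and one test function runs every row. `authenticate` and the data are hypothetical. If you use pytest, `@pytest.mark.parametrize` serves the same purpose.

```python
import csv
import io

# In a real suite this would live in its own file (e.g. a hypothetical login_cases.csv).
LOGIN_CASES_CSV = """\
case,username,password,should_succeed
valid login,alice,correct-password,true
unknown username,bob,correct-password,false
wrong password,alice,wrong-password,false
empty password,alice,,false
"""

USERS = {"alice": "correct-password"}  # hypothetical user store


def authenticate(username: str, password: str) -> bool:
    """Hypothetical system under test."""
    return bool(password) and USERS.get(username) == password


def load_cases(text: str) -> list[dict]:
    """Parse CSV rows into dicts keyed by the header line."""
    return list(csv.DictReader(io.StringIO(text)))


def test_login_data_driven():
    """One test template, many data rows; the failure message names the row."""
    for row in load_cases(LOGIN_CASES_CSV):
        expected = row["should_succeed"] == "true"
        actual = authenticate(row["username"], row["password"])
        assert actual == expected, f"case '{row['case']}' failed"
```

Adding a new scenario, such as a username with trailing spaces, means adding a CSV row rather than writing another test function.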

Measuring and Analyzing Test Coverage Gaps

Finding gaps in your test coverage requires both tools and judgment. Code coverage tools show which parts of your code run during tests, but they don't tell the whole story about test quality. For instance, a test might execute a piece of code without properly checking if it works correctly. Regular reviews help ensure your tests actually verify the right behaviors. Keep your test suite current by updating tests when code changes and incorporating feedback from real user issues. This ongoing refinement process helps maintain an effective, efficient test suite that catches bugs without becoming bloated.
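To make the "executes but doesn't check" point concrete: both tests below run every line of the hypothetical `apply_discount`, so a line-coverage tool scores them the same, but only the second one would catch a bug. `buggy_apply_discount` is a deliberately broken variant used to show the difference.

```python
def apply_discount(price: float, percent: float) -> float:
    """Intended behavior: reduce price by `percent` percent."""
    return round(price * (1 - percent / 100), 2)


def buggy_apply_discount(price: float, percent: float) -> float:
    """Deliberate bug: subtracts the percent as a flat amount."""
    return round(price - percent, 2)


def weak_test(fn) -> None:
    fn(200.0, 10)  # executes the code (full line coverage) but asserts nothing


def strong_test(fn) -> None:
    assert fn(200.0, 10) == 180.0  # checks the actual result


def catches_bug(test) -> bool:
    """Return True if the test fails against the buggy implementation."""
    try:
        test(buggy_apply_discount)
    except AssertionError:
        return True
    return False


print(catches_bug(weak_test))    # False: covered, but the bug slips through
print(catches_bug(strong_test))  # True
```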

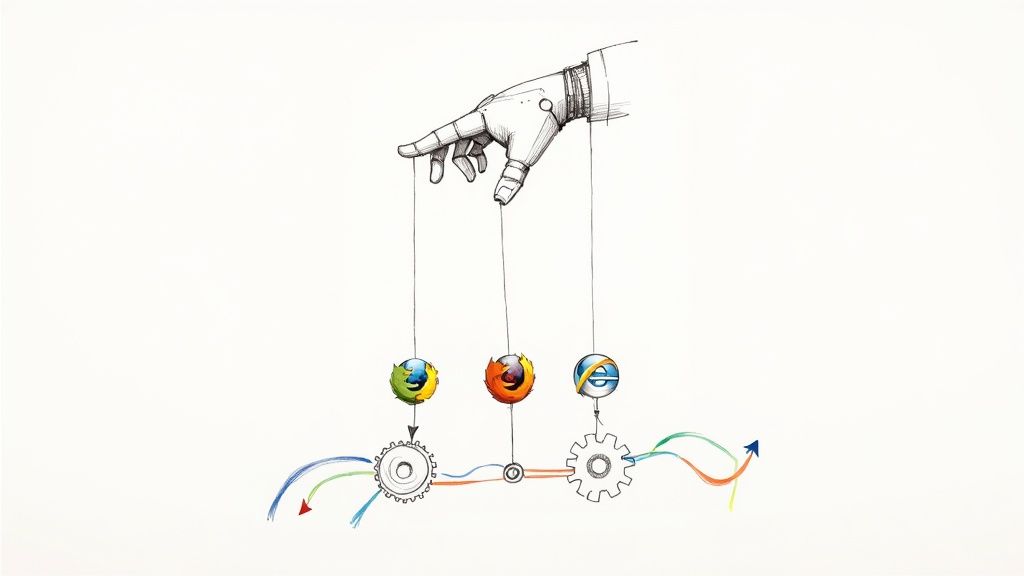

Integrating AI Tools Without Losing Control

Software testing teams increasingly use AI-powered tools, but they need a thoughtful approach to get real value. Adding AI tools without a clear strategy won't automatically improve your test cases. Let's explore how teams can use AI effectively while keeping human judgment and oversight at the forefront.

Choosing the Right AI Tools for Test Case Writing

Finding AI tools that match your team's specific needs is essential. Look at your current testing workflow and pinpoint areas where AI could help the most. For example, if generating test data takes up too much time, an AI tool focused on data creation could be worth exploring. Start with basic tools that solve your immediate challenges before moving to more complex options. The goal is to pick tools that complement your process, not complicate it.

Validating AI-Generated Test Cases: The Human Element

While AI can create test cases quickly, human review remains crucial. AI systems learn from training data, which means they can inherit biases or miss important scenarios. For instance, AI might overlook key edge cases or create tests that don't match actual user behavior. Think of AI-generated test cases as first drafts that need expert review. Let experienced testers check that the tests cover all necessary situations and align with project goals. Their expertise helps catch issues that AI might miss.
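Human review can be supported, not replaced, by a mechanical pre-check that sends structurally incomplete drafts back before a tester spends time on them. This sketch assumes drafts arrive as dictionaries carrying the fields from "Essential Elements of a Test Case"; the field names are assumptions. It only catches missing fields and duplicate IDs; whether a draft tests the right scenarios remains the reviewer's call.

```python
REQUIRED_FIELDS = ("id", "name", "preconditions", "steps", "test_data", "expected_result")


def precheck_drafts(drafts: list[dict]) -> dict[str, list[str]]:
    """Return {draft id: [problems]} for drafts not yet ready for human review."""
    problems: dict[str, list[str]] = {}
    seen_ids: set[str] = set()
    for index, draft in enumerate(drafts):
        draft_id = draft.get("id") or f"<draft #{index}>"
        issues = [f"missing {field}" for field in REQUIRED_FIELDS if not draft.get(field)]
        if draft_id in seen_ids:
            issues.append("duplicate id")
        seen_ids.add(draft_id)
        if issues:
            problems.setdefault(draft_id, []).extend(issues)
    return problems


# Hypothetical AI-generated drafts.
drafts = [
    {"id": "TC_AI_001", "name": "Search with valid keyword", "preconditions": ["On homepage"],
     "steps": ["Enter 'Laptop'", "Click search"], "test_data": {"keyword": "Laptop"},
     "expected_result": "Laptops are listed"},
    {"id": "TC_AI_002", "name": "Search with empty keyword", "preconditions": ["On homepage"],
     "steps": ["Click search"], "test_data": {"keyword": ""}, "expected_result": ""},
]
print(precheck_drafts(drafts))  # {'TC_AI_002': ['missing expected_result']}
```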

Defining Clear Roles and Responsibilities in AI-Assisted Testing

Adding AI to your testing process means updating how your team works together. Teams need to decide who will choose which tests to automate, who reviews AI-generated tests, and who maintains the AI systems. Clear roles help everyone understand their part and make it easier to measure how well AI is working. For example, having senior testers review AI-generated tests helps maintain quality standards and builds trust in the process.

Identifying Scenarios Where Traditional Test Case Writing Still Reigns

AI brings many benefits but isn't always the best choice. Some types of testing work better with traditional human approaches. Take exploratory testing - it needs human creativity to find unexpected problems. Or usability testing, which requires understanding how real people interact with software. Complex scenarios with detailed user interactions or visual checks often need human judgment. Use AI for repetitive tasks and data-heavy testing while letting human testers focus on areas that need critical thinking. Like choosing the right tool for different woodworking tasks, picking the right testing approach - whether AI or manual - leads to the best results.

Knowing where AI helps and where human expertise is essential lets teams get the most from both. This balanced approach improves testing efficiency while maintaining thorough coverage and quality.

Building a Culture of Testing Excellence

Creating effective test cases is a crucial first step, but there's something just as important: establishing an environment where testing becomes second nature. The best software teams understand that testing shouldn't be an afterthought - it needs to be woven into every part of development. Let's look at how successful teams build this kind of testing mindset through teamwork, well-defined processes, and steady progress.

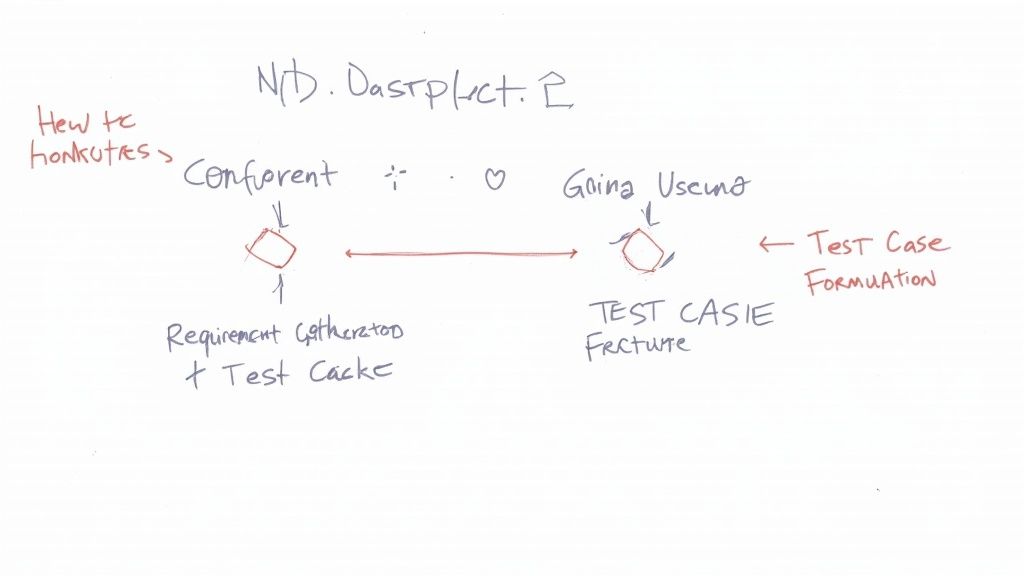

Collaboration: The Key to Effective Test Case Management

Good testing starts with tearing down barriers between teams. When developers, testers, and other team members work closely together, testing becomes much more effective. For instance, having developers review test cases early often surfaces potential problems before coding begins. Regular check-ins with product owners and business teams help focus testing on what matters most to users. This shared approach makes testing a natural part of building software rather than just a final checkbox.

Review Processes: Catching Bugs Before They Hatch

Just as good writing needs editing, test cases need thorough reviews to catch problems early. Think of reviews as your quality safety net - they help spot unclear test steps, missing scenarios, and gaps in test coverage before issues reach users. Teams can do quick peer reviews between testers or more detailed reviews with the whole development group. The important thing is creating an open environment where everyone feels comfortable giving honest feedback to make tests better.

Continuous Improvement: Evolving Your Test Suite

Software keeps changing, and testing needs to keep up. Building strong testing practices means always looking for ways to improve. Regularly step back and ask key questions: Are we testing the things that matter most? Could our tests be more efficient? Are there duplicate tests we could combine? The goal is steady progress toward better testing over time.

Looking at data helps guide these improvements. Track things like how long tests take to run, how many bugs they find, and how much of your code they cover. These numbers show what's working well and what needs attention. Make it easy for the whole team to suggest ways to make testing better. Like agile development, testing works best when you can adapt quickly based on what you learn.
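A sketch of turning raw run records into some of these numbers. The record format is hypothetical; in practice the data would come from your test runner or CI reports, and code coverage would come from a separate coverage tool.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical run history: one record per test execution.
runs = [
    {"test": "TC_Login_ValidCredentials", "seconds": 1.2, "failed": False, "bug_found": False},
    {"test": "TC_Login_ValidCredentials", "seconds": 1.4, "failed": False, "bug_found": False},
    {"test": "TC_Checkout_DeclinedCard", "seconds": 9.8, "failed": True, "bug_found": True},
    {"test": "TC_Checkout_DeclinedCard", "seconds": 10.2, "failed": False, "bug_found": False},
    {"test": "TC_Search_001", "seconds": 0.6, "failed": True, "bug_found": False},
]


def summarize(records: list[dict]) -> dict[str, dict]:
    """Per test: average duration, failure count, and failures that exposed real bugs."""
    by_test = defaultdict(list)
    for record in records:
        by_test[record["test"]].append(record)
    return {
        name: {
            "avg_seconds": round(mean(r["seconds"] for r in recs), 2),
            "failures": sum(r["failed"] for r in recs),
            "bugs_found": sum(r["bug_found"] for r in recs),
        }
        for name, recs in by_test.items()
    }


summary = summarize(runs)
slowest = max(summary, key=lambda name: summary[name]["avg_seconds"])
print(slowest)  # TC_Checkout_DeclinedCard
```

A test that fails without exposing a bug (like `TC_Search_001` here) may be flaky or outdated and is worth a closer look; a slow test that never finds bugs may be a candidate for trimming.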

Mergify helps streamline testing by automatically handling pull request merges based on your test results. This frees up your team to focus on building better tests and delivering quality software. See how Mergify can help improve your testing workflow today.