How to Write Integration Tests: A Practical Playbook for Modern Development Teams

Breaking Down Integration Testing Fundamentals

Integration testing examines how different parts of a software system work together, going beyond testing individual components in isolation. Think of it like assembling a car - even if the engine, transmission, and wheels each work perfectly on their own, the car won't move unless they're properly integrated. The same principle applies to software components, which need thorough testing to ensure they function smoothly as a unified system.

Why Integration Tests Matter

Well-designed integration tests catch problems early, before they become expensive headaches. By identifying issues where components interact, these tests help teams spot and fix bugs during development rather than after deployment. This early detection saves significant time and resources while giving confidence that the system will work reliably for end users.

Key Concepts in Integration Testing

Understanding these core concepts helps create better integration tests:

- Scope: Be clear about what you're testing - whether it's the interaction between two specific modules or broader system functionality. A focused scope keeps tests manageable and purposeful.

- Dependencies: Map out how components connect and share data. Knowing these relationships helps create tests that catch real-world issues.

- Environment: Tests should run in conditions that match production as closely as possible, including realistic data and real or faithfully simulated versions of dependencies such as databases and external services.

Practical Tips for Writing Effective Integration Tests

Here's how to create integration tests that deliver results:

- Start with a Plan: Map out which integration points need testing, what good behavior looks like, and what test data you'll use.

- Test Critical Paths First: Focus on the connections most vital to your system's core functions or most likely to cause problems. This ensures you catch the most important issues.

- Use a Suitable Testing Framework: Pick tools that make writing and running tests easier. xUnit works well for .NET projects, offering solid test organization and reporting, while JUnit fills the same role for Java.

- Keep Tests Independent: Each test should stand alone without relying on other tests' results. This lets you run tests in any order without unexpected failures.

- Document Your Tests: Write clear descriptions of what each test checks and why. Good documentation helps team members understand and maintain tests over time.
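To make the "Keep Tests Independent" tip concrete, here is a minimal plain-Java sketch with no test framework. It assumes a hypothetical `InMemoryCartRepository` standing in for a real data store. Each test builds its own fresh fixture, and `runInRandomOrder` shuffles the execution order to show that results don't depend on sequence.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;
import java.util.function.BooleanSupplier;

// Hypothetical in-memory repository standing in for a real data store.
class InMemoryCartRepository {
    private final Map<String, Integer> quantities = new HashMap<>();

    void add(String sku, int qty) {
        quantities.merge(sku, qty, Integer::sum);
    }

    int quantityOf(String sku) {
        return quantities.getOrDefault(sku, 0);
    }
}

class IndependentTests {
    // Each test gets a brand-new fixture, so no state leaks between tests.
    static InMemoryCartRepository freshRepository() {
        return new InMemoryCartRepository();
    }

    static boolean addingItemStoresQuantity() {
        InMemoryCartRepository repo = freshRepository();
        repo.add("SKU-1", 2);
        return repo.quantityOf("SKU-1") == 2;
    }

    static boolean addingSameItemTwiceSumsQuantity() {
        InMemoryCartRepository repo = freshRepository();
        repo.add("SKU-1", 2);
        repo.add("SKU-1", 3);
        return repo.quantityOf("SKU-1") == 5;
    }

    static boolean newCartIsEmpty() {
        InMemoryCartRepository repo = freshRepository();
        return repo.quantityOf("SKU-1") == 0;
    }

    // Runs the tests in a shuffled order; independent tests pass in any order.
    static boolean runInRandomOrder(long seed) {
        List<BooleanSupplier> tests = new ArrayList<>();
        tests.add(IndependentTests::addingItemStoresQuantity);
        tests.add(IndependentTests::addingSameItemTwiceSumsQuantity);
        tests.add(IndependentTests::newCartIsEmpty);
        Collections.shuffle(tests, new Random(seed));
        for (BooleanSupplier test : tests) {
            if (!test.getAsBoolean()) {
                return false;
            }
        }
        return true;
    }
}
```

If `freshRepository()` instead returned one shared static instance, `newCartIsEmpty` would pass or fail depending on whether it happened to run before the other two tests. Frameworks such as JUnit avoid this by creating a new test-class instance per test method.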

Following these principles leads to integration tests that truly protect code quality. The result? More reliable software that's easier to maintain and update as your project grows.

Crafting Your Testing Strategy Blueprint

A clear testing strategy is essential for effective integration testing. Just as an architect needs a blueprint before construction, software teams need a solid testing plan to guide their efforts and use resources efficiently. Let's explore how to develop a testing strategy that works for your project.

Choosing the Right Integration Testing Approach

Each integration testing method has distinct advantages and limitations. Your choice should align with your project's specific needs, considering factors like team size, expertise, and acceptable risk levels.

- Big Bang: This method tests all components together as one unit. While it might seem efficient, research from Microsoft shows it can increase debugging time by 40% since finding specific issues becomes challenging [4]. Picture trying to locate a broken wire in a complex circuit all at once - it quickly becomes overwhelming. This approach works best with simple, smaller systems.

- Bottom-Up: Starting with the lowest-level modules and gradually adding higher components, this approach builds testing from the ground up, using test drivers to call the lower modules until the real higher-level callers are ready. Like constructing a house starting with the foundation, bottom-up testing creates a stable base. It works especially well when components at similar levels are ready together, and it makes problems easier to find since issues typically appear in newly added parts.

- Top-Down: Beginning with high-level modules, this method uses stubs to stand in for lower components. It helps catch major design issues early but requires careful attention to stub creation and upkeep. While effective for validating core features first, managing the stubs can become complex.

- Incremental: Top-down and bottom-up are both incremental strategies, and the hybrid (sometimes called "sandwich") variant combines them by testing small component groups from both ends and gradually expanding. Teams get quick feedback and can fix issues more easily than with Big Bang testing, while maintaining good structure.

Pick the approach that matches your project's complexity, component readiness, and team capabilities.
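The difference between a stub (top-down) and a driver (bottom-up) is easier to see in code. This is a minimal plain-Java sketch, and every type in it is hypothetical. `OrderService` is a higher-level module tested before its `InventoryClient` dependency exists, so a stub supplies fixed answers. `PriceCalculator` is a finished low-level module exercised by a driver before any caller exists.

```java
// Hypothetical lower-level dependency that may not be built yet.
interface InventoryClient {
    int stockFor(String sku);
}

// Hypothetical higher-level module under test in a top-down run.
class OrderService {
    private final InventoryClient inventory;

    OrderService(InventoryClient inventory) {
        this.inventory = inventory;
    }

    boolean canFulfil(String sku, int quantity) {
        return inventory.stockFor(sku) >= quantity;
    }
}

// Top-down: a stub replaces the unfinished InventoryClient with fixed answers.
class StubInventoryClient implements InventoryClient {
    @Override
    public int stockFor(String sku) {
        return "SKU-IN-STOCK".equals(sku) ? 10 : 0;
    }
}

// Hypothetical low-level module, finished before its callers exist.
class PriceCalculator {
    // Price in cents after a percentage discount (integer arithmetic).
    static long discountedCents(long priceCents, int discountPercent) {
        return priceCents * (100 - discountPercent) / 100;
    }
}

// Bottom-up: a driver calls the low-level module directly, standing in for
// the higher-level code that will eventually call it.
class PriceCalculatorDriver {
    static boolean run() {
        return PriceCalculator.discountedCents(2000, 25) == 1500
                && PriceCalculator.discountedCents(999, 0) == 999;
    }
}
```

As the real `InventoryClient` and the real callers of `PriceCalculator` become available, the stub and driver are replaced one at a time. This gradual substitution is what keeps failures easy to localize.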

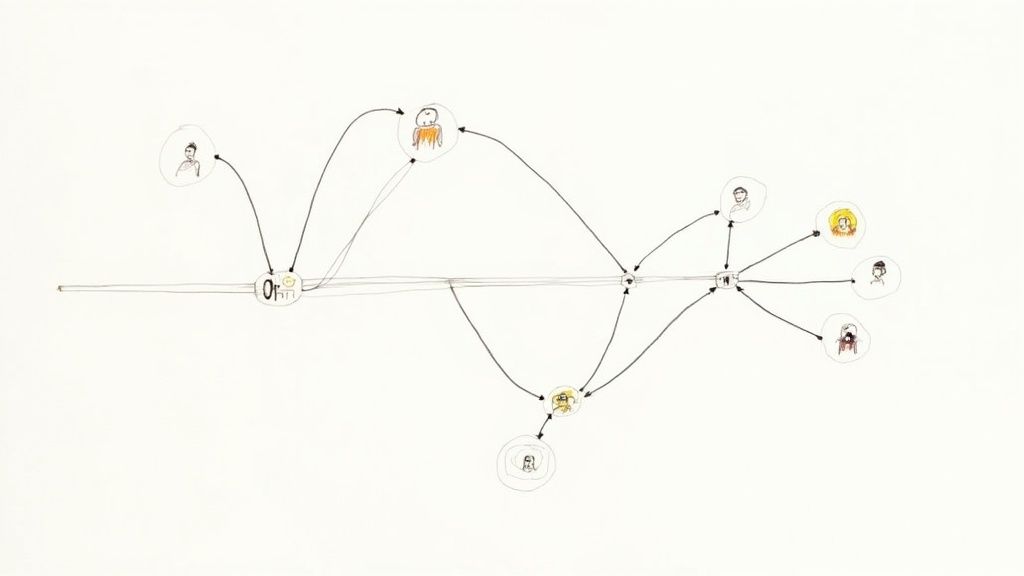

Prioritizing Your Integration Tests

Some integrations matter more than others. Focus your testing efforts based on risk level, business importance, and how often features are used. Take an e-commerce site as an example: the integration between payment processing and order management deserves more attention than the product recommendation system. Like a doctor triaging patients, address the most critical issues first. This targeted approach helps you make the most impact with your testing resources.
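One simple way to apply these three factors is to score each integration point and test the highest scores first. The sketch below assumes a 1-5 scale per factor and a multiplicative score. Both are illustrative choices, not a standard formula, and the record requires Java 16 or later.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// One integration point scored on risk, business impact, and usage frequency.
// The 1-5 scales and the multiplicative score are illustrative assumptions.
record IntegrationPoint(String name, int risk, int businessImpact, int usageFrequency) {
    int priority() {
        return risk * businessImpact * usageFrequency;
    }
}

class TestPrioritizer {
    // Returns integration points ordered from highest to lowest priority.
    static List<IntegrationPoint> prioritize(List<IntegrationPoint> points) {
        List<IntegrationPoint> sorted = new ArrayList<>(points);
        sorted.sort(Comparator.comparingInt(IntegrationPoint::priority).reversed());
        return sorted;
    }
}
```

With the e-commerce example above, scoring payment-to-order management at 5/5/5 (125) and recommendations at 2/2/4 (16) puts the payment integration at the top of the list. Multiplying the factors means a point must rate high on all three to outrank others, which stops a frequently used but low-risk feature from crowding out critical ones.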

Documenting Your Testing Strategy

Writing down your strategy helps everyone stay aligned. Include details about your chosen testing approach, which tests take priority, and why you made these choices. Think of it like a detailed recipe - good documentation helps anyone on the team achieve consistent results. It also makes it easier to maintain and adjust tests as your software grows.

A well-planned testing strategy forms the foundation of successful integration testing. By selecting appropriate methods, setting clear priorities, and maintaining good documentation, you create an efficient testing process that leads to better software. Focus on writing tests that not only check functionality but also help build a reliable system that's easy to maintain. This careful planning helps teams work faster and deliver higher quality products to users.

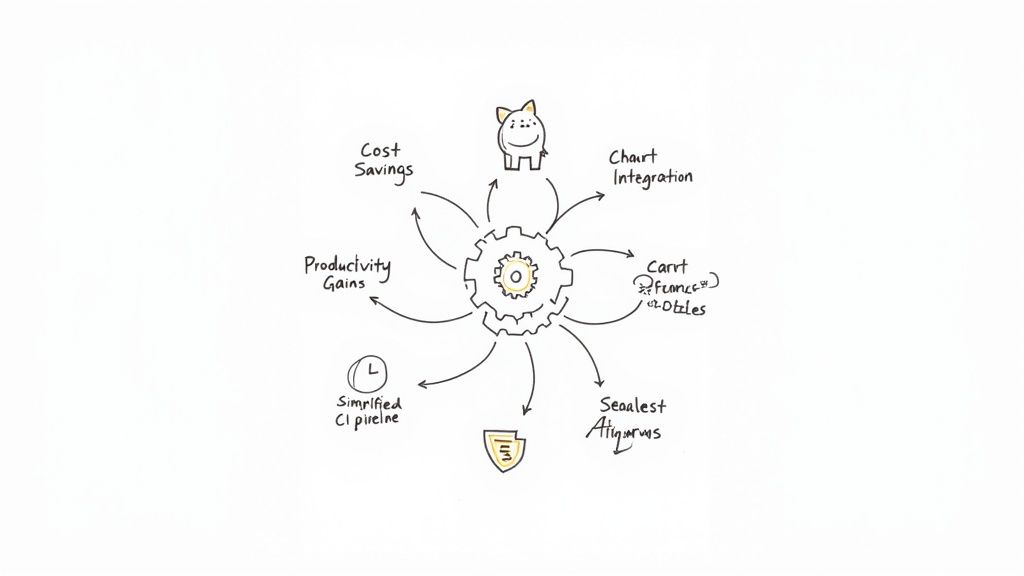

Making Automation Work for Your Team

While a solid testing strategy creates the foundation, smart automation is what enables teams to run integration tests efficiently. By combining the right tools with well-designed workflows, development teams can reduce testing time significantly while improving accuracy. Teams that get this right enjoy faster deployments and deliver more reliable software to their users.

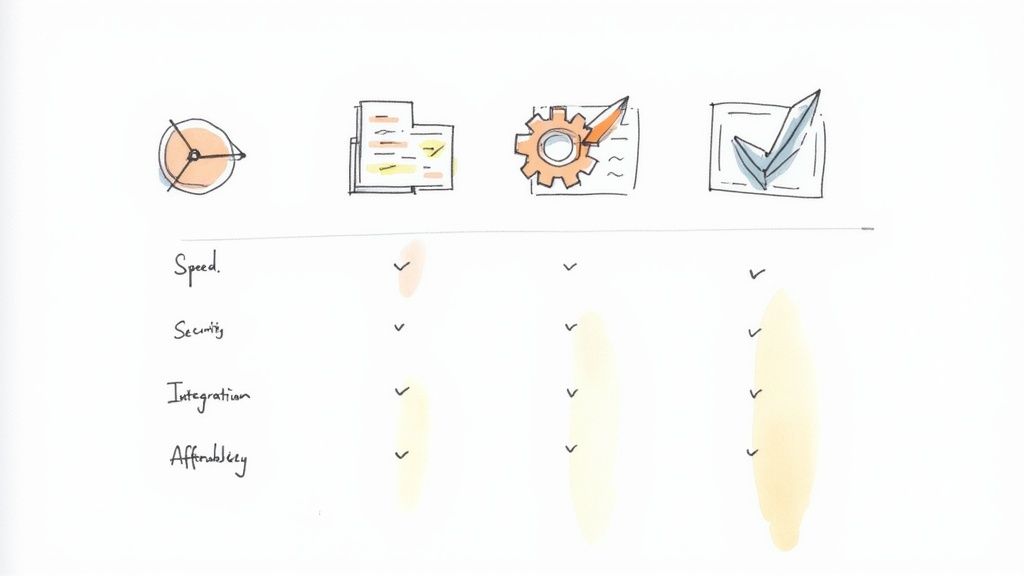

Selecting the Right Automation Tools for Integration Tests

Finding the right automation tools for integration testing requires careful consideration. Your choice should align with your existing technology stack, application complexity, and your team's experience with different frameworks.

- API Testing Tools: For testing web services and APIs, Postman makes it simple to verify API responses, organize test suites, and run those suites in CI/CD systems through its Newman command-line runner.

- UI Testing Frameworks: Selenium, widely used in the industry, helps teams automate browser interactions for thorough UI testing. This is particularly useful when testing complex workflows that span multiple parts of your system.

- Specialized Testing Platforms: Services like Mergify provide dedicated features for code integration and merge processes. Their merge queues and automated protections help teams work faster while maintaining security. They also use AI to quickly spot common CI issues, saving valuable debugging time.

The key is choosing tools that match your specific needs, enabling your team to test efficiently and effectively.
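Teams that prefer keeping API checks in code can write the same kind of assertions a Postman test makes using only the JDK (Java 11+). This sketch starts a throwaway server with `com.sun.net.httpserver.HttpServer` on an ephemeral port and calls it with `java.net.http.HttpClient`. The `/health` endpoint and its JSON body are hypothetical stand-ins for your real service.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

class HealthEndpointCheck {
    // Starts a local HTTP server on a free port (port 0). The /health
    // endpoint and its body are hypothetical stand-ins for a real service.
    static HttpServer startServer() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/health", exchange -> {
            byte[] body = "{\"status\":\"UP\"}".getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().add("Content-Type", "application/json");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }

    // Calls the endpoint over real HTTP and checks the status code and body.
    static boolean healthIsUp() throws IOException, InterruptedException {
        HttpServer server = startServer();
        try {
            int port = server.getAddress().getPort();
            HttpClient client = HttpClient.newHttpClient();
            HttpRequest request = HttpRequest.newBuilder(
                    URI.create("http://127.0.0.1:" + port + "/health")).GET().build();
            HttpResponse<String> response =
                    client.send(request, HttpResponse.BodyHandlers.ofString());
            return response.statusCode() == 200
                    && response.body().contains("\"status\":\"UP\"");
        } finally {
            server.stop(0); // release the port even if the request fails
        }
    }
}
```

In a real suite, `startServer()` would be replaced by the URL of the service under test, usually a deployed test environment or a container started by the pipeline.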

Integrating Automation into Your CI/CD Pipeline

Getting automation tools in place is just the start. The real value comes from connecting these automated tests into your CI/CD pipeline. When done right, tests run automatically with every code change, giving immediate feedback and catching integration problems early.

For instance, if a developer makes a change that breaks payment processing, having automated tests in the CI/CD pipeline means catching that issue before it affects customers. This quick feedback helps teams fix problems faster and avoid costly production issues.

Optimizing Automation Performance

Poor test performance can slow down development and reduce the benefits of automation. Here are practical ways to keep your automated tests running efficiently:

- Parallelization: Running multiple tests at once dramatically speeds up large test suites, provided the tests are independent and don't share mutable state such as database rows or files. Most modern testing tools support parallel execution, helping teams make better use of their computing resources.

- Targeted Test Execution: Focus on running tests that relate to changed code. Tools that analyze code changes can automatically pick relevant tests, making the process more efficient.

- Resource Optimization: Make sure test environments have enough computing power, memory, and network capacity. Limited resources often lead to slow and unreliable test results.
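The parallelization idea can be sketched with a plain `ExecutorService`. This is a simplified illustration of what test runners do internally, not how any particular framework implements it. Each test is a `Callable<Boolean>` that returns `true` on success, and a test that throws counts as a failure.

```java
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ParallelTestRunner {
    // Runs independent test cases on a fixed-size thread pool and returns the
    // number of failures. Only safe if the tests share no mutable state.
    static int runAll(List<Callable<Boolean>> tests, int threads) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            // invokeAll blocks until every test has completed.
            List<Future<Boolean>> results = pool.invokeAll(tests);
            int failures = 0;
            for (Future<Boolean> result : results) {
                try {
                    if (!result.get()) {
                        failures++;
                    }
                } catch (ExecutionException e) {
                    failures++; // a test that throws counts as a failure
                }
            }
            return failures;
        } finally {
            pool.shutdown();
        }
    }
}
```

Sizing the pool is where the "Resource Optimization" point applies. More threads than the environment's CPU cores or database connections can handle tends to make tests slower and flakier, not faster.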

By carefully choosing automation tools, integrating them with CI/CD, and continuously improving performance, teams can speed up development while building better software. This practical approach to integration testing gives developers the confidence to build reliable systems that work well together.

Taking Control with Mocks and Stubs

When writing integration tests that involve multiple interacting components, dealing with all the real dependencies can make testing slow and complex. This is where mocks and stubs shine - they let you simulate dependencies instead of using the actual services. Most developers have embraced this approach, with 70% using mocking libraries according to Stack Overflow surveys. Let's explore how these tools can help create better tests.

Understanding the Difference Between Mocks and Stubs

While people often use the terms interchangeably, mocks and stubs serve different purposes in testing. Getting clear on these differences helps you write more effective tests.

- Stubs: Think of stubs as simple stand-ins that return pre-programmed responses. Their job is to replace a real dependency so tests can run without it. For example, instead of connecting to a real payment gateway, a stub could simulate successful payment responses.

- Mocks: Mocks go further by verifying interactions. Beyond providing fake responses, they check that your code calls the right methods with the right parameters. This helps ensure components work together properly, not just that they handle responses correctly.

Choosing the Right Approach: When to Mock and When to Stub

The choice between mocking and stubbing depends on what you're trying to test. Use a stub when you just need to simulate responses from a dependency. But if you need to verify the exact way components interact, use a mock instead. For instance, when testing an order system, you might stub the inventory service to just return stock levels. But for the payment gateway, you'd want a mock to verify the system sends correct payment details and handles different responses appropriately.
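The order-system scenario above can be sketched with hand-written test doubles, so it runs without any library. All types here are hypothetical. The inventory stub only returns canned stock levels. The payment mock records each call so the test can check that the right customer was charged the right amount.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical collaborators of an order system.
interface InventoryService {
    int stockLevel(String sku);
}

interface PaymentGateway {
    boolean charge(String customerId, long amountCents);
}

class CheckoutService {
    private final InventoryService inventory;
    private final PaymentGateway payments;

    CheckoutService(InventoryService inventory, PaymentGateway payments) {
        this.inventory = inventory;
        this.payments = payments;
    }

    // Places an order only if stock is available and the charge succeeds.
    boolean placeOrder(String customerId, String sku, int quantity, long unitPriceCents) {
        if (inventory.stockLevel(sku) < quantity) {
            return false; // never charge for items that cannot ship
        }
        return payments.charge(customerId, unitPriceCents * quantity);
    }
}

// Stub: returns canned stock levels; the test never inspects it.
class InventoryStub implements InventoryService {
    public int stockLevel(String sku) {
        return "SKU-1".equals(sku) ? 5 : 0;
    }
}

// Mock: records every call so the test can verify the interaction,
// and returns a configurable result to exercise both outcomes.
class PaymentGatewayMock implements PaymentGateway {
    final List<String> calls = new ArrayList<>();
    private final boolean result;

    PaymentGatewayMock(boolean result) {
        this.result = result;
    }

    public boolean charge(String customerId, long amountCents) {
        calls.add(customerId + ":" + amountCents);
        return result;
    }
}
```

With Mockito, the same roles would be played by `Mockito.when(inventory.stockLevel("SKU-1")).thenReturn(5)` for the stub and `Mockito.verify(payments).charge("cust-42", 3000L)` for the mock. Hand-rolled doubles like these are still useful when you want to avoid a dependency or keep the test's intent very explicit.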

Practical Examples: Implementing Mocks and Stubs

Let's look at a real example: testing user registration that includes sending welcome emails. You don't want to send actual emails during testing, so here's how mocking helps:

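```java
// Example using Mockito (Java mocking framework) with JUnit 5.
// UserService, EmailService, and User are the application's own classes.
import org.junit.jupiter.api.Test;
import org.mockito.Mockito;

class UserRegistrationTest {

    @Test
    public void testUserRegistration() {
        // Replace the real email service with a mock so no email is sent.
        EmailService mockEmailService = Mockito.mock(EmailService.class);
        UserService userService = new UserService(mockEmailService);

        User newUser = new User("test@example.com");
        userService.registerUser(newUser);

        // Verify that the email service's sendWelcomeEmail method was called
        // with the correct user object.
        Mockito.verify(mockEmailService).sendWelcomeEmail(newUser);
    }
}
```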

This example shows how mocking helps verify interactions with the email service. Rather than testing email delivery itself, it confirms the registration process calls the email service correctly. This focused approach keeps tests quick and maintainable. By removing dependencies on external services, tests run faster and more reliably. You can also easily test error cases that would be hard to trigger with real services, making your test suite more complete.

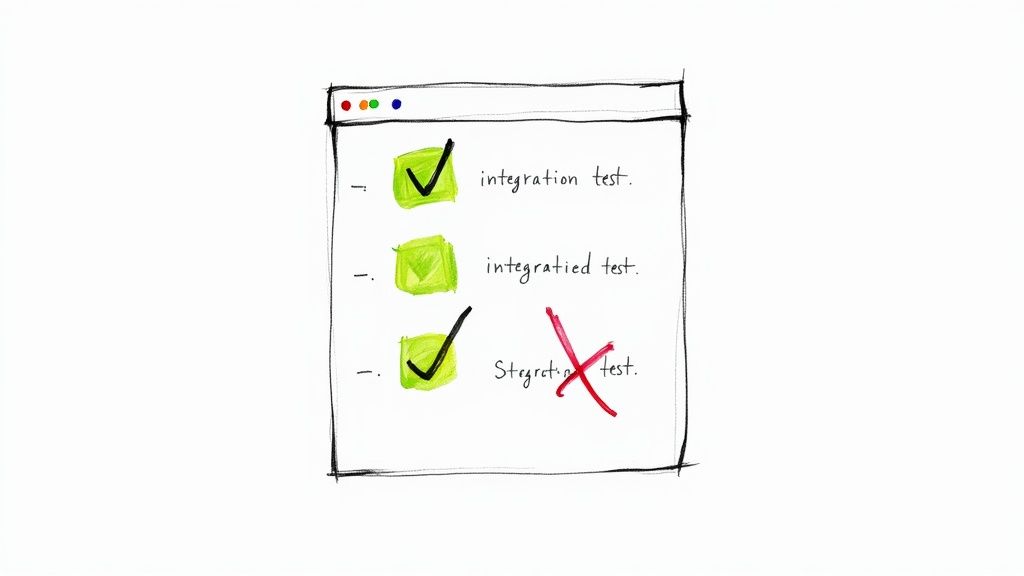

Building Reliable Regression Testing Systems

As software projects evolve and grow more complex, keeping existing features working while adding new ones becomes increasingly challenging. Regression testing addresses this challenge directly by verifying that recent code changes haven't broken existing functionality. With the right approach, regression testing can catch up to 20% more defects before they reach production, according to studies by Capgemini [1], while keeping development moving quickly.

Defining the Scope of Your Regression Tests

The first step in effective regression testing is identifying what actually needs testing after code changes. Focus on areas most likely to be impacted by recent updates. For example, if you modify a shopping cart feature, prioritize testing checkout flows, inventory updates, and order history rather than testing everything. This targeted approach helps catch critical issues efficiently without running unnecessary tests.
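Identifying "areas most likely to be impacted" can be partly automated with a dependency graph. This sketch assumes you can list, for each module, the modules that depend on it directly, and which regression tests cover each module. Module and test names are hypothetical. It walks the graph breadth-first from the changed modules and collects the tests that cover anything reachable.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class ImpactAnalysis {
    // dependents maps a module to the modules that depend on it directly
    // (e.g. "cart" -> ["checkout", "orderHistory"]). Returns every module
    // transitively reachable from the changed ones, including the changed ones.
    static Set<String> affectedModules(Map<String, List<String>> dependents, Set<String> changed) {
        Set<String> affected = new TreeSet<>(changed);
        Deque<String> queue = new ArrayDeque<>(changed);
        while (!queue.isEmpty()) {
            String module = queue.poll();
            for (String dependent : dependents.getOrDefault(module, List.of())) {
                if (affected.add(dependent)) { // enqueue each module only once
                    queue.add(dependent);
                }
            }
        }
        return affected;
    }

    // testsByModule maps each module to the regression tests that cover it.
    static Set<String> testsToRun(Map<String, List<String>> testsByModule, Set<String> affected) {
        Set<String> selected = new TreeSet<>();
        for (String module : affected) {
            selected.addAll(testsByModule.getOrDefault(module, List.of()));
        }
        return selected;
    }
}
```

For the shopping-cart example, a change to `cart` would select the checkout, inventory, and order-history tests while skipping unrelated areas such as user settings. The result is only as good as the dependency map, so many teams still run the full suite on a schedule as a safety net.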

Selecting the Right Tests for Regression

Not every test belongs in your regression suite. Focus on tests that verify essential user flows, high-risk areas, and frequently used features. For a social media app, testing core features like posting content, user authentication, and notifications makes more sense than testing rarely used settings. This way, you ensure the most important functionality works correctly after each update.

Automating Your Regression Test Suite

As applications grow, manual regression testing becomes impractical and prone to human error. Automation makes regression testing both faster and more reliable. Tools like Selenium and Postman, used by 70% of developers according to Stack Overflow surveys, help automate UI and API testing. By running these tests automatically in your CI/CD pipeline, you get quick feedback on code changes. Services like Mergify can trigger relevant tests during merge requests, catching problems early before they affect users.

Maintaining Your Regression Tests

Just like application code, regression tests need regular updates to stay useful. When your software changes, update related tests to match new features and modified functionality. If you redesign your app's interface, update UI tests accordingly. Remove duplicate or outdated tests to keep your suite efficient and focused. This ongoing maintenance helps regression testing continue catching real issues without slowing down development. Regular reviews ensure your regression suite stays practical and effective, helping you ship quality code confidently while meeting user expectations.

Avoiding Common Integration Testing Pitfalls

Let's explore the most common mistakes teams make with integration testing and practical ways to address them. Understanding these challenges will help you build a testing approach that delivers reliable results over time.

The Environmental Mismatch

When test and production environments don't match, you risk missing critical issues that only appear in real-world conditions. Tests may pass in your test setup but fail in production due to differences in database configurations, hardware specs, or data patterns. To prevent this, make your test environment mirror production as closely as possible - use similar hardware resources, keep software versions aligned, and work with representative data sets.
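A lightweight guard against this mismatch is an automated parity check that compares key versions and settings between environments. This sketch assumes each environment's facts (database version, runtime version, time zone, and so on) have already been collected into a map. How you gather them, for example from config files or version queries, is outside the sketch.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

class EnvironmentParity {
    // Compares two environment descriptions (setting -> value) and returns
    // each differing or missing setting with both values, sorted by name.
    static Map<String, String> drift(Map<String, String> production, Map<String, String> test) {
        Set<String> keys = new TreeSet<>(production.keySet());
        keys.addAll(test.keySet());
        Map<String, String> differences = new TreeMap<>();
        for (String key : keys) {
            String prodValue = production.getOrDefault(key, "<missing>");
            String testValue = test.getOrDefault(key, "<missing>");
            if (!prodValue.equals(testValue)) {
                differences.put(key, "production=" + prodValue + ", test=" + testValue);
            }
        }
        return differences;
    }
}
```

Running a check like this at the start of the integration stage and failing when the result is non-empty turns silent environment drift into an explicit, reviewable difference.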

The Over-Mocking Mess

While mocking can simplify testing complex systems, too much mocking leads to fragile tests. Take a user registration flow - if you mock every service call and database interaction, your tests become tightly coupled to implementation details. Even small changes to component interactions can break multiple tests, despite core functionality working correctly. Strike a balance by mocking external services you don't control while testing internal component interactions with real implementations where possible.
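Here is one way that balance might look for the registration flow. This is a sketch with hypothetical types. The external email provider is replaced by a simple recording fake. `UserRepository` stands for the application's real internal class, simplified here to an in-memory map so the example runs on its own. The test then asserts on outcomes (the user is saved, duplicates are rejected) rather than on the exact sequence of internal calls.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// External dependency we don't control: replaced with a simple fake.
interface Mailer {
    void send(String to, String subject);
}

class RecordingMailer implements Mailer {
    final List<String> sent = new ArrayList<>();

    public void send(String to, String subject) {
        sent.add(to + "|" + subject);
    }
}

// Internal collaborator used for real (in-memory here for brevity).
class UserRepository {
    private final Map<String, String> namesByEmail = new HashMap<>();

    boolean exists(String email) { return namesByEmail.containsKey(email); }
    void save(String email, String name) { namesByEmail.put(email, name); }
    Optional<String> findName(String email) { return Optional.ofNullable(namesByEmail.get(email)); }
}

class RegistrationService {
    private final UserRepository users;
    private final Mailer mailer;

    RegistrationService(UserRepository users, Mailer mailer) {
        this.users = users;
        this.mailer = mailer;
    }

    // Rejects duplicate emails; otherwise saves the user and sends a welcome mail.
    boolean register(String email, String name) {
        if (users.exists(email)) {
            return false;
        }
        users.save(email, name);
        mailer.send(email, "Welcome!");
        return true;
    }
}
```

Because the test checks the repository's state instead of verifying every call into it, `RegistrationService` can be refactored internally (for example, to merge `exists` and `save` into one call) without breaking the test, as long as the observable behavior stays the same.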

The Test Data Desert

Poor test data quality masks bugs and creates blind spots in your testing. For example, if you only test a sorting algorithm with pre-sorted data, you'll miss issues with unsorted inputs. Static test data that never changes can hide regressions over time. Combat this by generating diverse, realistic test data using tools like AutoFixture for .NET. Regular data refreshes help ensure your tests continue catching real issues.
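The sorting example translates directly into code. This sketch uses plain `java.util.Random` rather than a data-generation library. The `insertionSort` method is a hypothetical piece of code under test. The generator mixes hand-picked edge cases (empty, single element, sorted, reverse-sorted, duplicates, extreme values) with seeded random arrays, and `Arrays.sort` serves as a trusted oracle for the expected result.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Random;

class SortDataTest {
    // Hypothetical code under test: a hand-written insertion sort.
    static int[] insertionSort(int[] input) {
        int[] a = input.clone();
        for (int i = 1; i < a.length; i++) {
            int key = a[i];
            int j = i - 1;
            while (j >= 0 && a[j] > key) {
                a[j + 1] = a[j];
                j--;
            }
            a[j + 1] = key;
        }
        return a;
    }

    // Builds edge cases plus seeded random arrays; recording the seed makes
    // any failure reproducible.
    static List<int[]> generateInputs(long seed, int randomCases) {
        List<int[]> inputs = new ArrayList<>();
        inputs.add(new int[] {});                                         // empty
        inputs.add(new int[] {42});                                       // single element
        inputs.add(new int[] {1, 2, 3, 4, 5});                            // already sorted
        inputs.add(new int[] {5, 4, 3, 2, 1});                            // reverse sorted
        inputs.add(new int[] {3, 1, 3, 1, 3});                            // duplicates
        inputs.add(new int[] {Integer.MAX_VALUE, Integer.MIN_VALUE, 0, -1}); // extremes
        Random random = new Random(seed);
        for (int i = 0; i < randomCases; i++) {
            int[] values = new int[random.nextInt(50)];
            for (int k = 0; k < values.length; k++) {
                values[k] = random.nextInt(201) - 100; // narrow range makes duplicates likely
            }
            inputs.add(values);
        }
        return inputs;
    }

    // Compares the sort against Arrays.sort as an oracle and returns the
    // number of inputs that were sorted incorrectly.
    static int countFailures(long seed, int randomCases) {
        int failures = 0;
        for (int[] input : generateInputs(seed, randomCases)) {
            int[] expected = input.clone();
            Arrays.sort(expected);
            if (!Arrays.equals(expected, insertionSort(input))) {
                failures++;
            }
        }
        return failures;
    }
}
```

Changing the seed from run to run (and logging it) provides the "regular data refresh" the text recommends while keeping any failure reproducible.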

The Documentation Dilemma

When tests lack clear documentation, debugging failures becomes needlessly difficult. Picture a failing test months after it was written - without context about its purpose and expected behavior, the team wastes time investigating why it exists and what it validates. Write clear, concise documentation explaining each test's goal, expected outcomes, and key assumptions. This small upfront investment saves significant maintenance time later.

The Unmaintained Test Suite

Integration tests need ongoing updates as your codebase evolves. Neglecting test maintenance leads to an ineffective and unreliable suite. For instance, when adding a new payment method to an e-commerce system, failing to update related integration tests leaves critical paths untested. Make test updates part of your development process - modify tests when features change and remove outdated ones to keep the suite focused.

By actively addressing these common issues, teams can build integration testing practices that consistently improve software quality while reducing maintenance costs. Taking a thoughtful, systematic approach to testing helps teams ship reliable software with confidence.

Ready to improve your team's integration testing and CI/CD pipeline? Explore how Mergify can help automate your testing workflows, enhance code security, and improve developer productivity. Learn more at https://mergify.com