How to Deal with the Abuse of your Service?

As Mergify keeps growing, we keep encountering new issues. A few weeks ago, we explained how we had to adapt to our traffic increase and handle close to 1M events per day.

With growth comes its share of abusive accounts. In the same fashion that our workload grew, the number of abusive accounts expanded. To avoid disturbance in the service, those users have to be managed.

Think about it like having the wrong people at a party. 👯‍♀️

There are several steps to successfully manage users who behave badly:

- Identify them;

- Block them;

- Implement a long-term strategy that prevents running into the same issue.

The third step is essential as you grow: if you stop at the second one, identifying and blocking abusers by hand will take more and more time. As you scale up, you don't want the first two steps to eat more time every month.

How to spot abusers?

There are multiple ways to define an abusive account. The simplest definition is that it behaves in a way that is far out of the ordinary.

At Mergify, we suspect accounts based on how they manage their GitHub space: some have a large number of seemingly random repositories, which slows down the processing of requests, while others are constantly out of API quota because they are testing multiple GitHub Apps at the same time.

There are also spambots on GitHub, though they usually do no harm to our application. They are mostly a nuisance for GitHub itself.

The best way to spot your abusers is to rely on a monitoring system that gives you metrics about how your users behave. We use Datadog for observability, with a mix of APM and custom metrics, to detect possibly malicious accounts.
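To make "out of the ordinary" concrete, here is a minimal sketch that compares each account to the rest of the population. It is not how Mergify or Datadog does it: the function name `find_outliers`, the `factor` of 20, and the sample numbers are all assumptions made up for illustration.

```python
from statistics import median
from typing import Dict, List


def find_outliers(values_by_account: Dict[str, float], factor: float = 20.0) -> List[str]:
    """Return the accounts whose value exceeds `factor` times the median.

    `factor` is a hypothetical tuning knob, not a recommended value.
    """
    if not values_by_account:
        return []
    baseline = median(values_by_account.values())
    # Guard against a zero median, which would flag every non-zero account.
    threshold = max(baseline, 1.0) * factor
    return sorted(
        account
        for account, value in values_by_account.items()
        if value > threshold
    )


# Hypothetical sample: number of API requests per account over one day.
requests_per_account = {
    "alice": 120,
    "bob": 95,
    "carol": 140,
    "dave": 110,
    "mallory": 48000,
}
suspects = find_outliers(requests_per_account)
print(suspects)
```

Comparing against the median rather than the mean keeps a single huge account from raising the baseline and hiding itself.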

What to do?

When you have pinpointed users who you consider to be a problem for your service, it's time to act.

First of all, you need to consider the different options that you can implement:

- The simplest solution is to do nothing. After all, the problems with this type of account may not happen very often, and they might not be worth the time to solve. I would not recommend ignoring the issue, though: if you let a situation like that happen, continue, and grow, it will become more difficult to solve later.

- A defensive solution can be to block every user by default. You could come up with an allow-list where you'd vet your users manually. Obviously, that's a little too extreme and time-consuming since you'd have to manually authorize every good user.

- The third, and more realistic, solution consists of defining the limit between an abusive account and a clean account. Using tests and metrics, you should be able to identify when and where there is a problem. For example, you could define a limit that must not be exceeded, based on the number of requests or on the time taken to process an account. When you diagnose an account with abusive behavior, you can block it manually or automatically using a script.

In the case of Mergify, we defined several metrics that would trigger an abuse detection:

- An account has a large number of repositories;

- An account has a repository with a large number of collaborators;

- An account keeps exceeding its GitHub API quota by doing too many requests.
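As an illustration, the three criteria above could be expressed as simple threshold rules. This is only a sketch: the thresholds, the `AccountStats` fields, and the function names are hypothetical and do not reflect the values or code Mergify uses.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List

# Hypothetical thresholds; real values would come from your own metrics.
MAX_REPOSITORIES = 1000
MAX_COLLABORATORS = 500
MAX_QUOTA_EXHAUSTIONS = 10


@dataclass
class AccountStats:
    login: str
    repositories: int        # number of repositories on the account
    max_collaborators: int   # collaborators on its largest repository
    quota_exhaustions: int   # times the GitHub API quota was exceeded recently


def abuse_reasons(stats: AccountStats) -> List[str]:
    """Return the list of criteria this account trips (empty if none)."""
    reasons = []
    if stats.repositories > MAX_REPOSITORIES:
        reasons.append("too many repositories")
    if stats.max_collaborators > MAX_COLLABORATORS:
        reasons.append("repository with too many collaborators")
    if stats.quota_exhaustions > MAX_QUOTA_EXHAUSTIONS:
        reasons.append("keeps exceeding the GitHub API quota")
    return reasons


def list_candidates(accounts: Iterable[AccountStats]) -> Dict[str, List[str]]:
    """Build the list of candidates for manual review, with the reasons."""
    candidates = {}
    for stats in accounts:
        reasons = abuse_reasons(stats)
        if reasons:
            candidates[stats.login] = reasons
    return candidates


accounts = [
    AccountStats("regular-team", 40, 25, 0),
    AccountStats("repo-hoarder", 12000, 3, 0),
    AccountStats("app-tester", 15, 2, 57),
]
candidates = list_candidates(accounts)
for login, reasons in candidates.items():
    print(login, "->", ", ".join(reasons))
```

Returning the reasons alongside each login keeps the final decision with a human reviewer, which matches a process where identification is automated but blocking is not.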

In our case, we chose not to automate the blocking process. The problem does not happen often enough yet, so we're not 100% sure of our criteria and we're still consolidating our data. However, the identification process is automated, and a single click gives us a list of candidates.

Then, when we know we no longer want to serve a user because of the way they use the service, we toggle a flag that stops serving them. Technically, this disables every feature on the account and denies any request from this user. Again, this step is fully automated, which will make it quite simple to plug identification into blocking later on, once we are sure of the criteria.

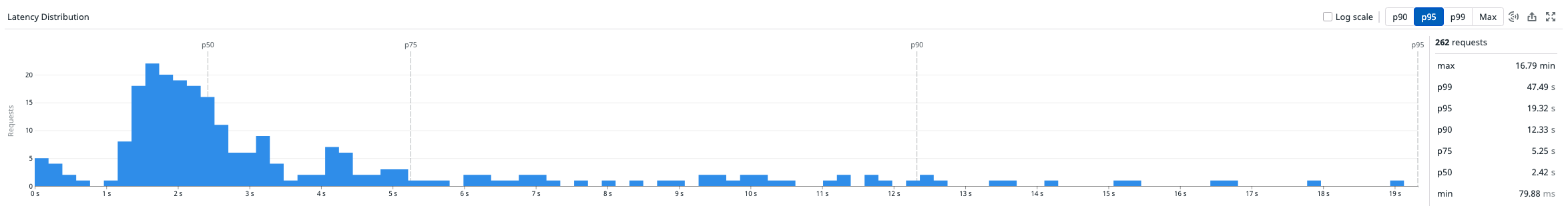
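A blocking flag of this kind can be sketched in a few lines. The `Account` class, the `blocked` field, and `handle_event` are hypothetical names chosen for the example; the actual Mergify data model is not described here.

```python
from dataclasses import dataclass


class AccountBlocked(Exception):
    """Raised when a request targets an account that has been blocked."""


@dataclass
class Account:
    login: str
    blocked: bool = False
    blocked_reason: str = ""


def block(account: Account, reason: str) -> None:
    """Toggle the flag; every later request for this account is denied."""
    account.blocked = True
    account.blocked_reason = reason


def handle_event(account: Account, event: str) -> str:
    # Check the flag once, at the entry point, so that no feature
    # has to implement its own check.
    if account.blocked:
        raise AccountBlocked(f"{account.login}: {account.blocked_reason}")
    return f"processed {event} for {account.login}"


account = Account("repo-hoarder")
first = handle_event(account, "pull_request.opened")

block(account, "abusive usage")
try:
    handle_event(account, "pull_request.opened")
    denied = False
except AccountBlocked:
    denied = True
print(first, "| denied after block:", denied)
```

Because the check lives in a single place, an automated detector could later call `block` directly without touching the rest of the service.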

Blocking abusive accounts can save a lot of resources. For example, updating a Mergify account by querying its GitHub account takes less than 20 seconds for 95% of the accounts. However, we've seen some abusive accounts take up to 5 hours due to the number of repositories they enabled.

We always monitor the maximum latency on this update operation, so we know something might be wrong if it ever increases to a very high value. Then, investigating and blocking a potential bad user is a matter of seconds.
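The sketch below shows why the maximum is the interesting number here: the 95th percentile stays low while one account pushes the maximum to hours. The alert threshold, the function names, and the sample durations are assumptions for illustration; in practice, a monitoring system would compute and alert on these values for you.

```python
import math
from typing import Dict, Sequence

# Hypothetical alert threshold, in seconds.
MAX_LATENCY_ALERT_SECONDS = 600


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile of `samples` (pct between 1 and 100)."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_update_latency(durations: Sequence[float]) -> Dict[str, object]:
    """Summarize account-update durations and decide whether to alert."""
    worst = max(durations)
    return {
        "p95": percentile(durations, 95),
        "max": worst,
        "alert": worst > MAX_LATENCY_ALERT_SECONDS,
    }


# Made-up durations in seconds: 19 ordinary accounts and one taking 5 hours.
durations = [4, 6, 7, 8, 9, 10, 11, 12, 12, 13,
             14, 15, 15, 16, 17, 18, 18, 19, 19, 18000]
report = check_update_latency(durations)
print(report)
```

A percentile alone would hide the abusive account, since a single slow update barely moves it; the maximum reacts immediately.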

Going further

Once you're ready to connect the different steps, you can have a fully automated abuse-handling mechanism. This is quite powerful, and it is a required system for many software-as-a-service platforms that anyone on the Internet can easily access.