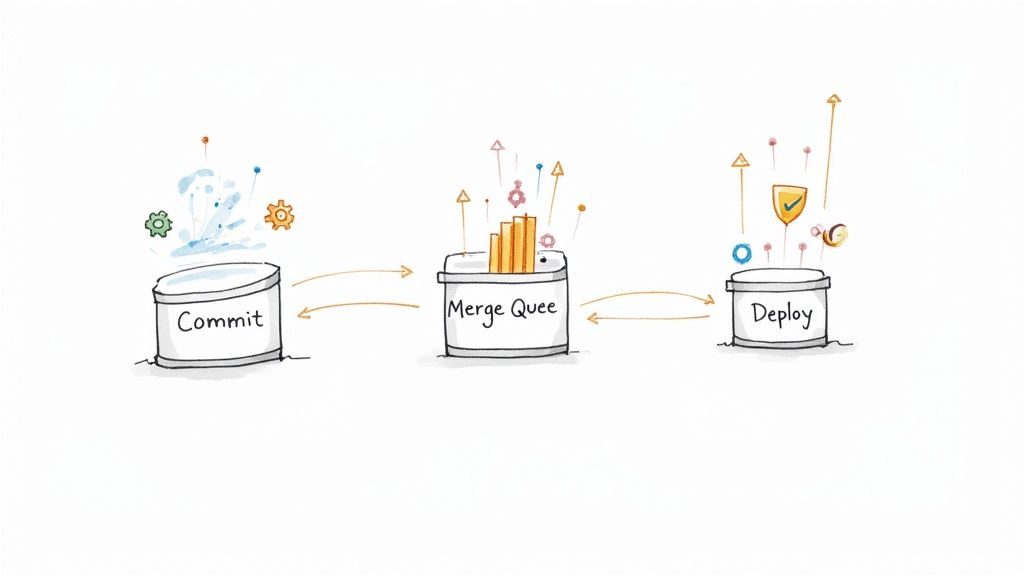

Flaky Test Example: How to Recognize Flaky Tests and Catch the Culprit in Your CI/CD Pipeline

Flaky tests are the chameleons of your CI/CD pipeline, passing and failing unpredictably. This article shows you how to spot these elusive culprits, walks through a concrete flaky test example, and offers practical tips for turning your pipeline into a well-oiled, reliable machine.

Imagine you’re a detective in a classic mystery novel. You’ve got a list of suspects, a room full of clues, and a magnifying glass to examine the evidence. But in this version of the tale, the room is your CI/CD pipeline, the suspects are your tests, and the magnifying glass? Well, let's call it your keen developer instincts. Among the suspects, there’s one so elusive, changing its colors like a chameleon, that it becomes notoriously difficult to catch: the Flaky Test.

What’s a Flaky Test?

Imagine a "friend" who's sometimes punctual, sometimes late, and you can never predict which version will show up. Flaky tests are just like that unpredictable friend. One minute they're passing without a hitch, the next they're failing, and you're left scratching your head wondering what just happened. Unlike deterministic tests, flaky tests can produce different outcomes even when run against the same code under the same initial conditions. They're the tricksters of your CI/CD pipeline, causing chaos and slowing you down.

If you want to know more about flaky tests, you should definitely read Fabien's article "Flaky Tests: What Are They and How to Classify Them?".

How to Recognize a Flaky Test?

1. Observe the Behavior Over Time

Think of this as stalking your suspects in an old black-and-white movie. Run the suspect test multiple times without making any changes in the code or environment. If the test switches between pass and fail without any evident reason, you've caught yourself a flaky!
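To make the stakeout systematic, you can script the reruns. Below is a minimal Python sketch, assuming the test is a plain callable that raises an exception on failure (as pytest-style tests do). The helpers `rerun` and `is_flaky` are hypothetical names, and `coin_flip_test` is a deliberately flaky stand-in for a real test.

```python
import random
from collections import Counter
from typing import Callable


def rerun(test_fn: Callable[[], None], runs: int = 50) -> Counter:
    """Run test_fn repeatedly without changing anything and tally the outcomes."""
    outcomes = Counter()
    for _ in range(runs):
        try:
            test_fn()
            outcomes["pass"] += 1
        except Exception:  # assertion errors, timeouts, etc. all count as failures
            outcomes["fail"] += 1
    return outcomes


def is_flaky(outcomes: Counter) -> bool:
    """Both passes and failures with nothing changed in between: the telltale sign."""
    return outcomes["pass"] > 0 and outcomes["fail"] > 0


# Hypothetical stand-in for a real test: passes roughly half of the time.
rng = random.Random(7)


def coin_flip_test() -> None:
    assert rng.random() < 0.5


results = rerun(coin_flip_test, runs=100)
print(results, "flaky" if is_flaky(results) else "no flakiness observed")
```

Keep in mind that a test that never fails in 100 runs is not proven stable, only less suspicious; rare flakes may need many more runs to show up.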

2. Isolate the Suspect

Quarantine the test as if it's carrying the plague: run it separately from the rest of the suite so you can examine its behavior without interference from other tests. Sometimes a test only looks "flaky" because other tests interfere with it, for example by leaving shared state behind. If it passes reliably on its own but fails in the full suite, look for that kind of interference; if it still behaves unpredictably in isolation, you can be quite certain it's a flaky test.

3. Interrogate the Logs

Dive deep into the log files and CI reports as if you're poring over a cryptic manuscript. They often hold clues to why the test is flaking. Are there timeout errors? Does the test's execution time vary noticeably from run to run? These are your leads.
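When the logs are long, a short script can surface these leads for you. The Python sketch below assumes a hypothetical one-line-per-run log format (`<test> <PASSED|FAILED> in <seconds>s <message>`); real test runners format their output differently, so adapt the regular expression to yours. It compares the average duration of passing and failing runs and counts timeout messages.

```python
import re
from statistics import mean

# Hypothetical log format: "<test name> <PASSED|FAILED> in <seconds>s <optional message>"
LINE_RE = re.compile(
    r"^(?P<name>\S+) (?P<status>PASSED|FAILED) in (?P<secs>\d+(?:\.\d+)?)s(?P<msg>.*)$"
)


def summarize(log_lines: list[str]) -> dict:
    """Compare pass/fail durations and count timeout messages in CI log lines."""
    durations: dict[str, list[float]] = {"PASSED": [], "FAILED": []}
    timeouts = 0
    for line in log_lines:
        match = LINE_RE.match(line.strip())
        if match is None:
            continue  # skip lines that are not test results
        durations[match["status"]].append(float(match["secs"]))
        if "timeout" in match["msg"].lower():
            timeouts += 1
    return {
        "mean_pass_s": mean(durations["PASSED"]) if durations["PASSED"] else None,
        "mean_fail_s": mean(durations["FAILED"]) if durations["FAILED"] else None,
        "timeouts": timeouts,
    }


# Illustrative lines, not real CI output.
sample_log = [
    "test_add_to_cart PASSED in 1.8s",
    "test_add_to_cart PASSED in 2.1s",
    "test_add_to_cart FAILED in 10.0s TimeoutException: cart counter not updated",
]
print(summarize(sample_log))
```

If failing runs are consistently much slower than passing ones, or failures cluster around timeouts, timing is a prime suspect.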

4. Keep an Eye on External Factors

Sometimes it's not the suspect but an accomplice. Is your test dependent on an external API or database? Network latency or an unreliable third-party service could be the real villain here. Double-check these dependencies to rule them out.
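One way to rule out an accomplice is to swap the external call for a deterministic stub and see whether the flakiness disappears. In this Python sketch, `fetch_rate_from_api` is a hypothetical stand-in for a real network call (it simulates occasional timeouts), and `shipping_cost` is a hypothetical function that accepts its dependency as a parameter so a test can inject a stub.

```python
import random
from typing import Callable


def fetch_rate_from_api() -> float:
    """Hypothetical external call: usually returns a rate, sometimes times out."""
    if random.random() < 0.2:  # simulate an unreliable third-party service
        raise TimeoutError("rate service did not respond")
    return 2.5


def shipping_cost(
    weight_kg: float, fetch_rate: Callable[[], float] = fetch_rate_from_api
) -> float:
    """Cost = weight x rate; the rate provider is injectable for testing."""
    return round(weight_kg * fetch_rate(), 2)


def test_shipping_cost_with_stub() -> None:
    # A fixed stub takes the network out of the equation.
    assert shipping_cost(2.0, fetch_rate=lambda: 2.5) == 5.0


test_shipping_cost_with_stub()
```

If the stubbed test passes every time while the version that hits the real service keeps flaking, you've found your villain. Python's `unittest.mock.patch` achieves the same effect without changing the function's signature.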

5. Repeat Offenders

Flaky tests often share common characteristics, such as complicated setup procedures or reliance on timeouts. Keep a "rogues' gallery" of past flaky tests and compare their traits to recognize patterns.
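A rogues' gallery can be as simple as a list of records. The Python sketch below uses a hypothetical `FlakyRecord` schema and illustrative entries (not real incidents) to count which traits recur most often across past flaky tests.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class FlakyRecord:
    """One entry in the rogues' gallery of past flaky tests (hypothetical schema)."""
    test_name: str
    root_cause: str
    traits: set[str] = field(default_factory=set)


def common_traits(gallery: list[FlakyRecord]) -> list[tuple[str, int]]:
    """Count how often each trait appears across past flaky tests, most common first."""
    counts: Counter = Counter()
    for record in gallery:
        counts.update(record.traits)
    return counts.most_common()


# Illustrative entries only.
gallery = [
    FlakyRecord("test_add_to_cart", "fixed sleep too short", {"fixed sleep", "UI timing"}),
    FlakyRecord("test_checkout", "payment sandbox latency", {"external API", "fixed sleep"}),
    FlakyRecord("test_search", "shared index between tests", {"shared state", "complex setup"}),
]
print(common_traits(gallery))
```

When a new suspect shows up, checking it against the most frequent traits tells you where to look first.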

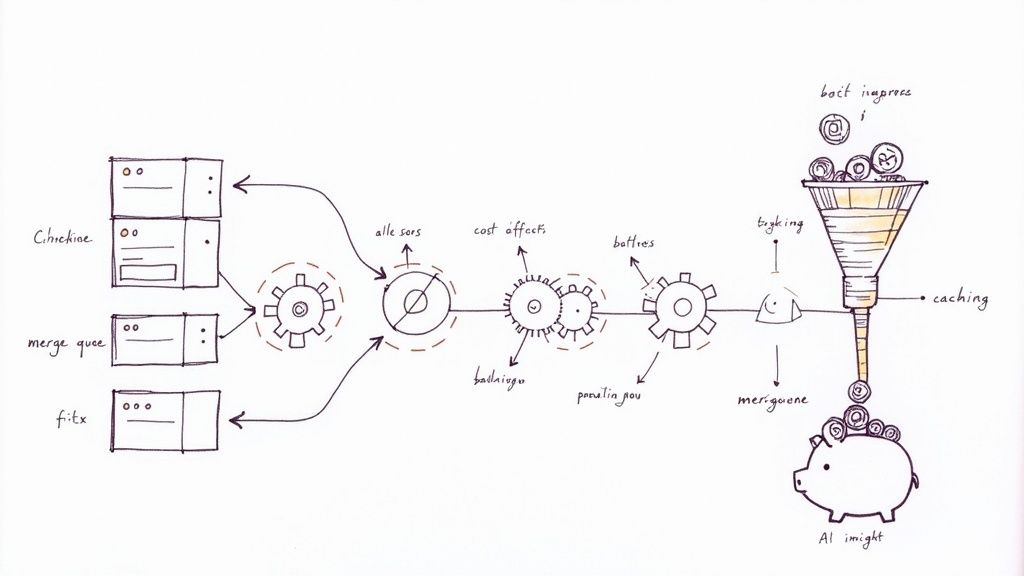

Flaky Test: a Concrete Example

Let's say you're working on an e-commerce website, and you have a Selenium test to check whether the "Add to Cart" button works. Your test mimics the steps a user takes: it navigates to a product page, clicks the "Add to Cart" button, and then checks if the cart counter at the top of the page has incremented.

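Here's what this might look like in Python with Selenium (the URL and the element locators are placeholders for your own site):

```python
import time

from selenium import webdriver
from selenium.webdriver.common.by import By


def test_add_to_cart():
    driver = webdriver.Chrome()
    try:
        driver.get("http://myecommerce.com")
        driver.find_element(By.LINK_TEXT, "Product Page").click()

        # The counter text is a string, so convert it before doing arithmetic.
        initial_count = int(driver.find_element(By.ID, "cart-counter").text)

        driver.find_element(By.ID, "add-to-cart").click()

        time.sleep(2)  # wait a fixed 2 seconds and hope the counter has updated

        final_count = int(driver.find_element(By.ID, "cart-counter").text)
        assert final_count == initial_count + 1
    finally:
        driver.quit()  # always close the browser, even if the assertion fails
```

Now, most of the time this test runs just fine, but every once in a while it fails. You scratch your head and think, "Well, that's odd. The code for the 'Add to Cart' feature hasn't changed. Why is it failing now?"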

Signs That It's a Flaky Test:

- Inconsistent Results: When you run it multiple times without any code changes, sometimes it passes and sometimes it fails.

- Time-dependent: You notice the test often fails when the system is slow or under heavy load.

- External Dependencies: It relies on the full website, which might be affected by other elements like ads or third-party services that load unpredictably.

- Manual Verification: When you manually check the "Add to Cart" feature, it works fine.

Debugging The Flaky Test

Upon close inspection (cue the detective music), you notice the time.sleep(2) line. Aha! The test waits a fixed 2 seconds after clicking the "Add to Cart" button and then reads the cart counter exactly once. But what if the system takes more than 2 seconds to update the counter, say because the server is under heavy load? That's like checking a kettle exactly two minutes after switching it on: sometimes it has boiled, sometimes it hasn't.
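A toy model makes this race visible without a browser. The Python sketch below assumes, purely for illustration, that the time the cart takes to update follows an exponential distribution with a 1-second mean; the fixed-sleep test passes only when the update lands before its single read.

```python
import random


def fixed_sleep_passes(update_latency_s: float, sleep_s: float = 2.0) -> bool:
    """The counter is read once after sleep_s; it has updated only if latency <= sleep_s."""
    return update_latency_s <= sleep_s


def failure_rate(latencies: list[float], sleep_s: float = 2.0) -> float:
    """Fraction of simulated runs in which the single read happens too early."""
    failures = sum(not fixed_sleep_passes(lat, sleep_s) for lat in latencies)
    return failures / len(latencies)


rng = random.Random(42)
# Assumed latency model: 1 s on average, with a long tail of slow updates under load.
latencies = [rng.expovariate(1.0) for _ in range(10_000)]

for sleep_s in (1.0, 2.0, 5.0):
    print(f"sleep {sleep_s:.0f} s -> {failure_rate(latencies, sleep_s):.1%} of runs fail")
```

Under this model, a longer sleep shrinks the failure rate but never eliminates it, and it slows down every passing run too, which is why waiting on the condition itself is the better fix.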

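To remedy this, you could use Selenium's WebDriverWait to wait explicitly for the cart counter to update. WebDriverWait repeatedly checks a condition until it is met or a timeout expires, so the test waits only as long as it needs to, up to the limit, making it far more stable.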

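```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

CART_COUNTER = (By.ID, "cart-counter")  # placeholder locator for your own site


def test_add_to_cart():
    driver = webdriver.Chrome()
    try:
        driver.get("http://myecommerce.com")
        driver.find_element(By.LINK_TEXT, "Product Page").click()

        initial_count = int(driver.find_element(*CART_COUNTER).text)

        driver.find_element(By.ID, "add-to-cart").click()

        # Poll for up to 10 seconds until the counter shows the expected value,
        # instead of sleeping for a fixed time. This condition is a substring
        # match, so the assertion below still checks the exact number.
        WebDriverWait(driver, 10).until(
            EC.text_to_be_present_in_element(CART_COUNTER, str(initial_count + 1))
        )

        final_count = int(driver.find_element(*CART_COUNTER).text)
        assert final_count == initial_count + 1
    finally:
        driver.quit()
```

Congratulations, Detective! You've just caught a flaky test and transformed it into a reliable, upstanding citizen of your test suite! 🕵️‍♂️🔍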
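Under the hood, an explicit wait is essentially a polling loop with a deadline. Here is a framework-free Python sketch of that idea, not Selenium's actual implementation: `wait_until` is a hypothetical helper, and its 0.5-second default poll interval is an assumption chosen for illustration.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0, poll: float = 0.5) -> T:
    """Call condition() until it returns a truthy value, or raise after timeout seconds."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # return as soon as the condition holds; no wasted waiting
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll)


# Usage: a fake cart counter that only "updates" on the third check.
checks = {"count": 0}


def counter_updated() -> bool:
    checks["count"] += 1
    return checks["count"] >= 3


print(wait_until(counter_updated, timeout=5.0, poll=0.01))  # → True
```

Unlike a fixed sleep, the loop adapts to how slow the system actually is, and it still fails loudly with a timeout if the counter never updates.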

Key Takeaways

- Run Multiple Times: Trust but verify. Run the test multiple times to confirm its flakiness.

- Isolate and Inspect: Run the suspect test alone to analyze its behavior without interference from other tests.

- Study the Logs: Log files often contain clues to why a test is flaky. They are the DNA evidence in your detective hunt.

- Check External Factors: Make sure no external dependencies are influencing the test's behavior.

- Learn from History: Keep records of past flaky tests to recognize patterns and traits.

So, next time you encounter an unpredictable test result in your CI/CD pipeline, channel your inner Sherlock Holmes and follow these clues to catch that pesky flaky test. With careful observation, keen analysis, and a sprinkle of detective work, you'll get your pipeline running like a well-oiled machine!