Flaky Test Detection: How to Spot Them Before They Scramble Your CI/CD Eggs 🍳

Crack the code on "Flaky Test Detection" in your CI/CD pipeline. Learn to spot the burnt toast among your tests with our easy-to-follow guide. We dive into historical data, context, and reproducibility to help you identify flaky tests. Say goodbye to unreliable tests and keep your pipeline cooking!

When you're cooking up a delicious meal in the kitchen, you don't want to keep making the same mistake, like overcooking the eggs or burning the toast. Likewise, in the kitchen of software development, the CI/CD pipeline is the chef that needs to get everything just right. But beware: there's a wild card lurking in your dish, the flaky test!

Flaky tests are the burnt toast of your CI/CD pipeline. Sometimes they pass, sometimes they fail, and sometimes they make you question your existence. But worry not, we're going to whisk you through the nitty-gritty of flaky test detection, helping you identify these unruly tests and differentiate them from other CI failures.

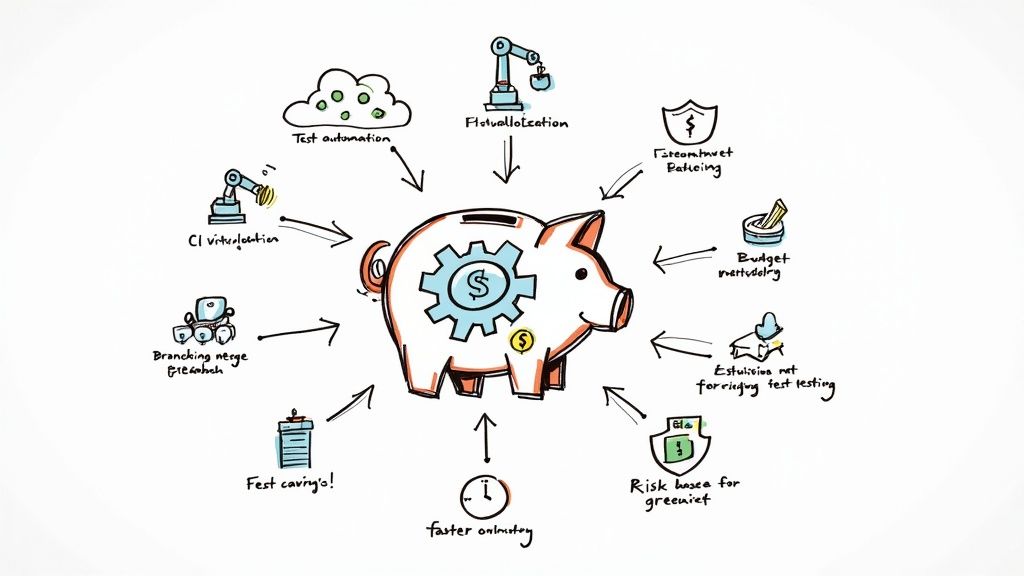

Key Ingredients to Spot a Flaky Test

1. Historical Data: The Recipe Book 📖

Before crying wolf and blaming a test for being flaky, take a look at its historical data. If a test passes and fails sporadically without code changes, you might be onto a flaky one.
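One way to put a number on "sporadically" is a flip rate: the fraction of consecutive runs whose outcome differs from the previous one. The sketch below assumes you have a chronological list of pass/fail results for one test with no relevant code changes in between; `FlipRate` and `flipRate` are hypothetical names, not part of any CI tool. A genuinely broken test flips once and stays red, while a flaky one keeps flipping.

```java
import java.util.List;

class FlipRate {

    /**
     * Fraction of consecutive runs whose outcome differs from the previous run.
     * results: chronological outcomes, true = pass, false = fail (assumed to be
     * collected without relevant code changes between runs).
     */
    static double flipRate(List<Boolean> results) {
        if (results.size() < 2) {
            return 0.0; // not enough history to judge
        }
        int flips = 0;
        for (int i = 1; i < results.size(); i++) {
            if (!results.get(i).equals(results.get(i - 1))) {
                flips++;
            }
        }
        return (double) flips / (results.size() - 1);
    }

    public static void main(String[] args) {
        List<Boolean> stable = List.of(true, true, true, true, true);
        List<Boolean> broken = List.of(true, true, false, false, false); // real regression: red once, stays red
        List<Boolean> flaky = List.of(true, false, true, true, false);

        System.out.println(flipRate(stable)); // 0.0
        System.out.println(flipRate(broken)); // 0.25
        System.out.println(flipRate(flaky));  // 0.75
    }
}
```

What counts as "too high" is a judgment call for your team; there's no universal threshold.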

2. Context: The Kitchen Layout 🍴

Flaky tests often emerge only in specific environments or conditions. Sometimes they act up when the server is under heavy load, or when they run in parallel with other tests.
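To see why parallelism breeds flakiness, here's a framework-free sketch (class and method names are ours): several threads bump a plain shared `int` and an `AtomicInteger`. The atomic counter always ends at the expected total, while the plain one can lose updates, so a "test" asserting on it may pass on one run and fail on the next depending on scheduling and machine load.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class ParallelRaceDemo {

    static int unsafeCounter = 0;                                 // shared, unsynchronized
    static final AtomicInteger safeCounter = new AtomicInteger(); // shared, atomic

    /** Increments both counters from several threads; returns {unsafe, safe}. */
    static int[] run(int threads, int incrementsPerThread) {
        unsafeCounter = 0;
        safeCounter.set(0);
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.submit(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    unsafeCounter++;               // read-modify-write race: updates can be lost
                    safeCounter.incrementAndGet(); // atomic: no lost updates
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new int[] {unsafeCounter, safeCounter.get()};
    }

    public static void main(String[] args) {
        // The safe total is always 400000; the unsafe total may vary from attempt to attempt.
        for (int attempt = 1; attempt <= 3; attempt++) {
            int[] result = run(4, 100_000);
            System.out.println("attempt " + attempt + ": unsafe=" + result[0] + " safe=" + result[1]);
        }
    }
}
```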

3. Reproducibility: The Taste Test 🍲

A reliable test will act the same way every time you run it, like your grandma's secret recipe. If you rerun it under the same conditions and it sometimes passes and sometimes fails, so that you can't reproduce the failure on demand, it's probably flaky.
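The taste test boils down to: rerun the same thing, change nothing, and look at the mix of outcomes. A minimal sketch, assuming the test can be wrapped as a `BooleanSupplier` that returns true on pass; `RerunClassifier` and its verdicts are hypothetical names. Note the asymmetry: mixed results prove flakiness, but an all-pass streak only means the flake didn't show up this time.

```java
import java.util.function.BooleanSupplier;

class RerunClassifier {

    enum Verdict { STABLE_PASS, STABLE_FAIL, FLAKY }

    /**
     * Runs the same check `attempts` times without changing anything in between
     * and classifies the outcome. The check returns true when the test passes.
     */
    static Verdict classify(BooleanSupplier check, int attempts) {
        int passes = 0;
        for (int i = 0; i < attempts; i++) {
            if (check.getAsBoolean()) {
                passes++;
            }
        }
        if (passes == attempts) {
            return Verdict.STABLE_PASS; // caveat: a rare flake may simply not have appeared yet
        }
        if (passes == 0) {
            return Verdict.STABLE_FAIL; // reproducible failure: likely a genuine bug or broken setup
        }
        return Verdict.FLAKY;           // mixed results under identical conditions
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulated flaky check: fails on every 10th call (the 0th, 10th, ...).
        BooleanSupplier flakyCheck = () -> calls[0]++ % 10 != 0;

        System.out.println(classify(() -> true, 20));  // STABLE_PASS
        System.out.println(classify(() -> false, 20)); // STABLE_FAIL
        System.out.println(classify(flakyCheck, 20));  // FLAKY
    }
}
```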

4. Isolation: The Ingredients' Quarantine 🍎

Flaky tests often depend on shared state or on side effects left behind by other tests. Try running the suspicious test in isolation. If it behaves well alone but acts up as part of the full suite, it's got some ‘splainin to do!
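Here's a framework-free sketch of the classic culprit, with names of our own choosing: two "tests" (plain methods returning pass/fail, standing in for real JUnit tests) share a static list. The victim passes when it runs alone on fresh state and fails when the polluter happens to run first, which is exactly the alone-vs-suite difference described above.

```java
import java.util.ArrayList;
import java.util.List;

class OrderDependenceDemo {

    // Shared mutable state that survives between "tests": the classic polluter target.
    static final List<String> sharedCache = new ArrayList<>();

    /** Polluter: adds an entry and never cleans up. */
    static boolean testAddsToCache() {
        sharedCache.add("user-1");
        return sharedCache.contains("user-1");
    }

    /** Victim: silently assumes the cache starts empty. */
    static boolean testCacheStartsEmpty() {
        return sharedCache.isEmpty();
    }

    public static void main(String[] args) {
        // Victim alone, on fresh state: passes.
        sharedCache.clear();
        System.out.println("alone: " + testCacheStartsEmpty());    // alone: true

        // Victim after the polluter, as a full suite might order them: fails.
        sharedCache.clear();
        testAddsToCache();
        System.out.println("in suite: " + testCacheStartsEmpty()); // in suite: false
    }
}
```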

How to Catch a Flaky Test: A Concrete Example 🎣

Let's say we're using JUnit 5 for Java-based tests. We have a test that fails about 1 out of 10 times. Our mission is to figure out whether it's a flaky test or just an unlucky streak.
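How many repetitions are enough? Assuming each run fails independently with probability p, the chance that n runs all pass is (1 - p)^n, so to see at least one failure with confidence c you need n ≥ ln(1 - c) / ln(1 - p). A quick sketch of that arithmetic; `RepetitionPlanner` and its methods are our own illustrative names.

```java
import java.util.Locale;

class RepetitionPlanner {

    /**
     * Smallest n such that a test failing independently with probability
     * failureRate shows at least one failure with the given confidence:
     * 1 - (1 - failureRate)^n >= confidence.
     */
    static int runsNeeded(double failureRate, double confidence) {
        return (int) Math.ceil(Math.log(1 - confidence) / Math.log(1 - failureRate));
    }

    /** Probability that all n runs pass despite the given per-run failure rate. */
    static double allPassProbability(double failureRate, int n) {
        return Math.pow(1 - failureRate, n);
    }

    public static void main(String[] args) {
        System.out.println(runsNeeded(0.1, 0.95)); // 29
        System.out.println(runsNeeded(0.1, 0.99)); // 44
        System.out.printf(Locale.ROOT, "%.4f%n", allPassProbability(0.1, 50)); // 0.0052
    }
}
```

With p = 0.1, all 50 repetitions pass only about 0.5% of the time, so 50 repetitions should almost certainly surface the failure if the 1-in-10 estimate is right. Real flaky failures often cluster (under load, at certain hours), so treat the independence assumption as optimistic.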

1. Identify Patterns

Run the test multiple times and note its behavior. Use JUnit 5's @RepeatedTest annotation to run it 50 times; each repetition is reported as its own test, so you can count passes and failures.

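```java
import org.junit.jupiter.api.RepeatedTest;

class YourTestClass {

    // Runs this test 50 times; JUnit reports each repetition separately
    @RepeatedTest(50)
    public void suspiciousTest() {
        // Your test code here
    }
}
```

2. Check Historical Data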

Use tools like Jenkins or GitLab CI to examine past test results. If the test is failing sporadically and no code changes seem to correlate, it's a candidate for flakiness.
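If your CI can export results as (test, commit, outcome) rows, one strong signal is a test that both passed and failed on the same commit, for example after a retry. The sketch below assumes that hypothetical export format; `SameCommitFlakes`, `Result`, and the commit hashes are illustrative, not a Jenkins or GitLab API.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

class SameCommitFlakes {

    /** One historical result exported from CI (hypothetical schema). */
    record Result(String testName, String commitSha, boolean passed) {}

    /** Tests that both passed and failed on at least one identical commit. */
    static Set<String> flakyCandidates(List<Result> history) {
        // testName -> commitSha -> {sawPass, sawFail}
        Map<String, Map<String, boolean[]>> seen = new TreeMap<>();
        for (Result r : history) {
            boolean[] flags = seen
                    .computeIfAbsent(r.testName(), k -> new TreeMap<>())
                    .computeIfAbsent(r.commitSha(), k -> new boolean[2]);
            flags[r.passed() ? 0 : 1] = true;
        }
        Set<String> candidates = new TreeSet<>();
        seen.forEach((test, byCommit) -> {
            for (boolean[] flags : byCommit.values()) {
                if (flags[0] && flags[1]) { // same code, different outcome
                    candidates.add(test);
                    break;
                }
            }
        });
        return candidates;
    }

    public static void main(String[] args) {
        List<Result> history = List.of(
                new Result("suspiciousTest", "a1b2c3", true),
                new Result("suspiciousTest", "a1b2c3", false),  // retried on the same commit
                new Result("regressionTest", "a1b2c3", true),
                new Result("regressionTest", "d4e5f6", false)); // failed after a new commit
        System.out.println(flakyCandidates(history)); // [suspiciousTest]
    }
}
```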

3. Run in Isolation

Execute only this test without the baggage of other tests.

```shell
mvn -Dtest=YourTestClass#suspiciousTest test
```

4. Reproduce Locally

Try running the test on your local machine. Sometimes CI environments have unique quirks that might contribute to flakiness.
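To pin down those quirks, it helps to print the same environment fingerprint on both machines and diff the output. A minimal sketch using only standard JDK calls; `EnvironmentFingerprint` is a hypothetical helper, and the chosen properties are our guess at common culprits (time zone, locale, charset, and CPU count often differ between CI agents and laptops).

```java
import java.nio.charset.Charset;
import java.util.Locale;
import java.util.Map;
import java.util.TimeZone;
import java.util.TreeMap;

class EnvironmentFingerprint {

    /** Collects settings that commonly differ between CI agents and developer machines. */
    static Map<String, String> capture() {
        Map<String, String> env = new TreeMap<>(); // sorted keys make the output diff-friendly
        env.put("java.version", System.getProperty("java.version"));
        env.put("os.name", System.getProperty("os.name"));
        env.put("availableProcessors", String.valueOf(Runtime.getRuntime().availableProcessors()));
        env.put("maxHeapBytes", String.valueOf(Runtime.getRuntime().maxMemory()));
        env.put("timeZone", TimeZone.getDefault().getID());
        env.put("locale", Locale.getDefault().toString());
        env.put("defaultCharset", Charset.defaultCharset().name());
        return env;
    }

    public static void main(String[] args) {
        // One key=value per line, so CI output and local output can be diffed directly.
        capture().forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```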

5. Check for Context

Does the test fail more at certain times of day, or under specific system loads? Use monitoring tools to understand the test environment when it fails.
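For the time-of-day question, here's a small sketch that buckets historical runs by UTC hour and computes a failure rate per bucket. It uses rates rather than raw counts, because a busy hour can rack up more failures simply by running more builds. The `Run` record and the timestamps below are made up for illustration; the same grouping works for any other context you record, such as a load level.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class FailureRateByHour {

    /** One CI run of the suspicious test (hypothetical schema). */
    record Run(Instant finishedAt, boolean passed) {}

    /** Failure rate per UTC hour of day (0-23). */
    static Map<Integer, Double> failureRateByHour(List<Run> runs) {
        Map<Integer, int[]> counts = new TreeMap<>(); // hour -> {failures, total}
        for (Run run : runs) {
            int hour = run.finishedAt().atZone(ZoneOffset.UTC).getHour();
            int[] c = counts.computeIfAbsent(hour, h -> new int[2]);
            if (!run.passed()) {
                c[0]++;
            }
            c[1]++;
        }
        Map<Integer, Double> rates = new TreeMap<>();
        counts.forEach((hour, c) -> rates.put(hour, (double) c[0] / c[1]));
        return rates;
    }

    public static void main(String[] args) {
        // Made-up timestamps, for illustration only.
        List<Run> runs = List.of(
                new Run(Instant.parse("2024-03-04T02:05:00Z"), false),
                new Run(Instant.parse("2024-03-05T02:40:00Z"), true),
                new Run(Instant.parse("2024-03-04T14:10:00Z"), true),
                new Run(Instant.parse("2024-03-05T14:30:00Z"), true));
        System.out.println(failureRateByHour(runs)); // {2=0.5, 14=0.0}
    }
}
```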

6. Diagnostics

Add extra logging so that when the test fails in CI you have evidence to work with: timestamps, thread names, system load, and the values the test was checking.
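A minimal sketch of such logging, assuming plain java.util.logging and a hypothetical `runWithDiagnostics` wrapper (not a JUnit feature): when the test body throws, it logs a one-line snapshot of the time, thread, system load average, and free memory, then rethrows so the test still fails.

```java
import java.lang.management.ManagementFactory;
import java.time.Instant;
import java.util.logging.Logger;

class FailureDiagnostics {

    private static final Logger LOG = Logger.getLogger(FailureDiagnostics.class.getName());

    /** Builds a one-line snapshot of the conditions at the moment of failure. */
    static String diagnosticLine(String testName) {
        Runtime rt = Runtime.getRuntime();
        // Returns a negative value on platforms where the load average is unavailable.
        double load = ManagementFactory.getOperatingSystemMXBean().getSystemLoadAverage();
        return String.format("test=%s time=%s thread=%s loadAvg=%.2f freeMemMB=%d",
                testName, Instant.now(), Thread.currentThread().getName(),
                load, rt.freeMemory() / (1024 * 1024));
    }

    /** Runs a test body; on failure, logs the snapshot and rethrows so the test still fails. */
    static void runWithDiagnostics(String testName, Runnable body) {
        try {
            body.run();
        } catch (AssertionError | RuntimeException e) {
            LOG.severe(diagnosticLine(testName) + " cause=" + e);
            throw e;
        }
    }

    public static void main(String[] args) {
        runWithDiagnostics("passingTest", () -> { /* passes: nothing is logged */ });
        try {
            runWithDiagnostics("suspiciousTest", () -> { throw new AssertionError("expected 42"); });
        } catch (AssertionError expected) {
            System.out.println("failure was logged and rethrown");
        }
    }
}
```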

If, after all these steps, the test still passes and fails intermittently with no consistent cause, congratulations! You've caught yourself a flaky test!

Key Takeaways

✅ Flaky Test Detection is Critical: Flaky tests can cripple the reliability of your CI/CD pipeline.

✅ Look for Historical Inconsistency: If a test is passing and failing randomly over time, it might be flaky.

✅ Context Matters: Flaky tests often misbehave under specific conditions or environments.

✅ Isolate and Reproduce: Run the test individually and try reproducing the issue in a local environment.

So, the next time your CI/CD pipeline serves up something strange, remember to ask, "Is it flaky or just misunderstood?" Good luck, and may your code be ever in the ‘green’. 🟢

Happy Cooking... I mean, Coding! 🍳👨‍💻