10 Essential Best Practices for Code Review: A Complete Team Guide

Code reviews play a vital role in creating high-quality software and building strong engineering teams. Research shows they can reduce bugs by up to 36% when done well, as demonstrated by studies at companies like Cisco Systems. Beyond just finding issues, good code reviews help teams learn from each other and establish consistent coding standards. The key is approaching reviews thoughtfully and systematically rather than treating them as a simple checkbox exercise.

Why Traditional Reviews Fall Short

Many teams struggle with code reviews because they try to tackle too much at once. Looking at thousands of lines of code in a single review overwhelms developers and makes it easy to miss important problems. Studies indicate reviews work best when limited to 200-400 lines at a time - this helps reviewers stay focused and catch issues more effectively. Without clear goals and guidelines, reviews can also become inconsistent and subjective. Teams need a structured approach to make reviews truly valuable.

Modern Tools and Human Expertise: A Winning Combination

The best code review processes combine skilled developers with helpful tools that make collaboration easier. Platforms like GitHub and GitLab provide excellent features for sharing code, discussing changes, and tracking review history. Adding automation tools like Mergify helps enforce best practices and streamline merging through features like merge queues and branch protections. This combination of human expertise and good tooling helps teams catch problems early before they become major issues.

Measuring the Impact of Your Reviews

Teams should track specific metrics to understand whether their code reviews are working. Key measurements include review cycle time (how long reviews take to complete) and defect density (number of bugs per 1,000 lines of code). Tracking these metrics helps identify process bottlenecks and areas for improvement, so teams can adjust their approach based on real data rather than assumptions. Analyzing them regularly keeps the review process improving and aligned with the team's quality and productivity goals.
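As a minimal sketch, both metrics are easy to compute once the raw numbers have been exported from your tooling. The Python below assumes you already have bug and line counts plus (submitted, merged) timestamp pairs; the function names are illustrative, not part of any tool's API.

```python
from datetime import datetime, timedelta
from statistics import median


def defect_density(defects: int, lines_of_code: int) -> float:
    """Return defects per 1,000 lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects * 1000 / lines_of_code


def median_cycle_time(reviews: list[tuple[datetime, datetime]]) -> timedelta:
    """Return the median time from submission to merge.

    Each entry is a (submitted_at, merged_at) pair. The median is used
    instead of the mean so a few stalled reviews don't dominate the result.
    """
    if not reviews:
        raise ValueError("need at least one review")
    return median(merged - submitted for submitted, merged in reviews)
```

For example, 12 bugs found in 8,000 lines gives `defect_density(12, 8000) == 1.5` defects per KLOC.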

Making Reviews Manageable and Meaningful

Code reviews boost software quality, but they can become overwhelming when not properly scoped. Let's explore practical ways to keep reviews both manageable and valuable for your team.

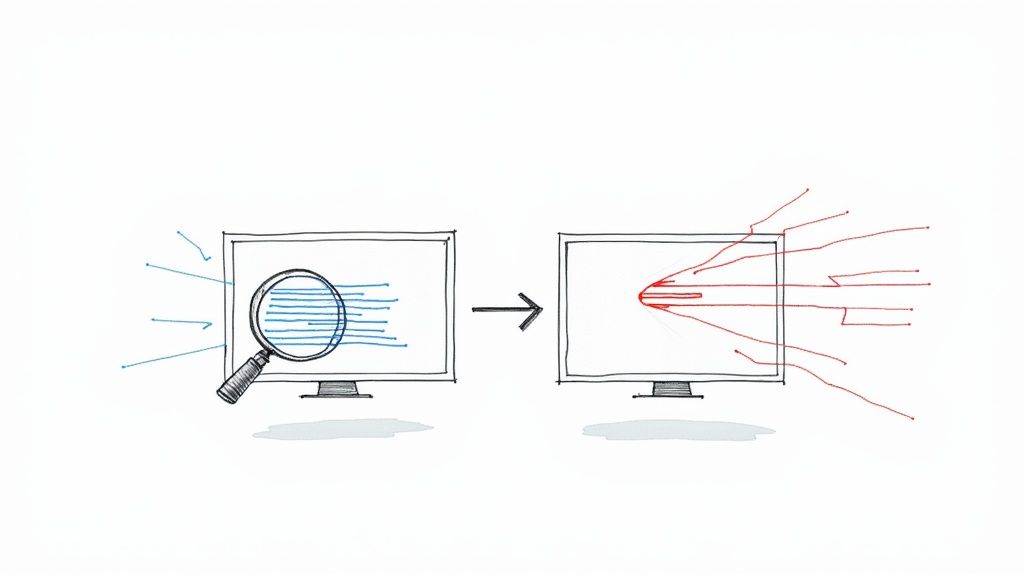

The 200-400 Line Sweet Spot

Think of reading code like proofreading - your attention drops when faced with too much content at once. Research shows that reviewers spot most bugs within the first 200 lines of code, and once a review grows past 200-400 lines, their ability to catch issues drops significantly. That's why keeping reviews focused on smaller chunks of code leads to better results. When teams try to review thousands of lines at once, reviewer fatigue sets in and the review loses its purpose.
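A quick way to keep reviews in this range is to measure the diff before requesting review. The sketch below sums added and deleted lines from `git diff --numstat`, which prints one tab-separated `added deleted path` line per file (binary files show `-` for both counts). The `origin/main` base branch and the 400-line limit are assumptions.

```python
import subprocess

MAX_REVIEW_LINES = 400  # assumed limit: the upper end of the 200-400 line range


def changed_lines(numstat: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat.splitlines():
        if not line.strip():
            continue
        added, deleted, _path = line.split("\t", 2)
        if added == "-":  # binary file: git prints "-" instead of line counts
            continue
        total += int(added) + int(deleted)
    return total


def review_size_ok(base: str = "origin/main") -> bool:
    """Check the current branch's diff against `base` (assumed default branch)."""
    result = subprocess.run(
        ["git", "diff", "--numstat", f"{base}...HEAD"],
        capture_output=True, text=True, check=True,
    )
    return changed_lines(result.stdout) <= MAX_REVIEW_LINES
```

Counting deletions as well as additions is a deliberate, debatable choice: removed code still has to be read and understood.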

Breaking Down Large Changes

When dealing with big code changes, strategic breakdown is key. Split extensive changes into smaller, logical pieces that each focus on a specific feature or fix. This helps reviewers understand the purpose and scope of each change. For example, when building a new user authentication system, you might create separate pull requests for database changes, API endpoints, and frontend work. This focused approach makes it easier to track changes and spot potential conflicts.
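One rough way to start such a split is to group the changed files by top-level directory, since each group often maps to one reviewable concern. The sketch below assumes a hypothetical layout with `db/`, `api/`, and `frontend/` directories; real boundaries usually need human judgment, so treat the output as a first draft of the split.

```python
from collections import defaultdict
from pathlib import PurePosixPath


def split_by_component(paths: list[str]) -> dict[str, list[str]]:
    """Group changed file paths by top-level directory (a candidate PR each)."""
    groups: dict[str, list[str]] = defaultdict(list)
    for path in paths:
        parts = PurePosixPath(path).parts
        # Files at the repository root have no directory component
        component = parts[0] if len(parts) > 1 else "(root)"
        groups[component].append(path)
    return dict(groups)
```

For the authentication example, database migrations, API handlers, and frontend components would land in separate groups, matching the three pull requests described above.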

Timeboxing for Focus and Engagement

Just as developers use techniques like Pomodoro to stay productive, reviewers need structured time blocks. Keep review sessions to 60-90 minutes to match natural attention spans and prevent mental exhaustion. Short, focused sessions let reviewers dive deep into the code without burning out. Tools like Mergify can help by handling routine tasks like merge queues and branch protection, letting reviewers focus on code quality.
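If you know roughly how fast your team reviews, you can plan sessions that fit the timebox. This sketch greedily packs pull requests, in queue order, into sessions; the default rate of 400 lines per hour and the 60-minute session length are assumptions to replace with your own measurements.

```python
def plan_sessions(
    pr_sizes: list[int], lines_per_hour: int = 400, session_minutes: int = 60
) -> list[list[int]]:
    """Pack PR sizes (in lines, queue order) into time-boxed review sessions."""
    budget = lines_per_hour * session_minutes / 60  # lines reviewable per session
    sessions: list[list[int]] = []
    current: list[int] = []
    used = 0
    for size in pr_sizes:
        # Start a new session when this PR would overflow the current one
        if current and used + size > budget:
            sessions.append(current)
            current, used = [], 0
        current.append(size)  # an oversized PR still gets a session of its own
        used += size
    if current:
        sessions.append(current)
    return sessions
```

For example, `plan_sessions([120, 250, 90, 300, 500])` yields `[[120, 250], [90, 300], [500]]`; a PR that overflows a session on its own, like the 500-line one, is a hint that it should have been split.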

Structuring a Successful Review Workflow

Effective teams build their review process around these key elements:

- Clearly defined scope: Each pull request should tackle one specific feature or fix

- Automated checks: Use linters and analysis tools to catch basic issues before human review

- Asynchronous reviews: Let reviewers work at their own pace to minimize disruptions

- Constructive feedback: Build a culture that emphasizes improvement over criticism

- Actionable comments: Give specific guidance that helps developers make needed changes
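Several of these elements can be checked mechanically before a human looks at the code. The sketch below models a pull request with a hypothetical `PullRequest` dataclass and returns the reasons it is not yet ready for review; in practice the fields would come from your hosting platform and CI results.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    """Hypothetical PR summary; real data would come from your platform's API."""
    title: str
    description: str
    changed_lines: int
    checks_passed: bool  # linters, tests, and static analysis all green


def pre_review_problems(pr: PullRequest, max_lines: int = 400) -> list[str]:
    """Return reasons the PR isn't ready for human review (empty list = ready)."""
    problems = []
    if not pr.checks_passed:
        problems.append("automated checks have not passed")
    if pr.changed_lines > max_lines:
        problems.append(f"{pr.changed_lines} changed lines exceeds {max_lines}; consider splitting")
    if not pr.description.strip():
        problems.append("description should state the single feature or fix this PR addresses")
    return problems
```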

Following these practices helps teams turn code reviews from a tedious task into a valuable chance to learn and improve together. The result? Better code quality, fewer bugs, and more engaged developers who enjoy collaborating.

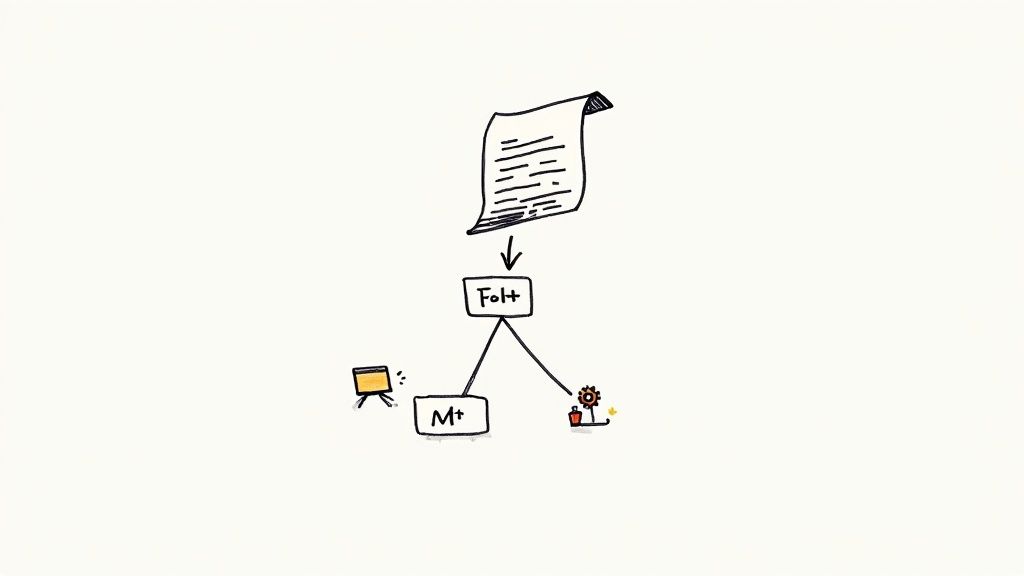

Building Review Checklists That Actually Work

Getting code reviews right takes more than just scanning through code - it needs a clear system. Good checklists provide one by giving teams a reliable way to check everything that matters. Research shows that reviews guided by checklists find over 66% more defects than unstructured reviews. While having a checklist is a great start, it needs to match your team's specific needs and grow with you over time.

Making Checklists Work for Your Team

Basic checklists cover the essentials, but the best ones reflect how your team actually works. Think about the programming languages you use - Python, Java, or others each have their own common mistakes to watch for. A Python checklist might focus on properly using list comprehensions and decorators, while Java reviews need extra attention to interfaces and exception handling.

Your project's security needs also shape what goes in the checklist. For apps handling sensitive user data, you'll want specific items about validating inputs and preventing issues like cross-site scripting (XSS). Good checklists also help keep code maintainable by checking that code follows team standards, uses clear designs, and includes proper documentation.
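To make the XSS item concrete, here is the kind of code a reviewer might expect to see when user text ends up in HTML: input validated against an allowlist, then escaped with the standard library's `html.escape` before rendering. The username rule and function names are hypothetical, and in a real web app an auto-escaping template engine is usually the better first line of defense.

```python
import html
import re

USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,32}")  # hypothetical allowlist rule


def validate_username(username: str) -> str:
    """Reject usernames that don't match the allowlist (input validation)."""
    if not USERNAME_PATTERN.fullmatch(username):
        raise ValueError(f"invalid username: {username!r}")
    return username


def render_comment(username: str, comment: str) -> str:
    """Build an HTML fragment, escaping user text so it can't inject markup (XSS)."""
    safe_user = html.escape(validate_username(username))
    safe_comment = html.escape(comment)
    return f"<p><strong>{safe_user}</strong>: {safe_comment}</p>"
```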

Example Checklist Categories and Items

Here's a practical starting point with key categories and items to consider:

- Functionality:
  - Does the code perform the intended function correctly?
  - Are boundary conditions and edge cases tested?
  - Is the error handling robust and comprehensive?

- Security:
  - Are all inputs validated to prevent injection attacks?
  - Is sensitive data handled securely (encryption, access control)?
  - Do the libraries or frameworks the code depends on have any known vulnerabilities?

- Maintainability:
  - Is the code well-documented and easy to understand?
  - Does the code adhere to coding style guidelines?
  - Is the code modular and easy to modify or extend?

- Performance:
  - Are there any obvious performance bottlenecks?
  - Does the code use resources (memory, CPU) efficiently?
  - Could any algorithms be optimized for better performance?
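Keeping the checklist as data makes it easy to combine a shared base with language-specific additions and to see what a review has not yet covered. In this sketch the base list is abridged from the categories above, and the language extras, names, and structure are illustrative assumptions.

```python
# Abridged base checklist, one or two items per category from the list above
BASE_CHECKLIST: dict[str, list[str]] = {
    "Functionality": [
        "Does the code perform the intended function correctly?",
        "Are boundary conditions and edge cases tested?",
    ],
    "Security": ["Are all inputs validated to prevent injection attacks?"],
    "Maintainability": ["Does the code adhere to coding style guidelines?"],
    "Performance": ["Are there any obvious performance bottlenecks?"],
}

# Hypothetical language-specific additions
LANGUAGE_ITEMS: dict[str, list[str]] = {
    "python": ["Are list comprehensions and decorators used appropriately?"],
    "java": ["Are interfaces and exception handling used appropriately?"],
}


def build_checklist(language: str) -> dict[str, list[str]]:
    """Copy the base checklist and append items for the given language, if any."""
    checklist = {category: list(items) for category, items in BASE_CHECKLIST.items()}
    extras = LANGUAGE_ITEMS.get(language.lower())
    if extras:
        checklist["Language-specific"] = list(extras)
    return checklist


def unanswered(checklist: dict[str, list[str]], confirmed: set[str]) -> list[str]:
    """Items the reviewer has not yet confirmed, in checklist order."""
    return [item for items in checklist.values() for item in items if item not in confirmed]
```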

Getting Team Support and Making Improvements

Even great checklists only work when teams actually use them. To get developers on board, show how checklists lead to better code and less rework - saving everyone time in the long run. Start small with a trial run and ask for honest feedback. Developers are more likely to support a process they helped create.

Keep improving your checklist as your project grows. Use tools like Mergify to automatically enforce key checklist items through branch protection rules and required checks. Take feedback from code reviews and team discussions to keep your checklist practical and relevant. This ongoing refinement helps your review process stay effective and in sync with what your team needs to build great software.
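One way to enforce checklist items automatically is to put the checklist in the pull request template as a Markdown task list and fail a CI check while any box is unticked. This is a sketch: the `PR_BODY` environment variable is a hypothetical convention your CI would need to set, and the regex only recognizes `- [ ]`/`- [x]` style items.

```python
import os
import re

# Matches Markdown task-list items such as "- [ ] Docs updated" or "* [x] Tests added"
TASK = re.compile(r"^\s*[-*] \[( |x|X)\] (.+)$", re.MULTILINE)


def unchecked_items(pr_body: str) -> list[str]:
    """Return the text of every unticked checklist item in the PR description."""
    return [text.strip() for mark, text in TASK.findall(pr_body) if mark == " "]


def main() -> int:
    """Print unticked items; return a non-zero exit code if any remain."""
    body = os.environ.get("PR_BODY", "")  # hypothetical: CI exports the PR description here
    missing = unchecked_items(body)
    for item in missing:
        print(f"Unchecked review item: {item}")
    return 1 if missing else 0
```

Run it as a CI job (for example via `sys.exit(main())` in a small entry-point script) and mark that job as a required check in your branch protection rules, so a pull request cannot merge until every checklist box is ticked.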

Making Code Reviews More Impactful Through Different Perspectives

Code reviews become richer when they include a mix of viewpoints. Teams bring different experiences and areas of expertise that can catch everything from missing edge cases to performance issues. But getting the most out of these diverse perspectives, especially with remote teams spanning multiple time zones, requires some thoughtful planning and organization.

Cross-Team Review Benefits

Having developers from different teams review code offers unique advantages. When a security expert examines authentication code, they spot potential vulnerabilities that others might miss. Getting feedback from frontend developers on backend API changes helps ensure smooth integration between systems. Research shows that while reviewers from other teams may comment less frequently, their insights often address important architectural considerations that improve the overall design. This mix of perspectives leads to more resilient code.
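Cross-team review works best when the right experts are pulled in automatically. GitHub's `CODEOWNERS` file provides this natively; the Python sketch below only illustrates the idea, mapping hypothetical path patterns to hypothetical team names with `fnmatch` and returning every team whose pattern matches a changed file.

```python
from fnmatch import fnmatch

# Hypothetical routing rules: (glob pattern, team that should review matches)
REVIEW_RULES = [
    ("src/auth/*", "security-team"),  # security experts see authentication code
    ("api/*", "frontend-team"),       # frontend devs check backend API changes
    ("*.sql", "data-team"),
]


def required_reviewers(changed_paths: list[str]) -> list[str]:
    """Return the sorted set of teams whose patterns match any changed path."""
    teams = {
        team
        for path in changed_paths
        for pattern, team in REVIEW_RULES
        if fnmatch(path, pattern)
    }
    return sorted(teams)
```

Unlike `CODEOWNERS`, where the last matching pattern wins, this sketch collects every match, and `fnmatch`'s `*` also matches `/`, so `src/auth/*` covers nested directories too.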

Working Across Time Zones

For teams spread across different time zones, coordinating reviews takes extra effort. While asynchronous reviews work well most of the time, some complex changes benefit from real-time discussion. Setting clear communication expectations and guidelines helps smooth the process. Teams can use Slack for quick coordination and review updates. For deeper technical discussions, brief video meetings during overlapping work hours allow focused conversation without disrupting individual schedules.
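Finding the overlap is simple arithmetic once both working days are expressed in UTC. This sketch assumes a 9:00-17:00 local day and fixed UTC offsets; it ignores daylight-saving changes, so for real schedules you would derive the offsets from `zoneinfo` for the specific date.

```python
from typing import Optional, Tuple


def shared_window_utc(
    offset_a: float, offset_b: float, start: float = 9, end: float = 17
) -> Optional[Tuple[float, float]]:
    """Return (start_hour_utc, length_hours) of the overlap between two
    teams' local working days, or None if they never overlap.

    Offsets are fixed UTC offsets in hours (e.g. +1 for Paris in winter);
    daylight-saving changes are deliberately ignored.
    """
    # Convert each local working day to UTC: utc = local - offset
    a_start, a_end = start - offset_a, end - offset_a
    b_start, b_end = start - offset_b, end - offset_b
    # Shift team B by a day in each direction to catch overlaps across midnight UTC
    for shift in (-24, 0, 24):
        lo = max(a_start, b_start + shift)
        hi = min(a_end, b_end + shift)
        if lo < hi:
            return (lo % 24, hi - lo)
    return None
```

For example, `shared_window_utc(1, -5)` (Paris and New York in winter) returns `(14, 2)`: a two-hour window starting at 14:00 UTC, which is when a short video call fits best.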

Working with Outside Contributors

Outside contributors like open source developers or consultants can spot issues that internal teams miss. The key is making it easy for them to participate effectively. This means providing clear documentation about the project, coding standards, and development setup. Assigning an internal mentor helps external reviewers get up to speed quickly. Using GitHub simplifies tracking their contributions and feedback.

Speed vs Thoroughness

While getting input from many perspectives improves code quality, reviews still need to move quickly to avoid blocking progress. Long review cycles frustrate developers and slow down projects. The solution is setting clear timelines for reviews and asking reviewers to focus on the most important aspects - like functionality, security and maintainability. Tools like Mergify can handle routine review tasks automatically. With the right balance, teams can benefit from diverse viewpoints while keeping reviews efficient.
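Clear timelines are easier to keep when overdue reviews are surfaced automatically. A minimal sketch, assuming you can list open pull requests with the time review was requested; the 24-hour target is a hypothetical value, not a recommendation from this guide.

```python
from datetime import datetime, timedelta

REVIEW_SLA = timedelta(hours=24)  # hypothetical team target for a first review


def overdue_reviews(pending: dict[int, datetime], now: datetime) -> list[int]:
    """Return PR numbers waiting longer than REVIEW_SLA, oldest request first.

    `pending` maps each open PR number to when its review was requested.
    """
    late = [pr for pr, requested in pending.items() if now - requested > REVIEW_SLA]
    return sorted(late, key=lambda pr: pending[pr])
```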

Measuring What Actually Matters in Reviews

Having solid code review practices is important, but knowing if they're actually working is just as critical. You need concrete data to show whether your reviews are genuinely improving code quality and helping your team work better together. This means looking beyond surface-level metrics to focus on measurements that truly reflect how well your review process works.

Key Performance Indicators for Code Reviews

Here are some specific metrics that can help you understand if your code reviews are effective and identify where you can make improvements:

- Defect Density: Count the number of bugs found per 1,000 lines of code. When you see this number drop after putting better review processes in place, it shows your code quality is improving. Real-world results back this up - Cisco Systems found that good code reviews cut bugs by up to 36%.

- Review Cycle Time: Track how long it takes from when someone submits code for review until it gets merged. Faster cycle times mean quicker feedback and faster delivery. But watch out - if reviews happen too quickly, reviewers might miss important issues.

- Inspection Rate: Look at how many lines of code get reviewed per hour. This helps spot bottlenecks in your process. If your team consistently reviews code slowly, you might need better tools or more focused review sessions; an unusually high rate, on the other hand, can mean reviewers are skimming. Because research shows developers review most effectively when each review covers 200-400 lines, read this rate alongside the size of each review.

- Code Churn: Monitor how often code gets changed after it's reviewed. When you see lots of changes to the same code, it often points to design problems that weren't caught during review.
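The last two metrics are also simple to compute. In this sketch, inspection rate is lines reviewed per hour, and churn is approximated by counting how often each file is modified after its review; the input list (one path per post-merge change, for example gathered from `git log --name-only` over a window after merge) and the threshold of 3 are assumptions.

```python
from collections import Counter


def inspection_rate(lines_reviewed: int, minutes_spent: float) -> float:
    """Lines of code reviewed per hour of review time."""
    if minutes_spent <= 0:
        raise ValueError("minutes_spent must be positive")
    return lines_reviewed * 60 / minutes_spent


def churn_hotspots(post_review_changes: list[str], threshold: int = 3) -> list[tuple[str, int]]:
    """Files changed at least `threshold` times after review, most-changed first.

    `post_review_changes` holds one file path per post-merge commit touching it.
    """
    counts = Counter(post_review_changes)
    return [(path, n) for path, n in counts.most_common() if n >= threshold]
```

Files that keep reappearing in the hotspot list are good candidates for a closer design review.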

Implementing Metrics and Interpreting Results

You don't need complex systems to track these metrics. Tools like GitHub and GitLab already include analytics features that cover many of these measurements. Mergify can help automate parts of the review process, giving developers more time to focus on the code while making data collection easier. But just having numbers isn't enough - you need to understand what they mean. For example, if you suddenly see more bugs appearing, take a closer look at recent code changes or how your team works together.
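To notice when bugs suddenly increase, you can compare the latest week against a recent baseline. This sketch uses a simple rule of thumb, flagging the latest count when it exceeds the mean of the previous few weeks by more than two standard deviations; the window and multiplier are assumptions to tune for your team.

```python
from statistics import mean, stdev


def bug_spike(weekly_bug_counts: list[int], window: int = 4, k: float = 2.0) -> bool:
    """True if the latest week exceeds the previous `window` weeks' mean
    by more than k standard deviations."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    if len(weekly_bug_counts) < window + 1:
        return False  # not enough history to judge
    baseline = weekly_bug_counts[-window - 1:-1]
    latest = weekly_bug_counts[-1]
    return latest > mean(baseline) + k * stdev(baseline)
```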

Using Data for Continuous Improvement

Once you understand what your metrics are telling you, you can make specific improvements based on that data. If reviews consistently take too long, try setting time limits or breaking big changes into smaller pieces. If you keep finding lots of bugs despite regular reviews, update your checklists to better match your project's needs. Keep measuring, analyzing, and adjusting. The goal is a review process that helps your team work better rather than slowing it down, and data is what lets you improve it deliberately over time.

Creating a Culture Where Reviews Drive Growth

Code reviews work best when teams see them as chances to learn and grow together, not just find bugs. Getting the most value requires creating an environment where feedback helps everyone improve, disagreements lead to better solutions, and every review becomes a learning opportunity.

Fostering Constructive Feedback

How we give feedback shapes how the whole team works together and grows. Rather than just pointing out problems, frame comments to spark discussion and improvement. For example, instead of "This function is too complex," try "I find this logic a bit hard to follow. What if we broke it into smaller functions to make it clearer?" This approach brings the author into solving the problem together. Taking time to highlight what works well also reinforces good practices that benefit everyone.

Navigating Disagreements and Challenging Reviews

When team members disagree during reviews, how they work through it matters more than the disagreement itself. Clear guidelines help - focus on respectful discussion and finding the best solution for the project. For tricky issues, involve a senior engineer to help mediate, or jump on a quick video call to talk it through. Tools like Slack work well for initial discussion before moving complex topics to real-time conversation.

Turning Code Reviews into Mentorship Opportunities

Reviews provide perfect teaching moments in both directions. Senior developers can explain design choices and best practices, sharing the "why" behind different approaches. Less experienced developers bring fresh eyes and questions that make everyone think differently about the code. This back-and-forth learning strengthens the whole team's skills over time.

Building Trust and Maintaining a Positive Culture

Trust and respect form the foundation of effective reviews. Create space for questions and concerns without judgment. Recognize effort and progress when developers incorporate feedback well. This builds shared ownership of code quality and motivates continuous improvement. Used thoughtfully, automation tools like Mergify can take over routine tasks, so the team spends more time on what matters - sharing knowledge, mentoring each other, and building better code together.

Ready to improve how your team handles code reviews? See how Mergify can help create a stronger review process that helps everyone learn and grow. Start your free Mergify trial today!