The Complete Guide to Mastering Flaky Tests: Essential Strategies for Development Teams

Master the battle against flaky tests with proven strategies from industry veterans. Learn practical approaches to identify, fix, and prevent unreliable tests that impact development velocity and team confidence.

Every developer has encountered them - those maddeningly inconsistent test failures that appear randomly and then vanish on the next run. Flaky tests have become a common headache for software teams, undermining confidence in test suites and slowing down development. From early-stage startups to large enterprises, no project seems immune. But what exactly makes these unreliable tests so problematic, and why do they matter so much for healthy development practices?

The Pervasive Nature of Flaky Tests

The numbers tell a clear story - flaky tests are everywhere. Recent studies show that 59% of development teams deal with unreliable tests at least monthly, with many facing issues weekly or even daily. This isn't limited to specific contexts either. Analysis of open source projects reveals flaky tests appearing across different programming languages and project types. For instance, numerous projects in the Apache ecosystem contain at least one flaky test, showing just how common this challenge is.

The impact extends well beyond open source. A study by Trunk.io found that these inconsistencies seriously damage developers' faith in testing. Teams start automatically rerunning failed tests without investigating root causes, assuming any failure might be flaky. Some even rerun passing tests out of skepticism. This creates a dangerous pattern where testing - meant to be a quality safeguard - becomes a source of doubt instead of confidence.

The Hidden Costs of Inconsistency

While failed builds are frustrating enough, the real cost of flaky tests runs much deeper. Teams waste countless hours chasing down phantom issues, investigating failures that aren't actually tied to code problems. This takes valuable time away from real development work, bug fixes, and feature improvements.

The ripple effects slow everything down. When tests fail intermittently, they block pull requests and delay releases, creating bottlenecks in development pipelines. This is especially painful for teams trying to ship quickly. The constant need to rerun tests also drives up infrastructure costs as CI/CD systems work overtime, particularly problematic for larger codebases that already strain compute resources.

Beyond the Obvious: Long-Term Implications

The ongoing drain of flaky tests takes a serious toll on teams. When developers repeatedly face unreliable results, they often grow cynical about testing in general. This can lead to corner-cutting and reduced investment in test quality, creating a downward spiral.

Even more concerning is how flaky tests can hide real bugs. When teams get used to ignoring or rerunning "flaky" failures, they risk missing genuine issues that need attention. These masked bugs can lurk in the codebase far longer than they should, potentially causing bigger problems down the line. To break this cycle, teams need to understand exactly what causes tests to become flaky in the first place. Only then can they put effective solutions in place and restore testing to its proper role as a cornerstone of quality software development.

Identifying the True Culprits Behind Test Flakiness

Every development team faces the headache of flaky tests at some point. While it's easy to spot when tests are flaking, finding out why is much trickier. The real work lies in uncovering the root causes hiding beneath the surface. Let's explore the most common culprits that make tests unreliable and see how we can catch them in the act.

Common Sources of Flaky Tests

Tests can become flaky for several key reasons. Hidden state dependencies are a major offender. Picture this: you have a test that needs a specific record in your database. Another test changes that record but doesn't clean up after itself. Now your first test might pass or fail randomly depending on which test runs first. It's like trying to read a book while someone else keeps moving your bookmark - pretty frustrating!

Asynchronous operations are another common troublemaker. When your tests need to talk to external systems or handle async code, timing becomes critical. A test might expect an answer within 2 seconds, but what if the network is slow that day? These issues are especially tough because they often depend on things outside your control, like third-party API response times or network conditions.

Resource leaks can also wreak havoc on your test suite. Think of it like leaving the water running - eventually, you'll run out. If tests keep using up memory or file handles without releasing them, other tests start failing in weird ways. The tricky part is that the test causing the leak might not be the one that fails - it's the tests that run later that suffer the consequences.

Unmasking the Hidden Culprits: Race Conditions and Test Order Dependency

Some flaky test causes are sneakier than others. Race conditions pop up when multiple parts of your code try to use the same resources at the same time. It's like two people trying to write on the same piece of paper - unless you carefully coordinate who goes first, you'll end up with a mess. For example, if two tests running in parallel update the same global variable, one write can silently overwrite the other, and each test may then read a value it never set.
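To make the race concrete, here is a minimal sketch using only the Java standard library. The class and method names are invented for illustration. Two threads increment a shared counter, once through a plain field and once through an `AtomicInteger`. The unsafe version can lose updates on some runs and not others, which is exactly the pattern a flaky test shows.

```java
import java.util.concurrent.atomic.AtomicInteger;

class SharedCounterRace {
    private static int unsafeCount; // plain shared field, no coordination

    // Runs the same task on two threads and waits for both to finish.
    static void runOnTwoThreads(Runnable task) {
        Thread first = new Thread(task);
        Thread second = new Thread(task);
        first.start();
        second.start();
        try {
            first.join();
            second.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
    }

    // Unsynchronized read-modify-write: updates can be lost, so the
    // result may be lower than 2 * perThread on some runs.
    static int countUnsafely(int perThread) {
        unsafeCount = 0;
        runOnTwoThreads(() -> {
            for (int i = 0; i < perThread; i++) {
                unsafeCount++;
            }
        });
        return unsafeCount;
    }

    // Atomic increments: the result is always 2 * perThread.
    static int countSafely(int perThread) {
        AtomicInteger counter = new AtomicInteger();
        runOnTwoThreads(() -> {
            for (int i = 0; i < perThread; i++) {
                counter.incrementAndGet();
            }
        });
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println("unsafe: " + countUnsafely(100_000));
        System.out.println("safe:   " + countSafely(100_000));
    }
}
```

Run it a few times: the safe count stays the same, while the unsafe count may change from run to run.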

Test order dependency is another subtle issue. Tests that work perfectly fine on their own might fail when run as part of the full suite. This usually happens when tests share setup steps or environment settings without properly cleaning up. It's similar to cooking in the kitchen - if you don't wash the dishes between recipes, ingredients from one dish might accidentally end up in another.
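The sketch below shows how this plays out, assuming Java 17 or later and no test framework. The `sharedCart` list and the two check methods are hypothetical stand-ins for real tests. One check leaves data behind, so the other passes or fails depending on which runs first, until a reset step is added between them.

```java
import java.util.ArrayList;
import java.util.List;

class OrderDependencyDemo {
    // Shared mutable state that both "tests" touch.
    static final List<String> sharedCart = new ArrayList<>();

    // Passes only if no earlier test has left anything in the cart.
    static boolean cartStartsEmpty() {
        return sharedCart.isEmpty();
    }

    // Adds an item but never removes it: this is the missing cleanup.
    static boolean addingItemGrowsCart() {
        sharedCart.add("book");
        return sharedCart.contains("book");
    }

    // The cleanup step each test should run before (or after) it executes.
    static void resetSharedState() {
        sharedCart.clear();
    }

    public static void main(String[] args) {
        resetSharedState();
        System.out.println("empty check run first:  " + cartStartsEmpty());
        addingItemGrowsCart();
        System.out.println("empty check run second: " + cartStartsEmpty());
        resetSharedState();
        System.out.println("after cleanup:          " + cartStartsEmpty());
    }
}
```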

Utilizing Tools and Techniques for Identification

Finding these hidden problems requires a smart approach. Modern test analysis tools can help by keeping track of which tests fail often and under what conditions. Good tools will show you patterns - like tests that only fail early in the morning or after specific code changes. This information helps point you toward the real cause of the flakiness.

It's also helpful to organize your flaky tests by how much they impact your work. Focus first on the tests that block important processes like merging code or deploying to production. By fixing these high-impact issues first, you'll get the most benefit from your debugging efforts. This systematic approach helps build a more reliable test suite that your team can trust.
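One way to turn that prioritization into something repeatable is a simple sort. This is a sketch, assuming Java 17 or later. The `FlakyTest` record and its fields are invented for illustration, and the ranking rule (merge-blocking tests first, then the highest failure rate) is just one reasonable choice.

```java
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

class FlakyTestTriage {
    // Hypothetical summary of one flaky test; the fields are illustrative.
    record FlakyTest(String name, double failureRate, boolean blocksMerge) {}

    // Merge-blocking tests come first; within each group, the test
    // with the higher failure rate comes first.
    static List<FlakyTest> prioritize(List<FlakyTest> tests) {
        Comparator<FlakyTest> blockingFirst =
                Comparator.comparing(FlakyTest::blocksMerge).reversed();
        Comparator<FlakyTest> mostFrequentFirst =
                Comparator.comparingDouble(FlakyTest::failureRate).reversed();
        return tests.stream()
                .sorted(blockingFirst.thenComparing(mostFrequentFirst))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<FlakyTest> ranked = prioritize(List.of(
                new FlakyTest("searchTest", 0.50, false),
                new FlakyTest("loginTest", 0.10, true),
                new FlakyTest("checkoutTest", 0.30, true)));
        ranked.forEach(test -> System.out.println(test.name()));
    }
}
```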

Measuring the Hidden Costs of Unreliable Tests

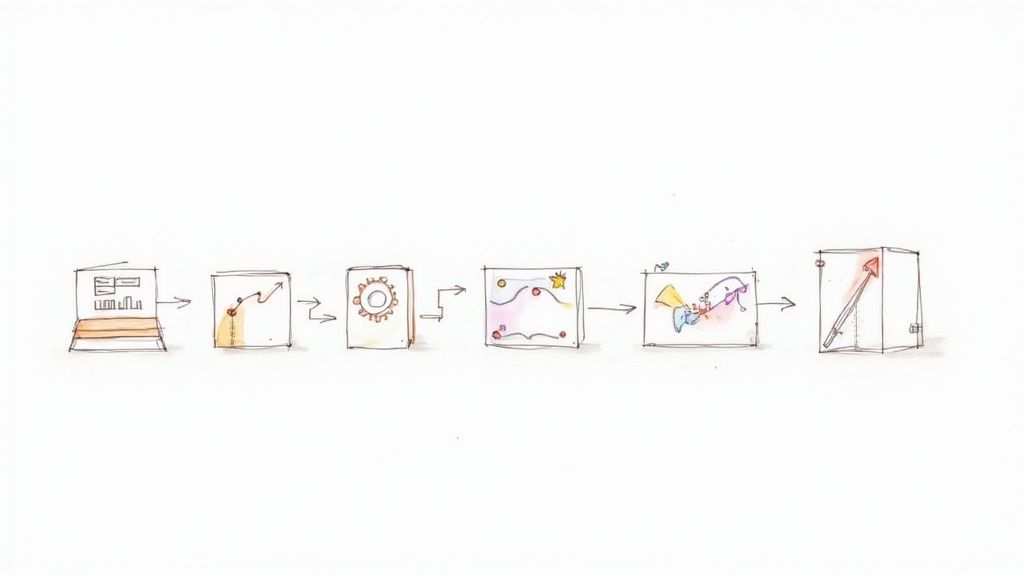

Finding flaky tests is just the start - understanding their true cost to your development process requires looking beyond surface-level metrics. While increased CI/CD usage is easy to measure, the real impact of unreliable tests ripples throughout your entire software development workflow in ways that are harder to quantify but critically important to address.

Quantifying the Impact on Developer Productivity

When tests fail unpredictably, developers get pulled away from their actual work to chase down false alarms. This constant task-switching breaks their focus and steals time from meaningful development work. Picture a developer spending half a day investigating a test failure, only to discover it was caused by a temporary infrastructure hiccup rather than an actual bug. These interruptions add up fast when flaky tests are common. Beyond just lost time, dealing with unreliable tests day after day wears on developers mentally and can make them doubt the value of testing altogether.

The Domino Effect: Delayed Releases and Eroded Trust

The problems caused by flaky tests spread far beyond individual developers. These unreliable tests create bottlenecks that slow down pull request reviews and push back release schedules. For teams that need to ship quickly, these delays directly impact business results. What's worse, as developers encounter more and more flaky tests, they start losing faith in the entire test suite. This often leads to dangerous habits like ignoring or blindly re-running failing tests without proper investigation - creating opportunities for real bugs to slip through unnoticed.

Measuring the True Cost: A Framework for Calculation

To make a solid case for fixing flaky tests, you need concrete data about their impact. Here's a practical framework for measuring the real costs:

- Developer Time: Use time tracking tools or issue tracking tags to record hours spent dealing with flaky test issues

- Delayed Releases: Look at how flaky tests affect your deployment timeline and calculate the business impact of shipping later

- CI/CD Usage: Monitor your build system metrics to identify and cost out unnecessary test reruns

- Reduced Development Velocity: Track how flaky tests correlate with slower feature delivery and bug fix rates

By gathering this data, you can clearly show the value of investing in test stability improvements. Tools like Mergify can help by optimizing your CI/CD pipeline and maintaining a stable codebase. Taking a data-driven approach to both measuring and fixing flaky test issues is key to building a reliable testing process that helps rather than hinders your development team.
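As a rough illustration of the framework above, the sketch below adds up the two items that are easiest to put a number on: developer time and CI reruns. Every figure in `main` is a made-up placeholder, and the method names are hypothetical. Release delays and velocity loss are left out because they depend on business data only your team has.

```java
class FlakyTestCost {
    // Cost of the hours developers spent investigating flaky failures.
    static double developerCost(double hoursLost, double hourlyRate) {
        return hoursLost * hourlyRate;
    }

    // Cost of the CI minutes consumed by reruns of flaky builds.
    static double rerunCost(int reruns, double minutesPerRerun, double costPerCiMinute) {
        return reruns * minutesPerRerun * costPerCiMinute;
    }

    public static void main(String[] args) {
        // Placeholder inputs: replace them with your own tracked numbers.
        double developers = developerCost(40, 75.0);
        double ci = rerunCost(300, 12.0, 0.01);
        System.out.println("developer time: " + developers);
        System.out.println("ci reruns:      " + ci);
        System.out.println("monthly total:  " + (developers + ci));
    }
}
```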

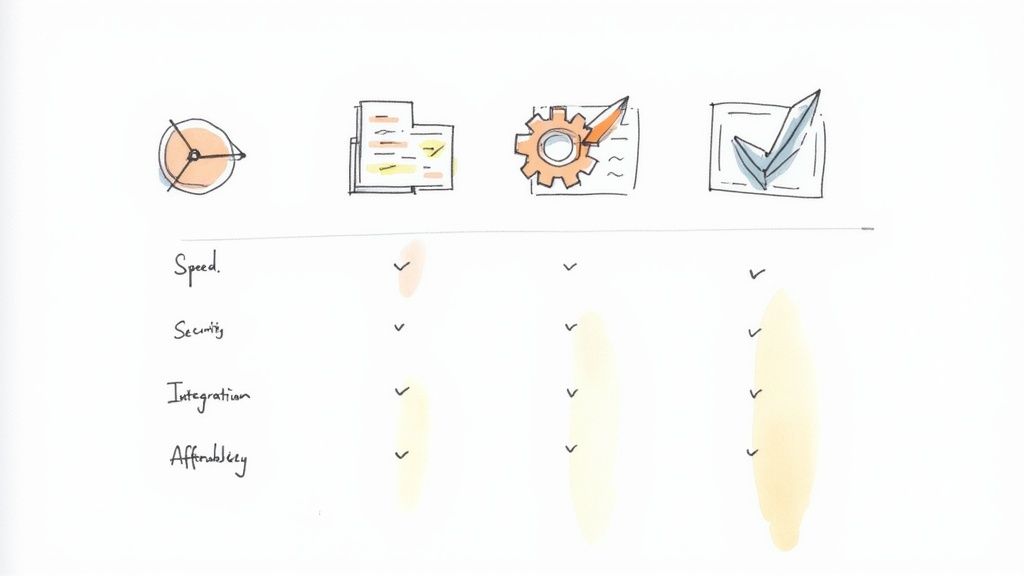

Building Your Flaky Test Detection Strategy

Once you understand how flaky tests affect your workflow and what causes them, you need a clear plan to find them. This isn't just about spotting occasional test failures - it's about building a systematic approach to catch unreliable tests before they slow down your development process. Many development teams are moving away from random checks toward more organized methods of detection.

Establishing a Baseline for Flaky Test Metrics

Start by getting a clear picture of your test suite's current reliability. Look at your test results and ask: How many tests fail regularly? Which specific tests cause the most problems? By tracking basic numbers like overall failure rates and how often individual tests fail, you create a starting point to measure future improvements. This initial data helps you see whether your detection methods are working.
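A baseline can be as simple as a failure rate per test. Here is a minimal sketch, assuming Java 17 or later and that your CI results can be exported as a list of (test name, passed) pairs. The `TestRun` record is a hypothetical shape for that export.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class FailureRateBaseline {
    // Hypothetical shape of one exported CI result.
    record TestRun(String testName, boolean passed) {}

    // Returns the fraction of failed runs for each test, sorted by test name.
    static Map<String, Double> failureRates(List<TestRun> runs) {
        Map<String, int[]> counts = new TreeMap<>(); // value = {failures, total}
        for (TestRun run : runs) {
            int[] count = counts.computeIfAbsent(run.testName(), name -> new int[2]);
            if (!run.passed()) {
                count[0]++;
            }
            count[1]++;
        }
        Map<String, Double> rates = new TreeMap<>();
        counts.forEach((name, count) -> rates.put(name, (double) count[0] / count[1]));
        return rates;
    }

    public static void main(String[] args) {
        List<TestRun> runs = List.of(
                new TestRun("loginTest", true),
                new TestRun("loginTest", false),
                new TestRun("checkoutTest", true),
                new TestRun("checkoutTest", true));
        System.out.println(failureRates(runs));
    }
}
```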

Implementing Effective Monitoring and Alerting Systems

With your baseline in place, set up monitoring that actively tracks test results over time. Good monitoring goes beyond simple pass/fail counts - it should spot patterns that point to flaky behavior. For example, if a test passes consistently for a month but suddenly starts failing at random, that's a red flag worth investigating. Make sure to add alerts that notify the right people when tests start acting unpredictably. This helps teams fix issues quickly before they pile up.
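One pattern a monitor can look for is how often a test flips between passing and failing. A stable test never flips, and a test broken by a real regression flips once and stays red. A flaky test flips back and forth. The sketch below counts flips in a recent window. The window size and the alert threshold are assumptions you would tune for your own suite.

```java
import java.util.List;

class FlakinessAlert {
    // Counts transitions between pass and fail in consecutive runs.
    static int countFlips(List<Boolean> outcomes) {
        int flips = 0;
        for (int i = 1; i < outcomes.size(); i++) {
            if (!outcomes.get(i).equals(outcomes.get(i - 1))) {
                flips++;
            }
        }
        return flips;
    }

    // history is ordered oldest first; true means the run passed.
    // Alerts when the most recent `window` runs contain at least `minFlips` flips.
    static boolean shouldAlert(List<Boolean> history, int window, int minFlips) {
        int from = Math.max(0, history.size() - window);
        return countFlips(history.subList(from, history.size())) >= minFlips;
    }

    public static void main(String[] args) {
        List<Boolean> stable = List.of(true, true, true, true, true, true);
        List<Boolean> broken = List.of(true, true, true, false, false, false);
        List<Boolean> flaky = List.of(true, true, false, true, false, true);
        System.out.println("stable: " + shouldAlert(stable, 6, 2));
        System.out.println("broken: " + shouldAlert(broken, 6, 2));
        System.out.println("flaky:  " + shouldAlert(flaky, 6, 2));
    }
}
```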

Leveraging Automated Flaky Test Detection Tools

The right tools make a big difference in finding flaky tests efficiently. Automated detection tools can spot tests that behave differently across multiple runs. They track how often and in what ways tests fail, helping you understand what's causing the problems. Tools like Mergify can help by making your CI/CD process run more smoothly while giving you better insights into test execution. Using these tools together lets you catch and fix flaky tests early in development.
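At its core, the rerun-based detection these tools perform can be sketched in a few lines. This is not how any particular product works. It is a simplified illustration with hypothetical names that runs the same check several times against unchanged code and labels it by whether the outcomes agree.

```java
import java.util.function.BooleanSupplier;

class RerunDetector {
    enum Verdict { STABLE_PASS, STABLE_FAIL, FLAKY }

    // Runs the same test `runs` times and classifies it by whether
    // the outcomes agree. The test returns true when it passes.
    static Verdict classify(BooleanSupplier test, int runs) {
        if (runs < 1) {
            throw new IllegalArgumentException("runs must be at least 1");
        }
        int passes = 0;
        for (int i = 0; i < runs; i++) {
            if (test.getAsBoolean()) {
                passes++;
            }
        }
        if (passes == runs) {
            return Verdict.STABLE_PASS;
        }
        return passes == 0 ? Verdict.STABLE_FAIL : Verdict.FLAKY;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Stand-in for a flaky test: passes on every other call.
        System.out.println(classify(() -> calls[0]++ % 2 == 0, 10));
        System.out.println(classify(() -> true, 10));
    }
}
```

Keep in mind that a small number of reruns can miss a test that fails only rarely, so a stable verdict from this kind of check is evidence, not proof.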

Real-World Examples of Successful Detection Strategies

Many teams have found practical ways to reduce flaky tests. One common method is adding flaky test detection directly to CI/CD pipelines, checking every code change automatically. Another approach involves looking at past test data to find hidden patterns. For instance, a team might discover that certain tests only fail when the server is under heavy load. This knowledge helps them fix the underlying performance problem and make the test more stable. Some teams also use "quarantine" areas for known flaky tests - keeping them separate from the main test suite until fixed. This prevents them from blocking important work while still keeping track of which tests need attention. These real examples show that having a clear, organized approach to finding flaky tests makes testing more reliable and efficient.
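The quarantine idea can be expressed as a small rule in the build step: a failing quarantined test gets reported, but only failures outside the quarantine list fail the build. The sketch below assumes Java 17 or later. The `Outcome` record and the test names are invented, and real CI systems usually offer their own way to mark such tests.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

class QuarantineRunner {
    // Build verdict plus the quarantined tests that failed and still need attention.
    record Outcome(boolean buildPassed, List<String> quarantinedFailures) {}

    // results maps test name to pass (true) or fail (false).
    // Failures of quarantined tests are recorded but do not fail the build.
    static Outcome evaluate(Map<String, Boolean> results, Set<String> quarantined) {
        boolean buildPassed = true;
        List<String> quarantinedFailures = new ArrayList<>();
        for (Map.Entry<String, Boolean> entry : new TreeMap<>(results).entrySet()) {
            if (entry.getValue()) {
                continue; // passing tests need no handling
            }
            if (quarantined.contains(entry.getKey())) {
                quarantinedFailures.add(entry.getKey());
            } else {
                buildPassed = false;
            }
        }
        return new Outcome(buildPassed, quarantinedFailures);
    }

    public static void main(String[] args) {
        Map<String, Boolean> results = Map.of("loginTest", true, "uploadTest", false);
        System.out.println(evaluate(results, Set.of("uploadTest")));
        System.out.println(evaluate(results, Set.of()));
    }
}
```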

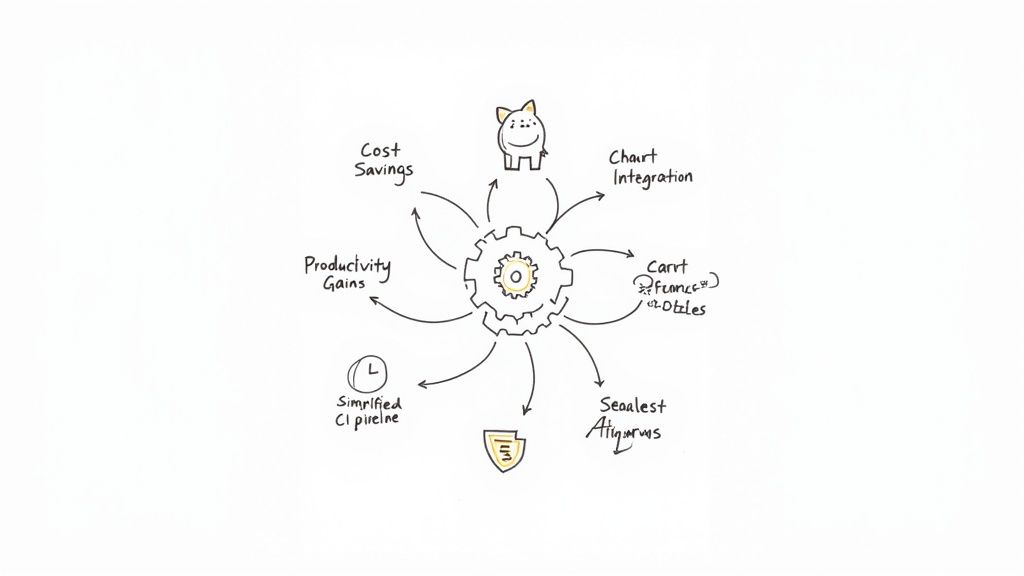

Implementing Battle-Tested Stabilization Techniques

After identifying what causes flaky tests and measuring their impact, we need solid solutions to fix them. Let's explore proven techniques that address common sources of flakiness with clear examples you can put into practice.

Taming Asynchronous Operations: Strategies for Predictable Outcomes

Asynchronous operations like API calls and timed events often cause flaky tests. Consider a test that calls a third-party API - if the API response is slow, the test might time out and fail even though the code works correctly. Rather than sleeping for a fixed duration and hoping the work has finished, implement smarter waiting strategies.

Use polling mechanisms that check for specific conditions at regular intervals instead of arbitrary waits. For example:

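import static java.util.concurrent.TimeUnit.SECONDS;
import static org.awaitility.Awaitility.await;

// Instead of a fixed wait:
// Thread.sleep(2000);

// Poll for the expected condition, giving up after 5 seconds:
await().atMost(5, SECONDS).until(() -> conditionIsTrue());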

When possible, mock external dependencies to isolate your tests. This makes them more reliable and faster to run.
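A mocking library is not strictly required for this. As a sketch, the example below hides a third-party call behind an interface and hands the code under test a hand-written stub. All names here (`ExchangeRateClient`, `PriceConverter`) are hypothetical. The point is only that the test no longer depends on the network.

```java
class PriceServiceTestDouble {
    // Hypothetical boundary to a third-party API.
    interface ExchangeRateClient {
        double rate(String from, String to);
    }

    // The code under test depends on the interface, not on the network.
    static final class PriceConverter {
        private final ExchangeRateClient client;

        PriceConverter(ExchangeRateClient client) {
            this.client = client;
        }

        double convert(double amount, String from, String to) {
            return amount * client.rate(from, to);
        }
    }

    public static void main(String[] args) {
        // Stub that always answers instantly with a fixed rate.
        ExchangeRateClient fixedRate = (from, to) -> 2.0;
        PriceConverter converter = new PriceConverter(fixedRate);
        System.out.println(converter.convert(10.0, "USD", "EUR"));
    }
}
```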

Managing Test Dependencies: Isolation and Cleanup for Consistent Results

Tests that share state without proper cleanup can affect each other unpredictably. It's like multiple chefs using the same kitchen without washing dishes - ingredients from one recipe might contaminate another. Each test needs its own setup and cleanup to run independently.

For database tests:

- Use transactions to roll back changes after each test

- Reset to a known state before each run

- Use a dedicated test database
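The rollback approach from the list above might look like the following sketch, written against plain JDBC. The helper name and the `DatabaseTest` interface are invented for illustration, and you would need to supply a `Connection` to your own test database. Many test frameworks offer a built-in equivalent, so treat this as a picture of the idea and not as a recommended implementation.

```java
import java.sql.Connection;
import java.sql.SQLException;

class RollbackAfterTest {
    // A test body that is allowed to throw SQLException.
    interface DatabaseTest {
        void run(Connection connection) throws SQLException;
    }

    // Runs the test inside a transaction and always rolls it back,
    // so rows written by the test do not survive into the next one.
    static void runInRolledBackTransaction(Connection connection, DatabaseTest test)
            throws SQLException {
        boolean previousAutoCommit = connection.getAutoCommit();
        connection.setAutoCommit(false); // start an explicit transaction
        try {
            test.run(connection);
        } finally {
            connection.rollback();                        // discard the test's changes
            connection.setAutoCommit(previousAutoCommit); // restore the original mode
        }
    }
}
```

Note that this only undoes changes made through the same connection. Anything the test commits itself, or writes through a different connection, needs separate cleanup.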

For shared state:

- Reset global variables after each test

- Clean up shared objects between runs

Ensuring Proper Isolation: Sandboxing Your Tests for Maximum Reliability

Take isolation further by running each test in its own environment:

- Run tests in separate containers to prevent resource conflicts

- Use virtual machines for complete isolation when needed

- Create dedicated test data for each test instead of sharing datasets

Tools like Docker make it easy to create isolated environments. Setting up containers takes some initial work, but the improved reliability is usually worth it: when tests no longer share files, ports, or data, a whole category of flakiness goes away.
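Containers are the heavyweight option. For the last item in the list, dedicated data per test, a lighter in-process version of the same idea is to give each test its own scratch directory. The helper below is a sketch with a hypothetical name, using only the Java standard library (Java 11 or later). It creates a fresh temporary directory, hands it to the test, and deletes it afterwards.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.function.Function;
import java.util.stream.Stream;

class IsolatedWorkspace {
    // Creates a fresh temporary directory, passes it to the test body,
    // and deletes the directory and its contents when the body finishes.
    static <T> T withFreshDirectory(Function<Path, T> testBody) {
        try {
            Path directory = Files.createTempDirectory("isolated-test-");
            try {
                return testBody.apply(directory);
            } finally {
                try (Stream<Path> paths = Files.walk(directory)) {
                    // Reverse order deletes files before their parent directories.
                    paths.sorted(Comparator.reverseOrder())
                         .forEach(path -> path.toFile().delete());
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        boolean existed = withFreshDirectory(directory -> Files.isDirectory(directory));
        System.out.println("directory existed during the test: " + existed);
    }
}
```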

Implementing Retries: A Safety Net for Occasional Hiccups

While not a complete fix, retrying failed tests can help handle temporary issues like network problems. Just be careful not to overdo it - too many retries can hide real bugs. Track retry patterns carefully to identify tests that need permanent fixes.

Keep detailed logs about:

- Which tests needed retries

- How often they failed

- What caused the failures

- Patterns in failure timing

Use this data to systematically improve test stability over time.
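A retry wrapper that also records what happened could be sketched like this, assuming Java 17 or later. The names and the in-memory `retryLog` are illustrative. In practice you would send these entries to whatever logging or reporting system your CI already uses, and many test runners have a retry feature built in.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BooleanSupplier;

class RetryWithLog {
    // Result of one test: whether it eventually passed and how many attempts it took.
    record RetryReport(boolean passed, int attempts) {}

    // Illustrative in-memory log of every test that needed more than one attempt.
    static final List<String> retryLog = new ArrayList<>();

    // Runs the test up to maxAttempts times, stopping at the first pass.
    static RetryReport runWithRetries(String testName, BooleanSupplier test, int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (test.getAsBoolean()) {
                if (attempt > 1) {
                    retryLog.add(testName + " passed on attempt " + attempt);
                }
                return new RetryReport(true, attempt);
            }
        }
        retryLog.add(testName + " failed all " + maxAttempts + " attempts");
        return new RetryReport(false, maxAttempts);
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Stand-in for a test that fails twice, then passes.
        System.out.println(runWithRetries("uploadTest", () -> ++calls[0] >= 3, 3));
        System.out.println(retryLog);
    }
}
```

Keeping `maxAttempts` small and reviewing the log regularly helps keep retries as a safety net instead of a place where real bugs hide.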

These strategies provide a solid foundation for reliable tests. Apply them consistently and you'll see faster feedback cycles and increased confidence in your test suite. Remember that maintaining stable tests requires ongoing attention and commitment to best practices. With these techniques, you can make testing a valuable part of your development process rather than a source of frustration.


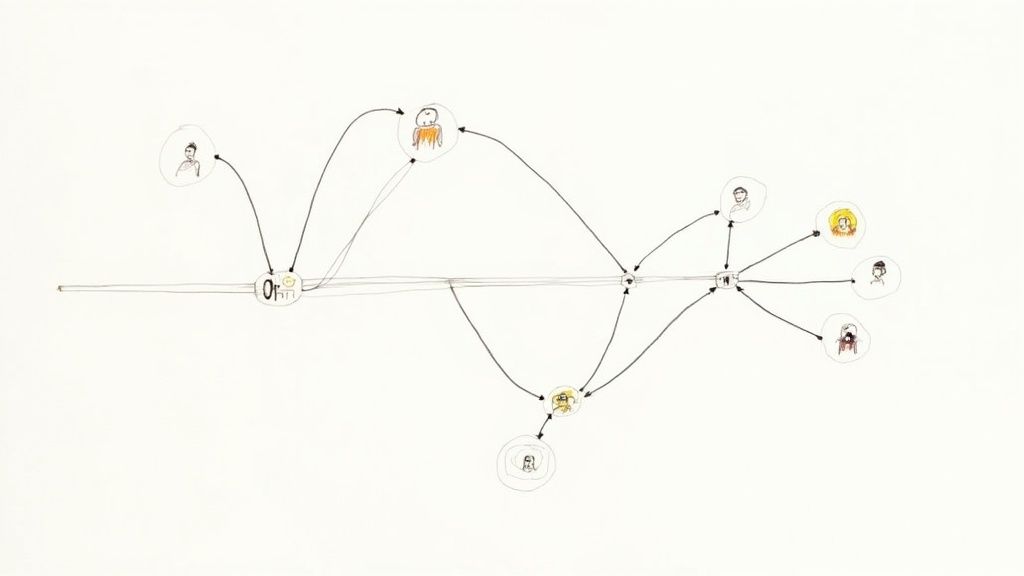

Creating a Culture of Test Excellence

What makes tests truly reliable isn't just the tools we use - it's the mindset and practices we foster within our teams. While most engineers know how to write tests, building an environment where quality testing thrives takes intentional effort. Let's explore practical ways teams can build this foundation and prevent flaky tests from undermining their work.

Establishing Team Protocols and Ownership

Clear testing standards act like a well-written recipe - they give teams consistent steps to follow and predictable results. This means defining specific approaches for test structure, managing dependencies, and proper cleanup. When everyone follows the same practices, it becomes much easier to spot and fix problematic tests.

Ownership is equally important. Just as a restaurant assigns specific chefs to different stations, teams need clear accountability for different test suites. When specific individuals or teams own test quality, they're more likely to maintain tests proactively rather than letting them degrade over time.

Implementing Effective Review Processes

Code reviews shouldn't just check if tests exist - they need to actively look for potential flakiness. Reviewers should ask questions like: Are there hidden dependencies between tests? Do async operations have proper handling? Is cleanup thorough? When teams catch these issues during review, they prevent problematic tests from becoming embedded in their codebase.

Creating Accountability for Test Quality

Numbers tell stories. Tracking metrics like flakiness rates and newly introduced flaky tests helps teams understand where problems come from. For instance, if flaky tests spike after certain types of changes, it may reveal underlying issues with test design or infrastructure. Regular team discussions about these metrics keep quality top of mind and drive improvement efforts.

Fostering Team Buy-in and Maintaining Momentum

For testing practices to stick, teams need to see their value. The impact is clear: as noted earlier, 59% of development teams deal with unreliable tests at least monthly, and that means wasted time and frustration. When you highlight how reliable tests improve productivity and reduce headaches, teams are more likely to embrace testing best practices.

Celebrating wins, even small ones, helps maintain momentum. When teams recognize efforts to improve test quality, it reinforces the importance of testing excellence. Real examples can be powerful - companies like Uber have documented their journey to better testing, providing practical lessons for others.

Building great test culture is ongoing work that requires consistent effort from everyone involved. But with clear standards, ownership, metrics, and team buy-in, development teams can create an environment where quality testing thrives.

Want to improve your team's testing workflow? Learn more about how Mergify can help you build a more reliable CI/CD pipeline.