10 Code Review Best Practices for Superior Code

Supercharge Your Workflow with Code Review Best Practices

Code review best practices are crucial for producing high-quality software and improving team performance. This listicle presents 10 essential practices to streamline your code review process. Learn how to conduct effective reviews, provide constructive feedback, and utilize automated tools. These code review best practices will help your team deliver better code faster, reducing bugs and fostering collaboration. By implementing these tips, you’ll create a more efficient and positive development experience for everyone.

1. Review Small Chunks of Code at a Time

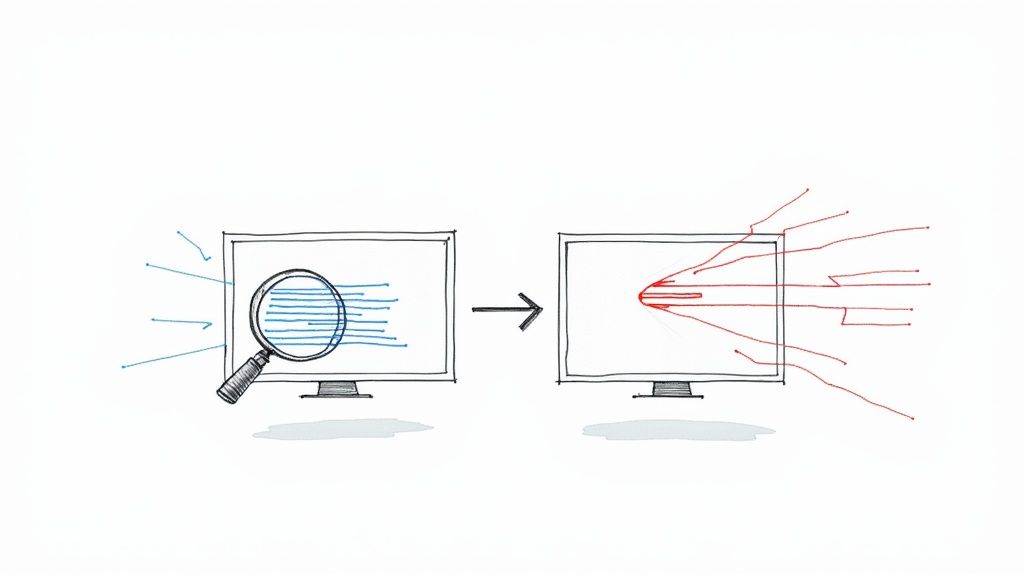

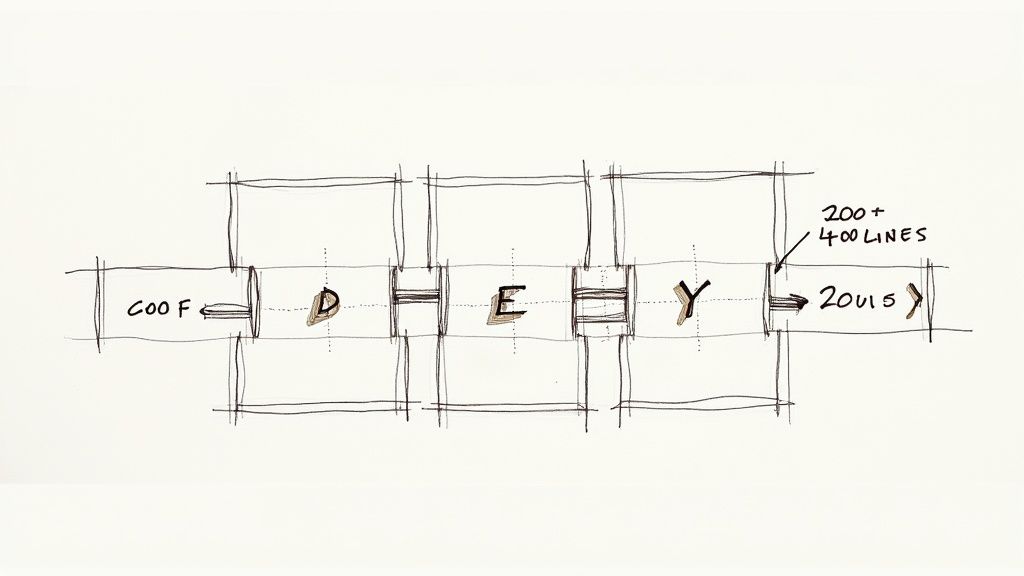

One of the most effective code review best practices is reviewing small chunks of code at a time. This approach involves breaking large code changes into smaller, more manageable pull requests. Instead of submitting a massive pull request containing thousands of lines of code, developers should strive for focused changes, ideally addressing a single feature, a bug fix, or one specific aspect of a refactoring. This practice improves the quality of the review and reduces reviewer fatigue, leading to more effective feedback and higher-quality code.

Smaller pull requests, generally under 400 lines of code, allow reviewers to concentrate on a specific piece of functionality or a particular fix. This focused review promotes a deeper understanding of the changes, making it easier to identify potential bugs, logic errors, or deviations from coding standards. By enabling faster feedback cycles, smaller changesets also facilitate quicker integration into the main codebase. This rapid iteration helps to maintain momentum in the development process and minimizes the risk of conflicts and integration issues down the line.

This practice deserves a top spot in our list of code review best practices because its impact on both code quality and team productivity is significant. The benefits extend beyond merely finding bugs; they contribute to improved knowledge sharing within the team, faster onboarding of new members, and a more streamlined development workflow.

Features of reviewing small chunks of code:

- Keeps pull requests under 400 lines of code: This manageable size makes reviews less daunting and more efficient.

- Focuses on single functionality or fix: Allows reviewers to concentrate their attention and provide more targeted feedback.

- Enables faster feedback cycles: Quicker reviews lead to faster integration and a more agile development process.
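A CI step can enforce the size guideline mechanically. The sketch below is a minimal illustration, assuming the diff summary comes from `git diff --numstat` (which prints added and deleted line counts per file, with `-` for binary files); the 400-line budget is taken from the guideline above, and the function names are hypothetical.

```python
"""Check a pull request's size against a line budget (minimal sketch)."""

MAX_CHANGED_LINES = 400  # budget from the guideline above; tune for your team


def changed_lines(numstat_output: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat_output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        added, deleted, _path = line.split("\t", 2)
        if added == "-" or deleted == "-":
            continue  # binary files report "-" instead of line counts
        total += int(added) + int(deleted)
    return total


def is_reviewable(numstat_output: str, budget: int = MAX_CHANGED_LINES) -> bool:
    """True when the change stays within the line budget."""
    return changed_lines(numstat_output) <= budget


if __name__ == "__main__":
    sample = (
        "120\t30\tsrc/payments.py\n"
        "45\t5\ttests/test_payments.py\n"
        "-\t-\tdocs/diagram.png\n"
    )
    print(changed_lines(sample))  # 200
    print(is_reviewable(sample))  # True
```

In a pipeline you might feed it the output of `git diff --numstat origin/main...HEAD` and fail the job when `is_reviewable` returns `False` (the branch name is an assumption). Counting deletions as well as additions is a deliberate choice, since reviewers have to read both.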

Pros:

- Improves review quality: Reviewers can focus better on smaller changesets, leading to more thorough and effective reviews.

- Reduces cognitive load on reviewers: Smaller chunks of code are easier to understand and analyze, minimizing reviewer fatigue.

- Facilitates quicker integration into codebase: Faster reviews and approvals contribute to a more streamlined integration process.

- Increases probability of finding bugs: Focused reviews increase the likelihood of identifying potential issues and vulnerabilities.

Cons:

- May require restructuring work into smaller commits: Can add some overhead to the development process.

- Can be challenging for large features or refactoring: Requires careful planning and decomposition of large tasks.

- Might increase the number of pull requests to manage: Teams need effective processes to handle the potentially increased volume of pull requests.

Examples of successful implementation:

- Google: Google's engineering practices documentation notes that about 100 lines is usually a reasonable size for a change (a "CL" in Google's terminology), while 1,000 lines is usually too large.

- Microsoft: Studies at Microsoft have reported that the usefulness of review feedback declines as changes grow larger.

- Facebook: Facebook's Phabricator code review tool encourages atomic commits, further reinforcing the benefits of smaller changesets.

Tips for implementing this best practice:

- Aim for pull requests that can be reviewed in 30-60 minutes: This timeframe helps maintain focus and efficiency.

- Split large features into several logical components: Break down complex features into smaller, independent units of work.

- Separate refactoring from feature changes: Submitting these changes separately simplifies the review process and makes it easier to track the impact of each change.

- Submit independent changes separately: Unrelated changes, however small, belong in separate pull requests so that each review stays clear and focused.

Popularized by:

- Google's Engineering Practices documentation

- Martin Fowler's articles on continuous integration

This best practice is particularly beneficial for software development teams, DevOps engineers, Quality Assurance engineers, Enterprise IT leaders, tech startups, CI/CD engineers, and platform engineers striving to improve code quality, streamline development workflows, and enhance team collaboration. By adopting the practice of reviewing small chunks of code, teams can significantly improve the effectiveness of their code review process and build a stronger foundation for high-quality software.

2. Use Automated Code Analysis Tools

One of the most effective code review best practices is incorporating automated code analysis tools into your workflow. These tools, often referred to as static analysis tools, examine your code without executing it. They automatically scan for style violations, potential bugs, security vulnerabilities, and code smells, handling the mechanical aspects of code review. This frees human reviewers to concentrate on higher-level concerns such as design, architecture, logic, and overall maintainability, and it surfaces many issues early in the development cycle.

Automated code analysis tools offer several features that make them valuable in modern software development: automated detection of style violations (enforcing standards such as PEP 8 for Python or the Google Java Style Guide), static analysis that uncovers potential bugs (such as null pointer dereferences or resource leaks), consistency checking across the entire codebase, and integration with Continuous Integration/Continuous Deployment (CI/CD) pipelines. The CI/CD integration is particularly powerful because it runs the checks on every commit or pull request, catching regressions and maintaining code quality over time.
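To make "examine code without executing it" concrete, the sketch below uses Python's standard `ast` module to flag two well-known bug patterns: mutable default arguments and bare `except:` clauses. It is a toy illustration of what linters do, not a replacement for a real linter; the rule names are made up for this example.

```python
import ast
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    line: int
    rule: str
    message: str


MUTABLE_LITERALS = (ast.List, ast.Dict, ast.Set)


def find_issues(source: str) -> list[Finding]:
    """Parse `source` into a syntax tree (never executing it) and report issues."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Positional defaults plus keyword-only defaults (None means "no default").
            defaults = node.args.defaults + [d for d in node.args.kw_defaults if d is not None]
            for default in defaults:
                if isinstance(default, MUTABLE_LITERALS):
                    findings.append(Finding(default.lineno, "mutable-default",
                                            f"mutable default argument in '{node.name}'"))
        elif isinstance(node, ast.ExceptHandler) and node.type is None:
            findings.append(Finding(node.lineno, "bare-except",
                                    "bare 'except:' also catches KeyboardInterrupt and SystemExit"))
    # ast.walk is breadth-first, so sort to report findings in source order.
    return sorted(findings, key=lambda f: f.line)


SAMPLE = '''
def append_item(item, bucket=[]):
    bucket.append(item)
    return bucket

try:
    risky()
except:
    pass
'''

if __name__ == "__main__":
    for finding in find_issues(SAMPLE):
        print(f"line {finding.line}: [{finding.rule}] {finding.message}")
```

Note that `risky` is never defined, which does not matter: the sample is only parsed, never run. The check only recognizes literal `[]`, `{}`, and set displays, so a default such as `list()` slips through; production linters handle many more cases.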

Many leading tech companies successfully leverage automated code analysis tools. LinkedIn, for example, uses ESLint and Prettier in their JavaScript workflow to enforce consistent code style and identify potential JavaScript issues. Google maintains and uses Error Prone for Java, a static analysis tool that catches complex bugs and promotes best practices within their massive codebase. Similarly, Microsoft developed FxCop and StyleCop for .NET code analysis; FxCop has since been superseded by the Roslyn-based .NET analyzers. These real-world examples highlight the effectiveness and scalability of automated code analysis in diverse development environments.

Pros:

- Catches mechanical issues early: Identifies style violations, formatting problems, and potential bugs before they reach human reviewers, saving valuable time and effort.

- Enforces consistent code style: Promotes a uniform coding style across the team, making the codebase easier to read, understand, and maintain.

- Reduces trivial back-and-forth in reviews: Eliminates the need for reviewers to comment on minor stylistic issues, allowing them to focus on more substantial concerns.

- Finds bugs that might be missed in manual review: Automated tools can detect complex patterns and potential issues that might escape the notice of even experienced human reviewers.

Cons:

- May produce false positives: Static analysis tools can occasionally flag code as problematic even when it is not, requiring developers to investigate and dismiss these false positives.

- Requires setup and maintenance: Integrating these tools into the workflow requires initial setup and ongoing maintenance to ensure they remain effective.

- Can slow down the build process: If too many rules are enabled, the analysis process can add significant time to the build cycle.

Tips for Successful Implementation:

- Integrate linters and formatters in pre-commit hooks: This automatically checks code for style and formatting issues before each commit. Because local hooks can be skipped, pair them with the CI check below rather than relying on them alone.

- Configure CI to run static analysis on pull requests: This ensures that all code changes are analyzed before being merged, enforcing code quality standards across the team.

- Start with a minimal set of rules and expand gradually: Avoid overwhelming your team by starting with a small number of essential rules and gradually adding more as needed.

- Customize rules to match team conventions: Tailor the rules of your chosen tools to align with your specific coding style and project requirements.
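One common way to combine a gradual rollout with pull-request analysis in an existing codebase is to report only findings on lines the pull request actually changed, so legacy warnings don't flood every review. A minimal sketch, assuming the diff is produced with `git diff -U0` (zero context lines, so each hunk header's `+start,count` covers only changed lines), git's default `a/` and `b/` prefixes, and linter findings already converted to hypothetical `(path, line, message)` tuples:

```python
import re

# "+++ b/path" names the file in the new tree (assumes git's default b/ prefix).
FILE_HEADER = re.compile(r"^\+\+\+ b/(.+)$")
# "@@ -old_start[,old_count] +new_start[,new_count] @@"; a missing count means 1.
HUNK_HEADER = re.compile(r"^@@ -\d+(?:,\d+)? \+(\d+)(?:,(\d+))? @@")


def changed_line_map(diff_u0: str) -> dict[str, set[int]]:
    """Map each file to the new-file line numbers added or modified in a -U0 diff."""
    changed: dict[str, set[int]] = {}
    current = None
    for line in diff_u0.splitlines():
        if (m := FILE_HEADER.match(line)):
            current = m.group(1)
            changed.setdefault(current, set())
        elif current is not None and (m := HUNK_HEADER.match(line)):
            start = int(m.group(1))
            count = int(m.group(2)) if m.group(2) is not None else 1
            changed[current].update(range(start, start + count))  # count 0 = pure deletion
    return changed


def new_findings(findings, changed):
    """Keep only (path, line, message) findings that fall on changed lines."""
    return [f for f in findings if f[1] in changed.get(f[0], set())]


SAMPLE_DIFF = """\
diff --git a/app.py b/app.py
index 1111111..2222222 100644
--- a/app.py
+++ b/app.py
@@ -10,0 +11,2 @@ def handler():
+    data = load()
+    return data
@@ -40 +42 @@ def other():
-    x = 1
+    x = 2
"""

if __name__ == "__main__":
    findings = [
        ("app.py", 5, "line too long"),     # pre-existing code: not reported
        ("app.py", 12, "unused variable"),  # on a line this change added: reported
    ]
    print(new_findings(findings, changed_line_map(SAMPLE_DIFF)))
```

This keeps new code clean while the backlog of older warnings is paid down separately; once the backlog is gone, the filter can be dropped and the full rule set enforced.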

By following these best practices and leveraging automated code analysis tools, software development teams can significantly improve code quality, reduce review time, and foster a more efficient and collaborative development process. This approach is essential for any team aiming to deliver high-quality, maintainable software.

3. Establish Clear Code Review Guidelines

Effective code reviews hinge on shared understanding and consistent application of standards. Establishing clear code review guidelines is a crucial best practice for any software development team aiming for high-quality code and efficient review processes. This practice ensures that all reviewers are looking for the same things, provides clarity for code authors on expectations, and ultimately leads to more productive and less subjective feedback. This deserves its place in the list of code review best practices because it lays the groundwork for a consistent and constructive review culture.

This involves creating documented standards, checklists, and a defined review process that outlines what aspects of the code reviewers should focus on, acceptable coding patterns, and how feedback should be delivered. These guidelines should encompass everything from code style and functionality to security considerations and performance.

Features of robust code review guidelines:

- Documented review standards: A central document outlining all expectations for code quality and style.

- Checklists for common issues: Pre-defined lists of common problems to look for during reviews, like potential bugs, security vulnerabilities, or style inconsistencies.

- Clear acceptance criteria: Defining what constitutes "shippable" code, ensuring that all code meets a minimum standard before merging.

- Defined review process: A clear workflow for how code reviews are initiated, conducted, and concluded.

Pros:

- Consistency across different reviewers: Eliminates discrepancies in feedback and ensures everyone is evaluating code based on the same criteria.

- Sets clear expectations for code authors: Authors know what to expect during review, leading to better code quality from the start.

- Reduces subjective feedback: Focuses reviews on objective criteria rather than personal preferences.

- Onboards new team members faster: Provides new hires with a clear understanding of the team's coding standards and review process.

Cons:

- Requires maintenance as standards evolve: Guidelines need to be updated as technologies and best practices change.

- May become too rigid if not regularly revisited: Guidelines that are never updated can lock in outdated practices and discourage reasonable alternatives.

- Can be overdone, leading to bureaucracy: Finding the right balance between thoroughness and efficiency is crucial.

Examples of successful implementation:

- Square: Known for their comprehensive and publicly available code review guidelines, which cover various aspects of code quality and style.

- Dropbox: Also publishes their code review principles, providing insights into their approach to ensuring high-quality code.

- Google: Emphasizes readability in their code review guidelines, promoting a consistent and easily understandable codebase.

Actionable Tips:

- Include examples of good and bad feedback: Illustrate constructive criticism and highlight common pitfalls to avoid.

- Categorize guidelines (must-haves vs. nice-to-haves): Prioritize essential criteria and differentiate them from less critical suggestions.

- Update guidelines based on recurring issues: Regularly review and refine the guidelines based on the challenges and patterns observed during code reviews.

- Create language-specific checklists: Tailor checklists to the specific nuances and best practices of each programming language used.

- Review the guidelines periodically with the team: Ensure the guidelines remain relevant and reflect the team's current needs and priorities.
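A checklist that separates must-haves from nice-to-haves can also drive a simple approval rule. The sketch below is one hypothetical way to encode that split; the checklist items, the `Severity` labels, and the "approve"/"changes requested" verdicts are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    MUST = "must-have"     # an unmet item blocks the merge
    NICE = "nice-to-have"  # an unmet item becomes a non-blocking suggestion


@dataclass(frozen=True)
class ChecklistItem:
    question: str
    severity: Severity


# Hypothetical Python-specific checklist; replace with your team's own items.
PYTHON_CHECKLIST = [
    ChecklistItem("Do new public functions have tests?", Severity.MUST),
    ChecklistItem("Is external input validated before use?", Severity.MUST),
    ChecklistItem("Do names follow PEP 8 conventions?", Severity.NICE),
    ChecklistItem("Could any helper be simplified?", Severity.NICE),
]


def review_outcome(checklist, unmet):
    """Split unmet checklist questions into blocking and non-blocking lists.

    `unmet` is the set of questions the reviewer answered "no" to.
    """
    blocking = [i.question for i in checklist
                if i.question in unmet and i.severity is Severity.MUST]
    suggestions = [i.question for i in checklist
                   if i.question in unmet and i.severity is Severity.NICE]
    verdict = "changes requested" if blocking else "approve"
    return verdict, blocking, suggestions


if __name__ == "__main__":
    print(review_outcome(PYTHON_CHECKLIST, {"Could any helper be simplified?"}))
```

Keeping the severity next to each item means the guideline document and the review decision cannot drift apart: moving an item from NICE to MUST is a one-line change that everyone can see in review.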

When and why to use this approach:

This approach is essential for any team practicing code review, regardless of size or experience level. It's particularly beneficial when:

- Scaling the engineering team: As the team grows, consistent standards become even more crucial.

- Improving code quality: Clear guidelines help identify and address code quality issues more effectively.

- Reducing bugs and vulnerabilities: Checklists for common security and performance issues can help prevent problems before they reach production.

- Streamlining the review process: Clear expectations make reviews more efficient and less prone to disagreements.

By implementing clear code review guidelines, development teams can cultivate a culture of quality, improve code consistency, and ultimately deliver better software. This proactive approach to code review minimizes subjective feedback, streamlines the review process, and fosters a shared understanding of best practices within the team.

4. Focus on Knowledge Transfer and Learning

Code review best practices often focus on finding bugs and ensuring style consistency. While these are important aspects, truly effective code reviews go beyond mere defect detection. They embrace knowledge transfer and learning as a core principle, transforming the review process into a powerful tool for team growth and improved code quality. This approach shifts the dynamic from pure criticism to collaborative learning, ensuring that every review contributes to a shared understanding of the codebase and strengthens the team's collective expertise.

This method works by reframing the code review as a two-way conversation. Instead of simply pointing out errors, reviewers take the time to explain the reasoning behind their suggestions. This might involve discussing best practices, design patterns, or potential performance implications. By actively sharing their knowledge, senior developers mentor junior team members, and the entire team benefits from a distributed understanding of the code.

Features of a knowledge-focused code review:

- Explicit knowledge sharing goals: Reviewers consciously aim to teach and learn during the process.

- Documentation of decisions and patterns: Comments explain not just what to change, but why, creating a valuable record for future reference.

- Educational comments: Reviewers link to relevant resources, provide context, and explain underlying concepts.

- Cross-training opportunities: Rotating reviewers exposes team members to different parts of the codebase and different coding styles.

Pros:

- Reduces knowledge silos within teams: Shared understanding reduces the risk of single points of failure and makes the team more resilient.

- Accelerates junior developer growth: Provides targeted feedback and mentorship in a real-world context.

- Improves team's collective code quality: Shared knowledge and best practices lead to consistently higher standards.

- Creates shared understanding of the codebase: Easier maintenance, faster onboarding of new team members, and improved collaboration.

Cons:

- Can slow down the review process initially: Explaining concepts and providing context takes more time than simply pointing out errors.

- May feel unnecessary for simple changes: For trivial modifications, the overhead of a knowledge-focused review might outweigh the benefits.

- Requires patience from experienced developers: Mentoring and teaching requires effort and a willingness to invest in junior team members.

Examples of successful implementation:

- Etsy: Known for pairing senior and junior developers for code reviews, fostering direct mentorship and knowledge sharing.

- Spotify: Utilizes a "guild" system to facilitate knowledge sharing and cross-functional collaboration among engineers.

- Stack Overflow: Emphasizes teaching through code reviews, encouraging detailed explanations and constructive feedback.

Actionable tips for incorporating knowledge transfer into your code reviews:

- Ask questions rather than dictating changes: Encourage the author to think critically about their code.

- Explain why, not just what to change: Provide context and rationale for your suggestions.

- Link to resources for deeper understanding: Share relevant articles, documentation, or style guides.

- Document non-obvious decisions: Explain the reasoning behind complex logic or design choices.

- Rotate reviewers to spread knowledge: Expose team members to different areas of the codebase and different coding styles.
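Reviewer rotation can be as simple as a round-robin over the team that skips the change's author. A minimal sketch, assuming plain round-robin is acceptable; real teams often also weigh availability or code ownership, and the class and names here are hypothetical.

```python
class ReviewerRotation:
    """Round-robin reviewer assignment that never picks the change's author."""

    def __init__(self, team: list[str]):
        if len(set(team)) < 2:
            raise ValueError("rotation needs at least two distinct people")
        self._team = list(team)
        self._next = 0  # index of the next candidate in the rotation

    def assign(self, author: str) -> str:
        # One full pass is enough: with two or more distinct people,
        # someone other than the author is always found.
        for _ in range(len(self._team)):
            candidate = self._team[self._next]
            self._next = (self._next + 1) % len(self._team)
            if candidate != author:
                return candidate
        raise RuntimeError("no eligible reviewer")  # unreachable given the check above


if __name__ == "__main__":
    rotation = ReviewerRotation(["ana", "ben", "chloe"])
    print([rotation.assign(author) for author in ["ana", "ben", "chloe", "ana"]])
```

Because the pointer advances on every assignment, each person reviews roughly the same number of changes over time, which spreads exposure to different parts of the codebase.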

This approach to code review deserves its place in the list of best practices because it transcends the immediate goal of finding defects and invests in the long-term health and productivity of the team. By prioritizing knowledge transfer and learning, teams can build a shared understanding of the codebase, accelerate the growth of junior developers, and cultivate a culture of continuous improvement. This ultimately leads to higher-quality code, more efficient development processes, and a more engaged and knowledgeable team, which makes it valuable for software development teams, DevOps engineers, Quality Assurance engineers, Enterprise IT leaders, tech startups, CI/CD engineers, and platform engineers seeking to optimize their development workflow.

5. Implement a Two-Level Review Process

A crucial code review best practice for maintaining code quality and architectural consistency is implementing a two-level review process. This approach divides the review into two distinct stages, each with specific objectives and, ideally, different reviewers. This separation allows for both a detailed examination of the code's implementation and a higher-level assessment of its alignment with overall system architecture and design goals. The method balances the need for meticulous code scrutiny with the broader perspective of architectural integrity, a critical factor in successful software development.

How it Works:

The two-level review process operates on the principle of separating concerns. The first level, often referred to as the technical review, primarily focuses on the code itself. Reviewers at this stage meticulously check for:

- Code Correctness: Does the code function as intended and produce the expected results? Are there any logic errors, bugs, or potential edge cases that have been overlooked?

- Code Style and Readability: Is the code clean, well-formatted, and easy to understand? Does it adhere to established coding standards and style guidelines?

- Test Coverage: Are there sufficient tests to ensure the code's reliability and prevent regressions? Are the tests well-written and comprehensive?

The second level, often called the architectural review, takes a broader perspective. Here, the focus shifts from the individual lines of code to the overall impact on the system architecture. Reviewers at this stage consider:

- Architectural Alignment: Does the code adhere to the established architectural principles and patterns? Does it introduce any unintended dependencies or complexities?

- Design Consistency: Is the code consistent with the overall system design and does it contribute to a cohesive and maintainable codebase?

- Scalability and Performance: Does the code introduce any potential performance bottlenecks or scalability issues? Does it impact the system's overall resilience and reliability?

Examples of Successful Implementation:

Several leading tech companies employ variations of the two-level review process:

- Amazon: Amazon's engineering practices often incorporate a two-tier review system where senior developers or designated architects act as final approvers, focusing on architectural considerations after an initial technical review.

- Microsoft: For significant changes, Microsoft utilizes architecture review boards to ensure alignment with overarching architectural strategies and prevent fragmentation of the system design.

- Netflix: Netflix's multi-level review process for service changes ensures both code quality and architectural consistency across its complex microservices architecture.

Tips for Implementation:

- Define Clear Responsibilities: Clearly delineate the responsibilities of reviewers at each level to avoid confusion and ensure efficient reviews.

- Leverage Seniority for Architectural Reviews: Utilize senior developers or architects with a deep understanding of the system architecture for the second-level review.

- Automate the First Level: Where possible, automate the first-level technical review using linters, static analysis tools, and automated test suites. This frees up human reviewers to focus on more complex aspects.

- Risk-Based Approach: Consider the risk level of the change when deciding if both levels of review are necessary. For minor changes or bug fixes, a single-level review may suffice.
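The risk-based tip can be written down as an explicit routing rule so everyone knows when the architectural review applies. The path prefixes, the 300-line threshold, and the `Change` fields below are hypothetical placeholders for whatever your team treats as architecturally significant.

```python
from dataclasses import dataclass

# Hypothetical: changes under these paths always get an architectural review.
ARCHITECTURE_SENSITIVE = ("api/", "schemas/", "infra/")
LARGE_CHANGE_LINES = 300  # assumption: larger changes also get level two


@dataclass(frozen=True)
class Change:
    paths: tuple[str, ...]
    changed_lines: int
    adds_dependency: bool = False


def required_review_levels(change: Change) -> list[str]:
    """Level one always applies; level two only for higher-risk changes."""
    levels = ["technical"]
    # str.startswith accepts a tuple and matches any of its prefixes.
    touches_sensitive = any(p.startswith(ARCHITECTURE_SENSITIVE) for p in change.paths)
    if touches_sensitive or change.adds_dependency or change.changed_lines > LARGE_CHANGE_LINES:
        levels.append("architectural")
    return levels


if __name__ == "__main__":
    print(required_review_levels(Change(("src/utils/strings.py",), 20)))
    print(required_review_levels(Change(("api/orders.py",), 15)))
```

A small bug fix in a utility module then goes through the technical review only, while anything touching public APIs, schemas, or dependencies is routed to an architect as well.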

Pros and Cons:

Pros:

- Balances Detailed Review with Big-Picture Thinking: Ensures both the minutiae of the code and the broader architectural implications are considered.

- Leverages Different Expertise Appropriately: Allows developers with specialized skills to focus on the aspects of the review that best suit their expertise.

- Ensures Architectural Consistency: Promotes adherence to architectural principles and helps limit the accumulation of technical debt.

- Distributes Review Workload: Shares the review burden across multiple individuals, reducing the load on any single reviewer.

Cons:

- Increases Process Complexity: Adds an extra layer to the code review process, requiring more coordination and potentially increasing overhead.

- May Extend the Review Timeline: The two-level process can lengthen the overall review time, especially if not managed efficiently.

- Requires Coordination Between Reviewers: Effective communication and coordination between the reviewers at each level are essential for a smooth and efficient process.

Why this approach deserves a place in the list of code review best practices:

The two-level review process addresses a common challenge in software development: balancing the need for detailed code scrutiny with the importance of maintaining a consistent and robust architecture. By separating these concerns, this approach allows teams to achieve both high-quality code and architectural integrity, leading to more maintainable, scalable, and reliable software systems. It provides a structured way to leverage different expertise within the team and promotes a more thorough and comprehensive approach to code review.

6. Provide Constructive, Specific Feedback

Effective code reviews hinge on the quality of feedback provided. Constructive, specific feedback is crucial for driving improvement and fostering a positive collaborative environment. This approach emphasizes focusing on the code itself, not the developer who wrote it, and offering concrete suggestions for improvement, supported by clear explanations. This facilitates learning and makes it more likely the feedback will be implemented. It's a cornerstone of effective code review best practices because it helps teams build better software and stronger working relationships.

This method works by shifting the focus from critiquing the individual to analyzing the code's functionality, style, and maintainability. Instead of saying "This is messy code," a constructive reviewer might say, "We could improve readability by extracting this logic into a separate helper function. This would also make it easier to test." This approach provides a specific action and explains the reasoning behind the suggestion.
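To make the reviewer's suggestion concrete, here is a hypothetical before/after in Python. The pricing rule and all names are invented for illustration; the point is that once the rule lives in its own function, it can be tested directly without building a whole checkout.

```python
# Before: the discount rule is buried inside the checkout calculation.
def checkout_total_before(items, customer):
    total = sum(price * qty for price, qty in items)
    if customer.get("is_member") and total >= 100:
        total *= 0.9
    elif total >= 200:
        total *= 0.95
    return round(total, 2)


# After: the rule lives in a small, named helper that can be tested on its own.
def discount_rate(subtotal: float, is_member: bool) -> float:
    """Return the fraction of the subtotal the customer pays."""
    if is_member and subtotal >= 100:
        return 0.9
    if subtotal >= 200:
        return 0.95
    return 1.0


def checkout_total(items, customer) -> float:
    """Same behavior as checkout_total_before, expressed via the helper."""
    subtotal = sum(price * qty for price, qty in items)
    return round(subtotal * discount_rate(subtotal, customer.get("is_member", False)), 2)
```

The refactoring preserves behavior, so a reviewer can ask for it as a readability change without worrying about functional impact, and `discount_rate` can now be covered by short, focused tests.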

Features of Constructive Feedback:

- Feedback focused on code, not coder: Depersonalizes the review process, making it less likely to be perceived as a personal attack.

- Specific actionable suggestions: Provides clear guidance on how to improve the code, making it easier for the author to implement changes.

- Explanatory comments: Explain the rationale behind suggestions, fostering understanding and promoting learning.

- Positive reinforcement of good practices: Recognizing and appreciating well-written code encourages developers to maintain high standards.

Pros:

- Creates a positive review culture: Encourages open communication and collaboration within the team.

- Increases likelihood of feedback implementation: Actionable suggestions are more likely to be adopted than vague criticisms.

- Reduces defensive reactions: Focusing on the code rather than the person minimizes defensiveness and encourages a growth mindset.

- Facilitates learning and improvement: Explanatory comments help developers understand the reasoning behind suggestions, leading to improved coding skills.

Cons:

- Takes more time to craft thoughtful feedback: Requires reviewers to carefully consider their comments and provide detailed explanations.

- Might require practice for reviewers used to blunt communication: Shifting from direct criticism to constructive feedback can be a learning curve.

- Can be challenging in cross-cultural teams: Nuances in communication styles can sometimes make it more difficult to deliver constructive feedback effectively.

Examples of Successful Implementation:

- Google: Google's code review guidelines ask reviewers to be courteous, to comment on the code rather than the developer, and to explain the reasoning behind their comments.

- Atlassian: Promotes the "sandwich method" for feedback, starting with positive feedback, followed by constructive criticism, and ending with another positive note.

- GitLab: Provides detailed guidelines in their handbook for conducting constructive code reviews, emphasizing clarity, specificity, and a focus on the code.

Actionable Tips:

- Use "we" instead of "you" in comments: Creates a sense of shared responsibility and collaboration.

- Explain why a change would improve the code: Helps the author understand the reasoning behind the suggestion.

- Phrase feedback as questions when appropriate: Encourages discussion and avoids sounding overly critical.

- Acknowledge good code and clever solutions: Reinforces positive behaviors and fosters a positive review environment.

- Be explicit about which feedback is blocking vs. suggestions: Clarifies priorities and helps the author focus on essential changes.
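Blocking versus non-blocking status is easiest to act on when it is machine-readable. The sketch below assumes a hypothetical team convention, similar in spirit to the Conventional Comments format, where comments start with a label such as `issue:` or `nitpick:` and may carry an explicit `(blocking)` or `(non-blocking)` decoration; the default for each label is an assumption to adjust.

```python
import re

# Hypothetical convention: "<label> [(blocking|non-blocking)]: <comment text>".
COMMENT = re.compile(
    r"^(?P<label>[a-z]+)\s*(?:\((?P<decoration>blocking|non-blocking)\))?\s*:\s*(?P<body>.+)$",
    re.IGNORECASE | re.DOTALL,
)
# Assumed default for each label when no explicit decoration is given.
BLOCKING_BY_DEFAULT = {
    "issue": True,
    "question": False,
    "suggestion": False,
    "nitpick": False,
    "praise": False,
}


def classify(comment: str) -> str:
    """Return 'blocking', 'non-blocking', or 'unlabeled' for one review comment."""
    match = COMMENT.match(comment.strip())
    if match is None:
        return "unlabeled"
    if match.group("decoration"):
        return match.group("decoration").lower()  # an explicit decoration wins
    label = match.group("label").lower()
    if label not in BLOCKING_BY_DEFAULT:
        return "unlabeled"
    return "blocking" if BLOCKING_BY_DEFAULT[label] else "non-blocking"


def ready_to_merge(comments: list[str]) -> bool:
    """A change can merge once no open comment is blocking."""
    return all(classify(c) != "blocking" for c in comments)


if __name__ == "__main__":
    open_comments = [
        "praise: clear test names",
        "nitpick: trailing whitespace on line 12",
        "issue: the file handle is never closed",
    ]
    print([classify(c) for c in open_comments])
    print(ready_to_merge(open_comments))  # False until the issue is resolved
```

Treating unlabeled comments as non-blocking is a policy choice; a stricter team might instead ask reviewers to add a label before the comment counts.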

When and Why to Use This Approach:

Constructive, specific feedback should be the standard approach for all code reviews. This ensures a consistent and positive experience for all team members, promotes continuous improvement, and helps build a culture of learning and collaboration. This approach is rooted in frameworks like Radical Candor by Kim Scott and is exemplified in Google's engineering culture documentation. By adopting this code review best practice, teams can improve code quality, accelerate development cycles, and foster a more collaborative and supportive work environment.

7. Prioritize Testing in Code Reviews

Prioritizing testing in code reviews is a crucial best practice for ensuring high-quality, maintainable code. This approach elevates test review to a first-class part of the code review process, making it an essential step rather than an afterthought. By reviewing tests before diving into the implementation code, reviewers gain valuable context, verify that the code is testable, and ensure it is correctly validated against requirements. This practice makes each review more effective and ultimately leads to more robust and reliable software.

How it Works:

The core principle is simple: review the tests first. Before examining the implementation details of a code change, the reviewer focuses on the accompanying tests. This includes unit tests, integration tests, and any other relevant test cases. The reviewer scrutinizes the tests for completeness, correctness, and clarity, ensuring they adequately cover the code's functionality and edge cases. Only after thoroughly reviewing and approving the tests does the reviewer move on to inspect the implementation itself.

Features of Test-First Code Review:

- Test-first review approach: Tests are reviewed before the implementation code.

- Emphasis on test coverage: Ensuring all critical code paths are covered by tests.

- Assessment of test quality and edge cases: Evaluating the effectiveness of tests in catching potential bugs and handling boundary conditions.

- Verification of test-code alignment: Confirming that tests accurately reflect the intended behavior of the code.

Pros:

- Ensures proper test coverage: By focusing on tests first, reviewers are more likely to identify gaps in test coverage.

- Validates requirements understanding: Well-written tests demonstrate a clear understanding of the requirements and how the code should behave.

- Improves design through testability requirements: Writing testable code often leads to better design choices, resulting in more modular and maintainable code.

- Reduces future regressions: Thorough testing minimizes the risk of introducing bugs and regressions in subsequent code changes.

Cons:

- Requires discipline to review tests thoroughly: Teams need to commit to dedicating sufficient time and effort to test review.

- May extend review time: Reviewing tests adds another step to the code review process, potentially increasing the overall review time.

- Can be challenging for teams new to testing: Teams with limited testing experience may find it difficult to effectively review tests.

Examples of Successful Implementation:

- Facebook: Facebook is known for its strong emphasis on testing and code review, with tests playing a central role in their development process.

- ThoughtWorks: As proponents of test-driven development (TDD), ThoughtWorks integrates testing deeply into their code review practices.

- Netflix: Netflix prioritizes testing for reliability, ensuring their systems can withstand failures and continue to provide uninterrupted service.

Actionable Tips:

- Review tests before implementation code: This provides context and ensures the code is testable.

- Check that tests fail when they should: Verify that tests correctly identify incorrect behavior.

- Verify edge cases are covered: Ensure tests handle boundary conditions and unusual inputs.

- Look for meaningful assertions, not just coverage: Focus on the quality of the assertions, not just the number of lines covered.

- Ensure tests are readable and maintainable: Tests should be easy to understand and modify.
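The tips about meaningful assertions and tests that fail when they should can be checked with a hand-made "mutant": a deliberately broken copy of the code. In this sketch (all names hypothetical, tests written as plain pytest-style `assert`s), the weak test runs the code but asserts nothing, so it misses an off-by-one bug at the upper bound that the edge-case test catches. Mutation-testing tools automate this idea.

```python
def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the inclusive range [low, high]."""
    return max(low, min(value, high))


def clamp_mutant(value: float, low: float, high: float) -> float:
    """Deliberately broken copy: off by one at the upper bound."""
    return max(low, min(value, high - 1))


def weak_test(fn) -> None:
    # Runs the code (so coverage tools count the line) but asserts nothing.
    fn(5, 0, 10)


def edge_case_test(fn) -> None:
    assert fn(5, 0, 10) == 5    # inside the range
    assert fn(-1, 0, 10) == 0   # below the lower bound
    assert fn(11, 0, 10) == 10  # above the upper bound
    assert fn(10, 0, 10) == 10  # exactly on the upper bound


def catches(test, implementation) -> bool:
    """True if the test fails against the implementation, i.e. it detects the bug."""
    try:
        test(implementation)
    except AssertionError:
        return True
    return False


if __name__ == "__main__":
    print(catches(edge_case_test, clamp))         # False: correct code passes
    print(catches(weak_test, clamp_mutant))       # False: the bug slips through
    print(catches(edge_case_test, clamp_mutant))  # True: the bug is detected
```

Both tests give `clamp` the same line coverage, which is why coverage alone says little about whether the assertions are meaningful.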

Why This Item Deserves Its Place in the List:

Prioritizing testing in code reviews is a fundamental practice that significantly impacts code quality and maintainability. It reinforces a culture of quality and helps prevent bugs early in the development cycle, saving time and resources in the long run. This practice aligns with established software development principles like Test-Driven Development (popularized by Kent Beck) and Clean Code principles (advocated by Robert C. Martin), further solidifying its importance in code review best practices. By focusing on tests, teams can build more robust, reliable, and maintainable software, ultimately leading to greater success.

8. Set Reasonable Time Expectations

Time is a precious commodity in software development. One of the most effective code review best practices involves setting reasonable time expectations for the process. Establishing clear expectations for both review completion time and reviewer responsiveness prevents bottlenecks in the development pipeline, keeps projects moving forward, and fosters a more collaborative and less frustrating environment. This practice is crucial for maintaining development momentum while still allowing for thorough analysis and upholding code quality.

This approach revolves around defining clear timeframes within which code reviews should be completed. It's not about rushing the review process, but about creating a predictable and efficient flow. It rests on several key elements:

- Defined Time Windows for Review Completion: Establish Service Level Agreements (SLAs) for different types of code changes. Small, non-critical changes might have a shorter review window (e.g., 24 hours) while larger, more complex changes might have a longer window (e.g., 48-72 hours).

- Clear Escalation Paths for Blocked Reviews: Delays happen. Define a clear process for what happens when a review is blocked, perhaps due to reviewer unavailability or disagreements on the code. This could involve escalating to a senior engineer or having a designated backup reviewer.

- Balanced Workload Distribution: Ensure that code review requests are distributed fairly among team members. Overburdening one developer with reviews while others have free time creates an uneven and inefficient process.

- Respect for Reviewer Time Constraints: Code review is important, but it's not the only task developers have. Encourage developers to block off specific time on their calendars for reviews, preventing interruptions and ensuring focused review time.
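A team that wants to automate its SLA and escalation rules might encode them roughly as follows. This is a minimal Python sketch under stated assumptions: the two tiers, the 400-line cutoff (borrowed from the small-PR guideline in practice 1), and the 24-hour and 72-hour windows (taken from the example ranges above) are placeholders, and the names `review_sla` and `needs_escalation` are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Placeholder SLA windows per change tier (assumed values; tune for your team).
REVIEW_SLAS = {
    "small": timedelta(hours=24),
    "large": timedelta(hours=72),
}

# Assumed cutoff, in changed lines, between "small" and "large" changes.
SMALL_CHANGE_MAX_LINES = 400


def review_sla(lines_changed: int) -> timedelta:
    """Return the review window that applies to a change of this size."""
    tier = "small" if lines_changed <= SMALL_CHANGE_MAX_LINES else "large"
    return REVIEW_SLAS[tier]


def needs_escalation(requested_at: datetime, lines_changed: int, now: datetime) -> bool:
    """True once a review has waited longer than its SLA, signalling escalation."""
    return now - requested_at > review_sla(lines_changed)


# Example: a 120-line change requested 30 hours ago has exceeded its 24-hour window.
requested = datetime(2024, 5, 6, 9, 0, tzinfo=timezone.utc)
print(needs_escalation(requested, 120, requested + timedelta(hours=30)))  # True
```

The check counts wall-clock hours. A distributed team might instead count business hours in each reviewer's time zone, so a request sent late on a Friday does not escalate over the weekend.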

Why This Matters in Code Review Best Practices

This practice deserves its place in the list of code review best practices because it directly addresses a common pain point: delays. Unclear expectations often lead to reviews languishing in the queue, frustrating developers and stalling project progress. Setting time expectations fosters a more efficient, respectful, and predictable development flow.

Examples of Successful Implementation:

- Google: Google's published engineering practices state that one business day is the maximum time it should take to respond to a code review request, which keeps feedback and iteration fast.

- Shopify: Shopify utilizes a "review buddies" system, pairing developers to ensure timely reviews and provide a consistent feedback loop.

- Basecamp: Basecamp prioritizes asynchronous communication and code review, ensuring reviews happen without disrupting deep work, but still maintains momentum by setting clear expectations on response times and priorities.

Actionable Tips for Your Team:

- Set Different SLAs: Don't treat all code changes equally. Establish different SLAs for small bug fixes, feature additions, and major refactoring projects.

- Round-Robin Assignments: Use a round-robin system or automated tools to distribute review requests evenly among team members.

- Block Calendar Time: Encourage developers to schedule specific times for code review, treating it as a dedicated task.

- Consider Time Zones: For distributed teams, account for time zone differences when setting expectations.

- Review Swap Agreements: Encourage developers to make "review swap" agreements amongst themselves, fostering a sense of shared responsibility.
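Round-robin assignment is simple to automate, and some code hosts provide built-in auto-assignment. The minimal Python sketch below only illustrates the idea: the `RoundRobinAssigner` class and the reviewer names are hypothetical. It rotates through the team in a fixed order and skips the author of the change.

```python
from itertools import cycle


class RoundRobinAssigner:
    """Hands out review requests in a fixed rotation, skipping the change's author."""

    def __init__(self, reviewers: list[str]) -> None:
        if len(set(reviewers)) < 2:
            raise ValueError("need at least two distinct reviewers")
        self._size = len(reviewers)
        self._rotation = cycle(reviewers)

    def assign(self, author: str) -> str:
        # Any run of len(reviewers) consecutive picks covers the whole team,
        # so with two or more distinct reviewers someone other than the
        # author is always found.
        for _ in range(self._size):
            candidate = next(self._rotation)
            if candidate != author:
                return candidate
        raise RuntimeError("no eligible reviewer found")


assigner = RoundRobinAssigner(["alice", "bob", "carol"])
print([assigner.assign(author) for author in ["dave", "dave", "carol", "dave"]])
# ['alice', 'bob', 'alice', 'bob']
```

A plain rotation ignores current workload. A load-balanced variant would instead pick the eligible reviewer with the fewest open requests.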

Pros:

- Prevents development bottlenecks

- Creates a predictable development flow

- Reduces frustration stemming from waiting on reviews

- Balances review quality with efficiency

Cons:

- May create pressure that inadvertently reduces review quality if SLAs are too aggressive

- Can be difficult to maintain stringent time expectations during crunch periods

- Might not account for differences in review complexity

Popularized By:

The emphasis on setting reasonable time expectations for code review aligns with:

- Agile methodology: Agile's focus on continuous flow and rapid iteration necessitates timely feedback loops.

- DevOps principles: DevOps emphasizes removing bottlenecks and streamlining the entire software delivery process.

By incorporating this practice into your workflow, you can significantly improve the effectiveness and efficiency of your code review process, ultimately leading to higher quality software and a more productive development team.

9. Use Face-to-Face Reviews for Complex Changes

Asynchronous code reviews are a cornerstone of modern software development, providing a documented and traceable way to improve code quality. However, when dealing with intricate architectural changes, substantial feature additions, or particularly complex logic, asynchronous reviews can sometimes fall short. The back-and-forth nature of written comments can become inefficient, leading to misunderstandings and extended review cycles. This is where face-to-face code reviews come into play, offering a valuable supplement to traditional asynchronous approaches. This practice allows for real-time clarification, fostering deeper understanding and more efficient collaboration, which is why it deserves a place among code review best practices.

Face-to-face code reviews, sometimes referred to as synchronous code reviews, involve scheduled sessions where team members gather to discuss code changes in real time. These sessions leverage screen sharing and walkthroughs, allowing the code author to explain the changes and address questions immediately. This interactive format clarifies complex logic quickly, reduces the potential for miscommunication inherent in written comments, and promotes a shared understanding of the codebase. When paired with written notes or a recording, this approach keeps the documentation benefits of traditional written reviews while adding the efficiency of real-time communication.

Features of effective face-to-face code reviews include:

- Scheduled review sessions: Dedicated time slots ensure focused discussion and avoid interruptions.

- Screen sharing and walkthrough capabilities: Enable clear visualization of the code changes and their impact.

- Real-time Q&A opportunities: Facilitate immediate clarification and deeper understanding.

- Recorded discussions: Provide valuable documentation for future reference and for team members who could not attend.

Pros:

- Reduces back-and-forth comments, shortening the review cycle.

- Clarifies complex logic quickly and effectively.

- Builds stronger team relationships through collaboration.

- Facilitates deeper technical discussions and knowledge sharing.

Cons:

- Requires scheduling coordination, which can be challenging.

- May be difficult for geographically distributed teams.

- Can be time-consuming, especially with multiple participants.

Several organizations have successfully implemented face-to-face code reviews. Palantir utilizes "critic" sessions for complex changes, while Facebook employs "code review jams" for major features. Stripe combines written reviews with selective pair reviews, targeting synchronous discussions for critical parts of the codebase. These examples highlight the diverse ways face-to-face reviews can be integrated into existing workflows.

Tips for Effective Face-to-Face Code Reviews:

- Prepare and share code context before the meeting: This allows reviewers to familiarize themselves with the changes beforehand, leading to a more productive discussion.

- Set a clear agenda for the review session: Focus the discussion and ensure all key aspects are covered.

- Document key decisions after the meeting: Capture important insights and action items for follow-up.

- Use video for distributed teams: Enhance communication and build rapport even when geographically separated.

- Consider recording sessions for team members who couldn't attend: Ensure everyone has access to the discussion and its outcomes.
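One way to prepare and share context is to turn the diff into a short agenda that puts the largest changes first. The hypothetical `build_agenda` helper below is a minimal Python sketch that parses the tab-separated output of `git diff --numstat` (added lines, deleted lines, path; binary files report `-` for both counts).

```python
def build_agenda(numstat_output: str, top_n: int = 5) -> list[str]:
    """Turn `git diff --numstat` output into agenda items, largest changes first."""
    entries = []
    for line in numstat_output.strip().splitlines():
        added, deleted, path = line.split("\t", 2)
        if added == "-" or deleted == "-":
            # Binary files have no line counts; list them last.
            entries.append((0, path, "binary file"))
            continue
        total = int(added) + int(deleted)
        entries.append((total, path, f"+{added}/-{deleted}"))
    # Sort by total lines touched, biggest first.
    entries.sort(key=lambda entry: entry[0], reverse=True)
    return [f"{path} ({detail})" for _, path, detail in entries[:top_n]]
```

For example, you could feed it the output of `git diff --numstat main...HEAD`, assuming your base branch is named `main`, and paste the resulting list into the meeting invite so reviewers know where the session will spend most of its time.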

Face-to-face code reviews are particularly valuable when dealing with significant changes, complex logic, or critical parts of the system. While asynchronous reviews remain a crucial element of code review best practices, incorporating synchronous discussions for complex scenarios significantly enhances understanding, reduces miscommunication, and promotes a more collaborative and efficient development process. The foundation for this practice can be found in concepts like pair programming from Extreme Programming and code inspection methods developed by Michael Fagan, demonstrating its enduring relevance in software engineering.

10. Track and Measure Code Review Metrics

Effective code review is crucial for maintaining high code quality and fostering a collaborative development environment. One of the best practices for optimizing your code review process is to track and measure key metrics. This allows teams to move beyond subjective opinions and use objective data to identify bottlenecks, refine workflows, and ultimately improve the quality of their software.

Tracking and measuring code review metrics involves collecting data on various aspects of the review process and analyzing it to identify trends and patterns. This provides valuable insights into the effectiveness of your code review strategy and highlights areas for improvement. It's about understanding not just that a review happened, but how it happened and what impact it had.

Features to Track:

- Review Turnaround Time: How long does it take for a code review to be completed? Long turnaround times can delay releases and frustrate developers.

- Defect Detection Rates: How many defects are identified during code review? This metric helps assess the effectiveness of the review process in catching bugs early.

- Comment Volume and Types: Analyzing the number and nature of comments (e.g., clarifying questions, suggestions for improvement, bug reports) can provide insights into the thoroughness of reviews and areas where developers might need more support.

- Author Response Patterns: How quickly and effectively do authors respond to review comments? This can highlight areas where communication can be improved.

- Review Participation Distribution: Is the review workload evenly distributed across the team, or are a few individuals carrying the burden? Uneven distribution can lead to burnout and inconsistent review quality.
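The first and last metrics in this list can be computed from a simple export of completed reviews. In this minimal Python sketch, the `ReviewRecord` fields are assumptions about what your code host exposes, not a real API. Turnaround is summarized with the median because a few stalled reviews would skew a mean.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class ReviewRecord:
    """One completed review; fields are assumed, not a real code-host API."""
    reviewer: str
    requested_at: datetime
    completed_at: datetime


def median_turnaround_hours(records: list[ReviewRecord]) -> float:
    """Median hours from review request to completion (records must be non-empty)."""
    hours = [(r.completed_at - r.requested_at).total_seconds() / 3600 for r in records]
    return median(hours)


def participation_share(records: list[ReviewRecord]) -> dict[str, float]:
    """Fraction of all completed reviews handled by each reviewer."""
    counts = Counter(r.reviewer for r in records)
    total = sum(counts.values())
    return {name: count / total for name, count in counts.items()}
```

If one name holds most of the participation share week after week, that is the uneven distribution described above.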

Pros:

- Provides objective data for process improvement: Instead of relying on gut feelings, teams can use data to identify areas for improvement.

- Identifies bottlenecks in the review workflow: Metrics can pinpoint slowdowns in the process, such as long wait times for reviewers or excessive back-and-forth in comment threads.

- Helps balance review workload across team: Data on review participation can help ensure that the workload is distributed fairly, preventing burnout and promoting shared ownership of code quality.

- Shows trends in code quality over time: Tracking defect detection rates over time can reveal whether code quality is improving or declining.

Cons:

- Can lead to counterproductive incentives if misused: If metrics are tied to performance evaluations, developers might be tempted to prioritize speed over thoroughness or inflate the number of comments they make.

- Requires careful interpretation to be meaningful: Raw numbers alone don't tell the whole story. It's crucial to analyze the context behind the metrics and avoid drawing hasty conclusions.

- May create unnecessary pressure if overemphasized: Focusing too heavily on metrics can create a stressful environment and discourage open communication during code review.

Examples of Successful Implementation:

- Microsoft: Has conducted extensive research and analysis on code review effectiveness at scale, using data to refine their internal processes and tools.

- Google: Utilizes internal tooling to track and analyze review metrics, enabling data-driven decisions about their code review practices.

- GitLab: Demonstrates transparency by publicly sharing data about their review cycle time, providing valuable benchmarks for other organizations.

Actionable Tips:

- Focus on trends rather than absolute numbers: Look for patterns and changes over time rather than fixating on individual data points.

- Use metrics for improvement, not performance evaluation: Emphasize the use of metrics for process optimization and learning, not for judging individual developers.

- Combine quantitative with qualitative assessment: Supplement data with feedback from developers to gain a holistic understanding of the review process.

- Look for patterns in defects found during review: Identify recurring types of defects to address underlying issues in coding practices or training.

- Periodically review the metrics with the team: Discuss the data with the team to foster shared understanding and identify collaborative solutions for improvement.
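Following the first and fourth tips, the sketch below looks at week-over-week movement rather than single data points and surfaces recurring defect categories. It is a minimal Python illustration; the input shapes, the function names, and the defect labels are hypothetical and would come from your own review data and taxonomy.

```python
from collections import Counter, defaultdict
from datetime import datetime
from statistics import median


def weekly_median_turnaround(reviews: list[tuple[datetime, float]]) -> dict[tuple[int, int], float]:
    """Group (completed_at, turnaround_hours) pairs by ISO (year, week); return each week's median."""
    by_week: dict[tuple[int, int], list[float]] = defaultdict(list)
    for completed_at, hours in reviews:
        year, week, _ = completed_at.isocalendar()
        by_week[(year, week)].append(hours)
    # Sorted so the weeks read chronologically.
    return {week: median(values) for week, values in sorted(by_week.items())}


def top_defect_categories(labels: list[str], n: int = 3) -> list[tuple[str, int]]:
    """Most frequent defect labels tagged on review comments, most common first."""
    return Counter(labels).most_common(n)
```

A steadily rising weekly median is a prompt for a team conversation, not a verdict on any individual, and a label that keeps topping the defect list points to a coding practice or training gap worth addressing.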

Why This Deserves Its Place in the List:

Tracking and measuring code review metrics is a fundamental best practice because it enables data-driven decision-making, promotes continuous improvement, and helps teams achieve higher levels of code quality. By adopting this practice, development teams can move beyond subjective opinions and use objective data to optimize their code review process and build better software. This aligns with the core principles promoted by DORA's research on high-performing engineering teams and Microsoft Research papers on code review efficiency, further solidifying its importance in the broader context of software development best practices.

10-Point Comparison of Code Review Best Practices

| Technique | Complexity 🔄 | Resources ⚡ | Outcomes 📊 | Advantages ⭐ | Tips 💡 |
|---|---|---|---|---|---|
| Review Small Chunks of Code at a Time | Medium – requires splitting large commits | Low to Moderate – organizational effort | Improves review quality and speeds up feedback cycle | Reduces cognitive load and increases bug detection | Keep PRs under 400 lines and focus on single functionality |
| Use Automated Code Analysis Tools | Medium – setup and integration needed | Moderate to High – tooling and CI integration | Ensures consistent style and catches mechanical issues | Automates routine checks and finds hidden bugs | Start with essential rules and integrate with your CI |
| Establish Clear Code Review Guidelines | Low-Medium – involves documentation | Low – mostly knowledge-based effort | Creates consistency and clear expectations | Speeds onboarding and reduces subjective feedback | Update guidelines periodically and include concrete examples |
| Focus on Knowledge Transfer and Learning | Medium – requires cultural adjustment | Moderate – dedicates time to mentoring | Promotes team learning and reduces knowledge silos | Enhances team collaboration and growth | Use questions, explanations, and rotate reviewer pairings |
| Implement a Two-Level Review Process | High – coordination between review stages | High – involves additional expert involvement | Balances detailed checks with architectural oversight | Leverages specialized expertise and ensures design consistency | Clearly define responsibilities and automate initial checks |
| Provide Constructive, Specific Feedback | Low-Medium – demands strong communication | Low – no extra tooling required | Fosters a positive review culture and better code quality | Increases actionable insights and reduces defensive responses | Use inclusive language and be explicit about suggestions |
| Prioritize Testing in Code Reviews | Medium – emphasizes dual review of tests/code | Moderate – extra time to assess test quality | Improves test coverage and minimizes future regressions | Validates design through testability and detailed checks | Review tests first and ensure edge cases are properly covered |
| Set Reasonable Time Expectations | Low – involves setting clear policies | Low – scheduling and process guidelines | Creates predictable workflows and avoids bottlenecks | Balances review speed with quality | Define clear SLAs and adjust for review complexity |
| Use Face-to-Face Reviews for Complex Changes | High – requires coordination for meetings | Moderate-High – scheduling and meeting time | Accelerates clarification and improves communication | Enhances understanding and reduces back-and-forth | Prepare agendas, use screen sharing, and record key points |
| Track and Measure Code Review Metrics | High – involves data collection and analysis | High – investment in tools and reporting | Provides data-driven insights and identifies bottlenecks | Offers objective feedback to improve review processes | Focus on trends, combine quantitative and qualitative insights |

Level Up Your Code Reviews Today!

This article explored ten code review best practices, from reviewing small code chunks and leveraging automated tools to establishing clear guidelines and prioritizing knowledge transfer. The most important takeaways are to focus on providing constructive feedback, ensuring adequate testing during review, and setting realistic time expectations. Implementing these core practices can significantly improve code quality, reduce bugs, and foster a more collaborative development environment. Mastering these code review best practices translates directly to more robust software, faster release cycles, and a more engaged and skilled team. By prioritizing continuous improvement in your code review process, you invest not only in the quality of your code but also in the growth and expertise of your development team.

Remember, embracing even a few of these best practices can make a significant impact. Don’t feel overwhelmed: start small, experiment, and adapt these techniques to your team’s specific needs. Want to streamline your code review workflow and automate key processes so you can focus on what matters most? Visit Mergify to see how it can enhance your code review process and free up your team's time.