Top CI Pipeline Best Practices for Speed & Security

Supercharge Your CI/CD with These Best Practices

A well-optimized CI pipeline is crucial for rapid, reliable software delivery. This article presents eight CI pipeline best practices to streamline your development process and improve code quality. Learn how to leverage techniques like short-lived branches, the automated testing pyramid, and immutable artifacts. Implementing these CI pipeline best practices will enable faster releases and fewer integration issues. We'll cover strategies for teams of all sizes, from optimizing caching to enhancing pipeline observability.

1. Continuous Integration with Short-Lived Branches

One of the most effective CI pipeline best practices is integrating code changes frequently using short-lived feature branches. This strategy, closely associated with trunk-based development, emphasizes creating small, focused branches that are merged back into the main branch (often called "main" or "trunk") multiple times a day, ideally after just a few hours of work. This approach minimizes the risk of large, complex merges and helps to identify and resolve integration issues early, when they are easier and less costly to fix. It forms a cornerstone of effective CI/CD pipelines, ensuring a consistently healthy and releasable codebase.

This practice revolves around making small, incremental changes to the codebase rather than developing large features in isolation for extended periods. Developers create a branch for a specific task or bug fix, complete the work within a short timeframe (e.g., a few hours or a day), and then merge it back to the main branch after thorough testing. This rapid integration cycle ensures that the main branch always represents the latest, integrated version of the software.

Examples of Successful Implementation:

- Google: Known for maintaining a massive monolithic codebase, Google employs a strategy where thousands of engineers commit directly to the main branch, relying heavily on automated testing and code review to maintain stability.

- Facebook: Utilizes a trunk-based development approach, encouraging frequent integration with the main branch to minimize merge conflicts and maintain a consistently integrable codebase.

- Spotify: Leverages short-lived feature branches coupled with comprehensive automated testing to ensure code quality and rapid integration.

When and Why to Use Short-Lived Branches:

This approach is ideal for teams aiming to improve collaboration, accelerate development cycles, and maintain a high level of code quality. It’s particularly beneficial for projects with continuous delivery goals where frequent releases are desired. However, it necessitates a strong commitment to automated testing and a potential cultural shift for teams accustomed to longer development cycles.

Features and Benefits:

- Frequent merges to the main branch: This leads to early detection of integration problems and reduces the likelihood of complex merge conflicts.

- Short-lived feature branches: Keeps changes small and focused, making them easier to review, test, and understand.

- Automated testing on each branch: Provides immediate feedback on code quality and prevents regressions.

- Smaller, incremental changes: Enhances code maintainability and reduces the risk of introducing large-scale bugs.

Pros:

- Drastically reduces merge conflicts

- Issues are identified quickly while the context is fresh

- Encourages smaller, more manageable code changes

- Provides continuous feedback on code quality

- Keeps the codebase in a consistently releasable state

Cons:

- Requires rigorous automated testing to prevent breaking the main branch

- May require a cultural shift for teams used to long-lived branches

- Can be challenging with large features; consider breaking them down into smaller, deliverable increments.

- May require feature toggles or other techniques to manage incomplete features in the main branch.

Actionable Tips for Implementation:

- Prioritize automated testing: Robust automated tests are crucial for preventing regressions and ensuring that the main branch remains stable.

- Use feature flags: Hide incomplete features behind feature flags to deploy them to production without impacting users.

- Enforce branch protection rules: Configure your version control system to require passing tests before merging into the main branch.

- Start small: Begin by applying this technique to smaller, less critical features to build team confidence and gain experience.

- Establish clear guidelines: Define a maximum lifespan for branches (e.g., 1-2 days) to encourage frequent integration.
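To make the feature-flag tip concrete, here is a minimal sketch of code merged to the main branch while the unfinished behavior stays off by default. The `FeatureFlags` class, the `new_discount_engine` flag name, and the discount logic are all hypothetical and exist only for illustration; a real team would more likely read flags from configuration or a dedicated flag service.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureFlags:
    """In-memory flag store (assumption: real flags would come from config or a service)."""

    enabled: set[str] = field(default_factory=set)

    def is_enabled(self, name: str) -> bool:
        return name in self.enabled


def checkout_total(prices: list[float], flags: FeatureFlags) -> float:
    """Return the order total; the unfinished discount path runs only when flagged on."""
    total = sum(prices)
    if flags.is_enabled("new_discount_engine"):  # hypothetical flag name
        total *= 0.9  # incomplete feature: merged to main, but dark by default
    return round(total, 2)
```

With no flags enabled, `checkout_total` behaves exactly as before the merge, so the branch can be integrated after a few hours of work even though the feature is not finished.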

This CI pipeline best practice, championed by thought leaders like Martin Fowler and Jez Humble and ingrained in the workflows of platforms like GitLab and GitHub, is essential for achieving true continuous integration and enabling faster, more reliable software delivery. By embracing short-lived branches and frequent integration, teams can significantly improve their development process and deliver higher-quality software.

2. Automated Testing Pyramid Strategy

A cornerstone of efficient CI/CD pipelines, the Automated Testing Pyramid Strategy is crucial for balancing comprehensive test coverage with rapid feedback cycles. This strategy applies the testing pyramid concept, structuring automated tests within the CI pipeline with a broad base of fast, isolated unit tests. Above this sits a smaller layer of integration tests, verifying interactions between components. Finally, at the peak, a minimal set of end-to-end tests assesses the entire system's functionality. This layered approach optimizes the pipeline by executing the most numerous (and fastest) tests early on, catching common errors quickly and efficiently.

This strategy deserves its place in the best practices list because it addresses a fundamental challenge in CI/CD: how to thoroughly test software without slowing down the development process. By front-loading the pipeline with fast, focused unit tests, developers receive immediate feedback on code changes, preventing small errors from escalating into larger problems later in the cycle. The progressive execution from unit to integration to end-to-end tests ensures that each layer builds upon the previous one, providing a comprehensive safety net while minimizing redundancy.

Features of the Automated Testing Pyramid Strategy:

- Hierarchical test organization: Follows the pyramid model, with many unit tests at the base, fewer integration tests in the middle, and the fewest end-to-end tests at the top.

- Progressive test execution: Fastest tests run first, followed by progressively slower ones.

- Stage-specific testing: Early CI stages focus on unit tests, later stages incorporate integration and end-to-end tests.

- Fail-fast mechanism: Test failure at any stage halts the pipeline, providing immediate feedback and preventing faulty code from progressing.
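The progressive, fail-fast execution described above can be sketched in a few lines. This is an illustration only: the `run_pipeline` function and the stage names are assumptions, and real CI systems express the same idea through their own stage or job ordering.

```python
from typing import Callable

# A stage is a name plus a callable that returns True when the stage passes.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in the given order and stop at the first failure."""
    log: list[str] = []
    for name, run in stages:
        passed = run()
        log.append(f"{name}: {'passed' if passed else 'failed'}")
        if not passed:
            break  # fail fast: slower stages after this one never start
    return log
```

Ordering the list as unit, integration, end-to-end means a broken unit test costs seconds rather than the full duration of the slowest suite.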

Pros:

- Fast feedback: Quickly identifies common issues through unit tests.

- Balanced testing: Thorough coverage without sacrificing pipeline performance.

- Resource efficiency: Reduces resource consumption by failing fast.

- Scalability: Adapts to growing codebases by prioritizing efficient unit tests.

- Bottleneck visibility: Highlights performance issues in the pipeline.

Cons:

- Disciplined test writing: Requires consistent practices across the development team.

- Refactoring overhead: Adapting legacy codebases can be challenging.

- Balancing test types: Finding the right ratio between different test types requires careful consideration.

- End-to-end test maintenance: These tests can be flaky and require ongoing maintenance.

Examples of Successful Implementation:

- Google: Emphasizes small, fast tests executed early in their CI pipelines.

- Spotify: Adheres to the testing pyramid model for efficient and reliable CI/CD.

- Netflix: Leverages the testing pyramid to maintain service reliability despite rapid iteration.

Actionable Tips:

- Target Ratio: Aim for an approximate 80/15/5 ratio for unit, integration, and UI/end-to-end tests, respectively.

- Performance Standards: Establish standards for test execution time to prevent slow tests from infiltrating faster categories.

- Parallelization: Utilize test parallelization for longer-running test suites to improve pipeline speed.

- Test Quarantine: Implement a quarantine mechanism for flaky tests to prevent unnecessary pipeline disruptions.

- Resource Optimization (Non-Main Branches): Consider running a subset of critical tests for feature branches or development branches to conserve resources.
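One way to keep an eye on the target ratio is to compute each layer's share of the suite from simple test counts. The sketch below assumes the counts are already available under the hypothetical keys `unit`, `integration`, and `e2e`; how they are collected depends on the test framework.

```python
def pyramid_shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each layer's share of the suite as a percentage."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no tests counted")
    return {layer: round(100 * n / total, 1) for layer, n in counts.items()}


def is_pyramid_shaped(counts: dict[str, int]) -> bool:
    """True when unit tests outnumber integration tests, which outnumber end-to-end tests."""
    return counts["unit"] >= counts["integration"] >= counts["e2e"]
```

A check like this is a rough health signal rather than a rule to enforce strictly, since the right ratio varies by codebase.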

The Automated Testing Pyramid Strategy is a crucial best practice for any CI pipeline. By prioritizing fast feedback and efficient resource utilization, it enables development teams to deliver high-quality software at speed. This approach is especially beneficial for organizations adopting agile methodologies and aiming for continuous delivery, making it a key component of modern software development practices.

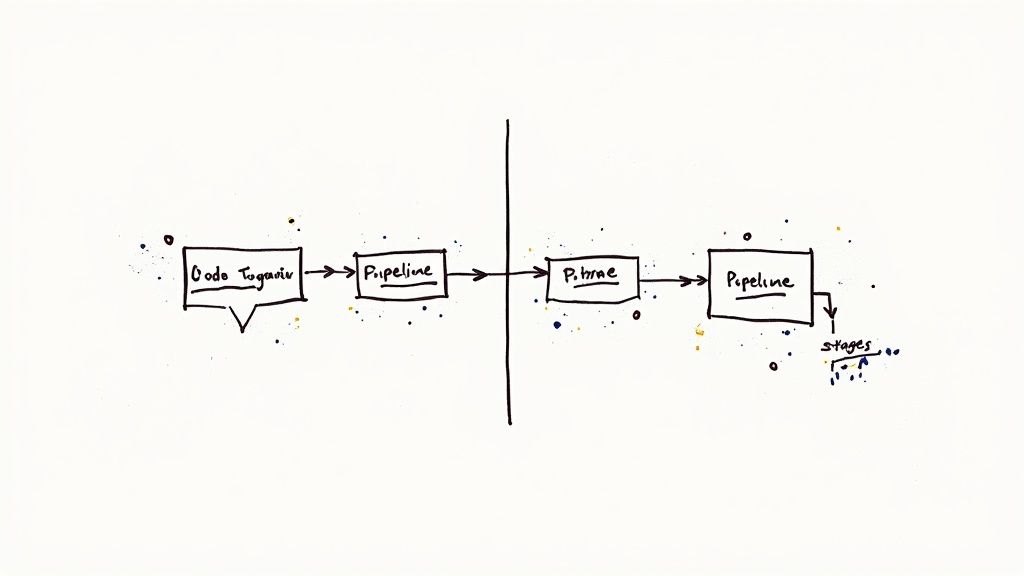

3. Pipeline as Code

One of the most crucial CI pipeline best practices is implementing Pipeline as Code. This approach defines your CI/CD pipelines in code, stored alongside your application's source code, rather than relying on cumbersome and often opaque UI-based configurations. This shift enables you to manage your pipelines with the same rigor and discipline you apply to your application code, including code review, versioning, and automated testing. This means changes to your deployment process are reviewed, tracked, and tested, just like any other code change, leading to more reliable and predictable deployments.

Pipeline as Code leverages the principles of Infrastructure as Code (IaC), extending them to your CI/CD processes. Features like version-controlled pipeline changes and self-documenting infrastructure through code contribute to increased transparency and auditability. Having your pipeline defined in code provides a clear and concise representation of your deployment process, making it easier to understand and troubleshoot. This is invaluable for onboarding new team members and ensuring everyone understands how deployments work.

This approach provides several key benefits:

- Enhanced Collaboration and Review: Pipeline changes undergo the same peer review process as application code, minimizing the risk of errors and promoting best practices.

- Complete Version History: Track the evolution of your pipeline over time. This allows for easy rollback to previous versions if necessary and provides insights into how your deployment process has matured.

- Reusable Components: Create a library of reusable pipeline components, promoting consistency and efficiency across different projects.

- Auditable and Transparent: The codified nature of the pipeline makes it easy to audit and understand every step of the deployment process.

- Testable Pipelines: You can test pipeline changes in isolation before deploying them to production, reducing the risk of unexpected issues.

- Simplified Onboarding: New team members can quickly understand the deployment process by examining the pipeline code.

However, like any practice, Pipeline as Code has its challenges:

- Learning Curve: Adopting Pipeline as Code requires learning a pipeline DSL (Domain Specific Language), which can be a hurdle for teams unfamiliar with code-based configurations.

- Specialized Knowledge: While simpler pipelines might be straightforward, complex scenarios may necessitate deeper knowledge of the chosen DSL and CI/CD best practices.

- Pipeline Testing Complexity: Effectively testing complex pipelines can be challenging and requires careful planning and execution.

- Maintainability: Without proper structuring and modularization, complex pipelines can become difficult to maintain.

Several platforms and tools support Pipeline as Code, including:

- Jenkins: Uses a Jenkinsfile to define pipelines in Groovy.

- GitLab CI/CD: Leverages .gitlab-ci.yml files for pipeline configuration.

- GitHub Actions: Employs workflow YAML files.

- AWS CodePipeline: Supports definitions in AWS CloudFormation.

- Azure DevOps: Uses YAML-based pipelines.

To effectively implement Pipeline as Code, consider the following tips:

- Standardization with Templates: Use pipeline templates to establish consistent patterns across your teams and projects.

- Reusable Component Libraries: Create and maintain a library of reusable pipeline components to avoid code duplication and promote efficiency.

- Thorough Documentation: Document your pipeline code thoroughly to help future maintainers understand its purpose and functionality.

- Structured Code with Error Handling: Structure your pipeline code with clear stages and incorporate robust error handling mechanisms.

- Local Validation: Use local validation tools to verify pipeline syntax and catch errors before committing code.

- Linting Tools: Employ linting tools specific to your chosen pipeline format to enforce coding standards and best practices.
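As an illustration of local validation, the sketch below checks a pipeline definition before it is committed. The schema (a `stages` list and a `jobs` mapping with `stage` and `script` keys) is a simplified assumption made for this example and does not match any particular CI product; in practice you would rely on the linter your platform provides.

```python
def validate_pipeline(pipeline: dict) -> list[str]:
    """Return a list of problems found in a pipeline definition (empty means valid)."""
    errors: list[str] = []
    stages = pipeline.get("stages", [])
    if not stages:
        errors.append("pipeline defines no stages")
    for job_name, job in pipeline.get("jobs", {}).items():
        stage = job.get("stage")
        if stage not in stages:
            errors.append(f"job '{job_name}' uses undeclared stage '{stage}'")
        if not job.get("script"):
            errors.append(f"job '{job_name}' has an empty script")
    return errors
```

Running a check like this in a pre-commit hook catches structural mistakes before they consume a CI run.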

Pipeline as Code earns its place as a CI pipeline best practice due to its ability to transform your deployment process into a more robust, transparent, and manageable part of your software development lifecycle. It promotes collaboration, enables automation, and ultimately leads to more reliable and predictable software delivery. By embracing Pipeline as Code, you empower your teams to build and deploy software with greater confidence and efficiency.

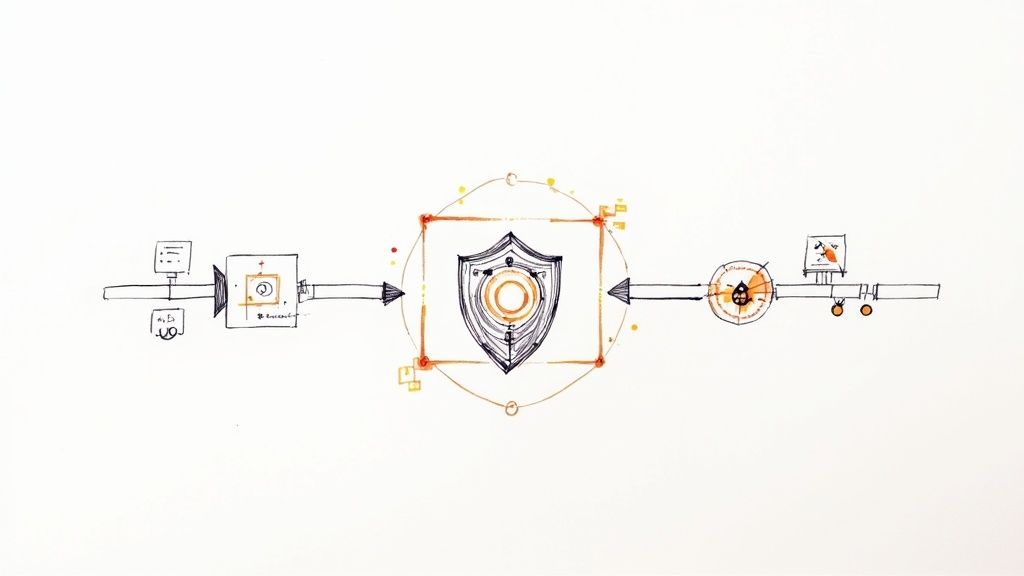

4. Shift-Left Security Integration

Shift-Left Security Integration is a crucial CI pipeline best practice that emphasizes integrating security testing throughout the software development lifecycle, rather than treating it as an afterthought. This proactive approach bakes security into the CI pipeline from the very beginning, ensuring vulnerabilities are identified and addressed early on, when they are significantly less expensive and complex to fix. Instead of waiting for a dedicated security audit late in the process, security checks become an integral part of every build and deployment. This helps create a more secure application and fosters a security-conscious culture within development teams.

Shift-left security operates by automating security scanning tools, dependency checks, and compliance validations within the CI process. These automated checks run alongside other tests, providing immediate feedback to developers about potential security flaws. This includes static application security testing (SAST), dynamic application security testing (DAST), software composition analysis (SCA), and container image scanning. For example, a CI pipeline might automatically trigger a SAST scan every time new code is committed, flagging any potential vulnerabilities in real-time. This allows developers to address the issue immediately, while the code is still fresh in their minds, reducing the time and cost associated with fixing the issue later.

Examples of Successful Implementation:

- Microsoft's Azure DevOps: Integrates various security scanning tools and offers features like automated security analysis and compliance checks throughout the pipeline stages.

- Netflix: Employs extensive security automation tools within its CI process, enabling continuous security validation and rapid vulnerability remediation.

- Google: Utilizes internal security scanning processes that validate code changes before they are merged, preventing security flaws from entering the codebase.

Actionable Tips for Implementation:

- Start Small and Iterate: Begin by integrating critical security checks, such as SAST for common vulnerabilities, and progressively expand coverage to more comprehensive scans.

- Manage False Positives: Implement a clear process for triaging and managing false positives generated by security scanning tools to avoid unnecessary developer overhead.

- Security Baselines and Exceptions: Establish baseline exceptions for legacy code that may have known vulnerabilities, while enforcing stricter security rules for new code.

- Fail Fast for Critical Issues: Configure your CI pipeline to fail the build if critical security vulnerabilities are detected, preventing insecure code from progressing.

- Integrate Security Training: Use detected security issues as opportunities for targeted security training for developers, fostering a culture of continuous security improvement.

- Regular Tool Updates: Establish a regular cadence for updating security scanning tools and libraries to ensure they are equipped to detect the latest vulnerabilities.
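The "fail fast for critical issues" and "baseline exceptions" tips can be combined into a small gate that runs after a scan. This is a sketch under assumptions: the `Finding` shape, the severity labels, and the idea of keying the baseline on rule ID plus file path are illustrative choices, not the output format of any specific scanner.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule_id: str
    severity: str  # assumed labels: "low", "medium", "high", "critical"
    path: str


def blocking_findings(
    findings: list[Finding],
    baseline: set[tuple[str, str]],
    fail_on: frozenset[str] = frozenset({"critical"}),
) -> list[Finding]:
    """Return findings that should fail the build.

    A finding blocks when its severity is in `fail_on` and its
    (rule_id, path) pair is not an accepted legacy exception in `baseline`.
    """
    return [
        f
        for f in findings
        if f.severity in fail_on and (f.rule_id, f.path) not in baseline
    ]
```

The pipeline step would exit non-zero whenever the returned list is non-empty, while known legacy issues recorded in the baseline remain visible without blocking new work.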

Pros and Cons of Shift-Left Security Integration:

Pros:

- Early identification of security issues, reducing the cost and complexity of remediation.

- Enhanced security awareness among developers.

- Creation of an auditable security trail for compliance.

- Prevention of security becoming a bottleneck at the end of the development process.

Cons:

- Potential increase in pipeline execution time due to added security scans.

- Possibility of false positives requiring triaging.

- Configuration of security tools may require specialized knowledge.

- Initial setup can be complex and time-consuming.

Why Shift-Left Security Deserves its Place in CI Pipeline Best Practices:

Shift-Left Security Integration is a foundational element of modern CI/CD pipelines. By shifting security left, organizations can build more secure applications, reduce the cost and impact of vulnerabilities, and foster a culture of security awareness throughout the development lifecycle. This proactive approach is essential for any organization that prioritizes software security and wants to stay ahead of evolving threats. It ultimately contributes to a more robust and reliable CI/CD process that delivers higher-quality and more secure software.

5. Immutable Pipeline Artifacts

Immutable pipeline artifacts represent a crucial best practice in modern CI/CD pipelines. This strategy significantly enhances reliability and predictability in software delivery by ensuring that the exact same artifact, whether it's a container image, package, or binary, is promoted through each stage of the pipeline, from testing to production. This "build once, deploy many times" approach eliminates discrepancies between environments and fosters confidence in the deployment process. This is a key component of robust CI pipeline best practices.

How it Works:

The core principle is to create the deployable artifact as early as possible in the CI pipeline. This artifact is built only once and assigned a unique identifier, often based on the version number or commit hash. This uniquely identified artifact is then promoted through subsequent environments – testing, staging, and finally, production. Crucially, no rebuilding or repackaging occurs at any stage. Comprehensive metadata, such as build timestamp, git commit, and author, is attached to the artifact for traceability and auditing. Technical controls, such as checksum verification, are implemented to enforce immutability.
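The identifier, metadata, and checksum steps described above might look like the following sketch. The manifest layout, the `app-` prefix, and the function names are assumptions made for illustration; artifact repositories and container registries typically record digests for you.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Return the hex SHA-256 digest of the artifact bytes."""
    return hashlib.sha256(data).hexdigest()


def build_manifest(artifact: bytes, commit: str, built_at: str, author: str) -> dict:
    """Record identity and traceability metadata once, at build time."""
    return {
        "id": f"app-{commit[:12]}",  # hypothetical naming scheme based on the commit hash
        "sha256": sha256_of(artifact),
        "commit": commit,
        "built_at": built_at,
        "author": author,
    }


def verify_before_deploy(artifact: bytes, manifest: dict) -> None:
    """Refuse to deploy bytes that differ from what was built and tested."""
    actual = sha256_of(artifact)
    if actual != manifest["sha256"]:
        raise ValueError(
            f"artifact {manifest['id']} checksum mismatch: "
            f"expected {manifest['sha256']}, got {actual}"
        )
```

Calling `verify_before_deploy` at the start of each environment's deployment step is one simple way to enforce that nothing was rebuilt or repackaged along the way.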

Why Use Immutable Artifacts?

This approach addresses the common problem of "works on my machine" by guaranteeing consistency across environments. It instills confidence that what was tested is precisely what goes live, reducing the risk of unexpected issues in production. This practice also provides a clear audit trail, enabling easy tracking of deployed artifacts and facilitating faster rollbacks to previous known-good versions if necessary.

Features and Benefits:

- Build Once, Deploy Many Times: Eliminates build inconsistencies between environments.

- Unique Identifiers: Enables precise tracking and auditing of artifacts.

- Identical Artifacts Across Environments: Guarantees consistent behavior across the pipeline.

- Comprehensive Metadata: Provides rich context for debugging and auditing.

- Enforced Immutability: Ensures artifact integrity and prevents accidental modifications.

Pros:

- Eliminates “works on my machine” scenarios.

- Increases confidence in deployments.

- Creates a clear audit trail.

- Reduces environment-specific build issues.

- Supports reliable rollbacks.

Cons:

- Requires robust artifact storage with versioning.

- Can increase storage costs.

- Requires strict discipline to avoid environment-specific configurations within the artifact.

- Can be challenging to integrate with legacy deployment approaches.

Examples of Successful Implementation:

- Google: Employs container-based deployment pipelines utilizing immutable images extensively.

- Amazon: Advocates building a single artifact and promoting it unchanged across environments.

- Netflix: Uses an immutable server approach with container images or AMIs.

Actionable Tips:

- Containerization: Use container images as the primary artifact type whenever possible.

- Versioned Repositories: Implement secure, versioned artifact repositories.

- Metadata Enrichment: Include build metadata (git commit, timestamp, author) in artifacts.

- Externalized Configuration: Separate configuration from artifacts using external configuration sources.

- Verification: Implement automated verification to confirm the same artifact is used across all environments.

- Expiration Policies: Consider implementing artifact expiration policies to manage storage costs.

Popularized By:

- Jez Humble (author of Continuous Delivery)

- The Docker and containerization movement

- The Immutable Infrastructure pattern from cloud-native computing

- HashiCorp, with tools like Packer for creating immutable images

By implementing immutable pipeline artifacts as part of your CI pipeline best practices, you can significantly improve the reliability, predictability, and auditability of your software delivery process. This practice is particularly valuable for software development teams, DevOps engineers, and IT leaders seeking to optimize their CI/CD workflows and achieve faster, more reliable releases.

6. Multi-Stage Caching Strategy

A robust CI/CD pipeline is crucial for modern software development, enabling rapid iteration and delivery. Implementing a multi-stage caching strategy is a vital best practice for optimizing your CI pipeline and achieving significant performance gains. This strategy minimizes redundant computations by intelligently caching and reusing artifacts generated at various stages of your build process. This dramatically reduces build times, allowing for faster feedback loops, increased developer productivity, and more efficient resource utilization, ultimately leading to a more streamlined and cost-effective CI/CD workflow. This makes it a critical component of any efficient CI pipeline best practices implementation.

How it Works:

Multi-stage caching operates on the principle of identifying deterministic parts of your build process and storing the outputs (artifacts) of these parts. Subsequent pipeline runs can then reuse these cached artifacts if the inputs haven't changed. This is achieved through a layered approach, targeting different artifact types:

- Dependency Caching: Caching downloaded dependencies from package managers like npm, Maven, pip, and others avoids repeated downloads during each build.

- Compilation Caching: Incremental builds benefit significantly from caching compiled code, as only changed files and their dependencies need recompilation.

- Docker Layer Caching: For containerized builds, leveraging Docker's layered file system allows caching of individual layers. When an instruction's inputs change, that layer and every layer after it are rebuilt, while the earlier layers are reused from cache.

- Test Result Caching: If neither the tests nor the code and dependencies they exercise have changed, cached test results can be reused, saving significant time, especially for extensive test suites.

Crucially, cache invalidation is handled through content-based hashing. By using hashes of the input files, rather than timestamps, the cache accurately reflects the state of the codebase. A change in any input file results in a new hash, triggering a rebuild of that specific part of the pipeline.
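A content-based cache key can be sketched as follows. The function name, the `prefix` convention, and the 16-character truncation are illustrative assumptions; most CI platforms offer a built-in way to hash files into a cache key, which is usually preferable to rolling your own.

```python
import hashlib


def cache_key(prefix: str, files: dict[str, bytes]) -> str:
    """Derive a cache key from file paths and contents, independent of timestamps.

    `files` maps a relative path to that file's bytes, for example the
    lockfiles that determine which dependencies get installed.
    """
    digest = hashlib.sha256()
    for path in sorted(files):  # sort so the key does not depend on iteration order
        digest.update(path.encode("utf-8"))
        digest.update(b"\0")
        digest.update(hashlib.sha256(files[path]).digest())
    return f"{prefix}-{digest.hexdigest()[:16]}"
```

Because only paths and contents feed the hash, touching a file without changing it keeps the same key, while any real change to a lockfile produces a new key and a fresh cache entry.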

Examples of Successful Implementation:

Several industry-leading tools and companies demonstrate the power of multi-stage caching:

- Bazel (Google): Bazel's advanced caching mechanisms are core to its performance, enabling fast and reproducible builds for large projects.

- Azure DevOps Pipelines (Microsoft): Offers built-in caching features for dependencies, build outputs, and other artifacts, simplifying implementation.

- GitLab CI: Provides multi-level caching, allowing for granular control over caching at different stages.

- CircleCI: Known for its pioneering work on build caching, offering a layered approach that optimizes various build steps.

Actionable Tips for Implementation:

- Content-Based Cache Keys: Always use hashes of dependency files and source code for cache keys. Avoid timestamps, as they can lead to inaccurate caching.

- Regular Cache Cleanup: Implement a strategy for purging old or unused cache entries to prevent storage bloat.

- Periodic Full Rebuilds: Schedule periodic clean builds (e.g., weekly) to catch issues that might be masked by aggressive caching.

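- Optimized Dockerfiles: Structure your Dockerfiles so that infrequently changing instructions (base image, dependency installation) come first and frequently changing ones (copying application source) come last. The stable steps then form the lower image layers, which maximizes cache reuse.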

- Documentation: Document your caching strategy clearly to ensure all team members understand how cache invalidation works.

- Incremental Approach: Start by caching the most time-consuming and deterministic steps first, gradually expanding your caching strategy.

Pros:

- Reduced Build Times: Dramatically accelerates pipeline execution, particularly for incremental changes.

- Lower Resource Costs: Less compute time translates directly to lower cloud infrastructure costs.

- Faster Feedback: Developers receive quicker feedback on their changes, enhancing productivity.

- Reduced External Dependencies: Minimizes reliance on external networks during builds.

- Increased Concurrency: Enables running more builds concurrently with the same resources.

Cons:

- Cache Invalidation Complexity: Requires careful management to avoid subtle bugs due to stale caches.

- Increased Configuration Complexity: Adds another layer of configuration to your CI pipeline.

- Potential to Mask Issues: Overly aggressive caching can hide problems that only surface in clean builds.

- Storage Space: Cached artifacts can consume considerable storage space, requiring management.

By strategically implementing a multi-stage caching strategy, development teams can significantly optimize their CI pipelines, leading to faster builds, reduced costs, and improved developer productivity. This makes it a cornerstone best practice for any modern CI/CD workflow striving for efficiency and speed.

7. Pipeline Observability and Metrics

A high-performing CI pipeline is crucial for rapid software delivery. But how do you know your pipeline is performing optimally? The answer lies in pipeline observability and metrics, a critical best practice for any modern CI/CD implementation. This strategy involves instrumenting your CI pipelines with comprehensive monitoring, logging, and metrics collection to gain deep insight into pipeline performance and reliability and to identify potential bottlenecks. By treating your CI pipeline as a critical production system deserving of observability, you can continuously improve pipeline efficiency, reduce costs, and significantly increase developer productivity. This deserves a place in the CI pipeline best practices list because it empowers teams to move beyond simple success/failure statuses and understand why the pipeline behaves the way it does.

How it Works:

Pipeline observability goes beyond basic logging. It involves collecting detailed metrics at every stage of your pipeline, from code checkout and compilation to testing and deployment. This data is then aggregated, analyzed, and visualized through dashboards and alerts, enabling you to understand trends, identify anomalies, and pinpoint areas for improvement.

Features of a robust pipeline observability setup:

- Detailed Metrics Collection: Track key metrics like pipeline duration, resource usage (CPU, memory, disk I/O), queue times, and individual stage performance.

- Performance Trending: Visualize pipeline performance over time to identify regressions and track the impact of optimizations.

- Failure Analytics and Automatic Categorization: Automatically categorize and analyze pipeline failures to quickly identify common root causes and prevent recurring issues.

- Real-time Dashboards: Provide real-time visibility into pipeline health, enabling quick identification of problems and faster resolution.

- Alerts on Pipeline Degradation or Failures: Set up alerts for key metrics to proactively address performance issues and minimize developer downtime.

- Historical Data Retention for Analysis: Retain historical pipeline data to analyze long-term trends, perform root cause analysis, and track the effectiveness of CI improvements.
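As a starting point for metrics and alerting, the sketch below summarizes a batch of pipeline runs and compares the result to a baseline. The input shape (a list of duration and success pairs), the nearest-rank 95th percentile, and the 20% tolerance are assumptions chosen for illustration; a production setup would normally push these numbers to a metrics system instead.

```python
import math
from statistics import median


def summarize(runs: list[tuple[float, bool]]) -> dict[str, float]:
    """Summarize runs given as (duration_seconds, succeeded) pairs."""
    if not runs:
        raise ValueError("no runs to summarize")
    durations = sorted(d for d, _ in runs)
    rank = math.ceil(0.95 * len(durations))  # nearest-rank 95th percentile
    return {
        "success_rate": sum(1 for _, ok in runs if ok) / len(runs),
        "median_s": median(durations),
        "p95_s": durations[rank - 1],
    }


def degraded(summary: dict[str, float], baseline_p95_s: float, tolerance: float = 0.2) -> bool:
    """True when p95 duration exceeds the baseline by more than `tolerance`."""
    return summary["p95_s"] > baseline_p95_s * (1 + tolerance)
```

Tracking a high percentile alongside the median helps surface the occasional very slow run that an average would hide.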

Pros:

- Identifies Bottlenecks and Optimization Opportunities: Pinpoint slow stages, resource-intensive tasks, and other bottlenecks hindering pipeline performance.

- Provides Data-Driven Insights for Pipeline Improvements: Base optimization decisions on concrete data rather than guesswork.

- Helps Quantify the Impact of CI Improvements on Developer Productivity: Measure the impact of changes on key metrics like build time and deployment frequency.

- Enables Capacity Planning for CI Infrastructure: Forecast future resource needs based on historical trends and projected growth.

- Assists in Troubleshooting Intermittent Issues: Leverage detailed logs and metrics to quickly diagnose and resolve sporadic problems.

Cons:

- Requires Additional Infrastructure: Setting up and maintaining metrics collection and storage infrastructure requires investment.

- Can Add Complexity to Pipeline Configuration: Instrumenting the pipeline with monitoring and logging can introduce some initial complexity.

- May Generate Large Volumes of Data: Managing and analyzing large datasets requires appropriate tools and strategies.

- Needs Investment in Dashboard Creation and Maintenance: Creating and maintaining informative dashboards requires dedicated effort.

Examples of Successful Implementation:

- Microsoft's internal telemetry for Azure DevOps pipelines: Microsoft leverages extensive telemetry to monitor and optimize the performance of its own Azure DevOps pipelines.

- Google's build analysis tools: Google uses sophisticated build analysis tools to track performance across thousands of builds, ensuring fast and reliable software delivery.

- GitHub's internal pipeline analytics platform: GitHub uses its own internal analytics to monitor and improve the performance of its CI/CD infrastructure.

- GitLab's CI/CD analytics features: GitLab provides built-in CI/CD analytics to help users track pipeline performance, identify bottlenecks, and improve efficiency.

Tips for Implementing Pipeline Observability:

- Start Small: Begin by measuring the most important metrics: total duration, success rate, and resource usage.

- Trending Dashboards: Set up trending dashboards to visualize pipeline health over time and identify regressions.

- Baselines and Alerts: Establish baselines and alerting thresholds for key metrics to proactively address performance issues.

- Team-Specific Views: Create team-specific views of pipeline performance to foster ownership and accountability.

- Cost Metrics: Include cost metrics to drive efficiency improvements and optimize resource allocation.

- Automatic Failure Tagging: Implement automatic tagging of failures to identify patterns and common root causes.

- Pipeline Retrospectives: Schedule regular pipeline retrospectives using the collected data to identify areas for improvement.

Popularized By:

The concept of pipeline observability has been popularized by the observability movement, spearheaded by companies like Honeycomb.io. Google's Site Reliability Engineering (SRE) practices also emphasize the importance of monitoring and observability for critical systems, including CI/CD pipelines. Vendors such as CloudBees (Jenkins) and CircleCI have also built pipeline analytics features into their products, making it easier for teams to implement observability best practices.

By embracing pipeline observability and metrics, you transform your CI pipeline from a black box into a transparent and optimized system, ultimately accelerating software delivery and empowering your development teams.

8. Self-Service Pipeline Platform

A self-service pipeline platform replaces bespoke, team-specific pipelines with a centralized, platform-based approach. It packages standardized pipeline components, templates, and organizational best practices into a platform that development teams can use directly. Teams can then stand up production-grade CI pipelines quickly, with best practices built in and organizational standards enforced, while needing far less specialist expertise to create and maintain them. This matters most for organizations that need to scale their CI/CD practices across many teams.

This platform-centric approach works by providing developers with a catalog of pre-built, reusable pipeline components and templates. These templates cater to common application types and incorporate industry best practices for security, testing, and deployment. Teams can select a template that aligns with their project needs and customize it further within defined organizational guardrails. This balance between standardization and flexibility allows teams to benefit from pre-configured pipelines while still addressing specific project requirements.
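To make "customize within defined organizational guardrails" concrete, here is a minimal Python sketch. Everything in it is hypothetical: the template contents, the `CUSTOMIZABLE` whitelist, and the `render_pipeline` helper are invented for illustration and do not correspond to any real platform's API. The idea is simply that teams may override a small set of validated settings, while everything else (here, the stage list) stays locked.

```python
from copy import deepcopy

# Hypothetical template published by a platform team.
PYTHON_SERVICE_TEMPLATE = {
    "stages": ["lint", "test", "security_scan", "build", "deploy"],
    "test_command": "pytest",
    "runtime_version": "3.12",
    "timeout_minutes": 30,
}

# Guardrails (assumed for this example): the only keys teams may override,
# each paired with a validator that defines the allowed values.
CUSTOMIZABLE = {
    "test_command": lambda v: isinstance(v, str) and v.strip() != "",
    "runtime_version": lambda v: v in {"3.11", "3.12"},
    "timeout_minutes": lambda v: isinstance(v, int) and 1 <= v <= 60,
}


def render_pipeline(template: dict, overrides: dict) -> dict:
    """Apply team overrides to a copy of the template, enforcing guardrails."""
    pipeline = deepcopy(template)  # never mutate the shared template
    for key, value in overrides.items():
        validator = CUSTOMIZABLE.get(key)
        if validator is None:
            raise ValueError(f"'{key}' is locked by the platform")
        if not validator(value):
            raise ValueError(f"value {value!r} is not allowed for '{key}'")
        pipeline[key] = value
    return pipeline
```

A team calling `render_pipeline(PYTHON_SERVICE_TEMPLATE, {"timeout_minutes": 45})` gets a customized pipeline, while an attempt to override `"stages"` is rejected, so the security scan cannot be dropped by accident.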

Features of a Self-Service Pipeline Platform:

- Standardized pipeline templates: Pre-built pipelines for common application types (e.g., Java, Node.js, Python) covering various stages like build, test, and deploy.

- Reusable, modular pipeline components: Individual building blocks (e.g., code linting, security scanning, deployment scripts) that can be combined and reused across different pipelines.

- Self-service portal or CLI: An intuitive interface for developers to create, manage, and monitor their pipelines without deep CI/CD expertise.

- Built-in compliance and security checks: Automated checks integrated into the pipeline to ensure adherence to security and compliance standards.

- Centralized management with team-level customization: Global control over platform configuration and upgrades while allowing teams to customize within defined parameters.

- Automated upgrades and maintenance: Centralized updates and maintenance ensure all pipelines benefit from the latest features and security patches.
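Two of the features above, reusable components and built-in compliance checks, can be combined in one small sketch. The catalog, the component commands (placeholder `make` targets), and the `REQUIRED_COMPONENTS` policy are all assumptions made for this example. A real platform would load them from its own registry and policy configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """A reusable pipeline building block (hypothetical structure)."""
    name: str
    command: str  # placeholder commands; substitute your real tooling


# Hypothetical shared catalog maintained by the platform team.
CATALOG = {
    "lint": Component("lint", "make lint"),
    "test": Component("test", "make test"),
    "security_scan": Component("security_scan", "make security-scan"),
    "build": Component("build", "make build"),
}

# Assumed organizational policy: every pipeline must include these.
REQUIRED_COMPONENTS = ("test", "security_scan")


def compose_pipeline(component_names: list[str]) -> list[Component]:
    """Build a pipeline from catalog components and enforce the policy."""
    unknown = [n for n in component_names if n not in CATALOG]
    if unknown:
        raise KeyError(f"not in the catalog: {unknown}")
    missing = [n for n in REQUIRED_COMPONENTS if n not in component_names]
    if missing:
        raise ValueError(f"missing required components: {missing}")
    return [CATALOG[n] for n in component_names]
```

Because every pipeline references the same catalog entries, updating a component in one place (for example, upgrading the scanner behind `security_scan`) changes it for every pipeline that uses it, which is the mechanism behind the centralized upgrades listed above.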

Pros:

- Democratizes CI pipeline creation: Empowers all development teams to build and manage their pipelines, regardless of CI/CD expertise.

- Ensures consistent application of organizational best practices: Standardized templates apply the same security, testing, and deployment practices to every pipeline built from them.

- Reduces duplication of effort across teams: Reusable components and templates minimize redundant pipeline code and configuration.

- Centralizes pipeline maintenance and upgrades: Simplifies maintenance and upgrades, ensuring all teams benefit from the latest improvements.

- Decreases the learning curve for new teams and projects: Pre-built templates and intuitive interfaces make it easier for new teams to adopt CI/CD.

- Enables platform teams to gradually improve all pipelines simultaneously: Centralized management allows for continuous improvement and application of best practices across the organization.

Cons:

- Requires initial investment to build the platform: Setting up the platform and creating initial templates requires upfront effort and resources.

- May not cover all edge cases for specialized application types: Highly specialized applications might require custom pipeline solutions beyond the platform's capabilities.

- Can create tension between standardization and customization needs: Balancing organizational standards with individual team requirements can be challenging.

- Needs dedicated platform team to maintain and improve: Ongoing maintenance, support, and evolution of the platform require a dedicated team.

Examples of Successful Implementation:

- Spotify's Backstage developer portal incorporates CI/CD components, providing a unified experience for developers.

- Netflix has built an internal developer platform for streamlining pipeline creation and management.

- GitHub uses its own GitHub Actions for its internal pipeline platform.

- Capital One utilizes a self-service DevOps platform to empower development teams.

Tips for Implementing a Self-Service Pipeline Platform:

- Start with templates for the most common application types in your organization.

- Create clear extension points for teams to customize within guardrails.

- Implement a feedback loop from users to continuously improve the platform.

- Build comprehensive documentation and self-service help resources.

- Consider implementing a certification process for custom pipeline components.

- Use internal developer advocacy to drive adoption.

- Measure and communicate the time savings from using the platform.

Why This Approach is a CI Pipeline Best Practice:

A self-service pipeline platform embodies CI/CD best practices by promoting standardization, automation, and developer empowerment. It addresses the challenges of scaling CI/CD across large organizations by reducing complexity, ensuring consistency, and freeing up development teams to focus on building and shipping software. This approach is particularly beneficial for organizations adopting methodologies like Team Topologies, which emphasize the importance of platform teams empowering stream-aligned teams. The growing popularity of internal developer platforms and the Platform Engineering movement further underscores the value and effectiveness of this approach in modern software development.

8 CI Pipeline Best Practices Comparison

| Strategy | Implementation Complexity (🔄) | Resource Requirements (⚡) | Expected Outcomes (📊) | Ideal Use Cases (💡) | Key Advantages (⭐) |
|---|---|---|---|---|---|
| Continuous Integration with Short-Lived Branches | Moderate – requires disciplined frequent merges and strong automated tests | Moderate – needs robust automation and branch management | Fast feedback with early conflict detection | Agile projects with incremental changes and high collaboration | Early issue detection and consistently releasable code |
| Automated Testing Pyramid Strategy | Moderate-High – structured multi-layer test suite implementation | Moderate – efficient test organization and parallel execution | Layered feedback with rapid unit test validation | Projects seeking balanced, fast, and comprehensive test coverage | Fast response to issues with optimized resource usage |
| Pipeline as Code | High – involves writing and maintaining pipeline DSL/configuration code | Moderate – requires version control integration and code reviews | Reproducible, auditable pipelines with historical tracking | Teams aiming to treat pipeline like application code using version control | Transparency, reusability, and robust change management |
| Shift-Left Security Integration | High – needs integration of security tools early in the pipeline | High – dedicated security tools and configuration management | Early vulnerability detection with reduced remediation costs | Organizations prioritizing security and compliance | Reduced risk and lower cost fixes with proactive scanning |
| Immutable Pipeline Artifacts | Moderate – build once, deploy many times with controlled artifact creation | Moderate-High – secure, versioned artifact storage required | Consistent and exactly reproducible deployments with reliable rollbacks | Environments needing identical artifacts across testing and production | Eliminates environment drift and ensures deployment integrity |
| Multi-Stage Caching Strategy | High – complex cache key management and invalidation mechanisms | Moderate – requires caching infrastructure and storage oversight | Significantly reduced build times and optimized resource usage | Projects with long build processes needing incremental speed improvements | Enhanced speed and cost efficiency through layered caching |
| Pipeline Observability and Metrics | High – involves detailed instrumentation and dashboard configuration | High – additional infrastructure for metrics and monitoring | Data-driven insights leading to continuous pipeline optimization | Large-scale CI environments where performance analysis is critical | Clear identification of bottlenecks and informed improvements |
| Self-Service Pipeline Platform | High – creation of standardized, modular pipeline components | High – demands dedicated platform development and ongoing maintenance | Streamlined pipeline creation with consistent best practices | Organizations with multiple teams requiring standardization | Democratizes pipeline creation and centralizes upgrades |

Taking Your CI Pipeline to the Next Level

This article has explored a range of CI pipeline best practices, from utilizing short-lived branches and implementing a robust automated testing pyramid strategy to embracing pipeline as code and prioritizing shift-left security. We've also delved into the importance of immutable pipeline artifacts, multi-stage caching, comprehensive pipeline observability, and the power of a self-service pipeline platform. Mastering these CI pipeline best practices is crucial for any team aiming to optimize their development processes. By focusing on these key areas, you can significantly reduce development costs, improve software quality, accelerate delivery speed, and foster a culture of continuous improvement. These best practices empower teams to confidently ship code faster and more frequently, leading to a more competitive edge in the market and greater customer satisfaction.

Implementing even a few of these improvements can yield significant benefits, but the true power comes from integrating them holistically. Imagine a development workflow where code changes are seamlessly integrated, tested, and deployed with minimal manual intervention. A robust CI pipeline, built upon these best practices, transforms your development process from a potential bottleneck into a powerful engine for innovation. This ultimately empowers your team to focus on what truly matters: building and delivering exceptional software.

Ready to streamline your merge workflow and take your CI pipeline automation to the next level? Explore how Mergify can help you implement these CI pipeline best practices and optimize your development process. Visit Mergify today to learn more and start your free trial.