CI/CD Best Practices: Top Strategies for Agile Delivery

Supercharge Your CI/CD

Accelerate your software delivery and improve code quality with these eight CI/CD best practices. This list offers practical strategies for making your pipeline faster, more reliable, and easier to maintain. Learn how to implement trunk-based development, automated testing, infrastructure as code, immutable deployments, comprehensive monitoring, pipeline as code, shift-left security, and containerized environments. Together, these practices streamline development, enabling faster releases and more reliable software.

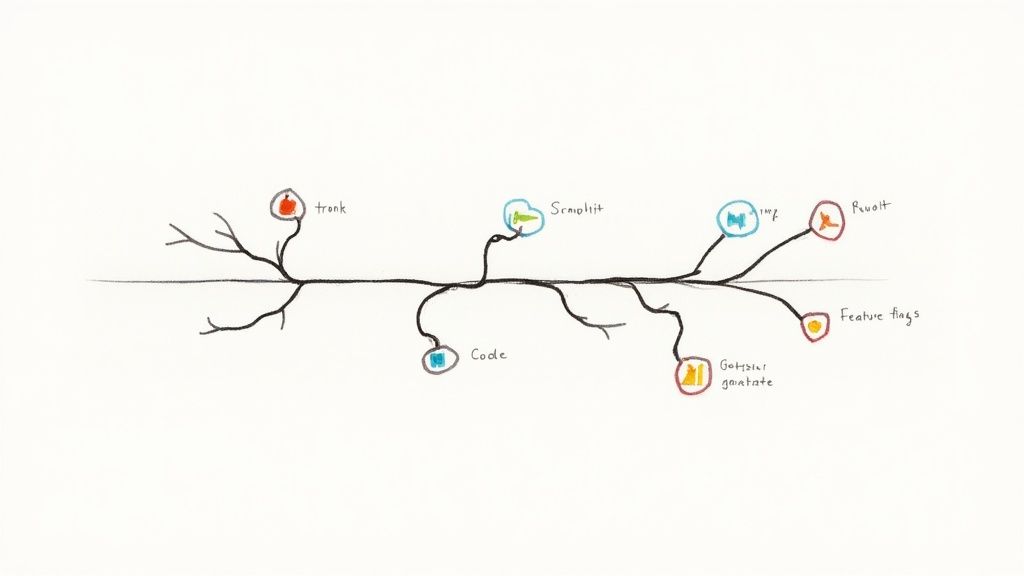

1. Trunk-Based Development

Trunk-Based Development (TBD) is a source control branching model that underpins efficient continuous integration and delivery. It centers on integrating code changes frequently into a single branch, typically named 'trunk' (or 'main' or 'master' in Git). This contrasts sharply with branching strategies that rely on long-lived feature branches, which tend to diverge and cause significant merge conflicts and integration headaches later on. TBD encourages developers to commit small, incremental changes to the trunk, ideally multiple times a day, either directly or through short-lived branches that are merged within a day or so. This minimizes the divergence between developers' working copies and the shared codebase, fostering better collaboration and reducing the risk of complex merges.

This approach necessitates the use of specific techniques to manage in-progress or incomplete features. Feature toggles (also known as feature flags) play a vital role, allowing developers to merge unfinished code into the trunk but keep it deactivated until it's ready for release. This allows continuous integration even while features are still under development. Robust automated testing is another cornerstone of TBD. With developers frequently committing to the trunk, a comprehensive suite of automated tests is essential to catch regressions and ensure the stability of the shared codebase.
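A feature toggle can be as simple as a lookup that guards the new code path. The Python sketch below is a minimal illustration, not a production flag system: the `FeatureFlags` class, the `new_checkout` flag, and the `FLAG_<NAME>` environment-variable override are hypothetical names chosen for the example.

```python
import os


class FeatureFlags:
    """Minimal in-process feature flag store (hypothetical, for illustration)."""

    def __init__(self, defaults: dict[str, bool]):
        self._defaults = dict(defaults)

    def is_enabled(self, name: str) -> bool:
        # An environment variable such as FLAG_NEW_CHECKOUT=1 overrides the default,
        # so a flag can be flipped per environment without a code change.
        override = os.environ.get(f"FLAG_{name.upper()}")
        if override is not None:
            return override == "1"
        return self._defaults.get(name, False)  # unknown flags are treated as off


flags = FeatureFlags({"new_checkout": False})  # merged to trunk, but switched off


def checkout(cart_total: float) -> str:
    if flags.is_enabled("new_checkout"):
        return f"new flow: {cart_total:.2f}"  # unfinished feature, hidden by default
    return f"legacy flow: {cart_total:.2f}"
```

With `FLAG_NEW_CHECKOUT` unset, `checkout(10)` takes the legacy path; setting `FLAG_NEW_CHECKOUT=1` in one environment exposes the unfinished flow there only. Dedicated flag services add targeting, gradual rollout, and audit history on top of this basic idea.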

Successful Implementations: Industry giants like Google, Facebook, and Netflix have demonstrated the effectiveness of TBD at scale. Google maintains a massive monolithic repository with most developers working directly on the trunk. Facebook uses a variation that emphasizes continuous deployment from its main branch. Netflix applies TBD within its microservices architecture.

Why use Trunk-Based Development? TBD's primary benefits lie in streamlined integration, faster delivery cycles, and improved team collaboration. By minimizing merge conflicts, teams can spend less time resolving integration issues and more time building valuable features. The frequent integration inherent in TBD aligns perfectly with CI/CD principles, enabling faster feedback loops and more rapid delivery of changes to production. The collaborative nature of TBD also fosters better communication and knowledge sharing within development teams.

Pros:

- Reduces merge conflicts and integration issues: Small, frequent integrations minimize the chances of large, complex merges.

- Accelerates delivery of changes to production: Enables continuous integration and continuous delivery pipelines.

- Encourages smaller, more manageable changes: Improves code quality and makes it easier to identify and fix bugs.

- Significantly improves team collaboration: Promotes shared code ownership and faster feedback loops.

- Aligns perfectly with CI/CD principles: Forms the foundation for efficient and automated software delivery pipelines.

Cons:

- Requires high-quality automated test coverage: Essential for catching regressions and maintaining trunk stability.

- May be challenging for teams transitioning from long-lived branches: Requires a shift in mindset and development practices.

- Requires additional tooling (feature flags) for managing incomplete features: Adds complexity to the development process.

- Can be difficult to implement in large, legacy codebases initially: Requires careful planning and refactoring.

Tips for Implementing Trunk-Based Development:

- Start with comprehensive automated test suites: Invest in unit, integration, and end-to-end tests before adopting TBD.

- Implement feature flags for incomplete functionality: Enables merging incomplete code without affecting users.

- Set up pre-commit hooks and quality gates: Enforce coding standards and prevent regressions.

- Educate team members on making smaller, incremental changes: A key cultural shift for successful TBD.

- Use pull requests with quick review cycles (hours, not days): Maintain fast feedback loops and prevent long-lived branches.

Trunk-Based Development earns its place as a CI/CD best practice due to its direct impact on development velocity and software quality. By embracing frequent integration and minimizing merge conflicts, teams can achieve faster delivery cycles, improved collaboration, and a more stable codebase. While it requires a commitment to automated testing and feature flag management, the long-term benefits of TBD make it a valuable approach for any team seeking to optimize their CI/CD pipeline.

2. Automated Testing Strategy

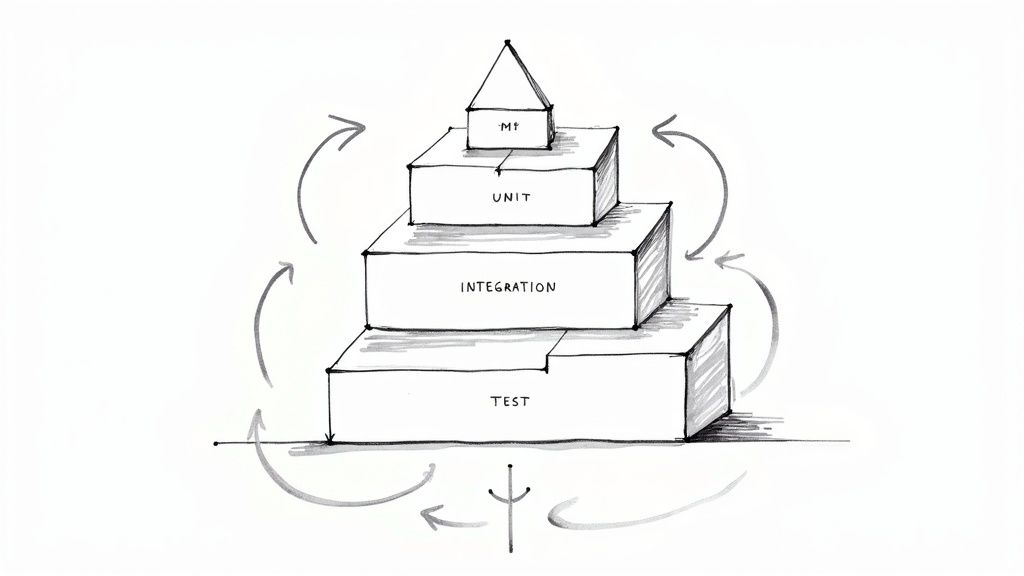

A robust CI/CD pipeline hinges on a comprehensive automated testing strategy. This involves implementing several testing layers (unit, integration, and end-to-end) that execute automatically at different stages of the pipeline. Guided by the test pyramid principle, this multi-layered approach verifies code quality before deployment while balancing thoroughness with speed. The test pyramid advocates a large base of fast, isolated unit tests, a smaller middle layer of integration tests, and a minimal number of slower, more complex end-to-end tests. This structure gives developers rapid feedback while maintaining sufficient coverage of critical functionality.
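To make the bottom two layers of the pyramid concrete, here is a small sketch using Python's built-in `unittest`. The `apply_discount` function and `OrderRepository` class are hypothetical: the unit tests exercise pure logic in isolation, while the single integration test runs that logic together with a real (in-memory SQLite) database.

```python
import sqlite3
import unittest


def apply_discount(total: float, percent: float) -> float:
    """Pure business logic: the ideal target for fast unit tests."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(total * (1 - percent / 100), 2)


class OrderRepository:
    """Thin data-access layer; worth covering with a few integration tests."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.conn.execute("CREATE TABLE IF NOT EXISTS orders (id INTEGER PRIMARY KEY, total REAL)")

    def add(self, total: float) -> int:
        cur = self.conn.execute("INSERT INTO orders (total) VALUES (?)", (total,))
        return cur.lastrowid

    def get_total(self, order_id: int) -> float:
        row = self.conn.execute("SELECT total FROM orders WHERE id = ?", (order_id,)).fetchone()
        return row[0]


class UnitTests(unittest.TestCase):
    # Base of the pyramid: many small tests, each running in microseconds.
    def test_discount(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


class IntegrationTests(unittest.TestCase):
    # Middle layer: fewer tests that exercise real collaborators (here, a database).
    def setUp(self):
        self.conn = sqlite3.connect(":memory:")  # fresh database per test keeps tests isolated
        self.repo = OrderRepository(self.conn)

    def tearDown(self):
        self.conn.close()

    def test_discounted_order_round_trip(self):
        order_id = self.repo.add(apply_discount(50.0, 20))
        self.assertEqual(self.repo.get_total(order_id), 40.0)
```

Saved as a module, this runs with `python -m unittest`. End-to-end tests, the narrow top of the pyramid, would drive the deployed application through its real interface and are deliberately kept few.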

Automated testing is crucial for modern software development practices and deserves its place on this list because it forms the bedrock of continuous integration and continuous delivery. Without a robust automated testing strategy, CI/CD pipelines become vulnerable to undetected errors, leading to unreliable and potentially disastrous deployments. The key features of this approach—multi-layered test coverage, adherence to the test pyramid, automated execution within the CI/CD pipeline, and fast feedback loops—enable teams to deliver high-quality software rapidly and reliably.

Examples of Successful Implementation:

- Spotify: Leverages a comprehensive automated testing suite with a strong focus on unit testing to ensure the stability and quality of their music streaming platform.

- Etsy: Maintains thousands of automated tests integrated into its CI/CD pipeline, running rigorous quality checks before any change reaches production.

- Amazon: Fosters a culture of automated testing across its service teams, using automated quality checks to maintain high test coverage and support its microservices architecture.

Pros:

- Early Defect Detection: Catches bugs early in the development cycle, reducing the cost and effort of fixing them later.

- Reduced Manual Testing Burden: Minimizes the need for time-consuming and error-prone manual testing.

- Increased Deployment Confidence: Provides confidence in the stability and reliability of frequent deployments.

- Safe Refactoring: Enables safe code refactoring and modernization by providing a safety net of automated tests.

- Rapid Feedback: Delivers fast feedback to developers, allowing them to address issues promptly.

Cons:

- Initial Investment: Setting up a comprehensive automated testing framework requires a significant initial investment in development time and resources.

- Maintenance Overhead: Test suites need to be maintained and updated alongside the codebase, which can be burdensome if not managed effectively.

- Flaky Tests: Tests that produce inconsistent results (flaky tests) can erode trust in the CI/CD pipeline and require dedicated effort to identify and fix.

- Resource Intensive End-to-End Tests: End-to-end tests can be slow to run and consume substantial resources, potentially impacting the overall pipeline speed.

Actionable Tips for Implementation:

- Prioritize Quality over Quantity: Focus on writing effective tests that cover critical functionalities rather than aiming for arbitrary code coverage metrics.

- Favor Fast Tests: Prioritize fast-running tests, particularly unit tests, to provide immediate feedback to developers during the development cycle.

- Isolate Tests: Ensure tests are isolated and independent to prevent interdependencies that can lead to cascading failures and difficult debugging.

- Manage Test Data: Implement effective test data management strategies to ensure consistent and reliable test results.

- Address Flaky Tests Promptly: Identify and address flaky tests immediately to maintain confidence in the test suite and the CI/CD pipeline.

- Integrate Security and Performance Testing: Incorporate automated security and performance tests into the pipeline to ensure holistic quality assurance.
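One simple way to act on the "address flaky tests promptly" tip is to rerun a test several times on unchanged code and flag it when the results disagree. The sketch below assumes tests are plain callables that raise `AssertionError` on failure; `classify` and the demo checks are hypothetical, and many CI systems and test-runner plugins offer built-in retry or quarantine features that serve the same purpose.

```python
from typing import Callable


def run_once(check: Callable[[], None]) -> bool:
    """Return True if the check passes, False if it raises AssertionError."""
    try:
        check()
        return True
    except AssertionError:
        return False


def classify(check: Callable[[], None], runs: int = 10) -> str:
    """Rerun the same check on unchanged code and classify it as 'pass', 'fail', or 'flaky'."""
    outcomes = {run_once(check) for _ in range(runs)}
    if outcomes == {True}:
        return "pass"
    if outcomes == {False}:
        return "fail"
    return "flaky"  # mixed results with no code change: quarantine and investigate


# Demo checks (hypothetical stand-ins for real tests).
def stable_check() -> None:
    assert 1 + 1 == 2


def broken_check() -> None:
    assert 1 + 1 == 3


_attempts = {"count": 0}


def flaky_check() -> None:
    # Simulates an order- or timing-dependent test: fails on every other run.
    _attempts["count"] += 1
    assert _attempts["count"] % 2 == 0
```

A consistently failing test is a real regression; only the mixed case is flaky, which is why the classifier distinguishes the two rather than simply retrying until green.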

When and Why to Use This Approach:

An automated testing strategy is essential for any team adopting CI/CD practices. It is especially crucial for projects with frequent releases, complex codebases, and distributed teams. By automating the testing process, you can ensure consistent quality, reduce manual effort, and accelerate the delivery of high-quality software. This approach is fundamental to building a robust and efficient CI/CD pipeline that enables faster iterations, quicker feedback, and higher confidence in deployments. The principles popularized by industry leaders like Mike Cohn (test pyramid), Kent Beck (TDD), Martin Fowler, and the engineering practices of Google and Microsoft further reinforce the importance and effectiveness of automated testing in modern software development.

3. Infrastructure as Code (IaC)

Infrastructure as Code (IaC) is a crucial practice for modern CI/CD pipelines that changes how teams manage and provision infrastructure. Instead of manually configuring servers, networks, and databases, IaC describes them in machine-readable definition files. This lets you treat your infrastructure like your application code: version-controlled, testable, and automatically deployable. The result is consistency across all environments, from development to production, eliminating the dreaded "works on my machine" scenario.

How IaC Works:

IaC follows either a declarative or an imperative approach. Declarative IaC defines the desired state of your infrastructure and leaves the tool to work out how to reach it. Imperative IaC, on the other hand, defines the specific steps required to reach the desired state. Either way, these definitions are stored in version-controlled files, which makes rollback and auditing straightforward. Popular IaC tools offer features such as automated provisioning and configuration, idempotent operations (applying the same definition repeatedly leaves the infrastructure in the same state as applying it once), environment templating and reuse, and policy as code for compliance and governance.
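To make "declarative" and "idempotent" concrete, here is a toy reconciler in Python. It is not how any particular tool works internally; it assumes infrastructure can be modeled as a dictionary of named resources, and the resource names are made up. Real tools such as Terraform compare the desired configuration against recorded state and call provider APIs to make the changes.

```python
from typing import Any

Resources = dict[str, dict[str, Any]]


def plan(desired: Resources, current: Resources) -> list[tuple[str, str]]:
    """Compute the actions needed to move `current` to `desired` (nothing is changed yet)."""
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in current:
            actions.append(("create", name))
        elif current[name] != spec:
            actions.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


def apply(desired: Resources, current: Resources) -> list[tuple[str, str]]:
    """Execute the plan against `current` (mutated in place) and return what was done."""
    actions = plan(desired, current)
    for action, name in actions:
        if action == "delete":
            del current[name]
        else:
            current[name] = dict(desired[name])  # create or update to match the definition
    return actions


# Hypothetical desired state (what a definition file would declare) and live state.
desired_state: Resources = {
    "web-server": {"type": "vm", "size": "small", "count": 2},
    "app-db": {"type": "database", "engine": "postgres"},
}
live_state: Resources = {
    "web-server": {"type": "vm", "size": "small", "count": 1},
    "old-cache": {"type": "cache"},
}
```

The first `apply(desired_state, live_state)` returns `[('create', 'app-db'), ('update', 'web-server'), ('delete', 'old-cache')]`; calling it again returns `[]` because the live state already matches the definition. That empty second run is idempotency in action, and the same compare-before-change step is what drift detection builds on.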

Why IaC Belongs in Your CI/CD Best Practices:

IaC is essential for building a robust and efficient CI/CD pipeline. By automating infrastructure deployment, you streamline the entire software delivery process, reducing errors and accelerating time to market. The ability to test infrastructure changes alongside application code changes ensures environment consistency and reduces the risk of deployment failures.

Successful Implementations:

Numerous companies have demonstrated the benefits of IaC:

- Netflix: Uses Spinnaker with infrastructure templates to manage their vast and complex cloud deployments, enabling rapid scaling and efficient resource utilization.

- Capital One: Leverages Terraform for consistent cloud infrastructure across different teams, promoting collaboration and standardization.

- HashiCorp: Uses its own product, Terraform, to manage its infrastructure, showcasing the tool's power and flexibility.

Pros and Cons of IaC:

Pros:

- Eliminates environment inconsistencies: Prevents "works on my machine" problems by keeping environments consistent.

- Enables rapid scaling and disaster recovery: Automates infrastructure provisioning for scaling and recreating environments quickly in case of failures.

- Creates self-documenting infrastructure: Configuration files serve as documentation, providing a clear view of the infrastructure setup.

- Allows infrastructure testing before deployment: Enables testing of infrastructure changes, reducing the risk of deployment issues.

- Provides audit trail for compliance purposes: Version control provides a complete history of changes for auditing and compliance.

Cons:

- Learning curve for teams new to IaC concepts: Requires teams to learn new tools and concepts.

- Requires disciplined approach to security (secrets management): Securely managing secrets and credentials is critical.

- Can lead to over-engineering of infrastructure: Overly complex IaC setups can become difficult to manage.

- Complex state management in some tools: Managing the state of infrastructure can be challenging with certain tools.

Actionable Tips for Implementing IaC:

- Start small: Begin with simple, well-understood components of your infrastructure.

- Version control: Keep your infrastructure code in the same repository as your application code whenever possible to streamline the development process.

- Security first: Implement proper secret management from the very beginning.

- Modularity and Reusability: Use modules and templates to promote reuse and reduce code duplication.

- Testing: Test your infrastructure code with tools like Terratest or Kitchen-Terraform.

- Drift Detection: Implement drift detection to identify any manual changes made to the infrastructure outside of the IaC process.

By following these best practices and adopting IaC, your organization can significantly improve its CI/CD pipeline, enabling faster, more reliable, and more efficient software delivery.

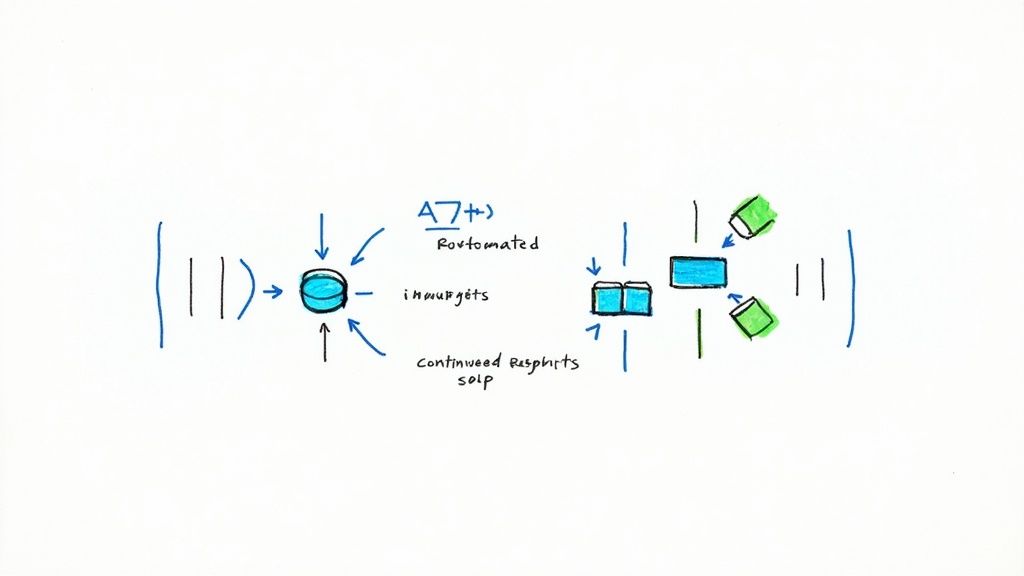

4. Deployment Automation with Immutable Infrastructure

Deployment Automation with Immutable Infrastructure represents a significant advancement in CI/CD practices, focusing on rebuilding entire environments for each deployment rather than updating existing components in place. This approach treats infrastructure as code, where servers and containers are replaced with new, pre-configured instances for every release. This eliminates the risk of configuration drift – where server environments gradually diverge over time – and promotes consistent, predictable deployments.

This method offers several key features: complete deployment automation without manual intervention, immutable server and container instances, infrastructure provisioning integrated into the deployment process, versioned deployments with easy rollback, and support for progressive deployment strategies such as blue-green and canary releases.

Companies like Amazon, Netflix, and Etsy have implemented immutable infrastructure successfully, demonstrating its effectiveness at scale. Amazon, for example, uses immutable deployments to push to production thousands of times a day. Netflix's Spinnaker platform, a popular open-source continuous delivery tool, is built around immutable infrastructure principles. Etsy also rebuilds environments with every deployment to ensure consistency.

This practice is especially valuable for organizations seeking to enhance reliability, improve security, and simplify the deployment process. By eliminating snowflake servers and keeping environments consistent, immutable infrastructure increases confidence in deployments. Rollback is easy because every deployment is versioned and the previous version remains available, which significantly reduces the risk and impact of faulty releases. Security improves too: instead of patching running servers, you rebuild images with the latest patches and replace the old instances, so fixes reach production through the normal deployment path.
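The blue-green pattern with immutable releases can be modeled in a few lines of Python. This is an in-memory sketch under stated assumptions: `Release`, `BlueGreenRouter`, the image names, and the `healthy` callback are hypothetical stand-ins for real image builds, a load balancer, and health checks.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)  # frozen: a release is never modified after it is built
class Release:
    version: str
    image: str


class BlueGreenRouter:
    """Routes all traffic to one live release; the previous release stays on standby."""

    def __init__(self, initial: Release):
        self.live: Release = initial
        self.standby: Optional[Release] = None

    def deploy(self, candidate: Release, healthy: Callable[[Release], bool]) -> bool:
        # The candidate runs alongside the live release and is checked
        # before any user traffic is switched to it.
        if not healthy(candidate):
            return False  # candidate is discarded; live traffic never moved
        self.standby, self.live = self.live, candidate
        return True

    def rollback(self) -> None:
        # Rolling back is just switching traffic to the untouched previous release.
        if self.standby is None:
            raise RuntimeError("no previous release to roll back to")
        self.live, self.standby = self.standby, self.live
```

Nothing is ever updated in place: a new version always means a new `Release`, and a failed health check leaves the live release exactly as it was. The database caveat listed among the cons still applies, since both releases typically share the same data store.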

Pros:

- Eliminates configuration drift and snowflake servers

- Enables consistent, repeatable deployments

- Simplifies rollbacks (switch to previous version)

- Improves security by rebuilding from freshly patched images

- Provides confidence through identical environments

Cons:

- Requires significant upfront automation investment

- May increase cloud resource costs initially

- Requires mature monitoring for deployment validation

- Database changes need special handling

Tips for Implementing Immutable Infrastructure:

- Containerize applications: Containers provide a consistent runtime environment across different stages of the deployment pipeline.

- Implement proper state management: Carefully manage the state of stateful components like databases.

- Use image baking pipelines: Create pre-configured, ready-to-deploy instances with all necessary dependencies and configurations.

- Develop comprehensive health checks: Ensure robust health checks are in place to validate deployments and trigger automated rollbacks if necessary.

- Start small: Begin with staging environments before implementing in production to gain experience and refine your processes.

- Automate database schema migrations carefully: Database migrations require special attention and should be automated as part of the deployment process.
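Automated migrations usually follow a simple pattern: an ordered list of versioned changes, with the applied versions recorded in the database so each change runs exactly once. The sketch below shows that core idea with Python's built-in `sqlite3`; the schema is hypothetical, and dedicated tools such as Flyway, Liquibase, or Alembic handle far more cases.

```python
import sqlite3

# Ordered, append-only list of migrations (hypothetical schema).
MIGRATIONS: list[tuple[int, str]] = [
    (1, "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)"),
    # Additive change: code from the previous release keeps working during rollout.
    (2, "ALTER TABLE users ADD COLUMN display_name TEXT"),
]


def migrate(conn: sqlite3.Connection) -> list[int]:
    """Apply pending migrations in order and return the versions applied in this run.

    Expects a connection opened with isolation_level=None so that the explicit
    BEGIN/COMMIT statements below control the transactions.
    """
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version INTEGER PRIMARY KEY)")
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_version")}
    newly_applied = []
    for version, sql in MIGRATIONS:
        if version in applied:
            continue  # already applied, so running migrate() on every deploy is safe
        conn.execute("BEGIN")
        try:
            conn.execute(sql)
            conn.execute("INSERT INTO schema_version (version) VALUES (?)", (version,))
            conn.execute("COMMIT")  # schema change and version record land together
        except sqlite3.Error:
            conn.execute("ROLLBACK")  # SQLite DDL is transactional, so nothing is half-applied
            raise
        newly_applied.append(version)
    return newly_applied
```

Calling `migrate(sqlite3.connect("app.db", isolation_level=None))` at deploy time applies only what is missing. Keeping changes additive, as migration 2 does, matters with immutable deployments because old and new application versions may briefly run against the same schema.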

Deployment Automation with Immutable Infrastructure deserves a place in any CI/CD best practices list due to its ability to drastically improve reliability and consistency in software delivery. While the upfront investment in automation can be significant, the long-term benefits of reduced risk, improved security, and simplified deployments make it a worthwhile strategy for organizations looking to modernize their software development lifecycle. It is particularly relevant for development, DevOps, QA, and platform engineering teams, from tech startups to enterprise IT, that need to deliver high-quality software rapidly and reliably.

5. Comprehensive Monitoring and Observability

A robust CI/CD pipeline isn't just about automating deployments; it's about ensuring those deployments are successful and contribute positively to the user experience. This is where comprehensive monitoring and observability become critical. This practice involves instrumenting your applications and infrastructure to collect telemetry data – metrics, logs, and traces – providing deep insights into the behavior of your systems. This data empowers teams to detect issues rapidly, understand their root cause, and make informed decisions regarding deployments and overall system health.

How it Works:

Comprehensive monitoring and observability go beyond simply checking if a server is up. It's about understanding the why behind any performance deviations or errors. Metrics provide quantitative data like CPU usage, latency, and error rates. Logs offer detailed records of events occurring within your applications and infrastructure. Traces illustrate the flow of requests across distributed systems, allowing you to pinpoint bottlenecks and latency issues. By correlating these three pillars of observability, you gain a complete picture of your system's health and performance.
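Correlating the three pillars usually depends on a shared identifier that appears in every signal. As a minimal sketch, the code below uses Python's standard `logging` module with a JSON formatter that attaches a trace ID to each log line, so a request's logs can be joined with its trace. The `trace_id` field name and the locally generated ID are assumptions; in practice a standard such as OpenTelemetry propagates trace context between services.

```python
import io
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object so log pipelines can index fields."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Supplied via `extra=`; the shared ID lets this line be joined with its trace.
            "trace_id": getattr(record, "trace_id", None),
        })


def make_logger(stream: io.TextIOBase) -> logging.Logger:
    logger = logging.getLogger("checkout")
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate lines via the root logger
    return logger


def handle_request(logger: logging.Logger) -> str:
    # Hypothetical: a real service would read the ID from the incoming trace context.
    trace_id = uuid.uuid4().hex
    logger.info("payment authorized", extra={"trace_id": trace_id})
    return trace_id
```

Each call emits one line such as `{"level": "INFO", "logger": "checkout", "message": "payment authorized", "trace_id": "..."}`. Because the fields are structured, a log backend can filter by `trace_id` instead of relying on free-text search.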

Examples of Successful Implementation:

- Google's SRE practices: Google's Site Reliability Engineering teams heavily rely on monitoring and observability, using concepts like error budgets to define acceptable levels of service disruption and guide deployment decisions.

- LinkedIn: Uses real-time monitoring to validate deployments and quickly roll back if any issues are detected, minimizing user impact.

- Datadog: Uses its own suite of observability tools within its CI/CD processes, demonstrating the value of "dogfooding" in ensuring a high-quality product.

When and Why to Use This Approach:

Comprehensive monitoring and observability are essential for any team practicing CI/CD, especially teams operating at scale or running complex distributed systems. This approach is particularly valuable when:

- Deploying frequently: Rapid deployments necessitate robust monitoring to quickly detect and address any issues introduced by changes.

- Managing microservices: Distributed tracing becomes crucial for understanding the complex interactions between services in a microservices architecture.

- Maintaining high availability: Proactive monitoring and alerting help prevent outages and minimize downtime.

- Optimizing performance: Identifying bottlenecks and performance issues relies on detailed metrics and tracing data.

Features and Benefits:

- Real-time monitoring of pipeline execution metrics: Understand the performance and efficiency of your CI/CD pipeline itself.

- Application performance monitoring (APM): Gain insights into application performance and identify areas for optimization.

- Distributed tracing across services: Track requests as they flow through your system, pinpointing performance bottlenecks.

- Centralized logging with structured data: Aggregate logs from various sources and use structured data for efficient querying and analysis.

- Alerting and notification systems: Receive timely notifications about critical issues and anomalies.

- SLO/SLI monitoring for service health: Track service level objectives (SLOs) and indicators (SLIs) to ensure service reliability.

- User experience and business metrics tracking: Connect system performance to business outcomes and user satisfaction.
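SLOs become actionable through the error budget: the amount of failure the SLO still permits. For example, a 99.9% availability SLO over a 30-day window allows 0.1% downtime, about 43.2 minutes (0.001 × 30 × 24 × 60). The sketch below computes the same idea for a request-based SLI; the function names and the "pause deployments once the budget is spent" policy are illustrative assumptions in the spirit of the error-budget practice mentioned earlier.

```python
def error_budget_remaining(slo: float, total_requests: int, failed_requests: int) -> float:
    """Fraction of the error budget still unspent (negative once the SLO is breached).

    slo: target success ratio, e.g. 0.999 for "99.9% of requests succeed".
    """
    if total_requests == 0:
        return 1.0  # no traffic yet, so nothing has been spent
    allowed_failures = (1 - slo) * total_requests
    return 1 - failed_requests / allowed_failures


def deployments_allowed(slo: float, total_requests: int, failed_requests: int) -> bool:
    # Illustrative policy: pause risky deployments once the budget is exhausted.
    return error_budget_remaining(slo, total_requests, failed_requests) > 0
```

With a 99.9% SLO and 1,000,000 requests, the budget is about 1,000 failed requests; 250 failures leave roughly 75% of it, while 1,200 failures put the service over budget and `deployments_allowed` returns `False`.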

Pros:

- Enables rapid detection and resolution of issues

- Provides feedback for deployment success/failure

- Facilitates data-driven deployment decisions

- Improves mean time to recovery (MTTR)

- Creates confidence for frequent deployments

Cons:

- Can generate overwhelming data volume

- Requires thoughtful implementation to avoid alert fatigue

- May require specialized skills for advanced observability

- Additional infrastructure costs for monitoring systems

Actionable Tips:

- Define clear SLIs/SLOs for each service: Establish clear performance targets to guide monitoring and alerting efforts.

- Instrument code with OpenTelemetry or similar standards: Standardize instrumentation for consistent and portable telemetry data.

- Implement synthetic monitoring to simulate user interactions: Proactively monitor critical user flows and detect issues before real users are impacted.

- Set up deployment validation via metrics: Automate deployment validation by checking key metrics after deployment.

- Create dashboards specifically for deployment events: Visualize deployment impact on system behavior.

- Correlate deployment events with system behavior changes: Identify correlations between deployments and performance anomalies.

- Establish baselines to detect anomalies: Use historical data to establish baseline performance and identify deviations.
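Deployment validation via metrics can start as a simple comparison between a pre-deployment baseline and the period right after a release. The sketch below flags a deployment whose mean error rate exceeds the baseline by more than three standard deviations, with a minimum absolute increase to ignore tiny, noisy shifts. `should_roll_back`, both thresholds, and the sample numbers are hypothetical; automated canary analysis tools apply more rigorous statistics.

```python
from statistics import mean, stdev


def should_roll_back(baseline: list[float], post_deploy: list[float],
                     sigmas: float = 3.0, min_abs_increase: float = 0.005) -> bool:
    """Flag a deployment whose error rate is anomalous relative to its baseline.

    baseline: error rates (0..1) sampled before the deploy, e.g. one per minute.
    post_deploy: error rates sampled after the deploy.
    """
    mu = mean(baseline)
    sd = stdev(baseline) if len(baseline) > 1 else 0.0
    # The allowed increase is at least min_abs_increase, so a very quiet
    # baseline does not make the check hypersensitive.
    threshold = mu + max(sigmas * sd, min_abs_increase)
    return mean(post_deploy) > threshold
```

For a baseline hovering around 1% errors, a post-deployment window averaging 1.1% passes, while one averaging 4.5% crosses the threshold and would trigger an automated rollback.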

Popularized By:

- Google SRE team

- Charity Majors (observability advocate)

- Ben Sigelman (LightStep founder)

- Grafana Labs

- New Relic

- Datadog

This comprehensive approach to monitoring and observability deserves its place in the CI/CD best practices list because it bridges the gap between automated deployments and reliable, high-performing systems. By investing in robust observability, organizations can ensure that their CI/CD pipelines deliver not just speed, but also stability and a positive user experience.

6. Pipeline as Code

Pipeline as Code (PaC) is a fundamental practice in modern CI/CD that involves defining your entire build and deployment pipeline through code, much like you write code for your application. This shifts away from the traditional click-and-configure approach of managing pipelines through UI tools, towards a more robust and automated method using configuration files. These files, often written in YAML, JSON, or a domain-specific language (DSL), are stored alongside your application code in your version control system. This allows you to manage your pipeline with the same rigor and discipline as your software development process, leveraging version control, code review, and automated testing.

This approach brings numerous benefits. Pipelines defined in code become self-documenting, easily reproducible, and readily shareable across teams. Version control integration lets you track changes, roll back to previous versions, and collaborate on pipeline improvements through pull requests and code reviews. Automated testing of pipeline definitions improves reliability by catching configuration errors before they impact production.
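The core idea, a pipeline described as data that lives in the repository and a runner that executes it, fits in a short Python sketch. The stage names are hypothetical, and each command simply runs `sys.executable -c ...` so the example is portable; real systems such as GitHub Actions read an equivalent definition from YAML and run real build and test commands.

```python
import subprocess
import sys

# The pipeline definition is plain data, so it can live in the repository, be
# reviewed in pull requests, and be diffed like any other code (hypothetical stages).
PIPELINE = [
    {"name": "lint", "run": [sys.executable, "-c", "print('lint ok')"]},
    {"name": "test", "run": [sys.executable, "-c", "print('tests ok')"]},
    {"name": "package", "run": [sys.executable, "-c", "print('artifact built')"]},
]


def run_pipeline(stages: list[dict]) -> list[tuple[str, str]]:
    """Run stages in order, stopping at the first failure (fail fast)."""
    results = []
    for stage in stages:
        proc = subprocess.run(stage["run"], capture_output=True, text=True)
        status = "passed" if proc.returncode == 0 else "failed"
        results.append((stage["name"], status))
        if status == "failed":
            break  # later stages (e.g. deploy) never run after a failure
    return results
```

`run_pipeline(PIPELINE)` returns `[('lint', 'passed'), ('test', 'passed'), ('package', 'passed')]`. Because the definition is ordinary code, a change such as reordering stages shows up in a diff and goes through review like any other change.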

Features of Pipeline as Code:

- Declarative or Scripted Pipeline Definitions: Choose between declarative syntax for simpler pipelines or scripted approaches for more complex, dynamic control.

- Version-Controlled Pipeline Configurations: Manage your pipeline code in your version control system (e.g., Git) for full history and traceability.

- Pipeline Testing Capabilities: Test and validate your pipeline logic before deployment to ensure reliability.

- Pipeline Templates and Shared Libraries: Promote reusability and standardization across projects.

- Multi-branch Pipeline Support: Automate CI/CD workflows for feature branches, development branches, and releases.

- Self-documenting Build and Deployment Processes: The code itself serves as documentation, clearly defining each step.

Pros:

- Enables pipeline versioning and history tracking: Trace every change to your pipeline for easier debugging and rollback.

- Facilitates code review for pipeline changes: Improve pipeline quality and catch errors through peer review.

- Provides consistent pipeline execution: Eliminate inconsistencies caused by manual configuration.

- Allows pipeline testing before implementation: Validate changes in a safe environment before they reach production.

- Simplifies onboarding with well-documented processes: New team members can easily understand and contribute to the pipeline.

Cons:

- Learning curve for pipeline definition languages: Requires familiarity with YAML, JSON, or other DSLs.

- Can lead to complex pipeline definitions for sophisticated processes: Managing intricate pipelines requires careful planning and organization.

- Requires discipline in managing pipeline code: Treat your pipeline code with the same care as your application code.

- May need separate testing for pipeline changes: Ensure that pipeline changes don't negatively impact your application.

Examples of Successful Implementation:

- GitHub Actions: Uses YAML-based workflow files within repositories to define CI/CD pipelines.

- Netflix: Employs Spinnaker for complex deployment pipelines as code, managing massive deployments at scale.

- ThoughtWorks: Uses GoCD for implementing pipelines as code, delivering continuous delivery solutions to their clients.

Tips for Implementing Pipeline as Code:

- Keep pipeline definitions simple and modular: Break down complex pipelines into smaller, reusable components.

- Create reusable pipeline components with parameters: Increase flexibility and reduce duplication.

- Implement pipeline testing for critical paths: Prioritize testing for crucial stages of your pipeline.

- Store pipeline code alongside application code: Maintain context and versioning consistency.

- Document pipeline behavior and prerequisites: Improve understandability and maintainability.

- Use linting for pipeline code validation: Enforce coding standards and prevent errors.
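A pipeline linter can be as simple as a function that validates the definition before any stage runs. This sketch assumes a hypothetical schema (a list of stages, each with `name`, `run`, and an optional `needs` list); real CI systems ship their own validators and schemas, so treat these rules as examples of the kind of checks worth automating.

```python
def lint_pipeline(stages: list[dict]) -> list[str]:
    """Return human-readable problems found in a pipeline definition (empty if clean)."""
    problems = []
    names = [stage.get("name") for stage in stages]
    seen: set[str] = set()
    for i, stage in enumerate(stages):
        for key in ("name", "run"):
            if key not in stage:
                problems.append(f"stage {i}: missing required key '{key}'")
        name = stage.get("name")
        if name is not None:
            if name in seen:
                problems.append(f"stage '{name}': duplicate name")
            seen.add(name)
        for dep in stage.get("needs", []):
            if dep not in names:
                problems.append(f"stage '{name}': needs unknown stage '{dep}'")
            elif names.index(dep) >= i:
                # Requiring dependencies to appear earlier also rules out cycles.
                problems.append(f"stage '{name}': needs '{dep}', which is not defined earlier")
    return problems
```

Running the linter as the first step of every pipeline change, or in a pre-commit hook, turns a broken definition into a readable error message instead of a half-executed pipeline.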

Pipeline as Code deserves a place in any best practices list because it is a cornerstone of modern DevOps. By treating your pipeline as a first-class citizen in your software development lifecycle, you gain the advantages of increased automation, improved collaboration, and enhanced reliability. This ultimately leads to faster delivery cycles, higher-quality software, and a more efficient development process.

7. Shift-Left Security (DevSecOps)

Shift-Left Security, often referred to as DevSecOps, fundamentally changes how we approach security in software development. Instead of treating security as a separate phase tacked on at the end, DevSecOps integrates security practices and testing throughout the entire Continuous Integration/Continuous Delivery (CI/CD) pipeline. This makes security everyone's responsibility, from developers and operations teams to security specialists, fostering a culture of shared ownership. By automating security checks and integrating them into the development workflow, vulnerabilities are identified and addressed much earlier in the cycle, when they are significantly less expensive and complex to fix.

This approach works by embedding various security tools and practices into each stage of the CI/CD pipeline. Automated security scanning tools are triggered automatically as code is committed, built, and deployed. This might include static application security testing (SAST), dynamic application security testing (DAST), dependency vulnerability scanning, and infrastructure security validation. Pre-commit hooks can even prevent developers from committing code with known security flaws, providing immediate feedback and preventing vulnerabilities from entering the codebase in the first place.
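As an illustration of a pre-commit security check, the sketch below scans file contents for strings that look like secrets and returns a non-zero exit code when it finds any, which is how Git hooks block a commit. The three regular expressions are simplified examples, not a complete rule set; dedicated scanners such as gitleaks or detect-secrets maintain much larger, tuned rules. Wiring the check into Git (for example via a `.git/hooks/pre-commit` script that passes in the staged files) is left out.

```python
import re

# Illustrative rules only; real scanners ship far larger, tuned rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_password": re.compile(r"""(?i)password\w*\s*[:=]\s*["'][^"']{4,}["']"""),
}


def scan_text(path: str, text: str) -> list[str]:
    """Return findings as 'path:line: rule' strings for every suspicious line."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append(f"{path}:{lineno}: {rule}")
    return findings


def pre_commit_check(files: dict[str, str]) -> int:
    """Exit code for a hook: non-zero blocks the commit when anything is found."""
    findings = [f for path, text in files.items() for f in scan_text(path, text)]
    for finding in findings:
        print(f"possible secret: {finding}")
    return 1 if findings else 0
```

Reading a password from the environment (`password = os.environ['DB_PASSWORD']`) passes, while a literal such as `DB_PASSWORD = "hunter22"` is flagged. Expect some false positives; tuning rules and allow-lists is part of the ongoing maintenance noted below.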

Successful implementations of DevSecOps can be seen across various industries. Microsoft, for example, performs automated security scanning with every code check-in, ensuring that potential issues are caught early. Capital One integrates security validation at multiple stages of their pipeline, providing layered protection. Netflix utilizes both proprietary and open-source tools to automate security checks throughout their CI/CD process.

Why Shift-Left Belongs in Your CI/CD Best Practices:

Shift-Left Security earns its place on this list due to its proactive nature and the tangible benefits it delivers. By catching vulnerabilities early, it dramatically reduces the cost and effort of remediation. Integrating security within the development process also eliminates security bottlenecks that can delay releases, fostering a more streamlined and efficient delivery pipeline. Moreover, DevSecOps fosters a culture of security awareness amongst developers, empowering them to write more secure code from the outset.

Features of Shift-Left Security:

- Automated security scanning in CI pipelines: Automatically triggers security scans during various stages of the pipeline.

- Pre-commit hooks for security checks: Provides instant feedback to developers before code is even committed.

- Infrastructure security validation: Ensures the security of the underlying infrastructure.

- Dependency vulnerability scanning: Identifies vulnerabilities in third-party libraries and components.

- Compliance validation as code: Automates compliance checks as part of the pipeline.

- Container security scanning: Specifically addresses security concerns related to containerized applications.

- Secrets management automation: Securely manages sensitive information such as API keys and passwords.
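Dependency vulnerability scanning boils down to comparing the versions you pin against advisory data, then gating the pipeline on severity. The sketch below does this for `name==version` requirement lines. The advisory entries are placeholder data, not real vulnerabilities, and the version parsing assumes plain numeric versions; real scanners such as pip-audit or OWASP Dependency-Check query maintained advisory databases and handle full versioning schemes.

```python
# Hypothetical advisory feed: package -> list of (first_fixed_version, advisory_id, severity).
# Placeholder data for illustration only; these are not real vulnerabilities.
ADVISORIES = {
    "examplelib": [("2.4.1", "EXAMPLE-2024-0001", "high")],
    "otherlib": [("1.0.5", "EXAMPLE-2024-0002", "low")],
}


def parse_version(v: str) -> tuple[int, ...]:
    # Assumes plain numeric versions like "2.4.0".
    return tuple(int(part) for part in v.split("."))


def parse_pins(requirements: str) -> dict[str, str]:
    pins = {}
    for line in requirements.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if "==" in line:
            name, version = line.split("==", 1)
            pins[name.strip().lower()] = version.strip()
    return pins


def scan_dependencies(requirements: str) -> list[tuple[str, str, str, str]]:
    """Return (package, pinned_version, advisory_id, severity) for each vulnerable pin."""
    findings = []
    for name, version in sorted(parse_pins(requirements).items()):
        for fixed_in, advisory_id, severity in ADVISORIES.get(name, []):
            # Simplification: every version below the fixed one is treated as affected.
            if parse_version(version) < parse_version(fixed_in):
                findings.append((name, version, advisory_id, severity))
    return findings


def gate(findings: list[tuple[str, str, str, str]],
         blocking: tuple[str, ...] = ("critical", "high")) -> bool:
    """Return True if the pipeline may proceed, i.e. no finding has a blocking severity."""
    return not any(finding[3] in blocking for finding in findings)
```

For `examplelib==2.3.9` the scan reports the high-severity placeholder advisory and `gate` returns `False`, failing the build; `otherlib==1.0.5` is already at the fixed version and passes. The `blocking` tuple is where per-environment security gates could differ.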

Pros:

- Identifies security issues early, reducing remediation costs.

- Reduces security-related bottlenecks in the delivery process.

- Increases security awareness among developers.

- Creates audit trails for compliance requirements.

- Enables frequent releases without compromising security.

Cons:

- Initial setup can require significant security expertise.

- Potential for false positives that require tuning and refinement.

- May slow down pipelines if not properly optimized.

- Requires ongoing maintenance of security tools and processes.

Actionable Tips for Implementing Shift-Left Security:

- Start small: Begin with basic static application security testing (SAST) and dependency scanning, then gradually expand your security toolset.

- Implement security gates: Define appropriate security checks for each environment (development, testing, production).

- Foster security champions: Identify and train individuals within development teams to advocate for security best practices.

- Leverage pre-commit hooks: Provide quick security feedback to developers during the coding process.

- Automate compliance checks: Integrate automated compliance validation into your pipeline, especially for regulated industries.

- Prioritize secrets management: Implement robust secrets management solutions from the start.

- Focus on actionable findings: Concentrate on security findings that provide clear remediation guidance.
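The "security gates" and "actionable findings" tips can be combined into a small policy check that runs after your scanners. The sketch below assumes scanner output has already been normalized into `Finding` records; the severity scale, the per-environment thresholds, and the rule IDs in the demo are hypothetical and should be tuned to your own risk tolerance.

```python
"""Per-environment security gate (hypothetical severity scale and thresholds)."""
from dataclasses import dataclass

SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}

# Hypothetical policy: the lowest severity that blocks promotion into each environment.
GATE_THRESHOLDS = {"development": "critical", "testing": "high", "production": "medium"}


@dataclass(frozen=True)
class Finding:
    rule_id: str
    severity: str  # must be a key of SEVERITY_RANK
    remediation: str = ""  # fix guidance from the scanner, if any


def blocking_findings(findings: list[Finding], environment: str) -> list[Finding]:
    """Return findings at or above the environment's blocking severity."""
    threshold = SEVERITY_RANK[GATE_THRESHOLDS[environment]]
    return [f for f in findings if SEVERITY_RANK[f.severity] >= threshold]


def gate_passes(findings: list[Finding], environment: str) -> bool:
    """True when nothing blocks promotion into the given environment."""
    return not blocking_findings(findings, environment)


def report(findings: list[Finding], environment: str) -> list[str]:
    """Format blocking findings, listing those with remediation guidance first."""
    blocking = sorted(blocking_findings(findings, environment), key=lambda f: not f.remediation)
    return [f"{f.severity.upper()} {f.rule_id}: {f.remediation or 'no fix guidance'}" for f in blocking]


if __name__ == "__main__":
    findings = [
        Finding("hardcoded-password", "high", "move the value into the secrets manager"),
        Finding("weak-hash", "medium"),
    ]
    for env in GATE_THRESHOLDS:
        print(env, "PASS" if gate_passes(findings, env) else "FAIL")
    # development PASS, testing FAIL, production FAIL
```

Tightening the threshold as code moves toward production lets developers iterate freely early on while still stopping serious issues before release, and sorting fixable findings first keeps the report focused on what developers can act on immediately.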

By embracing Shift-Left Security and integrating it into your CI/CD pipeline, you can create a more secure development lifecycle, reduce risks, and deliver high-quality software with confidence.

8. Environment Parity with Containerization

Environment parity, the concept of keeping development, testing, and production environments as similar as possible, is crucial for reliable and predictable software deployments. Containerization offers a robust solution to achieve this, significantly minimizing the dreaded "it works on my machine" scenario. This practice leverages container technologies like Docker to package applications and their dependencies into isolated, portable units. These containers ensure consistent runtime behavior regardless of the underlying infrastructure, leading to increased deployment reliability and reduced debugging time.

How it Works:

Containerization encapsulates an application and its dependencies (libraries, runtime, system tools, code, and configuration files) within a container image. This image serves as a blueprint for creating container instances that run the application. Because the image bundles everything the application needs apart from the host kernel, which containers share, it behaves consistently across different environments. Declarative configuration files, such as Docker Compose files or Kubernetes manifests, define how these containers should be deployed and managed. This allows for automated and repeatable deployments, further enhancing consistency.
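To illustrate "build once, deploy everywhere", the Python sketch below renders a Kubernetes Deployment for each environment from a single image reference pinned by digest; only the replica count and the injected environment variables differ. The registry path, all-zero digest, namespaces, and settings are hypothetical placeholders. The output is JSON, which `kubectl apply -f` accepts as readily as YAML; many teams use Helm or Kustomize for the same job.

```python
"""Render one Kubernetes Deployment per environment from a single image reference."""
import json

# Hypothetical image, pinned by digest so every environment runs the exact same build.
IMAGE = "registry.example.com/shop/api@sha256:" + "0" * 64

# Hypothetical per-environment settings; everything else in the manifest is shared.
ENVIRONMENTS = {
    "staging": {"replicas": 1, "env": {"LOG_LEVEL": "debug", "DB_HOST": "db.staging.internal"}},
    "production": {"replicas": 3, "env": {"LOG_LEVEL": "info", "DB_HOST": "db.prod.internal"}},
}


def render_deployment(env_name: str) -> dict:
    """Build a Deployment manifest (as a dict) for one environment."""
    settings = ENVIRONMENTS[env_name]
    labels = {"app": "api", "env": env_name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "api", "namespace": env_name, "labels": labels},
        "spec": {
            "replicas": settings["replicas"],
            "selector": {"matchLabels": labels},  # must match the pod template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "api",
                        "image": IMAGE,  # identical artifact in every environment
                        "env": [{"name": k, "value": v} for k, v in settings["env"].items()],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    for name in ENVIRONMENTS:
        print(json.dumps(render_deployment(name), indent=2))
```

Pinning by digest rather than a mutable tag such as `latest` guarantees that staging and production pull exactly the same image.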

Features and Benefits:

- Containerized applications and dependencies: Bundling everything together ensures consistent execution across various stages.

- Declarative environment specifications: Tools like Docker Compose and Kubernetes allow for defining and managing environments through code, promoting reproducibility.

- Immutable application artifacts: Container images are typically built once and deployed across different environments, preventing inconsistencies from manual changes.

- Environment-specific configuration injection: While the core application remains the same, environment-specific configurations (like database credentials) can be injected during deployment.

- Consistent runtime behavior across stages: This consistency drastically reduces environment-related bugs and accelerates the debugging process.

- Portable deployments across infrastructure: Containers can run on various platforms (physical servers, virtual machines, cloud providers), enabling flexibility and multi-cloud deployments.
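On the application side, configuration injection usually means reading settings from the environment at startup instead of baking them into the image. The sketch below assumes three hypothetical variables (`DATABASE_URL`, `LOG_LEVEL`, `WORKER_COUNT`) and fails fast when a required one is missing, so a misconfigured container crashes at startup rather than misbehaving later. Accepting the mapping as a parameter keeps the loader easy to test.

```python
"""Load environment-specific settings at startup (variable names are hypothetical)."""
import os
from collections.abc import Mapping
from dataclasses import dataclass

REQUIRED = ("DATABASE_URL",)


@dataclass(frozen=True)
class Settings:
    database_url: str
    log_level: str
    worker_count: int


def load_settings(environ: Mapping[str, str] = os.environ) -> Settings:
    """Build Settings from environment variables, failing fast if required ones are missing."""
    missing = [key for key in REQUIRED if not environ.get(key)]
    if missing:
        raise RuntimeError("missing required environment variables: " + ", ".join(missing))
    return Settings(
        database_url=environ["DATABASE_URL"],
        log_level=environ.get("LOG_LEVEL", "info"),  # optional, defaults to "info"
        worker_count=int(environ.get("WORKER_COUNT", "2")),  # optional, defaults to 2
    )
```

In Kubernetes, these variables could come from plain `env` entries, a ConfigMap, or a Secret (the natural home for `DATABASE_URL` if it embeds credentials), while the image itself stays identical across environments.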

Pros:

- Eliminates environment inconsistencies and the associated bugs, saving valuable development time.

- Speeds up onboarding by providing standardized development environments for new team members.

- Simplifies scaling in production by allowing for easy replication of container instances.

- Enables microservices architecture by providing isolated runtime environments for individual services.

- Facilitates multi-cloud deployments, reducing vendor lock-in.

Cons:

- There's a learning curve associated with container orchestration tools like Kubernetes.

- Managing stateful applications within containers requires careful consideration and planning.

- Container security requires specific expertise to address vulnerabilities and secure the container lifecycle.

- Containerized applications can sometimes consume more resources compared to bare metal deployments, especially if not optimized correctly.

Examples of Successful Implementation:

- Spotify: Leverages containerization extensively for its microservices architecture, enabling independent scaling and deployment of individual services.

- PayPal: Implemented Docker to achieve environment consistency and streamline their CI/CD pipeline.

- Google: Runs virtually all of its services in containers, orchestrated internally by Borg, the cluster manager that inspired Kubernetes.

Actionable Tips:

- Use multi-stage builds: Create smaller, more efficient production images by separating build dependencies from runtime dependencies.

- Implement proper container security scanning: Integrate security scanning into your CI/CD pipeline to identify and address vulnerabilities early.

- Create developer-friendly local container environments: Tools like Docker Desktop and Minikube simplify local development with containers.

- Standardize base images across the organization: This ensures consistency and reduces potential security risks.

- Use declarative Kubernetes manifests for deployment: Automate deployments and ensure repeatability.

- Separate configuration from container images: Use environment variables or ConfigMaps to inject environment-specific settings, and Secrets for sensitive values such as credentials.

- Implement proper container health checks: Expose liveness and readiness checks so the orchestrator can detect failing containers and restart them automatically.
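The health-check tip depends on the application exposing something for the orchestrator to probe. Below is a minimal sketch using only Python's standard library: an HTTP endpoint at `/healthz` that returns 200 when a hypothetical `is_healthy()` check passes and 503 otherwise. The path, port 8080, and the check itself are assumptions; a real service would typically verify its own critical dependencies there.

```python
"""Minimal /healthz endpoint for container health probes (standard library only)."""
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def is_healthy() -> bool:
    """Hypothetical check; a real service might ping its database or cache here."""
    return True


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != "/healthz":
            self.send_error(404)
            return
        ok = is_healthy()
        body = b"ok" if ok else b"unhealthy"
        # Kubernetes HTTP probes treat status codes outside 200-399 as failure.
        self.send_response(200 if ok else 503)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        """Silence per-request logging so frequent probes do not flood the logs."""


def serve(port: int = 8080) -> None:
    """Listen on all interfaces so the orchestrator can reach the container."""
    ThreadingHTTPServer(("0.0.0.0", port), HealthHandler).serve_forever()
```

A Kubernetes `livenessProbe` or `readinessProbe` using `httpGet` with path `/healthz` and port 8080 would then let the kubelet restart the container, or stop routing traffic to it, when the endpoint fails.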

Why Containerization Deserves a Place in CI/CD Best Practices:

Containerization is a cornerstone of modern CI/CD pipelines. By providing environment parity, it significantly increases the reliability and predictability of software deployments, leading to faster release cycles, reduced downtime, and improved overall software quality. It empowers teams to embrace modern architectural patterns like microservices and facilitates multi-cloud deployments, offering greater flexibility and scalability. While there are challenges to overcome, the benefits of containerization make it an essential practice for any organization aiming to optimize its software delivery process.

CI/CD Best Practices: 8-Point Comparison

| Practice | 🔄 Complexity | ⚡ Resource Requirements | 📊 Expected Outcomes | ⭐ Ideal Use Cases | 💡 Key Advantages |
|---|---|---|---|---|---|
| Trunk-Based Development | Medium; requires robust automated testing & fast merges | Low; minimal branch overhead with feature flag tooling | High integration, reduced merge conflicts, continuous delivery | Agile teams using CI/CD with rapid delivery cycles | Improved collaboration and faster deployment |
| Automated Testing Strategy | High; multi-layer test design adds setup complexity | High; significant effort for test suite creation & upkeep | Early defect detection and rapid feedback, fewer manual errors | Projects emphasizing quality and frequent releases | Safer refactoring and increased developer confidence |
| Infrastructure as Code (IaC) | Medium-High; new skill set and disciplined practices needed | Moderate; upfront investment in tooling and configuration | Consistent, repeatable infrastructure deployments with audit trails | Cloud environments and compliance-driven organizations | Eliminates drift and provides self-documenting setups |
| Deployment Automation with Immutable Infrastructure | High; full automation and environment rebuild required | High; increased resource consumption and initial automation costs | Reliable, rollback-friendly deployments with zero drift | Systems needing high availability and frequent updates | Uniform environments and simplified rollback processes |
| Comprehensive Monitoring and Observability | Medium; integrating diverse monitoring tools can be complex | High; resource-intensive data collection & alert systems | Faster issue detection, improved MTTR, data-driven decisions | Large-scale, distributed systems and production environments | Deep system insights and operational confidence |
| Pipeline as Code | Medium; requires learning pipeline DSLs and modular design | Low-Moderate; easier maintenance once established | Version-controlled, consistent, and testable pipelines | Organizations adopting CI/CD and automated workflows | Simplified reviews and reproducible deployment processes |
| Shift-Left Security (DevSecOps) | High; integrates multiple security tools into development | Moderate-High; continuous scanning demands dedicated resources | Early vulnerability detection and proactive security measures | Regulated industries and security-focused organizations | Builds security awareness with early remediation measures |
| Environment Parity with Containerization | Medium; container orchestration skills required | Moderate; needs container management infrastructure | Consistent, reproducible environments across all stages | Microservices architectures and multi-cloud deployments | Eliminates "works on my machine" issues with scalable setups |

Mastering Modern CI/CD

Implementing a robust CI/CD pipeline is no longer a luxury but a necessity for modern software development teams. From trunk-based development and automated testing to infrastructure as code and DevSecOps, the practices discussed in this article lay the foundation for a streamlined, efficient, and reliable release process. The key takeaway is that by focusing on automation, consistency, and security at every stage of your pipeline, you empower your team to deliver high-quality software faster and more frequently. This translates to quicker feedback loops, reduced time to market, and ultimately, greater customer satisfaction.

Optimizing your CI/CD implementation requires careful planning and execution. For a deeper dive, explore this comprehensive guide on CI/CD pipeline best practices from Pull Checklist, which covers everything from development excellence to pipeline optimization. Integrating principles such as immutable infrastructure, comprehensive monitoring, and environment parity through containerization minimizes deployment risks and keeps performance consistent across environments. Embracing pipeline as code, meanwhile, brings version control and automation to the CI/CD process itself, making it more robust and maintainable.

Mastering these CI/CD best practices empowers your organization to respond rapidly to changing market demands, iterate quickly on new features, and stay ahead of the competition. The journey towards CI/CD maturity is an ongoing process, but the benefits are undeniable.

Ready to streamline your pull requests and automate your merging workflow to further enhance your CI/CD practices? Explore Mergify and discover how it can help you efficiently manage and automate your merge processes, freeing up your team to focus on building exceptional software.