Automated Testing Best Practices: A Strategic Guide for Modern Development Teams

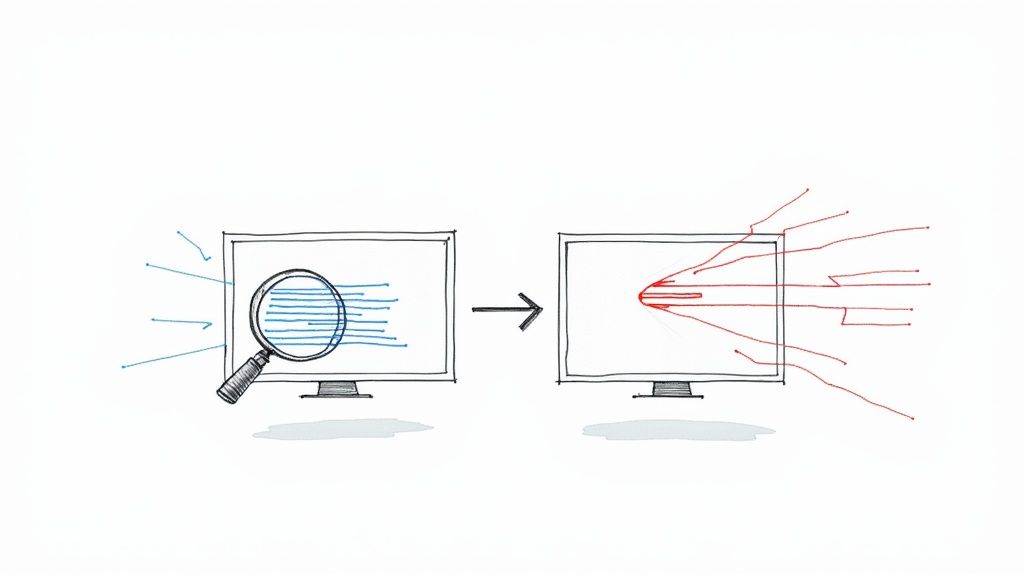

Understanding the True Value of Test Automation

Top development teams are taking a smarter approach to testing by carefully blending automated testing with manual methods. This balanced approach helps teams ship more reliable software faster while maintaining high quality standards. The key is moving beyond simplistic automation and building testing practices that deliver real, measurable improvements.

Moving Beyond Basic Metrics

Smart teams know that success isn't just about running more automated tests. It's about fostering a culture of quality throughout development. When teams automate repetitive tasks like regression testing, their QA engineers can focus on detailed exploratory testing and finding edge cases that automated tests might miss. This leads to better overall testing coverage and a superior end product.

Measuring the ROI of Automation

To calculate automation ROI, compare the cost of manual testing against the cost of setting up and maintaining automation. The case becomes clearer once you factor in bug prevention: fixing an issue in production is commonly estimated to cost around ten times more than catching it during development. Adoption reflects this. By 2020, 44% of IT teams had automated at least 50% of their testing work, and in 46% of cases automated tests directly replaced manual testing efforts. For more details, check out these test automation statistics and trends.
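
As a rough illustration of the comparison described above, here is a minimal sketch in Python. The function name, the parameters, and all figures are hypothetical, and the model assumes the cost of an automated run is negligible, which will not hold for every team.

```python
def automation_roi(manual_cost_per_run, runs_per_year, setup_cost,
                   maintenance_cost_per_year, years=1):
    """Return ROI as a fraction: (savings - investment) / investment.

    Savings are the manual runs you no longer pay for; investment is the
    one-off setup cost plus yearly maintenance.
    """
    savings = manual_cost_per_run * runs_per_year * years
    investment = setup_cost + maintenance_cost_per_year * years
    return (savings - investment) / investment


# Hypothetical figures: a manual regression pass costing 400, run 50 times a year.
roi_year_one = automation_roi(400, 50, setup_cost=15_000,
                              maintenance_cost_per_year=5_000)
roi_year_three = automation_roi(400, 50, setup_cost=15_000,
                                maintenance_cost_per_year=5_000, years=3)

print(f"Year 1 ROI: {roi_year_one:.0%}, year 3 ROI: {roi_year_three:.0%}")
```

With these made-up numbers the automation only breaks even in the first year and pays off later, which is why the time horizon matters as much as the setup cost.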

Overcoming Resistance to Automation

Some team members may worry about automation replacing their jobs or feel overwhelmed by new tools. The best way forward is open communication about how automation helps the whole team. QA engineers can focus on complex testing scenarios while automation handles repetitive tasks. This creates a more engaging work environment where everyone contributes their unique skills.

Real-World Examples

Many companies have seen concrete benefits from smart test automation. For example, development teams often report much shorter release cycles after implementing automated testing, in some cases cutting them in half. This means they can ship updates more often and respond faster to customer needs, giving them an edge over competitors who are still doing everything manually.

By taking a thoughtful approach to automation, focusing on quality over quantity, and bringing the whole team along, development teams can get the most value from automated testing. The goal isn't just faster testing - it's building better software through a combination of automated efficiency and human expertise. This balanced perspective helps teams succeed with test automation in the long run.

Building Your Test Automation Strategy

A successful test automation program needs more than just good tools - it requires careful planning and strategic thinking. When you take time to develop a clear testing strategy upfront, you set your team up to catch bugs early and ship quality code faster. The most effective engineering teams make test automation a core part of their development process.

Evaluating and Selecting the Right Tools

Finding tools that match your team's needs is essential. Consider key factors like:

- Programming language support and existing tooling compatibility

- Your team's technical skills and experience

- Types of testing required (UI, API, mobile, etc.)

For instance, if your developers work primarily in Java, you'll want tools with strong Java support, such as JUnit. Take time to thoroughly evaluate options against your specific requirements.

Prioritizing Test Automation Efforts

Start by identifying which tests will give you the biggest impact. Focus first on automating:

- Regression tests to catch breaking changes

- Smoke tests for core functionality

- Critical user paths and features

This targeted approach helps you catch important issues quickly while using manual testing for more nuanced scenarios. Begin with high-value tests and expand from there.

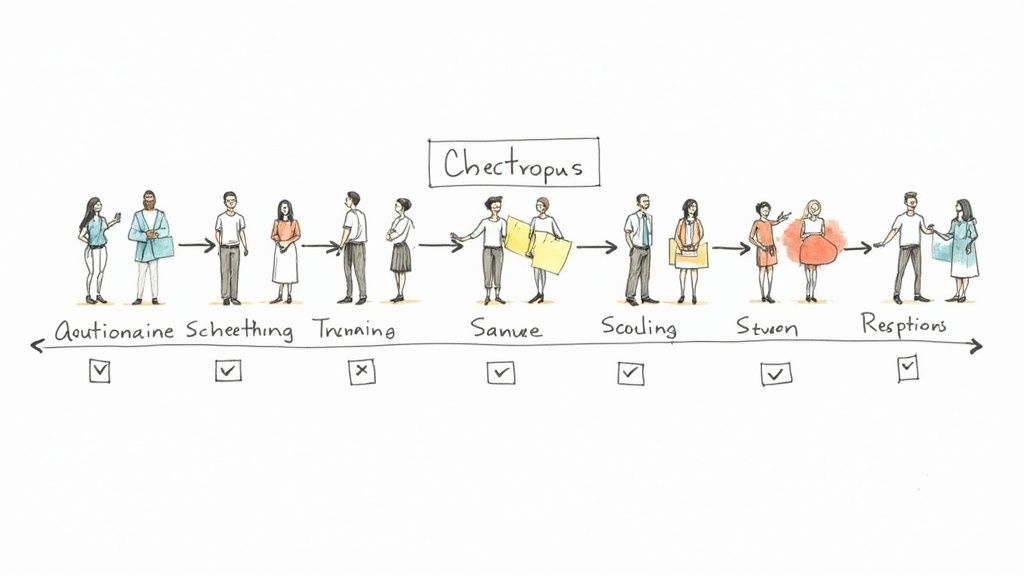

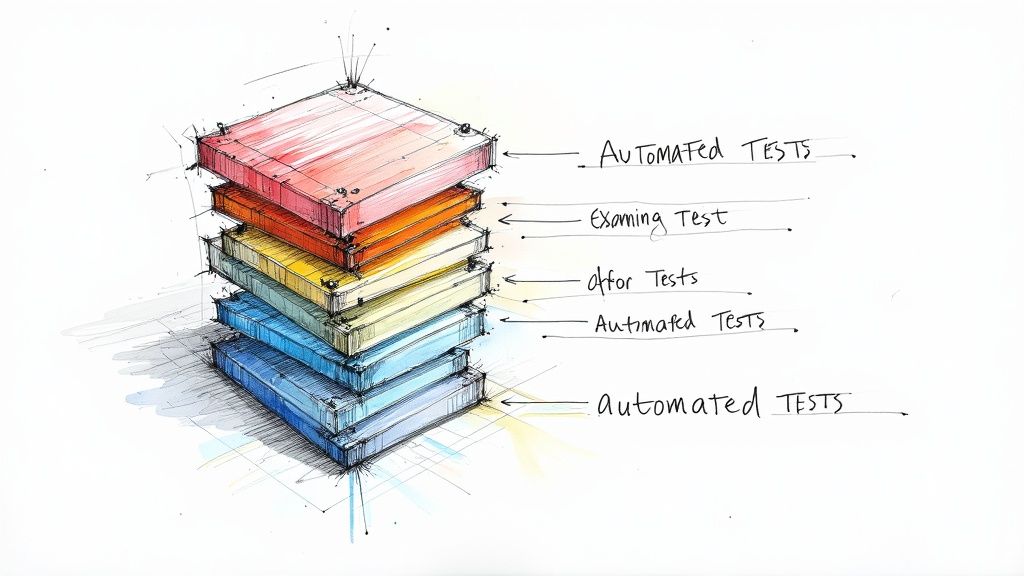

The Test Automation Pyramid

Think of your test suite like a pyramid. At the base, you have many fast, simple unit tests. The middle contains fewer integration tests that check how components work together. At the top sits a small number of complete end-to-end tests. This structure keeps your test suite efficient and maintainable.

| Test Type | Quantity | Speed | Cost |
|---|---|---|---|
| Unit Tests | High | Fast | Low |
| Integration Tests | Medium | Medium | Medium |
| E2E Tests | Low | Slow | High |

Test Environment Management and Continuous Integration

Reliable test environments are crucial for consistent results. Use containers and infrastructure-as-code to create stable, repeatable test setups. Connect your automated tests to your CI/CD pipeline to get quick feedback on code changes. Mergify's merge queue and protection features help manage this process while keeping your code stable and CI costs down. When you put all these pieces together, you build a testing strategy that improves quality and speeds up development.

Measuring What Matters in Test Automation

When it comes to automated testing, measuring the right things makes all the difference. Rather than chasing vanity metrics, successful teams focus on meaningful indicators that show real impact. Key performance indicators like bug detection rates, test coverage, execution speed, and maintenance needs give teams clear insights into how well their automation efforts are working. Teams that emphasize strong test automation often catch more bugs before release and ship updates faster, leading to better software quality overall. Learn more about test automation success metrics here.

Identifying Key Performance Indicators (KPIs)

The most valuable KPIs for automated testing include:

- Defect Detection Efficiency: How many bugs your tests catch before release

- Test Coverage: What percentage of your code is tested

- Test Execution Time: How quickly you get feedback from test runs

- Maintenance Costs: What it takes to keep your test suite running smoothly

These metrics help teams understand not just how they're doing now, but where they need to focus their automation efforts going forward.
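
To make the first two KPIs concrete, here is a small Python sketch. The `ReleaseStats` fields and the sample numbers are assumptions for illustration. In practice the inputs would come from your bug tracker and coverage tool.

```python
from dataclasses import dataclass


@dataclass
class ReleaseStats:
    """Hypothetical per-release figures gathered from your own tooling."""
    bugs_caught_before_release: int
    bugs_found_after_release: int
    lines_covered: int
    lines_total: int


def defect_detection_efficiency(stats):
    """Share of all known bugs that were caught before release."""
    total = stats.bugs_caught_before_release + stats.bugs_found_after_release
    return stats.bugs_caught_before_release / total if total else 0.0


def line_coverage(stats):
    """Share of lines exercised by the test suite."""
    return stats.lines_covered / stats.lines_total if stats.lines_total else 0.0


release = ReleaseStats(bugs_caught_before_release=45,
                       bugs_found_after_release=5,
                       lines_covered=8_000,
                       lines_total=10_000)

print(f"DDE: {defect_detection_efficiency(release):.0%}")
print(f"Coverage: {line_coverage(release):.0%}")
```

Tracking these per release, rather than as a single snapshot, shows whether the automation effort is moving in the right direction.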

Practical Approaches to ROI and Reliability

To measure the Return on Investment (ROI) of test automation, compare what you spend on manual testing versus automation setup and maintenance. Remember that fixing bugs after release costs much more than catching them during development. Having clear ROI data helps teams make better decisions about where to invest in more automation.

Test reliability needs constant attention to ensure automated tests give accurate results consistently. Tools like Mergify help teams manage this through CI/CD features that support ongoing improvements and keep testing aligned with business goals.

| KPI | Explanation |
|---|---|
| Defect Detection | Measures tests' ability to catch bugs |
| Test Coverage | Examines breadth of code tested |
| Test Execution Time | Assesses speed of obtaining test feedback |
| Maintenance Costs | Evaluates expense of maintaining tests |

Success in test automation comes from constantly improving based on these metrics. By staying focused on business goals and measuring what truly matters, teams can build testing processes that deliver real value.

Mastering Test Design Patterns for Long-Term Success

Creating reliable automated tests requires thoughtful design patterns, not just tools and metrics. A well-structured test suite forms the foundation for sustainable testing efforts. By implementing proven test design patterns, teams can write maintainable code that's easy to reuse and update over time.

Handling Test Data Effectively

Good test data management makes or breaks automated testing success. Here are key practices to implement:

- Data-Driven Testing: Keep your test data separate from test logic. This lets you run identical tests with different data inputs to expand coverage without duplicating code. Tools like JUnit's parameterized tests make this straightforward.

- Test Data Factories: Build factory methods that generate test data on demand. With consistent data creation, test setup becomes simpler and more predictable.

- Cleanup and Reset: Add code to reset data between test runs. This prevents tests from interfering with each other and ensures each test starts fresh.
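
The three practices above can be sketched together with Python's standard `unittest` module, used here in place of JUnit to keep the example self-contained. The `make_user` factory and the `is_adult` function under test are hypothetical.

```python
import itertools
import unittest

_ids = itertools.count(1)


def make_user(**overrides):
    """Test data factory: valid defaults that each test can override."""
    n = next(_ids)
    user = {"id": n, "email": f"user{n}@example.com", "age": 30}
    user.update(overrides)
    return user


def is_adult(user):
    """Hypothetical function under test."""
    return user["age"] >= 18


# Test data lives apart from test logic: (age, expected result).
AGE_CASES = [(17, False), (18, True), (65, True)]


class IsAdultTest(unittest.TestCase):
    def setUp(self):
        # Reset shared state so every test starts from a clean slate.
        self.users = []

    def test_age_boundaries(self):
        for age, expected in AGE_CASES:
            # subTest reports each data row separately if it fails.
            with self.subTest(age=age):
                user = make_user(age=age)
                self.users.append(user)
                self.assertEqual(is_adult(user), expected)
```

Adding a new scenario then means adding a row to `AGE_CASES` rather than writing another test method. Run it with `python -m unittest`.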

Implementing Robust Error Handling

Your tests need to handle unexpected issues gracefully. Focus on these error handling essentials:

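- Try-Catch Blocks: Wrap risky test steps in try-catch blocks when you need to add context or clean up resources, then rethrow the exception or fail the test explicitly. Swallowing exceptions hides real failures. The aim is a failure message that explains what went wrong.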

- Clear Assertions: Write specific assertions that check exact expected outcomes. When tests fail, you'll know precisely where to look for issues.

- Detailed Logging: Add good logging throughout your tests. Having a clear record of what happened during test runs makes debugging much easier.

Take a login form test as an example - it should handle bad passwords, network timeouts, and server errors smoothly while providing helpful error messages in each case.
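
A minimal sketch of such a login test in Python's `unittest` might look like the following. `FakeLoginClient`, its credentials, and its response format are invented stand-ins for a real system under test.

```python
import logging
import unittest

logger = logging.getLogger("login_tests")


class FakeLoginClient:
    """Hypothetical stand-in for the real login API."""

    def login(self, username, password):
        if username == "slow":
            raise TimeoutError("no response within 5s")
        if password != "correct-horse":
            return {"status": 401, "error": "Invalid credentials"}
        return {"status": 200, "error": None}


class LoginTest(unittest.TestCase):
    def setUp(self):
        self.client = FakeLoginClient()

    def test_bad_password_is_rejected(self):
        logger.info("Logging in as alice with a wrong password")
        response = self.client.login("alice", "wrong")
        # Specific assertions with messages point straight at the problem.
        self.assertEqual(response["status"], 401,
                         "a wrong password should be rejected")
        self.assertEqual(response["error"], "Invalid credentials")

    def test_timeout_is_surfaced(self):
        logger.info("Logging in against a slow backend")
        # Expect the exception explicitly instead of swallowing it.
        with self.assertRaises(TimeoutError) as ctx:
            self.client.login("slow", "correct-horse")
        self.assertIn("5s", str(ctx.exception))

    def test_valid_login_succeeds(self):
        logger.info("Logging in as alice with the right password")
        response = self.client.login("alice", "correct-horse")
        self.assertEqual(response["status"], 200)
        self.assertIsNone(response["error"])
```

Each failure mode gets its own test, so a red result names the scenario that broke.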

Organizing Test Suites for Optimal Performance

A clean, organized test suite saves time and headaches. Structure your tests with these principles:

- Modular Design: Split large tests into focused, independent pieces. This makes your code more reusable and helps isolate problems. It also enables running tests in parallel.

- Clear Test Names: Give tests descriptive names that explain what they check. This helps the whole team understand and maintain the test suite.

- Efficient Setup/Teardown: Use setup and teardown methods to handle test resources properly. This keeps your test environment consistent. Mergify can help by automating CI/CD workflows to keep test environments stable.

Following these patterns leads to more reliable, maintainable automated tests. As your codebase grows, good test design becomes crucial for managing complexity. This organized approach reduces test flakiness and makes test dependencies easier to handle, creating a test framework that scales with your application.

Scaling Your Automation Practice Without Breaking Things

Growing your automated testing requires careful planning. Many teams struggle with scaling while maintaining stable test results. Here's a practical guide to scale effectively without compromising quality or speed.

Leveraging Cloud-Based Testing Infrastructure

Running tests in the cloud brings major benefits compared to managing physical servers. Services like AWS Device Farm and BrowserStack let you instantly scale test environments up or down. This gives teams:

- Lower costs through pay-as-you-go pricing

- Quick setup of test environments in minutes

- Easy adjustment of testing resources based on needs

Efficient Test Data Management at Scale

As test suites grow, proper test data management becomes essential. Poor data practices lead to flaky tests and slower execution. Here are proven approaches that work:

- Create data-driven test frameworks to keep test data separate from test logic

- Build test data generators for consistent, reusable data sets

- Use data masking and anonymization to protect sensitive information
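
As one possible shape for the masking step, the sketch below replaces emails with a salted hash so the same input always maps to the same masked value. The function names, the record layout, and the salt are assumptions. A real salt should be kept secret and out of source control.

```python
import hashlib


def mask_email(email, salt="test-env-salt"):
    """Replace an email with a stable pseudonym on a reserved domain."""
    normalized = email.strip().lower()
    digest = hashlib.sha256((salt + normalized).encode("utf-8")).hexdigest()[:12]
    return f"user_{digest}@example.com"


def mask_customer(record):
    """Return a copy of the record with sensitive fields masked."""
    masked = dict(record)
    masked["email"] = mask_email(record["email"])
    masked["name"] = "REDACTED"
    return masked


original = {"name": "Ada Lovelace", "email": "Ada@Example.org", "plan": "pro"}
masked = mask_customer(original)
print(masked["name"], masked["plan"])
```

Because the mapping is deterministic, records that shared an email before masking still share one afterwards, so joins across tables keep working in test environments.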

Building a Robust CI/CD Pipeline

Your CI/CD pipeline needs to handle increased automation load smoothly. Tools like Mergify help by providing merge queues and protection rules. Key pipeline strategies include:

- Run the fastest tests first to get quick feedback

- Split test execution across multiple machines

- Set up automatic retries for occasional test failures, and record which tests needed a retry so the underlying flakiness gets fixed rather than hidden
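
These strategies can be sketched in a few lines of Python. The helper names and the timing data are hypothetical, and real CI systems usually offer their own sharding and retry settings, so treat this as an illustration of the idea only.

```python
import heapq


def plan_shards(durations, shard_count):
    """Balance tests across shards, then order each shard fastest-first.

    durations maps test name -> expected seconds (hypothetical data,
    e.g. taken from previous runs).
    """
    heap = [(0.0, index) for index in range(shard_count)]
    shards = [[] for _ in range(shard_count)]
    # Greedy: hand the longest remaining test to the least-loaded shard.
    for name, seconds in sorted(durations.items(), key=lambda kv: (-kv[1], kv[0])):
        load, index = heapq.heappop(heap)
        shards[index].append(name)
        heapq.heappush(heap, (load + seconds, index))
    return [sorted(shard, key=lambda n: (durations[n], n)) for shard in shards]


def run_with_retries(test_fn, max_attempts=3):
    """Return (passed, attempts) so retried passes can be flagged as flaky."""
    for attempt in range(1, max_attempts + 1):
        try:
            test_fn()
            return True, attempt
        except AssertionError:
            continue
    return False, max_attempts


durations = {"test_unit_a": 1, "test_unit_b": 2, "test_api": 10, "test_e2e": 30}
plan = plan_shards(durations, shard_count=2)
print(plan)
```

Returning the attempt count is a deliberate choice: a test that passes on the second try is a signal worth reporting, not just a green check.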

Optimizing Execution Times Across Large Test Suites

Long test runs can slow down development. Here's how to keep execution times manageable:

- Use test impact analysis to only run tests affected by code changes

- Set up parallel test execution across multiple nodes

- Remove or update slow, unreliable tests regularly
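
Test impact analysis, the first item above, can be approximated with a mapping from each test to the source files it exercises. The sketch below assumes such a mapping already exists (for example, derived from coverage data). The file and test names are made up.

```python
def select_tests(changed_files, coverage_map):
    """Pick the tests affected by a change.

    coverage_map maps test name -> set of source files the test exercises.
    If a changed file is unknown to the map, fall back to the full suite,
    since the map cannot prove any test is unaffected.
    """
    changed = set(changed_files)
    known = set().union(*coverage_map.values()) if coverage_map else set()
    if changed - known:
        return sorted(coverage_map)
    return sorted(test for test, files in coverage_map.items() if files & changed)


coverage_map = {
    "test_cart": {"cart.py", "pricing.py"},
    "test_login": {"auth.py"},
    "test_checkout": {"cart.py", "payment.py"},
}

print(select_tests(["cart.py"], coverage_map))
```

The conservative fallback trades some speed for safety. A stale mapping should cost you a longer run, not a missed regression.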

By following these guidelines, teams can scale their automation practice effectively. The key is making incremental improvements while maintaining reliability. Focus on practical solutions that work for your specific needs rather than trying to do everything at once.

Navigating Common Automation Pitfalls and Challenges

Every team working on test automation faces obstacles along the way. These challenges can slow progress and affect the return on your automation investment. Let's look at the most common issues teams encounter and practical ways to overcome them.

The Perils of Improper Tool Selection

Many teams make the critical mistake of choosing automation tools without proper evaluation. Selecting tools based on popularity or current trends often leads to major problems - from compatibility issues with existing systems to steep learning curves that delay projects. When evaluating tools, focus on:

- Your team's current technical skills

- The specific types of testing needed (UI, API, mobile)

- Integration with your existing tech stack

For example, Mergify works seamlessly with common CI/CD pipelines, making it easier to manage automated workflows.

The Trap of Brittle and Unreliable Tests

Flaky tests are a major headache for automation teams. These tests pass and fail seemingly at random without any code changes, so engineers waste time investigating false alarms and gradually stop trusting test results. The main causes are usually poor test data management and timing problems.

To fix this:

- Create robust test data management processes

- Design clear test setup and cleanup procedures

- Use tools like Mergify to better manage merge queues and prevent integration issues
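
Before fixing flaky tests you have to find them. One simple heuristic, sketched below, flags any test that both passed and failed on the same commit. The run-history format is an assumption. Adapt it to whatever your CI system exports.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return tests with mixed results on a single commit.

    runs is an iterable of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for name, sha, passed in runs:
        outcomes[(name, sha)].add(passed)
    return sorted({name for (name, _sha), seen in outcomes.items()
                   if seen == {True, False}})


history = [
    ("test_a", "abc", True),
    ("test_a", "abc", False),   # same commit, different result: flaky
    ("test_b", "abc", False),
    ("test_b", "def", True),    # different commits: likely a real fix
    ("test_c", "abc", True),
]

print(find_flaky_tests(history))
```

Comparing results per commit matters because a test that fails on one commit and passes on the next may simply reflect a genuine bug fix.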

Maintaining Stability Through Change

As applications grow and change, automated tests can break if they're too tightly linked to implementation details. When tests are closely coupled to the code, small changes require constant test updates. This increases maintenance work and slows down development.

The solution is to decouple tests from implementation details:

- Build modular, reusable test components

- Create abstraction layers to shield tests from code changes

- Design tests focused on behavior rather than implementation
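
A common way to build such an abstraction layer for UI tests is the page object pattern. The sketch below uses a hypothetical `FakeBrowser` in place of a real browser driver, and the selectors are invented.

```python
class FakeBrowser:
    """Hypothetical driver stand-in that records what the test did."""

    def __init__(self):
        self.fields = {}
        self.clicked = []

    def fill(self, selector, value):
        self.fields[selector] = value

    def click(self, selector):
        self.clicked.append(selector)


class LoginPage:
    """Page object: the only place that knows the page's selectors."""

    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "button[type=submit]"

    def __init__(self, browser):
        self.browser = browser

    def log_in(self, username, password):
        self.browser.fill(self.USERNAME, username)
        self.browser.fill(self.PASSWORD, password)
        self.browser.click(self.SUBMIT)


# A test describes behavior ("log in") and never touches selectors directly.
browser = FakeBrowser()
LoginPage(browser).log_in("alice", "correct-horse")
print(browser.clicked)
```

If the markup changes, only the constants in `LoginPage` need updating. Every test that calls `log_in` stays the same.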

Strategies for Long-Term Success

Teams often focus on short-term needs while overlooking long-term maintainability. This creates technical debt that makes it harder to adapt tests as projects evolve. To build sustainable test automation:

- Set clear coding standards for test code

- Use version control for all test assets

- Document framework design and best practices

- Plan for increasing test volume over time

- Consider future application architecture changes

By addressing these challenges early, you can build reliable, maintainable automated testing. The key is choosing the right tools, designing stable tests, and planning for long-term success.

Want to improve your testing workflow? See how Mergify can help streamline your development process.