Automated Testing Best Practices: Your Guide to Transforming Dev Team Efficiency

Understanding the Real Impact of Test Automation

More companies are embracing automated testing as a core part of their software development process. Recent data shows that 44% of organizations are working to automate at least half of their testing work. This shift comes from real business needs - teams need to release software faster while maintaining high quality. But simply automating everything isn't the answer. The key is finding smart ways to combine automated and manual testing approaches.

Balancing Automated and Manual Testing

Getting the mix right between automated and manual testing is essential. Automated tests work great for repetitive tasks like checking if basic features still work after code changes. But manual testing brings human insight that's crucial for exploring new features, checking usability, and finding unexpected issues. The goal isn't to replace manual testers - it's to free them from tedious work so they can focus on more valuable testing that requires creativity and judgment. For example, when routine checks are automated, testers can spend more time deeply exploring new features to ensure they work well for users.

Measuring Automation Progress and Setting Realistic Goals

Success with test automation requires clear goals and ways to measure progress. While it might seem ideal to automate everything, that's rarely practical or worth the cost. Instead, focus first on automating the tests that give you the biggest benefits - like checks for critical features, commonly used functions, and areas where manual testing is prone to mistakes. Keep track of key numbers like how long tests take to run, how much of your code is tested, and how many bugs you're finding. This helps you improve your approach over time and make sure it fits your development needs.

Overcoming Common Pitfalls

Test automation brings clear benefits, but teams need to watch out for common problems. One big challenge is picking the right tools. There are many options available, so you need to choose ones that match your team's skills and project needs. Another issue is keeping automated tests working as your software changes. Updates to the interface or code can break tests, creating extra maintenance work. Using good design patterns like the Page Object Model and choosing reliable ways to identify screen elements helps build tests that last. When teams plan for these challenges from the start, they can avoid frustration and get more value from their automation work. By understanding what test automation can and can't do, using it strategically alongside manual testing, and following good practices, teams can deliver better software more quickly.

Crafting Your Test Automation Game Plan

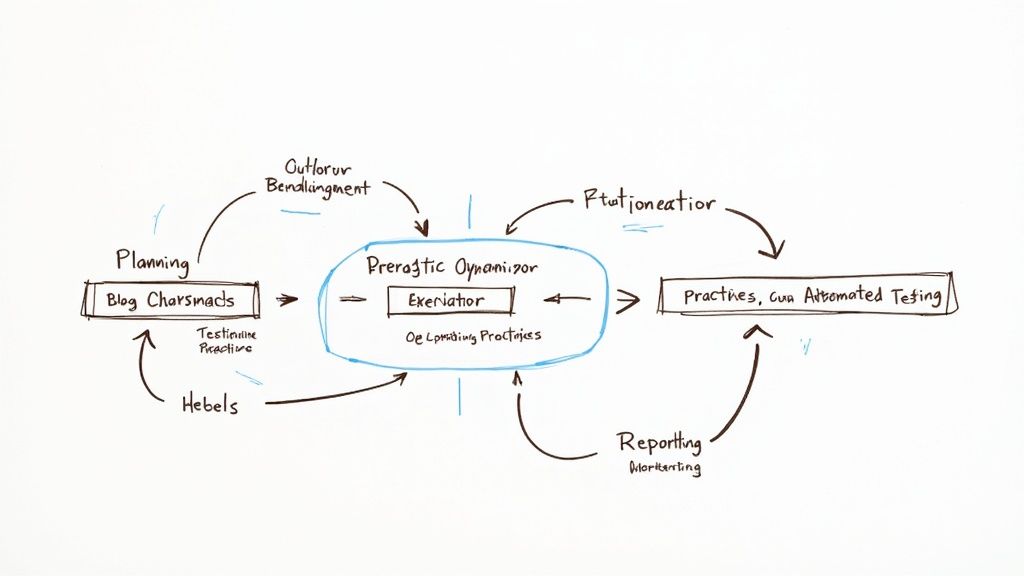

Effective test automation requires a thoughtful, practical approach rather than blindly automating everything. A solid test automation strategy focuses on maximizing value while keeping maintenance manageable. This means making smart choices about what to automate and building reliable test frameworks that deliver consistent results over time.

Identifying Key Tests for Automation

When developing your automation strategy, the first step is identifying which tests will give you the best return on your investment. While it may be tempting to automate every test case, that's rarely the best use of resources. Instead, focus on tests that clearly support your business goals and development needs.

- High-Frequency Tests: Tests that run repeatedly, like regression suites, make excellent automation candidates. This frees up your manual testers to focus on exploratory testing and deeper usability analysis.

- Error-Prone Tests: Complex or repetitive test scenarios where humans are likely to make mistakes are perfect for automation. Converting these to automated tests improves accuracy and reliability.

- Business-Critical Tests: Prioritize automating core features that directly impact your users and bottom line. For example, if you run an online store, thoroughly automated testing of the checkout flow is essential.

- Data-Driven Tests: Tests that need to run against multiple data sets work well with automation. Scripts can efficiently repeat the same test with different inputs for better coverage.

- Cross-Platform Tests: Applications that run on multiple browsers or devices benefit from automated testing to ensure consistent behavior everywhere. This is particularly important as users access software through an expanding range of platforms.
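To make these criteria easier to apply to a real backlog, here is a minimal Python sketch that scores candidate tests. The `Candidate` fields, the weights, and the cap on run frequency are all hypothetical choices for illustration. Tune them to your own priorities rather than treating them as a standard formula.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A manual test being considered for automation (fields are illustrative)."""
    name: str
    runs_per_week: int       # high-frequency tests, e.g. regression checks
    error_prone: bool        # tedious or mistake-prone when run by hand
    business_critical: bool  # core flows such as checkout
    data_sets: int = 1       # number of input variations to cover
    platforms: int = 1       # browsers/devices the test must cover


def automation_score(c: Candidate) -> float:
    """Hypothetical weighted score; higher means a better automation candidate."""
    score = min(c.runs_per_week, 50) / 10  # cap frequency so it cannot dominate
    score += 3.0 if c.error_prone else 0.0
    score += 5.0 if c.business_critical else 0.0
    score += 0.5 * (c.data_sets - 1)       # each extra data set adds some value
    score += 1.0 * (c.platforms - 1)       # each extra platform adds some value
    return score


def rank(candidates: list[Candidate]) -> list[Candidate]:
    """Order candidates from most to least worth automating."""
    return sorted(candidates, key=automation_score, reverse=True)


if __name__ == "__main__":
    backlog = [
        Candidate("about page smoke check", runs_per_week=2,
                  error_prone=False, business_critical=False),
        Candidate("checkout flow", runs_per_week=20, error_prone=True,
                  business_critical=True, data_sets=3, platforms=4),
        Candidate("login", runs_per_week=50, error_prone=False,
                  business_critical=True, platforms=3),
    ]
    for c in rank(backlog):
        print(f"{automation_score(c):5.1f}  {c.name}")
```

The exact numbers matter less than making the trade-offs explicit, so the team can debate the weights instead of individual test cases.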

Building a Sustainable Automation Framework

After selecting your test candidates, the next phase is creating a sustainable framework. This involves picking appropriate tools and establishing clear processes. With many teams finding tool selection challenging, making informed choices is key. A good framework considers both current needs and room for future growth.

- Tool Selection: The right tools depend on your team's expertise, project requirements, and available budget. Look at factors like ease of use, how well tools integrate with your existing systems, and the size of the user community.

- Design Patterns: Using established patterns like the Page Object Model helps create reusable, maintainable code. This becomes increasingly valuable as your application grows and changes.

- Test Data Management: Create clear processes for handling test data - from creation to storage to retrieval. Good data management leads to more reliable and consistent test results.

- Reporting and Analysis: Set up strong reporting systems to track results, spot patterns, and identify areas needing attention. Clear reporting helps teams understand how well the application is performing.
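As a starting point for reporting, many test runners and CI systems can emit JUnit-style XML. The sketch below uses only Python's standard library to summarize such a report. The sample XML is made up, and real reports may differ in detail (for example, a `<testsuites>` root wrapping several suites, which `iter()` handles).

```python
import xml.etree.ElementTree as ET

# Made-up sample report in the common JUnit XML shape.
SAMPLE_REPORT = """\
<testsuites>
  <testsuite name="checkout">
    <testcase classname="checkout" name="test_pay_by_card" time="4.2"/>
    <testcase classname="checkout" name="test_apply_coupon" time="1.3">
      <failure message="expected 10% discount"/>
    </testcase>
    <testcase classname="checkout" name="test_gift_card" time="0.0">
      <skipped/>
    </testcase>
  </testsuite>
</testsuites>
"""


def summarize(xml_text: str) -> dict:
    """Count outcomes, total runtime, and the slowest test in a JUnit-style report."""
    root = ET.fromstring(xml_text)
    summary = {"passed": 0, "failed": 0, "skipped": 0, "total_time": 0.0, "slowest": None}
    slowest_time = -1.0
    for case in root.iter("testcase"):  # walks nested suites too
        duration = float(case.get("time", "0"))
        summary["total_time"] += duration
        if case.find("failure") is not None or case.find("error") is not None:
            summary["failed"] += 1
        elif case.find("skipped") is not None:
            summary["skipped"] += 1
        else:
            summary["passed"] += 1
        if duration > slowest_time:
            slowest_time = duration
            summary["slowest"] = f'{case.get("classname")}.{case.get("name")}'
    return summary


if __name__ == "__main__":
    print(summarize(SAMPLE_REPORT))
```

Collecting these summaries per run gives you the raw material for spotting trends, such as a suite that gets slower every week.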

By taking a measured approach to automation and following these practical guidelines, you can build a testing system that consistently improves quality and speeds up development. This focused strategy helps ensure your automation work delivers lasting value rather than becoming a maintenance burden.

Maximizing Speed Without Sacrificing Quality

A solid automation framework sets the foundation for fast test execution, but achieving major speed improvements requires mastering key testing practices. Teams that excel at automation typically reduce their test execution time by 66% through careful optimization of test design, execution, and management. This leads to faster feedback for developers, quicker release cycles, and smoother development workflows.

Optimizing Test Design for Speed

Fast test execution starts with smart test design. The key is writing focused tests that check specific functionality while keeping dependencies minimal and following modular design principles. The Page Object Model pattern helps reduce duplicate code and makes tests easier to maintain, which saves time during both test creation and runs. Small, single-purpose tests are also easier to run in parallel and have less impact when they fail.
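A minimal sketch of the Page Object Model and small, single-purpose tests. To keep it runnable without a browser, `FakeDriver` is a hypothetical in-memory stand-in for a real driver such as Selenium WebDriver; its `type_into`/`click` methods and the locator strings are assumptions, not a real library API. The point is that the tests talk only to `LoginPage`, so a locator change is fixed in one place.

```python
class FakeDriver:
    """Hypothetical stand-in for a browser driver (not a real library API)."""

    def __init__(self) -> None:
        self.fields: dict[str, str] = {}
        self.page_text = ""

    def type_into(self, locator: str, text: str) -> None:
        self.fields[locator] = text

    def click(self, locator: str) -> None:
        # Simulates the application: clicking submit checks the credentials.
        if locator == "#login-submit":
            ok = (self.fields.get("#username") == "alice"
                  and self.fields.get("#password") == "s3cret")
            self.page_text = "Welcome, alice" if ok else "Invalid credentials"


class LoginPage:
    """Page object: the only place that knows the login page's locators."""
    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "#login-submit"

    def __init__(self, driver: FakeDriver) -> None:
        self.driver = driver

    def log_in(self, user: str, password: str) -> str:
        self.driver.type_into(self.USERNAME, user)
        self.driver.type_into(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)
        return self.driver.page_text


# Focused tests: each checks one behavior and reads in terms of user intent.
def test_valid_login_shows_welcome() -> None:
    assert LoginPage(FakeDriver()).log_in("alice", "s3cret") == "Welcome, alice"


def test_wrong_password_is_rejected() -> None:
    assert LoginPage(FakeDriver()).log_in("alice", "nope") == "Invalid credentials"


if __name__ == "__main__":
    test_valid_login_shows_welcome()
    test_wrong_password_is_rejected()
    print("ok")
```

Because each test builds its own driver and page object, the tests share no state and can later run in parallel.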

Parallelization Strategies for Faster Execution

Running multiple tests at the same time is one of the best ways to speed up execution, but it requires thoughtful planning. Not all tests can run simultaneously - you need to identify which ones are truly independent and group them into suites that can safely run in parallel across different machines or environments. For example, a set of 10 tests that each take 1 minute would normally need 10 minutes to complete in sequence. Spread across 10 parallel workers, with no shared state between the tests, those same tests could finish in roughly 1 minute plus setup overhead.
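The speed-up from parallelization can be sketched with `concurrent.futures` from Python's standard library. The simulated tests just sleep, so the timings are scaled down from minutes to fractions of a second, and `make_test`/`run_suite` are hypothetical helpers. Real suites usually rely on a runner plugin (for example pytest-xdist) or CI-level sharding rather than hand-rolled threads.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def make_test(name: str, seconds: float):
    """Build a simulated, fully independent test that 'runs' for a fixed time."""
    def run() -> str:
        time.sleep(seconds)  # stand-in for real work such as browser calls
        return f"{name}: passed"
    return run


def run_suite(tests, workers: int) -> tuple[list[str], float]:
    """Run independent tests on a pool of workers; return results and wall time."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(t) for t in tests]
        results = [f.result() for f in futures]
    return results, time.perf_counter() - start


if __name__ == "__main__":
    # 10 tests x 0.1 s each: about 1 s sequentially, about 0.1 s with 10 workers.
    suite = [make_test(f"test_{i}", 0.1) for i in range(10)]
    _, serial = run_suite(suite, workers=1)
    _, parallel = run_suite(suite, workers=10)
    print(f"sequential: {serial:.2f}s, parallel: {parallel:.2f}s")
```

Threads suit I/O-bound work such as driving remote browsers; CPU-heavy tests would need separate processes (for example `ProcessPoolExecutor`) to see the same gain.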

Maintaining Stability at Scale

As test suites grow larger, keeping them stable becomes more challenging. This requires robust error handling, tests that can handle UI updates gracefully, and smart strategies for managing asynchronous operations. Tests that fail randomly without code changes (known as "flaky" tests) can seriously slow down the testing process. Careful debugging and design help minimize flakiness. Good logging and reporting also make it easier to quickly identify and fix issues.
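One common way to spot flaky tests is to look for tests that both passed and failed on the same commit, since nothing in the code changed between those runs. A minimal sketch, assuming your CI history can be exported as `(test name, commit, passed)` records; that record format is hypothetical.

```python
from collections import defaultdict


def find_flaky(runs: list[tuple[str, str, bool]]) -> list[str]:
    """Return tests that have both passed and failed on the same commit.

    Each record is (test_name, commit_sha, passed). Mixed outcomes with no
    code change are the signature of a flaky test.
    """
    outcomes = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    flaky = {test for (test, _), seen in outcomes.items() if seen == {True, False}}
    return sorted(flaky)


if __name__ == "__main__":
    history = [
        ("test_login", "a1b2", True),
        ("test_login", "a1b2", True),
        ("test_search", "a1b2", True),
        ("test_search", "a1b2", False),  # same commit, different outcome
        ("test_cart", "a1b2", False),
        ("test_cart", "c3d4", True),     # outcome changed with the code: not flaky
    ]
    print(find_flaky(history))  # ['test_search']
```

Flagged tests can then be quarantined or prioritized for debugging instead of being silently retried.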

Architectural Decisions for High-Performing Test Suites

The technical foundation of your test framework has a big impact on speed. Choosing appropriate tools and testing platforms is essential - for instance, cloud-based testing services provide the scale needed for extensive parallel testing. Integration with CI/CD pipelines ensures tests run automatically and provide constant feedback during development. Tools like Mergify can streamline code merging, for example with a merge queue that runs your automated tests against the latest target branch before each pull request is merged, maintaining quality without creating bottlenecks. By aligning these architectural choices with testing best practices, teams can significantly improve their testing speed and efficiency.

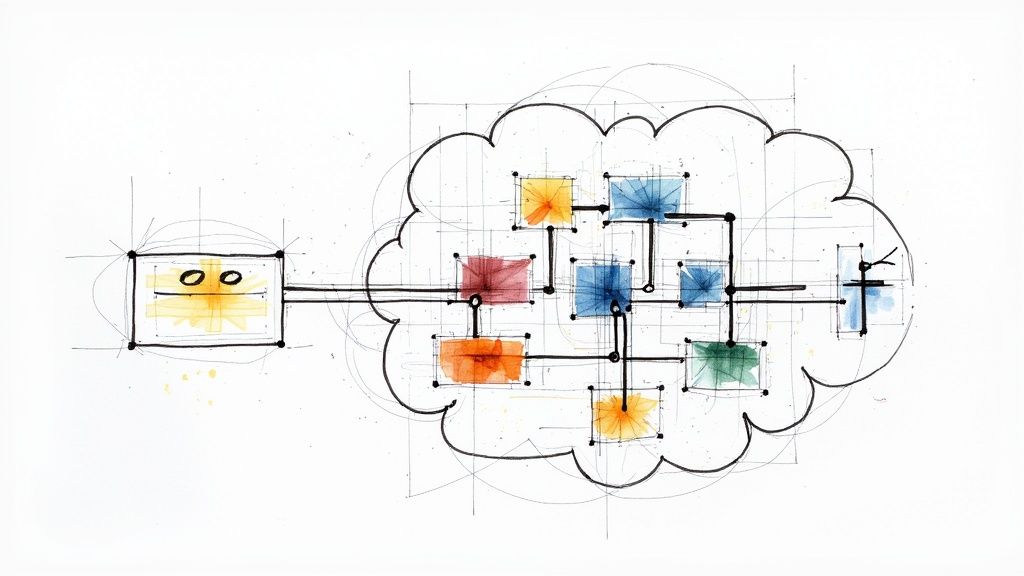

Using AI to Improve Your Automated Testing Process

While having solid test automation and design practices is essential, adding AI capabilities can make your testing even more effective. The goal isn't to replace human testers, but to give them better tools and make testing more efficient. For instance, AI can spot patterns in test data to identify where problems are most likely to occur, helping teams focus their testing efforts in the right places.

Smart Test Selection and Prioritization

Choosing which tests to run becomes challenging as applications grow larger and more complex. AI helps by examining code changes, past test results, and user data to pick the most important tests to run. This means you don't have to run every single test after each code update. Instead, AI helps you focus on the tests that matter most for each change. This smarter approach saves time while maintaining strong test coverage.
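AI-based selection tools use richer models, but the core idea can be illustrated with a simple, non-AI heuristic: run only the tests that exercise the changed files, ordered by recent failure rate. The coverage map and failure rates are hypothetical inputs; in practice they might come from coverage tooling and CI history.

```python
def select_tests(
    changed_files: set[str],
    coverage_map: dict[str, set[str]],
    failure_rate: dict[str, float],
) -> list[str]:
    """Pick tests whose covered files overlap the change, riskiest first.

    coverage_map: test name -> source files it exercises (hypothetical input)
    failure_rate: test name -> fraction of recent runs that failed
    """
    impacted = [t for t, files in coverage_map.items() if files & changed_files]
    # Run historically failure-prone tests first for faster feedback.
    return sorted(impacted, key=lambda t: failure_rate.get(t, 0.0), reverse=True)


if __name__ == "__main__":
    coverage = {
        "test_checkout": {"cart.py", "payment.py"},
        "test_search": {"search.py"},
        "test_coupon": {"cart.py", "pricing.py"},
    }
    rates = {"test_checkout": 0.02, "test_coupon": 0.10, "test_search": 0.05}
    print(select_tests({"cart.py"}, coverage, rates))  # ['test_coupon', 'test_checkout']
```

A static map can miss indirect dependencies, so a setup like this is usually paired with a periodic full-suite run.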

Self-Repairing Test Scripts

As applications evolve, keeping automated tests working properly takes significant effort. AI helps reduce this burden through "self-healing" tests. When an application's interface changes, standard automated tests often break because they can't find the elements they need. AI-powered tools can detect these changes and adapt the test to the new interface, for example by re-identifying an element through its other attributes. This reduces manual maintenance work and makes tests more reliable over time.
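Commercial self-healing tools differ in how they work, but a much simpler, non-AI version of the idea is a locator with ranked fallbacks: try the preferred locator, and if it no longer matches, try alternatives and log which one worked so a person can update the test. `find_element`, the page dictionary, and the locator strings are hypothetical stand-ins for a real driver.

```python
import logging

logger = logging.getLogger("self_healing")


class ElementNotFound(Exception):
    pass


def find_element(page: dict[str, str], locator: str) -> str:
    """Hypothetical driver lookup: `page` maps locators to element text."""
    if locator not in page:
        raise ElementNotFound(locator)
    return page[locator]


def find_with_fallback(page: dict[str, str], locators: list[str]) -> str:
    """Try locators in priority order; warn when a fallback 'heals' the lookup."""
    for i, locator in enumerate(locators):
        try:
            element = find_element(page, locator)
        except ElementNotFound:
            continue
        if i > 0:
            logger.warning("Primary locator %r failed; healed with %r", locators[0], locator)
        return element
    raise ElementNotFound(f"none of {locators} matched")


if __name__ == "__main__":
    logging.basicConfig(level=logging.WARNING)
    # After a redesign the button lost its id but kept its data-testid attribute.
    new_page = {"[data-testid=buy]": "Buy now"}
    locators = ["#buy-button", "[data-testid=buy]", "button.primary"]
    print(find_with_fallback(new_page, locators))  # Buy now (a warning is also logged)
```

Logging every "heal" matters: silently accepting a fallback could hide a real regression, such as the wrong button now matching.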

Advanced Test Failure Detection

AI does more than just pick and maintain tests. By studying patterns in past test failures, AI can predict which tests are likely to fail in upcoming runs. This early warning system lets teams fix potential issues before they impact development work. Catching and fixing bugs earlier reduces costs and saves time. It also helps team morale by preventing the frustration of dealing with the same test failures repeatedly.
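Predictive tools typically train models on far richer signals, but a simple baseline for ranking tests by likelihood of failing is an exponentially weighted failure rate, where recent runs count more than old ones. This is a plain statistical heuristic, not AI, and the `alpha` value of 0.3 is an arbitrary illustrative choice.

```python
def ewma_failure_score(history: list[bool], alpha: float = 0.3) -> float:
    """Exponentially weighted failure rate over a test's run history.

    history: outcomes oldest -> newest, True meaning the run failed.
    Each run moves the score `alpha` of the way toward 1 (fail) or 0 (pass).
    """
    score = 0.0
    for failed in history:
        score = alpha * (1.0 if failed else 0.0) + (1 - alpha) * score
    return score


def rank_by_risk(histories: dict[str, list[bool]], alpha: float = 0.3) -> list[tuple[str, float]]:
    """Return (test, score) pairs, most likely to fail first."""
    scores = {name: ewma_failure_score(h, alpha) for name, h in histories.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    histories = {
        "test_old_failures": [True, True, False, False, False],    # failed long ago
        "test_recent_failure": [False, False, False, False, True],  # failed last run
        "test_always_green": [False] * 5,
    }
    for name, score in rank_by_risk(histories):
        print(f"{score:.3f}  {name}")
```

Even though `test_old_failures` failed twice, `test_recent_failure` ranks higher because its single failure is the most recent run.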

Adding AI to Your Testing Process

Successfully bringing AI into your testing requires careful planning. Start by clearly defining what you want to achieve. Then evaluate different AI testing tools to find ones that work well with your current testing setup. Begin with a small pilot project to see how AI performs before expanding it across all your testing. This measured approach helps teams gain experience with AI testing and demonstrate its benefits before fully integrating it into their regular testing practices.

Mastering Cross-Platform and UI Testing

Cross-platform and UI testing presents unique challenges that require strategic approaches to create reliable, maintainable tests. A well-planned testing strategy helps teams deliver software that works consistently across different platforms and devices. Let's explore key aspects of building effective cross-platform and UI tests.

Selecting the Right Tools for the Job

The foundation of successful testing starts with choosing tools that match your project's needs. Selenium provides excellent flexibility for browser testing across operating systems, making it a solid choice for web applications. For mobile testing, Appium extends testing capabilities to native and hybrid apps. Your choice should align with your team's skills and target platforms. For instance, a project supporting both web and mobile platforms might benefit from combining Selenium for browser tests and Appium for mobile testing.

Designing Stable and Reliable UI Tests

UI tests often break due to changing interfaces, dynamic content, and timing issues. The Page Object Model (POM) pattern helps create more stable tests by separating test logic from page element details. This makes tests easier to update when the UI changes. Adding explicit waits for dynamic elements prevents timing-related failures. For example, when testing a form submission, waiting for success messages to appear ensures the test accurately verifies the outcome rather than failing because it checked too early.
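In Selenium's Python bindings, explicit waits are provided by `WebDriverWait` combined with an `expected_conditions` predicate. To show the underlying mechanism without a browser, here is a minimal standard-library polling helper in the same spirit; `wait_until` and the simulated form submission are hypothetical.

```python
import threading
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 5.0, poll: float = 0.05) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(poll)


if __name__ == "__main__":
    page = {"message": None}

    def submit_form() -> None:
        # Simulate a server response that arrives after a short delay.
        time.sleep(0.2)
        page["message"] = "Thanks! Your form was submitted."

    threading.Thread(target=submit_form).start()
    # Reading page["message"] right away would see None and fail too early.
    print(wait_until(lambda: page["message"], timeout=2.0))
```

Unlike a fixed `sleep`, the wait returns as soon as the message appears and only fails after a clear deadline.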

Handling Dynamic UI Elements and Test Data

Dynamic content and changing data make UI testing complex. Use reliable element locators that remain stable even when surrounding content changes - prefer unique IDs and specific CSS selectors over long, position-based XPath expressions that break whenever the page layout shifts. Good test data management also helps create consistent results. Create reusable test data sets and implement clear setup and cleanup processes. Think of it like preparing for a cooking show - having ingredients measured and ready makes the actual cooking smooth and predictable.
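Here is a minimal `unittest` sketch of reusable test data with explicit setup and cleanup. `InMemoryStore` and the discount rule are hypothetical stand-ins for your application. `subTest` runs the same check across each row of the shared data set and reports failing rows individually.

```python
import unittest


class InMemoryStore:
    """Hypothetical stand-in for a database the tests write to."""

    def __init__(self) -> None:
        self.orders: dict[str, float] = {}


def apply_discount(total: float, code: str) -> float:
    """Hypothetical function under test: SAVE10 takes 10% off, other codes do nothing."""
    return round(total * 0.9, 2) if code == "SAVE10" else total


# Reusable data set: (order total, coupon code, expected total).
DISCOUNT_CASES = [
    (100.00, "SAVE10", 90.00),
    (19.99, "SAVE10", 17.99),
    (50.00, "BOGUS", 50.00),
]


class DiscountTests(unittest.TestCase):
    def setUp(self) -> None:
        # Fresh, known state before every test.
        self.store = InMemoryStore()
        self.store.orders["order-1"] = 100.00

    def tearDown(self) -> None:
        # Clean up so no data leaks into the next test.
        self.store.orders.clear()

    def test_discount_table(self) -> None:
        for total, code, expected in DISCOUNT_CASES:
            with self.subTest(total=total, code=code):
                self.assertEqual(apply_discount(total, code), expected)

    def test_seeded_order_exists(self) -> None:
        self.assertIn("order-1", self.store.orders)
```

Saved as a module, this runs with `python -m unittest <module_name>`; adding a new scenario means adding one row to `DISCOUNT_CASES`.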

Maintaining Test Reliability Across Platforms

Testing across platforms often reveals inconsistencies in how features behave. Focus first on core functionality that should work the same way everywhere. However, recognize when platform-specific test cases are needed - mobile touch events differ from desktop mouse clicks, for example. Add detailed logging to quickly identify platform-specific issues. Good logs save debugging time by showing exactly where and how tests fail on different platforms. Combining these practices with the right tools and patterns creates reliable cross-platform tests that help ensure software quality.
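One lightweight way to make logs platform-aware is to attach the platform name to every record with the standard library's `logging.LoggerAdapter`. The platform names, logger names, and messages below are illustrative.

```python
import io
import logging


def platform_logger(platform: str, stream) -> logging.LoggerAdapter:
    """Return a logger whose records all carry the given platform name."""
    logger = logging.getLogger(f"uitests.{platform}")
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate output via the root logger
    if not logger.handlers:   # attach a handler only once per platform
        handler = logging.StreamHandler(stream)
        handler.setFormatter(logging.Formatter("[%(platform)s] %(levelname)s %(message)s"))
        logger.addHandler(handler)
    return logging.LoggerAdapter(logger, {"platform": platform})


if __name__ == "__main__":
    buffer = io.StringIO()
    for platform in ["chrome-desktop", "safari-ios"]:
        log = platform_logger(platform, buffer)
        log.info("opening checkout page")
        if platform == "safari-ios":
            log.error("tap on 'Pay' did not submit the form")
    print(buffer.getvalue(), end="")
```

With every line prefixed by its platform, a failure that only appears on one browser or device stands out immediately when logs from a parallel run are merged.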

Measuring Success and Scaling Your Test Automation

Creating effective automated testing requires ongoing attention and refinement. To build a truly valuable testing practice, you need to both track meaningful metrics and adapt your approach as your software grows. Recent data shows that teams who focus on measuring concrete results - like faster releases and fewer bugs - are 85% more likely to succeed with their automation efforts.

Establishing Meaningful Metrics

Rather than just counting the number of automated tests, focus on metrics that directly show business value and impact:

- Reduced Time to Market: Track how automation speeds up your release cycle. If you can cut a 6-week release cycle down to 4 weeks through automation, that's a clear win worth measuring.

- Improved Product Quality: Monitor how many bugs reach production, especially critical issues. Look at defect rates before and after implementing automation to show real quality improvements.

- Increased Test Coverage: While 100% test coverage isn't realistic, steadily expanding your automated test coverage provides better protection against regressions.

- Faster Test Execution Time: Measure how quickly your test suite runs. For example, using parallel test execution could cut a 12-hour test run down to 4 hours, letting developers ship features much faster.

- Lower Test Maintenance Costs: Track the time and effort spent fixing and updating tests as your code changes. Well-designed tests should require minimal ongoing maintenance.

By consistently measuring these key metrics, you can clearly see where automation delivers value and make smart decisions about where to focus your efforts.
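Most of these metrics reduce to simple arithmetic. The sketch below uses the example figures from the list (a 6-week cycle cut to 4 weeks, a 12-hour run cut to 4 hours). The defect counts are made-up placeholders, and "defect escape rate" here is taken to mean the share of all found defects that reached production.

```python
def percent_reduction(before: float, after: float) -> float:
    """How much smaller `after` is than `before`, as a percentage of `before`."""
    return (before - after) / before * 100


def defect_escape_rate(found_in_production: int, found_before_release: int) -> float:
    """Share of all found defects that reached production, as a percentage."""
    total = found_in_production + found_before_release
    return found_in_production / total * 100 if total else 0.0


if __name__ == "__main__":
    print(f"release cycle: {percent_reduction(6, 4):.1f}% shorter")   # 33.3% shorter
    print(f"suite runtime: {percent_reduction(12, 4):.1f}% shorter")  # 66.7% shorter
    # Hypothetical defect counts before and after automation.
    print(f"escape rate before: {defect_escape_rate(12, 48):.1f}%")   # 20.0%
    print(f"escape rate after:  {defect_escape_rate(5, 62):.1f}%")    # 7.5%
```

Tracking the same few numbers release after release is more informative than any single snapshot.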

Scaling Your Automation for Growth

As your application and team get bigger, your testing approach needs to grow too. This means more than just writing additional tests - you need thoughtful planning in several key areas:

- Test Maintenance Strategies: Create clear processes for handling broken tests, including good documentation, version control for test code, and systematic approaches to investigating and fixing failures.

- Continuous Improvement through Feedback Loops: Regularly review test results and gather input from the team to keep improving. For example, analyzing patterns in test failures helps prevent similar issues in the future.

- Team Development and Skill Enhancement: Help your team learn new testing tools and techniques. If a promising new test framework emerges, give your team time to evaluate it and see if it could improve your process.

- Strategic Tool Selection and Integration: Pick testing tools that can grow with you, like cloud platforms that enable parallel test runs and work well with your deployment pipeline.
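For the feedback-loop practice above, one simple way to analyze failure patterns is to group failures by a normalized error signature, so messages that differ only in IDs, numbers, or quoted values collapse together. The normalization rules here are illustrative assumptions; adjust them to your own error formats.

```python
import re
from collections import Counter


def signature(message: str) -> str:
    """Normalize an error message so variants of the same failure match."""
    sig = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)  # memory addresses
    sig = re.sub(r"\d+(\.\d+)?", "<n>", sig)            # ids, counts, durations
    sig = re.sub(r"'[^']*'", "'<s>'", sig)               # quoted values
    return sig


def top_failure_patterns(messages: list[str], n: int = 3) -> list[tuple[str, int]]:
    """Return the n most common failure signatures with their counts."""
    return Counter(signature(m) for m in messages).most_common(n)


if __name__ == "__main__":
    failures = [
        "TimeoutError: element '#cart-42' not visible after 10s",
        "TimeoutError: element '#cart-7' not visible after 10s",
        "AssertionError: expected 3 items, got 2",
        "TimeoutError: element '#promo' not visible after 15s",
    ]
    for sig, count in top_failure_patterns(failures):
        print(count, sig)
```

Here three superficially different timeouts surface as one recurring pattern, which points the team at a shared cause rather than three separate bugs.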

Following these practices helps you both measure success and build testing processes that adapt as you grow. The result? Faster development and better quality software.

Want to improve how your team handles code integration and deployment? Learn more about how Mergify can help your team ship better software faster.