Post Mortem of Incident 2024-09-17

On September 17th, 2024, Mergify service went down from 07:45 UTC to 09:35 UTC. Due to the nature of the incident, we think it would be interesting to share with our users the context of this incident, how we handled it, how we restored the service, and what we are planning for the future to avoid those.

Context

The Mergify engineering team relies on Mergify to merge its code — obviously. We rely on a scheduled merge queue: our merge gate opens from 09:00 CEST to 17:30 CEST, mapping our team's working hours. We use this default schedule to ensure deployments happen during working hours, making it easy to handle any consequences that pushing code might have.

At 09:40 CEST, a pull request tweaking monitoring tags was merged and deployed 4 minutes later, at 09:44 CEST. At 09:48 CEST, one of the Mergify engineers noticed that the service was down, and one of our customers reached out soon after, indicating they were not able to log into the dashboard.

Investigating

Luckily, that day, the whole Mergify team was located in the same space as we were hosting one of our regular on-site events (we're a fully remote team spread in France). While we're used to managing incidents remotely, this gave us a chance to perfect our incident response plan with a lower latency channel.

Checking our monitoring system indicated that our backend system no longer processed pull requests. Our ingestion endpoint also did not acknowledge the events (webhooks) sent from GitHub.

Our first instinct was to revert our code to a previous working version, which we did around 10:20 CEST. That did not solve the issue. This made sense since the latest change merged, the one tweaking monitoring, couldn't impact the whole system.

Our second intuition was to look, therefore, at external systems. We quickly checked for ongoing incidents on Heroku, AWS, and GitHub, the main systems we rely on to operate, but none of them had open incidents at this time.

Our logging system reported a few SQL connection errors, which sent us to a wrong lead for a few minutes. We saw a spike in connection errors between our container and database system and decided to open a ticket on Heroku to check for any ongoing incidents. We had a few experiences where Heroku would backfill their incident system hours after an incident would happen, making us suspicious that we'd have been deployed on a Heroku system unable to reach the database. We tested the connection manually and realized this was not the root cause of our incident.

At this point, we started to be clueless about why the application would be stuck on startup, and we had to enable more logging to dig further. After digging into the boot code of Mergify, one of the newly enabled log lines indicated that Mergify was stuck in a loop talking to GitHub API.

The Change

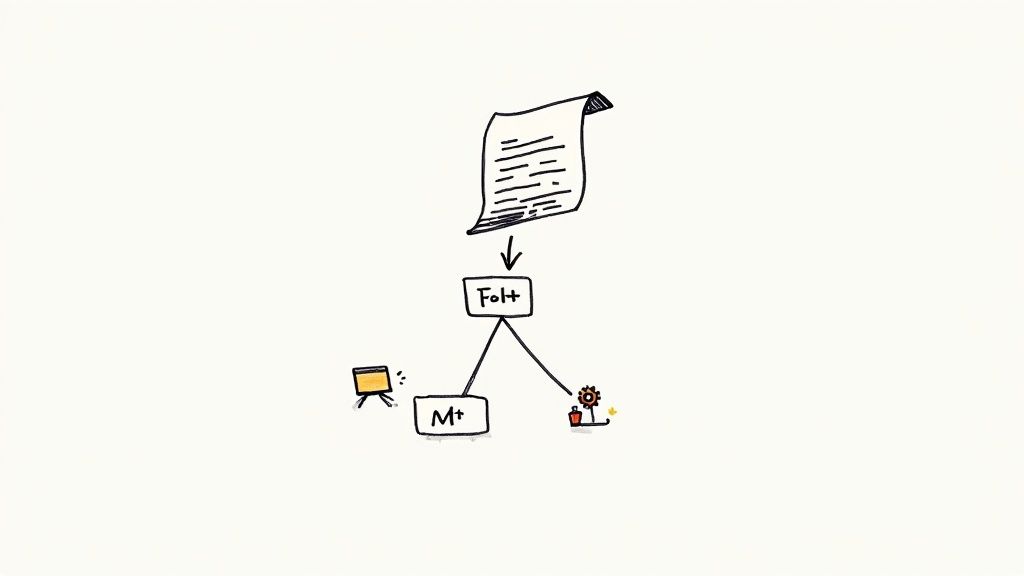

A few months ago, we changed the Mergify engine startup process to simplify its deployment. Mergify relies on a GitHub App to operate. To make those apps work seamlessly, GitHub creates a special bot user for those — in our case, mergify[bot] on github.com. Rather than hardcoding those data, in the boot process, our engine does various introspection and sanity checks to retrieve information about itself using the GitHub API. As this information is not supposed to change often, we cache it for several hours to avoid retrieving it on every deployment.

The Impact

Unfortunately, the cache had expired that morning, and our GitHub app had to re-request its information to the GitHub API. It does this by requesting https://api.github.com/users/mergify[bot] which returned a 403 Forbidden that day.

The system did not expect this, as this call is allowed according to the documentation and API specification. Suddenly, Mergify was not able to inspect its GitHub App on GitHub itself. The application would retry without complaining about the impossible issue that had just arrived. We started to wonder if GitHub did something wrong with our GitHub Apps credential. We spent time testing more calls manually, but they seemed to work correctly.

We soon noticed that an anonymous call to this API endpoint — which was a few hours sooner — suddenly started to result in a 404 Not Found.

We quickly discovered that we were not the only ones impacted by this change in permissions — a discussion was opened by other users.

We reached GitHub support. An hour later, they privately acknowledged the incident and apologized. As they consider it a simple bug, they do not want to share any more information publicly. Don't expect a post-mortem.

The Fix

On Mergify's side, the fix was easy to implement once we found the root cause. At 11:35 CEST, we changed the code to use a hardcoded version of the needed metadata, deployed it, and restarted the service.

Our long-term fix is to store those metadata for longer in order to avoid querying GitHub entirely for this once the service is deployed. We're working on it.

Unfortunately, this is not the first time we've been affected by a sudden change in GitHub's API behavior. These events regularly happen and impact our system in various ways, from minor inconveniences to major outages. Unfortunately, we have little control over those changes, and while GitHub is making its best effort to avoid those disruptions and version their API, those kinds of issues still tend to happen regularly.

Be sure that we're making sure to make our service as reliable as possible while being dependent on GitHub. This means relying on their system as little as possible when achievable and having a team that can respond quickly to any incident.