Flaky tests: what are they and how to classify them?

What is a flaky test? It is an important question, since automated testing is central to CI/CD. This article explains what makes a test flaky and describes the different types of flaky tests, so that you can classify them.

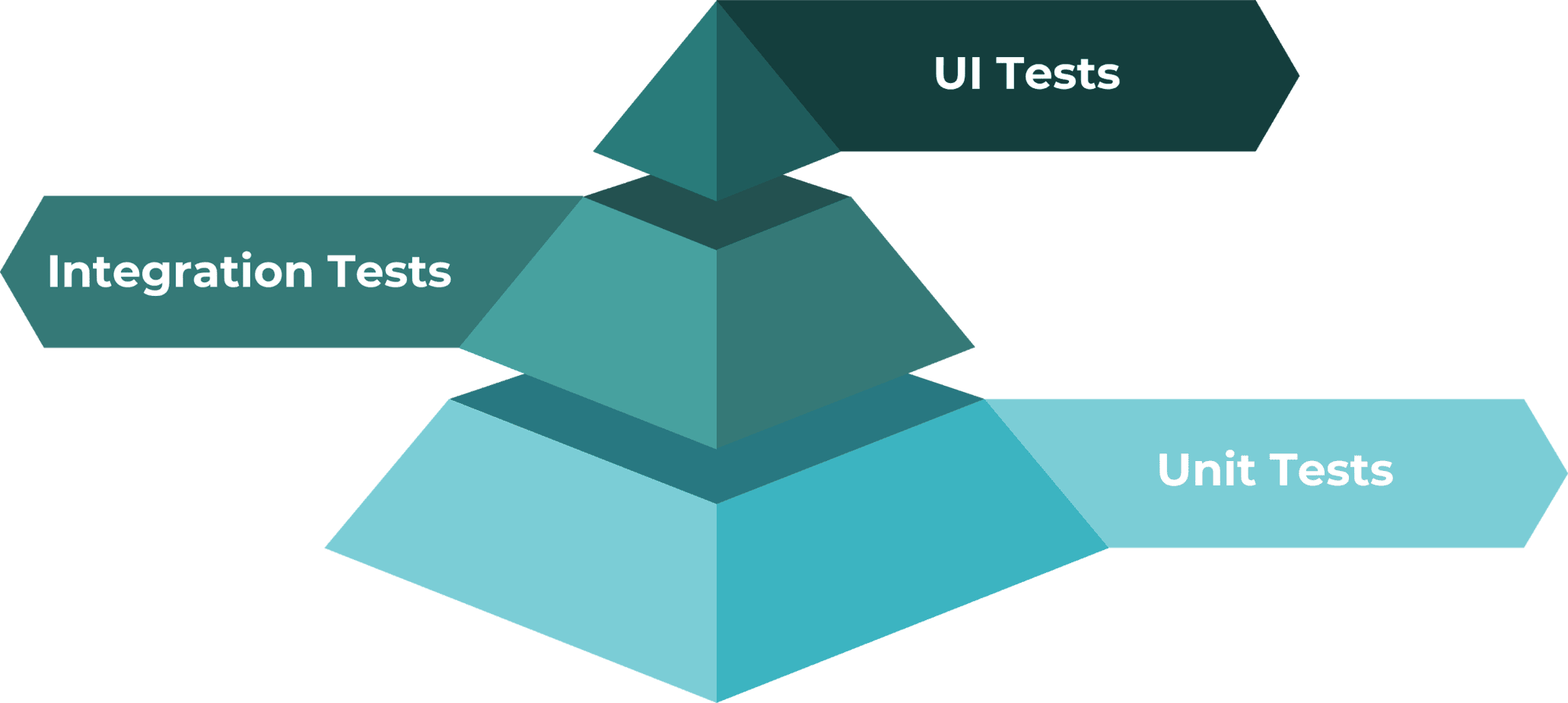

Nowadays, automated testing is an essential practice that allows you and your teams to deliver and improve your project continuously. Determinism is one of its core principles, yet you can never fully achieve it. Every test is designed to run and deliver a predictable outcome, but sometimes it does not meet that expectation. When that happens, you are probably facing what we call a flaky test.

What is a flaky test?

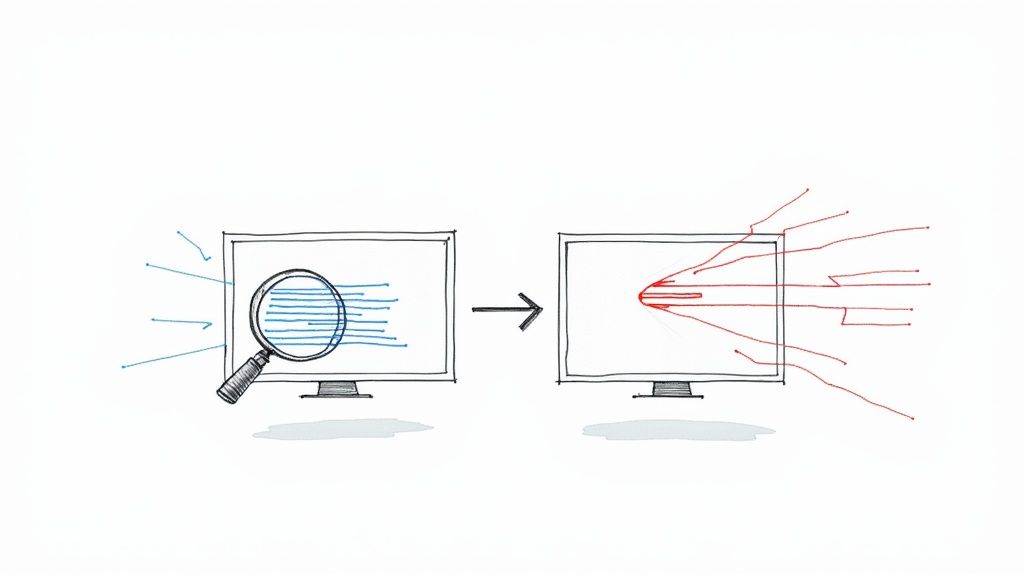

A flaky test can be seen as a two-state object that passes and fails intermittently, given the same test configuration. Throughout your project's life, you will never be able to avoid dealing with flaky tests entirely, as their number tends to grow with the size of your test suites.
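As a minimal sketch of this definition, the Python snippet below reruns one test under unchanged conditions and reports it as flaky when both outcomes are observed. The helper names (`observe_outcomes`, `is_flaky`, `make_intermittent_test`) are hypothetical and do not belong to any test framework; the intermittent test is simulated with a call counter so the example stays reproducible.

```python
from typing import Callable


def observe_outcomes(test: Callable[[], None], runs: int = 20) -> set:
    """Run the same test repeatedly and collect the distinct outcomes seen."""
    outcomes = set()
    for _ in range(runs):
        try:
            test()
            outcomes.add(True)   # the run passed
        except AssertionError:
            outcomes.add(False)  # the run failed
    return outcomes


def is_flaky(test: Callable[[], None], runs: int = 20) -> bool:
    """A test is reported as flaky when it both passed and failed."""
    return len(observe_outcomes(test, runs)) == 2


def make_intermittent_test(fail_every: int) -> Callable[[], None]:
    """Hypothetical stand-in for a flaky test: fails on every Nth call."""
    calls = {"count": 0}

    def test() -> None:
        calls["count"] += 1
        assert calls["count"] % fail_every != 0

    return test


def stable_test() -> None:
    assert 1 + 1 == 2
```

Seeing only passes does not prove that a test is stable: a rare failure may need many more runs before it shows up.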

A further problem is that flaky tests make CI test suites unreliable, reducing the confidence you can have in your testing and degrading the overall development experience. You can tolerate a little flakiness in your tests; it will be barely noticeable. But with a lot of it, your whole test suite loses its value and lets bugs and poor code slip through.

Flaky tests are not easy to catch due to their evasive nature. The frequency of flakiness varies widely: some tests fail often, while others fail so rarely that they go undetected. Moreover, tests can be flaky as a direct result of the environment they are run in. Flakiness can originate from multiple sources, and investigating it can reveal bugs you would never have found otherwise, as well as poor infrastructure or test environment design.

On the cost side, flakiness takes a lot of time, money, and resources to deal with, while also slowing the project's progress and decreasing the team's trust in the development process. Because costs are high, fixing flaky tests is worthwhile for the critical and large ones, but the return on investment inevitably diminishes as the number of remaining flaky tests shrinks.

This leaves you in a paradoxical situation where eliminating all flaky tests is neither achievable nor the best use of your resources. In reality, there is a balance, a tolerance threshold.

How much flakiness can you handle? That is the crucial question you have to answer.

How to classify them?

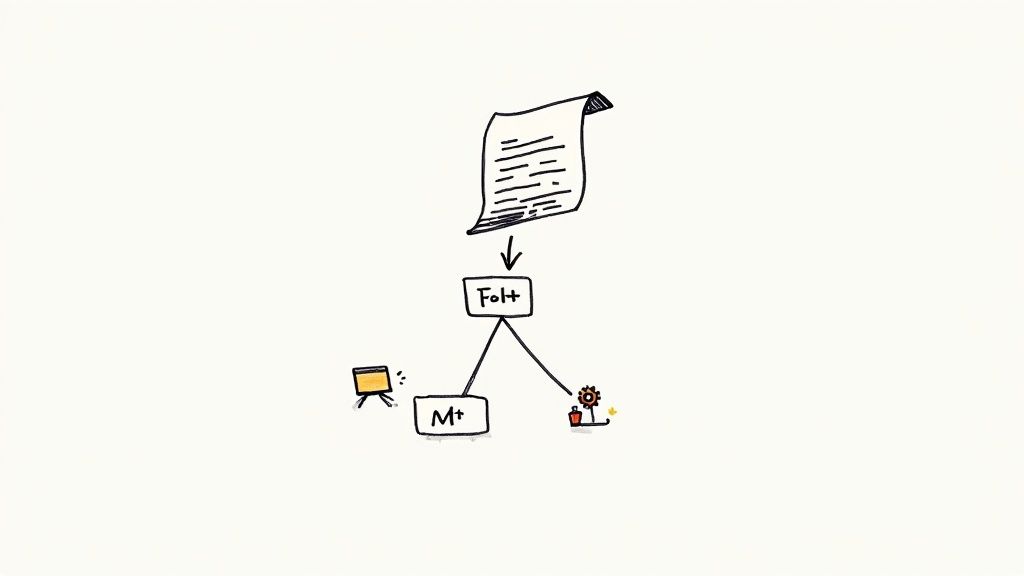

To reach that balance, classifying flaky tests is unavoidable. There is no single correct solution, and the categorization system can be adapted to your organization's needs and vision. Still, when handling flaky tests, classification is an essential step toward making better use of your resources (people, time, CI, and cost).

The goal is to separate flaky tests into groups according to their origin in order to prioritize them. Two categories stand out across software projects:

- Independent flaky tests: Tests that fail on their own, whether run inside or outside the test suite. Because they are easy to reproduce, they are easier to notice, debug, and solve.

- Systemic flaky tests: Tests that fail because of environmental issues, shared state, or even their order in the test suite. They are much harder to detect and debug, since their behavior can change as the system or workflow evolves.
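One way to tell the two categories apart can be sketched in Python as follows. It is only a heuristic built on an assumption: the same test has been run several times in isolation and several times inside the full suite, and its pass/fail results have been recorded. The `classify` function and its labels are hypothetical.

```python
from typing import List


def classify(isolated_results: List[bool], suite_results: List[bool]) -> str:
    """Heuristically label a test from its recorded pass/fail history.

    isolated_results: outcomes when the test runs alone.
    suite_results: outcomes when the test runs inside the full suite.
    """
    if not isolated_results or not suite_results:
        raise ValueError("both histories need at least one recorded run")

    isolated = set(isolated_results)
    in_suite = set(suite_results)

    # Both outcomes appear even when the test runs alone:
    # the flakiness does not depend on the rest of the suite.
    if len(isolated) > 1:
        return "independent"

    # Consistent alone, but inconsistent (or different) inside the suite:
    # shared state, ordering, or the environment is likely involved.
    if len(in_suite) > 1 or in_suite != isolated:
        return "systemic"

    return "stable"
```

A test that always passes alone and always fails inside the suite is labeled systemic here as well, which matches the order-dependent case.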

While a test can be flaky for countless reasons, some are more common than others. Identifying these origins can lead to a more efficient categorization, allowing you to create sub-categories or new ones. Here are some of the most frequently encountered reasons for flakiness:

- Accessing resources that are not strictly required: Tests that, for example, download a file from outside the tested unit or use a system tool that could be mocked.

- Insufficient isolation: Tests that do not use their own copies of resources can run into race conditions or resource contention when run in parallel. Also, tests that change the system state or interact with databases should always clean up after themselves. Otherwise, other tests might fail because of these interactions.

- Concurrency: Several parallel threads interact in an undesirable way (race conditions, deadlocks, etc.).

- Test order dependency: Tests that deterministically pass or fail depending on the order in which they are run in the test suite.

- Network: Tests relying on network connectivity, which is not a parameter that can be fully controlled.

- Time: Tests relying on system time can be non-deterministic and hard to reproduce when failing.

- Input/Output operations: A test can be flaky if it does not properly close and release the resources it has accessed.

- Asynchronous waits and unsynchronized or poorly synchronized invocations: Tests that make asynchronous calls but do not wait appropriately for the result. Tests should avoid fixed sleep periods, since the required waiting time can differ depending on the environment.

- Accessing systems or services that are not perfectly stable: It is better to mock services as fully as possible to avoid depending on external, uncontrolled factors.

- Usage of random number generation: When generating random numbers or other random objects, it is helpful to log the generated value (or the seed) so that a test failure is not needlessly difficult to reproduce.

- Unordered collections: Avoid making assumptions about element order in an unordered collection.

- Hardcoded values: Tests that use constant values for elements or mechanics that might change over time.

- Overly restrictive testing range: When an assertion checks against an output range, not all valid outcomes may have been considered, making the test fail when they occur.

- Environment dependency: Test outcomes can vary depending on the environment the test is run in.
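To illustrate one item from this list, the asynchronous wait, here is a minimal Python sketch that replaces a fixed sleep with polling bounded by a timeout. The `wait_until` helper and the example test are hypothetical, written only for illustration; the background work is simulated with a `threading.Timer`.

```python
import threading
import time
from typing import Callable


def wait_until(condition: Callable[[], bool],
               timeout: float = 5.0,
               interval: float = 0.01) -> bool:
    """Poll `condition` until it is true or `timeout` seconds have elapsed."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    # One last check so a result arriving right at the deadline still counts.
    return condition()


def test_background_job_completes() -> None:
    done = threading.Event()

    # Simulated asynchronous work that finishes after roughly 50 ms.
    worker = threading.Timer(0.05, done.set)
    worker.start()

    # Rather than sleeping for a fixed period and hoping the job is done,
    # wait only as long as needed, up to a generous upper bound.
    assert wait_until(done.is_set, timeout=2.0)
    worker.join()
```

The test returns as soon as the condition holds on a fast machine, while a slow CI runner still has the full timeout available before the test is declared failed.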

Knowing which failure reasons occur most often in your project will help you separate and prioritize the affected tests. Fixing them then becomes easier, since data on similar cases already exists. That data can point whoever investigates in specific directions, saving time and potentially revealing broader weak points in the project, such as writing practices, environment flaws, or poor workflow processes.
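A simple tally is often enough to get started. The sketch below assumes you already keep a record of each investigated flaky test together with the reason assigned to it; the record format, the test names, and the `rank_flakiness_reasons` function are all hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def rank_flakiness_reasons(
    records: Iterable[Tuple[str, str]],
) -> List[Tuple[str, int]]:
    """Count how often each reason was assigned, most frequent first.

    Each record is a (test_name, reason) pair.
    """
    counts = Counter(reason for _test_name, reason in records)
    return counts.most_common()


# Hypothetical investigation log, for illustration only.
example_records = [
    ("test_login", "network"),
    ("test_upload", "asynchronous wait"),
    ("test_sync", "network"),
    ("test_report", "time"),
]

ranking = rank_flakiness_reasons(example_records)
```

The most frequent reasons at the top of the ranking are natural candidates for their own sub-category or for a dedicated prevention process.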

Conclusion

Now that we know what flaky tests are, it should be simpler to identify them and separate them into groups to make them easier to handle.

It will also be easier to set up processes to fix and prevent them more effectively. We hope this information will help you in your fight against flaky tests, as it has helped us at Mergify!