Flaky Tests: How to Fix Them?

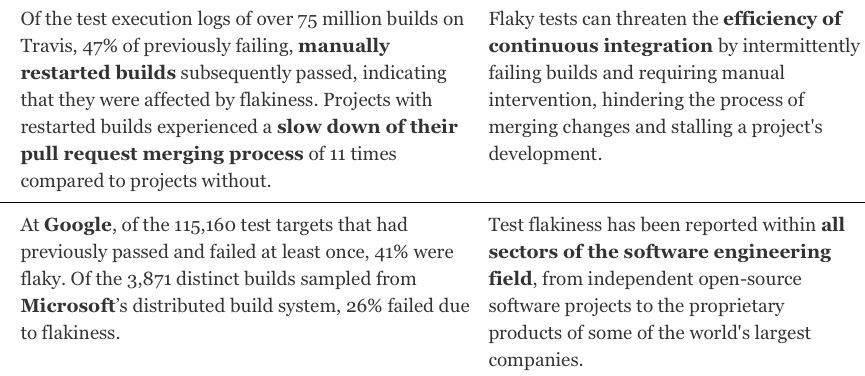

In the world of software development, tests are easier to write than to maintain.

This statement is even more accurate when it comes to flaky tests. You know, those tests that pass 90% of the time but sometimes fail without you knowing why 😢.

In cases of flakiness, each problem may need a different solution, involving a wide range of resources that can be expensive to sustain. This is why, following on from our last post about categorization, you need strategies to fix those uncooperative tests as efficiently as possible.

Before going into it, remember that strategies for fixing flakiness depend highly on the application and organization. Still, the ideas and approaches highlighted in this article should be accurate enough to help you in your fight against flaky tests.

Flakiness and laziness are best friends!

How often have you noticed a failing test and re-run it until it passed again? At first sight, that might seem enough to move on with your workflow, but if you want to be consistent and professional, you can't just ignore those failures. Indeed, when confronted with flaky tests, organizations tend to implement a simple automatic retry system. This trades longer build times and higher costs for retained test coverage and build reliability, without solving the core problem. It allows flakiness to keep growing until retries are no longer enough. Other instinctive actions that are not solutions at all, but mistakes, are the following:

- Do nothing and accept the flakiness;

- Delete and forget about the test;

- Temporarily disable the test.

Those (lazy) actions decrease confidence in your project and in your ability to deliver, while letting bugs proliferate and wasting time. It is a vicious, demoralizing circle.

What to do, then?

To avoid such situations, flaky tests should be handled as soon as they are encountered. Indeed, the first appearance of a flaky test is the best moment to fix it. At this point, every change is still fresh enough to give you context, and even leads for investigating the problem. If you don't have time, your second reflex should be to document it, create a ticket, and make room in your planning to handle it as quickly as possible. Remember, reporting a problem is not fixing it; technical debt is a reality, and a "let's fix it right now" mentality is the right one to adopt when facing flakiness in your tests.

For this, you have to give your engineers the time and resources to work on such problems. The pressure on developers to deliver features can be high, as engineering time is expensive, but it should not override the project's health and stability.

Developers must take test flakiness seriously, as it can endanger your whole project's workflow.

Once you've understood the situation, various practices and tools are available to help you deal with flaky tests:

- Split your test suites: Running tests in parallel can shorten your test runs. The time saved gives you quicker feedback and more agility in deciding what to do when flakiness is noticed. Also, depending on your test environment, it could help you minimize test failures due to slow load times and concurrency overload.

- Execute tests on VMs or controlled environments: When using real devices, there is always a probability that additional configuration factors can be introduced and cause flakiness. Reproducing the failure could be a complex mission without complete control of said devices. Using VMs gives you more control over your test environments and simplifies failure reproducibility.

- Log and document: No matter how you handle flaky tests, generating records and documentation on your test runs is essential. Every scrap of information can be helpful, such as event logs, memory maps, profiler outputs, or screenshots for UI tests. That way, you'll know which tests produced inconsistent results, how they were handled, and the reason for the failure if it was found. Applying such processes will maintain faith and confidence in your test suite and project. Moreover, it will help you develop best practices and notice patterns that could simplify your fight against flakiness.

- Separate and quarantine: Just because one test is flaky does not mean the whole test suite should be reported as failed. Once you've completed a proper analysis, you should separate and quarantine the flaky test until it is resolved, so that you don't lose the value and results of the rest of the suite. On the cost side, you'll save a lot of runtime, since only the flaky tests need to be re-run, not every successful one. If a test suddenly passes when run in isolation, it can hint at inter-test dependence in your suite, which makes the investigation easier. You can automate your quarantining system with ticket creation and bot reminders to ensure the issue isn't overlooked. With this solution, you'll temporarily lose the coverage of the flaky tests, but you'll also be able to handle and resolve the problem quickly. Doing this task manually takes time, and automation can save both time and money, but be careful: your automation is highly dependent on your flaky test classification. You need to be observant so that you don't mistake actual bugs or defects, which could end up on the user side, for flakiness.

- Retry your tests (the good way): Test retrying is controversial, but there are intelligent ways of implementing it. A retry system should not blindly retry until a successful result is returned; instead, it should only retry in certain situations. For example, when you've concluded that flakiness comes from an external source or dependency, retrying might be your only solution. However, when applying retries to tests, you should always flag or document them as being a target for retries, and for what reason, so that they are not forgotten, because retrying costs time and money. A retrying system can save time, but it does not solve anything and can make your test suite unreliable over time if you don't address the underlying issues. It is then up to you to determine the specific criteria and contexts in which this option can be used.

- Monitor and alert: An intelligent solution that works alongside the above points is implementing a monitoring system that acts on your logs, stats, and analysis. It can automatically quarantine a test after X failures, keep an eye on the retry system, and detect changes in the level of flakiness (failure occurrences). Analyzing your data may help you identify the changes that led to flaky tests, sometimes without even having to re-run them. In keeping with the idea of handling and documenting flaky tests immediately, you could plug a notification system on top of your monitoring. Sending emails, creating tickets, and notifying the developers responsible for the code about what is going on is the best way to keep the information flowing and the fight against flaky tests going.

- Develop investigation processes: Based on your classification and investigation history, you should be able to generate stats and analysis to guide your solving process. Some failure origins might come up more often than others, depending on your environment, organization, etc. This data will allow you to develop tailored processes that save you time when investigating flaky tests.

- Run tests multiple times before merge: This practice consists of running a test several times, in various test environments and contexts (isolated, in combination) if needed, to challenge its stability. This should happen before the test is finally merged, so that each addition to the test suite has minimal exposure to flakiness.

- Write and apply good practices: Following the documentation mindset, you must agree on keeping and using an up-to-date book of good practices. As it evolves with your experience of flaky tests, applying these practices will ensure flaky tests are handled before they are even deployed and noticed. It can be a simple list of things to avoid that often indicate a test is unreliable: for example, hard-coded sleeps or timeouts, random generation, or assertions on partially predictable data. It can also be a list of minor tweaks that improve test suite stability: for example, keeping tests small, with less complex logic, reducing the amount of code each test covers, or cleaning installations between test runs.
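To make the "retry the good way" idea above more concrete, here is a minimal sketch in Python. It assumes a hypothetical `retry_on` decorator and a hypothetical `RETRY_REGISTRY` dictionary (neither comes from a real library): a test is retried only for the exception types you have explicitly attributed to an external dependency, and the reason for allowing retries is recorded so it is not forgotten.

```python
import functools

# Hypothetical registry: test name -> documented reason for allowing retries.
RETRY_REGISTRY = {}


def retry_on(exceptions, attempts=3, reason=""):
    """Retry a test only on the listed exception types, and record why."""
    def decorator(test_func):
        # Flag the test as a retry target, with its justification.
        RETRY_REGISTRY[test_func.__name__] = reason

        @functools.wraps(test_func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, attempts + 1):
                try:
                    return test_func(*args, **kwargs)
                except exceptions:
                    # Out of attempts: surface the failure instead of hiding it.
                    if attempt == attempts:
                        raise
        return wrapper
    return decorator


# Simulated external dependency that fails on the first two calls.
calls = {"count": 0}


@retry_on((ConnectionError,), attempts=3,
          reason="external sandbox occasionally drops connections")
def test_payment_sandbox():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("sandbox unavailable")
    assert True
```

Because only `ConnectionError` is listed, an `AssertionError` raised by a genuine defect is not retried and fails on the first attempt. Printing `RETRY_REGISTRY` at the end of a run gives you the list of tests that still depend on retries and why.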

Conclusion

Since flaky tests pose both technical and procedural challenges, they call for both technical and procedural solutions. Keeping track of flakiness across your testing pipeline is the first and most crucial step, so that flaky tests do not become overwhelming or forgotten.

Moving on from there, if you keep in mind the points and strategies presented in this article, you will be able to act quickly and handle flaky tests as soon as they are noticed.

Remember that flakiness is unavoidable over time; you can only aim for a threshold where flakiness is reduced but not nonexistent. What can keep you around this threshold are solid processes and lessons learned over time. They will strengthen your confidence in your workflow, improve your development experience, and erase the nightmares that flaky tests can cause. You wrote them; you can fix them!
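As a rough illustration of what keeping track of flakiness could look like, here is a small Python sketch. The `FlakinessMonitor` class, its `window` and `quarantine_after` parameters, and the returned message format are all assumptions made for this example, not an existing tool. It keeps a sliding window of recent results per test and flags a test for quarantine only when it has failed at least X times and also passed at least once in that window, so a consistently failing test (more likely a real bug) is not silently quarantined.

```python
from collections import defaultdict, deque


class FlakinessMonitor:
    """Track recent results per test and flag inconsistent ones."""

    def __init__(self, window=20, quarantine_after=3):
        self.quarantine_after = quarantine_after
        # Keep only the last `window` results for each test.
        self.history = defaultdict(lambda: deque(maxlen=window))
        self.quarantined = set()

    def record(self, test_name, passed):
        """Store one result; return an alert message if the test gets quarantined."""
        runs = self.history[test_name]
        runs.append(passed)
        failures = runs.count(False)
        # Flaky means inconsistent: enough failures AND at least one pass.
        is_flaky = failures >= self.quarantine_after and True in runs
        if is_flaky and test_name not in self.quarantined:
            self.quarantined.add(test_name)
            return (f"quarantine {test_name}: "
                    f"{failures} failures in last {len(runs)} runs")
        return None

    def failure_rate(self, test_name):
        """Share of failed runs in the current window (0.0 if no runs yet)."""
        runs = self.history[test_name]
        return runs.count(False) / len(runs) if runs else 0.0
```

In a real pipeline, the message returned by `record` is the place where you would hook in ticket creation or a notification, and `failure_rate` is the kind of number to watch for changes in the level of flakiness.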