Ensuring Seamless SQL Migrations in Production

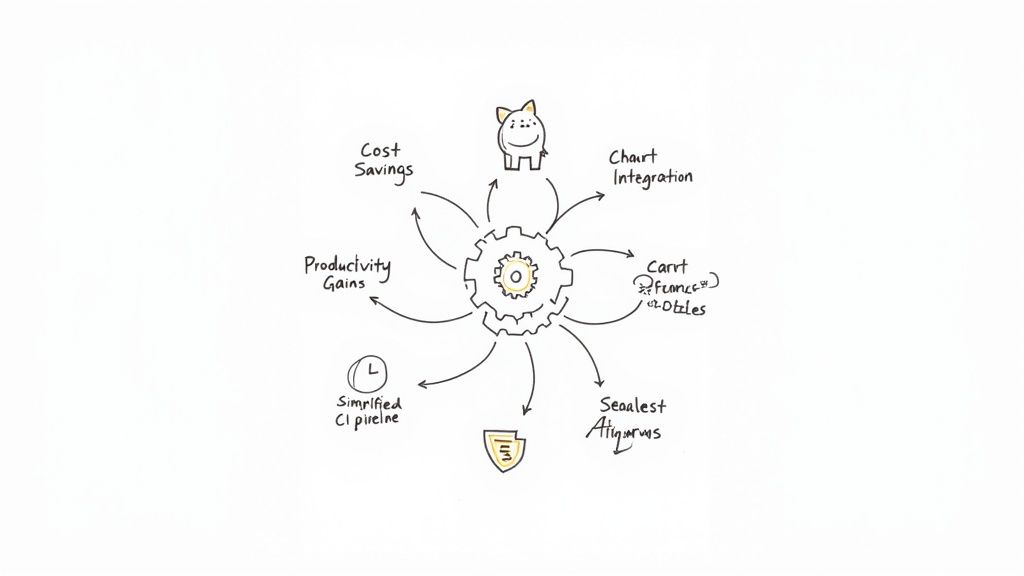

Learn how to test SQL migration scripts in your CI/CD pipeline with PostgreSQL, SQLAlchemy, and Alembic. This guide explains creating and comparing database schemas, handling migration script evolution, and overcoming challenges like column order differences.

At Mergify, we use PostgreSQL to handle some of the data, such as the state of GitHub objects (pull requests, commits, etc.) or Mergify's event log. Since the beginning, we have entirely interacted with the database using an ORM. We chose SQLAlchemy because it's not attached to any framework, the ORM is mature, and SQLAlchemy has supported asynchronous I/O since its 2.0.0 version.

As we ship several times a day to production, we have to implement a solid CI/CD that perfectly handles the database's evolution. Each database schema modification must be thoroughly tested and automatically applied to the production database. This workflow is well documented, like in Martin Fowler's blog post "Evolutionary Database Design." We have to version-control each database artifact and script every change as a migration.

Numerous libraries help you handle database migrations today. We decided to use Alembic, the most used library by SQLAlchemy users (and also maintained by SQLAlchemy maintainers). Alembic is a command-line tool. It can create migration scripts from your SQLAlchemy models automatically. Each migration script has a revision number and is version-controlled with your source code. Alembic can apply the migrations on a database and store the revision number in a table called alembic_version, so it will apply only the new migrations the next time. Typically, this command is called in the continuous delivery pipeline to upgrade the production database.

Those database schema changes are critical. You want to be sure that the SQLAlchemy models will work with the production database once the migration script is executed. How can you do that?

The Naive Test

The first test on migration scripts was quite simple. Here is the idea: you have to create two databases, one using the SQLAlchemy models and one using only the migration scripts. The two database schemas have to be the same. Pretty easy, right?

Creating a PostgreSQL server is fast and easy using Docker. On this new server, you create two empty databases. You use the first database to create all artifacts using SQLAlchemy models. We use the declarative style to define our models so we can create all database artifacts with a single command. For the second database, you use Alembic to run every migration script. At this step, you should have two databases with the exact same schema.

To compare them, you can use pg_dump to dump each database schema in an SQL file. Here is a command to do that.

pg_dump \

--dbname=postgresql://user:password@host:port/database \

--schema-only \

--exclude-table=alembic_version \

--format=p \

--encoding=UTF8 \

--file /path/to/dump.sqlDump a database schema in an SQL file

Then, you can use the Python standard library to compare the files using filecmp and difflib for example. filecmp has some functions to compare files. The following code compares two files in terms of their content.

assert filecmp.cmp(schema_dump_creation_path, schema_dump_migration_path, shallow=False)Compare two files on the disk on their size and content

Whenever this test fails, you can show the differences in the test result using difflib. It has some functions to compare sequences, so you can write a small function to display the differences between two files.

def filediff(path1: pathlib.Path, path2: pathlib.Path) -> str:

with path1.open() as f1, path2.open() as f2:

diff = difflib.unified_diff(

f1.readlines(),

f2.readlines(),

path1.name,

path2.name,

)

return "Database dump differences: \n" + "".join(diff)Compute the difference between two files

This test is great, but it has some drawbacks. For example, the order of columns in a table is important. PostgreSQL can't change the position of a column in a table (not easily, at least). You must maintain the same column order in your models as in your production database.

Moving This Test One Step Further

We used this test as is for a long time. But at some point, we had to change the way we compare two database schemas. Some models became more complex and couldn't pass the migration test anymore.

Let's take an example.

class Base:

updated_at: orm.Mapped[datetime.datetime] = orm.mapped_column(

sqlalchemy.DateTime(timezone=True),

server_default=sqlalchemy.func.now(),

)

class User(Base):

id: orm.Mapped[int] = orm.mapped_column(

sqlalchemy.BigInteger,

primary_key=True,

)Here, you have a User model that inherits from the Base model. The Base model adds a column named updated_at at the end of every child model. This can be handy. You can add the same column on several models easily. But there is a trouble: you can't add columns to a child model anymore. If you add a column to User, let's say name, this column won't be the last in the model. SQLAlchemy creates a table that looks like User(id, name, updated_at), while your migration script will add the column name at the end of the existing table, resulting in something like User(id, updated_at, name).

The migration test isn't viable anymore. When comparing the database created by SQLAlchemy with the one created by the migration scripts, we have to ignore the order of columns.

You could eventually use Alembic to compare two schemas, as it does to autogenerate migration scripts. Sadly, Alembic can't detect all differences. You have to be sure to include every artifact in your test.

>>> alembic.autogenerate.compare_metadata(migration_context, metadata)

[

(

"add_column",

None,

"user",

sqlalchemy.Column("name", sqlalchemy.String(), table=<user>),

),

]Comparison between a database (the migration context) and the models (tables metadata)

You could use migra, a tool for computing differences between databases. We used it in the past, but it is no longer maintained.

$ migra postgresql:///a postgresql:///b

alter table "public"."user" add column name text;Comparing database schemas using migra

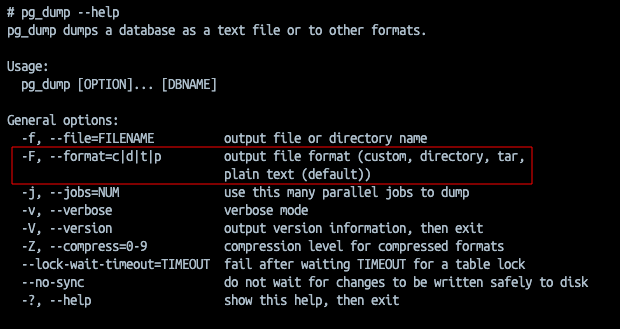

You could dump the database schema in a more convenient format than SQL, something you can easily parse like JSON. But pg_dump doesn't allow such formats.

pg_dump output file formatsSQL files generated by pg_dump are the most reliable. They contain every database artifact. In the end, we chose to use a Python library called sqlparse to parse those SQL files and compare each artifact using specific rules.

sql-compare was born. We wrote a small library to compare SQL files using sqlparse. The comparison doesn't care about comments, whitespaces between keywords, the order of the columns in a CREATE TABLE statement, or the order of enumerator values.

Ignoring the order was the main challenge. sqlparse doesn't parse each column definition distinctly. Instead, it returns a list of tokens of all kinds: column name, data type, default value, separator between columns, whitespace… We had to filter those tokens and group them by column definition before sorting those groups. Have a look at the source code if you are interested.

This library solved our issue. We include it in projects that use SQLAlchemy and Alembic, and we deliver to production every day, thanks to that. It works well and catches some migration issues from time to time whenever Alembic misses something. We decided to make it an open-source library so it can be used by others in a similar situation. If you want to try it, just run pip install sql-compare.

We already have some ideas for improving the library. For example, we would like to create a function to retrieve all the differences between two schemas so we can display them in the test result whenever they fail. The difflib library isn't relevant anymore as it shows every difference, including the order of the columns.

If you have any suggestions, you can submit an issue on the GitHub repository. Or, even better, submit a pull request!