Data Anonymization with PostgreSQL and SQLAlchemy

Developers face a unique challenge in the era of stringent data privacy laws like GDPR and SOC2 compliance. This article delves into how developers can access necessary data without compromising user privacy, specifically focusing on PostgreSQL databases.

Developers face a unique challenge in the era of stringent data privacy laws like GDPR and SOC2 compliance. They often need access to production databases to troubleshoot issues, but direct querying of these databases can lead to security issues and potential privacy breaches.

The solution? Data anonymization. This article delves into how developers can access necessary data without compromising user privacy, specifically focusing on PostgreSQL databases.

Understanding the Need for Data Anonymization

When it comes to debugging and improving applications, developers frequently require access to real-time, operational data. However, this need for access clashes with the legal and ethical obligation to protect user privacy.

Let me be clear:

You cannot simply dump your production database on your developer's laptop.

This is where data anonymization comes into play. It's a process that masks or alters personal data so that individuals cannot be readily identified, thus ensuring compliance with data protection regulations.

Leveraging PostgreSQL with pg_anonymizer

For teams using PostgreSQL, one practical tool is the pg_anonymizer extension.

This tool is a PostgreSQL extension designed for protecting personal and sensitive data. It adopts a declarative approach, allowing rules for data masking to be defined directly within the table structure. The extension offers three methods to access anonymized data: creating anonymous data dumps, permanently altering data via static masking, and selectively hiding data with dynamic masking. It includes various data randomization and scrambling functions and even allows for custom functions. Additionally, it provides a generalization approach for statistical use, and automatic detection functions to identify columns needing anonymization, making it a comprehensive solution for data privacy in PostgreSQL databases.

Using this tool for the creation of database dumps where sensitive information is either randomized or anonymized ensures developers access crucial data without compromising user privacy.

To effectively implement anonymization, a solid strategy is essential. One such strategy is to integrate data anonymization directly into the database schema definition, which can be achieved using extensions for popular ORM (Object Relational Mapping) tools like SQLAlchemy.

The Role of SQLAlchemy in Data Anonymization

SQLAlchemy, a widely used ORM tool for Python, plays a crucial role in this strategy. By creating an extension for SQLAlchemy, developers can specify anonymization rules directly within the database schema. Each column that contains sensitive information can be tagged with a specific anonymization method, ensuring no data column goes unnoticed.

For instance, a developer can add a new attribute to an SQLAlchemy column definition, specifying the pg_anonymizer method to be applied to that column. This attribute explicitly defines how the data in the column should be anonymized or randomized.

The first step is to create a new dialect for SQLAlchemy. If you're using poetry, you can declare this in your pyproject.toml file:

[tool.poetry.plugins."sqlalchemy.dialects"]

anonymizer = "myapp.database:Anonymizer"The code for the dialect is quite simple:

import sqlalchemy

# This is a fake dialect to be able to annotate column with anonymizer config

class Anonymizer(sqlalchemy.Dialect):

passThis dialect is only used to be able to pass custom keyword arguments to your column. You can now declare your SQLAlchemy ORM column like this:

import sqlalchemy

from sqlalchemy import orm

class Base(orm.DeclarativeBase):

pass

class MyTable(Base):

name: orm.Mapped[str] = orm.mapped_column(

sqlalchemy.String(255),

nullable=False,

anonymizer_config="anon.lorem_ipsum( words := 7 )",

)Passing the anonymizer_config keyword argument to mapped_column allows to specify how the anonymization should be done using pg_anonymiser functions. You can use any value, such as a static string or a custom function.

Once your tables are all set with anonymization directives, we have all the steps to generate our anonymized dump. This is going to be done in multiple steps:

- Generate the anonymization rules SQL file;

- Load the production database dump in a temporary database with the the anonymization rules;

- Use

pg_dump_anonto dump an anonymized version of the database.

Generating Anonymization Rules

Leveraging our custom dialect, we can now generate a special file named postgresql_anonymizer_rules.sql that will be used to anonymize our database content.

import pathlib

import subprocess

HEADERS = """CREATE EXTENSION anon CASCADE;

SELECT anon.init();

"""

class MissingAnonymizedFunction(Exception):

pass

async def gen_postgresql_anonymized_rules() -> None:

rules = HEADERS

for table in Base.metadata.sorted_tables:

for col in table.c:

if "anonymizer_config" not in col.dialect_kwargs:

raise MissingAnonymizedFunction(

f"{table.name}.{col.name} does not have anonymizer config yet")

anonymizer_config = col.dialect_kwargs["anonymizer_config"]

if anonymizer_config is None:

continue

rules += f"SECURITY LABEL FOR anon ON COLUMN {table.name}.{col.name} IS 'MASKED WITH VALUE {anonymizer_config}';\n"

with open("postgresql_anonymizer_rules.sql", "w") as f:

f.write(rules)Running this code will generate the file that we can then use for the next step: loading it with the actual data.

Loading PostgreSQL Data

There are multiple ways of dumping your production data, but using a simple pg_dump command should be enough:

pg_dump | cat - rules.sql > dump.sqlThe final dump.sql will contain the whole database dump with the security rules loaded at the end. You can now load this dump into a database to extract an anonymized version of the data.

Practical Implementation: Special pg_dump Command

With the rules in place, the next step involves creating an anonymized version of the production database. The pg_anonymizer extension provides a special pg_dump_anon command, which is used to generate a database dump. This dump is devoid of any sensitive customer data, making it safe for developers to use for debugging and development purposes.

Looking Towards the Future: Integration with AWS

As cloud services continue to dominate the tech landscape, the future looks promising for more integrated solutions. One such anticipated development is AWS RDS's native support for the pg_anonymizer extension. This support could open up new possibilities, such as connecting to production databases using a special account that provides a read-only, anonymized view of the data.

This level of integration would significantly simplify the process of data anonymization, making it more seamless for developers to access the data they need while rigorously adhering to privacy standards.

Conclusion: Balancing Access with Privacy

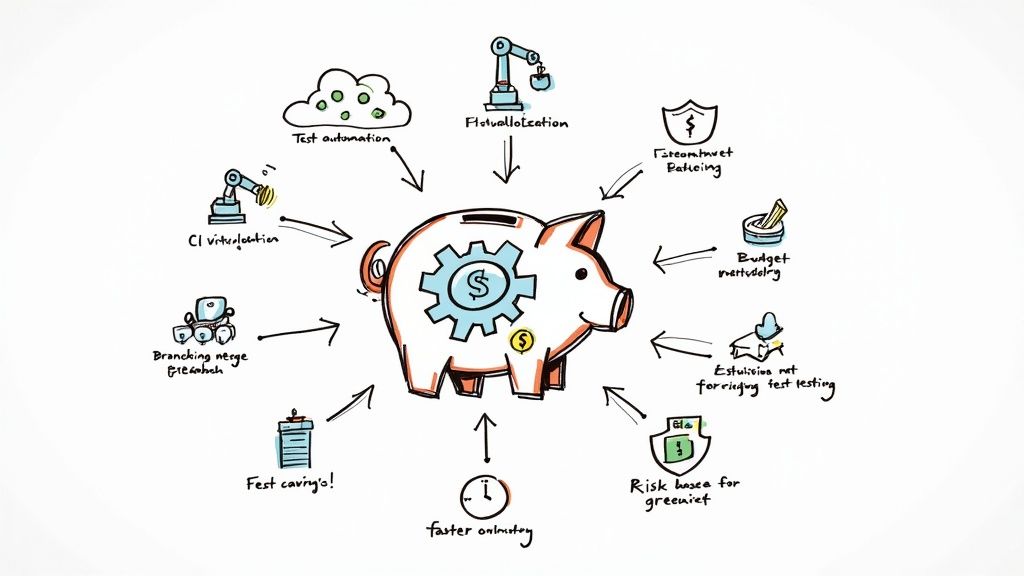

In conclusion, data anonymization is not just a compliance requirement; it's a necessary practice in today's privacy-conscious world. By leveraging tools like pg_anonymizer and integrating them into the development workflow through ORM extensions and CI scripts, organizations can strike a balance between giving developers access to the data they need and ensuring user privacy.

As technology evolves, we can expect these processes to become more streamlined, further bridging the gap between operational necessity and ethical responsibility. The SQLAlchemy plugin code, included here, serves as a starting point for organizations looking to implement these practices in their PostgreSQL environments.