What Is a Flaky Test: A Complete Guide to Software Testing's Most Unpredictable Challenge

Master the art of handling flaky tests with battle-tested strategies from industry experts. Learn how to identify, fix, and prevent unstable tests from derailing your development process.

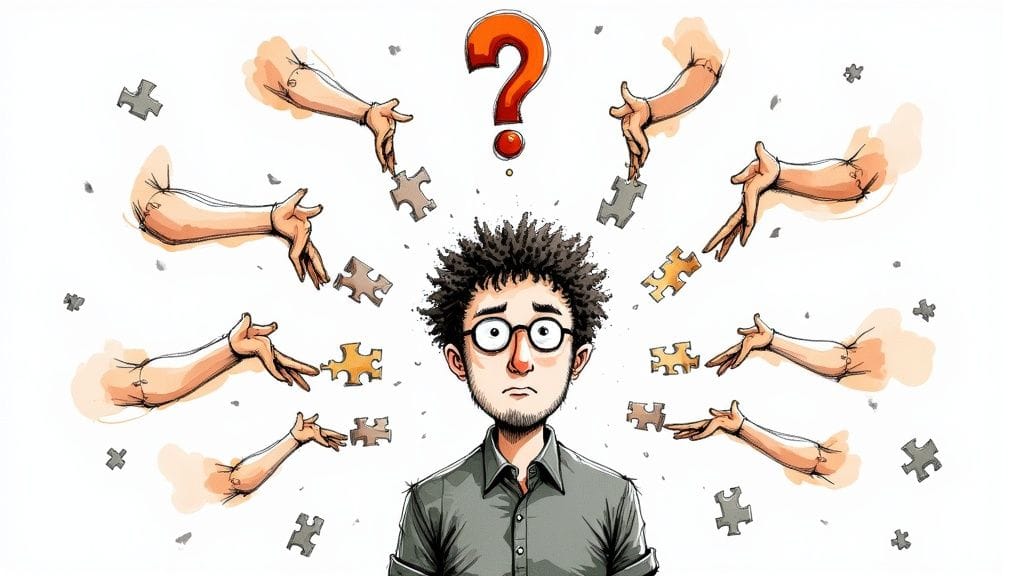

Software teams of all sizes face a persistent challenge with flaky tests: unreliable tests that pass and fail inconsistently without any code changes. Even Google grapples with this issue, reporting that almost 16% of its tests show some level of flakiness. To address this problem effectively, teams need to understand how these unpredictable tests affect their development process.

The Erosion of Trust and Productivity

When tests fail randomly, developers start doubting the entire testing process. This leads to dangerous workarounds, like repeatedly running failed tests until they pass - essentially making the tests meaningless as quality checks. Real bugs can slip through unnoticed while developers waste hours rerunning tests that should be reliable indicators of code health. The constant interruptions from investigating false failures drain team productivity and push back release schedules.

The Hidden Costs of Flaky Tests

Beyond the obvious time waste, flaky tests create deeper problems for development teams. The constant stream of false alarms breeds frustration and burnout as engineers spend their days chasing phantom issues instead of building features. More critically, these unreliable tests can mask actual bugs, making it nearly impossible to spot real problems amidst the noise. Over time, this erodes code quality and creates technical debt that becomes harder to address.

Identifying the Warning Signs

Teams need to watch for key indicators that signal test flakiness is becoming a serious issue. While random test failures are the most visible sign, other subtle warnings often emerge first. Tests that only break in certain environments or at specific times point to poorly controlled external dependencies. Another red flag is when test suite runtime keeps growing because developers have to repeatedly rerun failed tests to get a clean build.

Categorizing Flaky Tests for Better Understanding

Flaky tests generally fall into two main categories that help guide troubleshooting. Order-dependent tests fail or pass based on the sequence they run in, usually due to shared test state or dependencies. Non-order-dependent tests fail randomly regardless of execution order - these might be affected by external factors like network issues or contain inherently non-deterministic logic.

Understanding these distinct types of flaky tests reveals both the complexity of the problem and what's needed to solve it. By recognizing the true impact of flaky tests and implementing focused detection and fix strategies, teams can rebuild confidence in their test suite and deliver more reliable software. The key is taking a systematic approach to identify, categorize and eliminate sources of flakiness before they undermine the entire testing process.

Breaking Down the Types of Test Flakiness

Test flakiness is more complex than simple inconsistent behavior. Just as doctors analyze symptoms to properly treat illnesses, development teams need to carefully examine and classify different types of flaky tests to effectively fix them. By breaking down the patterns and root causes, we can build testing processes that deliver reliable results.

Order-Dependent Tests: The Domino Effect

Like falling dominoes that rely on a specific sequence, order-dependent tests pass or fail based on how they are run. These tests often share state or resources, creating dependencies between them. For example, if one test modifies a database record that other tests use, those later tests may fail unpredictably. While these tests might work perfectly in isolation, they become unstable when run as part of a larger test suite. The key to fixing order-dependent tests is isolation - ensuring each test operates independently in its own environment.
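The isolation idea can be sketched with Python's built-in `unittest` module. This is a minimal illustration, not a prescribed setup: `BASELINE_USERS` and `UserStoreTests` are hypothetical names, and the dictionary stands in for a shared database table.

```python
import copy
import unittest

# Hypothetical baseline data standing in for a shared database table.
BASELINE_USERS = {"alice": {"active": True}}


class UserStoreTests(unittest.TestCase):
    def setUp(self):
        # Each test receives its own deep copy, so a mutation in one test
        # cannot leak into another regardless of execution order.
        self.users = copy.deepcopy(BASELINE_USERS)

    def test_deactivate_user(self):
        self.users["alice"]["active"] = False
        self.assertFalse(self.users["alice"]["active"])

    def test_user_starts_active(self):
        # Passes whether it runs before or after test_deactivate_user.
        self.assertTrue(self.users["alice"]["active"])
```

If both tests mutated `BASELINE_USERS` directly, the second test would pass or fail depending on which one ran first. Rebuilding the state in `setUp` removes that dependency.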

Non-Deterministic Tests: The Wildcard

Non-deterministic tests can pass or fail on identical code with no obvious trigger, which makes them especially difficult to debug. They fall into two main categories, depending on whether execution order also influences the outcome:

Non-Deterministic, Order-Independent Tests (NDOI)

These tests behave erratically without any clear pattern. External factors like network issues or resource constraints often trigger the failures. A test calling a third-party API that occasionally times out is a classic example. The test code itself may also introduce randomness through timing dependencies or random number generators. Finding the source requires carefully examining both external dependencies and potential race conditions in the test implementation.
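When the randomness comes from the test or the code under test, one common remedy is to pass in a seeded generator so every run sees the same values. The sketch below assumes a hypothetical `pick_discount` function that accepts a `random.Random` instance; it illustrates the technique rather than any particular codebase.

```python
import random


def pick_discount(rng: random.Random) -> int:
    """Hypothetical code under test: picks a discount percentage."""
    return rng.choice([5, 10, 15, 20])


def test_pick_discount_is_reproducible():
    # Two generators with the same seed produce the same sequence, so the
    # test sees identical "random" values on every run.
    first = pick_discount(random.Random(1234))
    second = pick_discount(random.Random(1234))
    assert first == second
    assert first in (5, 10, 15, 20)
```

Injecting the generator, instead of calling the module-level `random` functions, also keeps one test's random draws from shifting the sequence another test receives.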

Non-Deterministic, Order-Dependent Tests (NDOD)

These tests combine the worst of both worlds - they can fail randomly and their failure rate changes based on execution order. Consider a test that needs a shared resource that other processes sometimes lock. The timing of the lock combined with test sequencing creates complex failure patterns. Fixing these tests demands a two-pronged approach: resolving resource conflicts while also addressing the underlying non-deterministic behavior.

The Importance of Categorization

Taking time to properly categorize flaky tests gives teams vital information about what's causing instability. This knowledge helps prioritize fixes and assign the right people to address each issue. Much like how contractors use different approaches for plumbing versus electrical work, each type of flaky test needs its own targeted solution. Understanding these distinctions is essential for building reliable tests that help teams ship quality software.

Uncovering Hidden Causes of Test Instability

Now that we've covered different types of flaky tests, let's examine what makes tests become unstable in the first place. Getting to the root causes requires moving beyond just noticing erratic test behavior to investigating the specific conditions that trigger failures. Understanding these underlying issues is key to preventing and fixing flaky tests effectively.

Infrastructure Issues: The Unseen Culprits

Many test failures stem from problems in the testing infrastructure itself. Tests that depend on external services can fail randomly due to network delays. Limited system resources like memory or processing power can also cause tests to behave unpredictably. A common scenario is when tests pass on powerful continuous integration servers but fail on developers' less capable local machines. This shows why having consistent, well-maintained test environments matters so much.

Test Environment Inconsistencies: A Breeding Ground for Flakiness

When test environments differ, flaky tests often follow. A test might work perfectly in staging but repeatedly fail in production because of different database setups, operating systems, or library versions. These variations make it hard to find the real problem. To avoid this, teams need to keep environments as similar as possible throughout development.

Asynchronous Operations and Timing Issues: The Race Against Time

Tests involving background processes or network requests are especially prone to flakiness. When a test expects an async operation to finish within a certain time, even small performance changes can cause failures. For example, if a test checks a database too soon after triggering a background update, it might fail because the update hasn't completed yet. Good async handling and wait mechanisms help prevent these timing problems.
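A wait mechanism of this kind can be as simple as polling for the expected outcome with an upper time limit. The following is a sketch under assumptions: `wait_until` is a hypothetical helper, and the background thread simulates an update whose duration the test cannot know in advance.

```python
import threading
import time


def wait_until(condition, timeout=2.0, interval=0.01):
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    return condition()  # one final check at the deadline


def test_background_update_completes():
    record = {"status": "pending"}

    def background_update():
        time.sleep(0.05)  # simulated work of unknown duration
        record["status"] = "done"

    worker = threading.Thread(target=background_update)
    worker.start()
    # Wait for the observable outcome instead of sleeping a fixed amount.
    assert wait_until(lambda: record["status"] == "done")
    worker.join()
```

Compared with a fixed `time.sleep`, polling returns as soon as the condition holds, so the timeout can be generous without slowing down the passing case.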

Shared State and Resource Conflicts: The Battle for Resources

Problems arise when tests interfere with each other by sharing state or competing for resources. Two tests modifying the same database record at once might succeed or fail based on which runs first. Similarly, tests can fail if they run out of available resources like file handles or network connections. Keeping tests isolated and managing resources carefully prevents these conflicts.
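For file-based resources, one way to avoid these conflicts is to give each test a private scratch directory. The example below is a sketch: `write_report` is a hypothetical function under test, and `tempfile.TemporaryDirectory` from the standard library supplies the unique, self-cleaning directory.

```python
import tempfile
from pathlib import Path


def write_report(directory: Path, body: str) -> Path:
    """Hypothetical code under test: writes a report file into `directory`."""
    path = directory / "report.txt"
    path.write_text(body)
    return path


def test_write_report_uses_private_directory():
    # TemporaryDirectory creates a unique directory and removes it on exit,
    # so concurrent tests never write to the same path.
    with tempfile.TemporaryDirectory() as tmp:
        path = write_report(Path(tmp), "ok")
        assert path.read_text() == "ok"
    # Nothing is left behind for a later test to trip over.
    assert not path.exists()
```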

Test Code Issues: Internal Vulnerabilities

Sometimes flakiness comes from problems in the test code itself. Tests with hidden dependencies or wrong assumptions about the system can give unreliable results. One example is when a test assumes items in a list will always appear in a specific order. Writing clear, focused test code that avoids these assumptions is essential.
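The list-order assumption is easy to illustrate. In this sketch, `fetch_tags` is a hypothetical function that builds its result from a set, whose iteration order Python does not guarantee; the test compares contents rather than positions.

```python
from collections import Counter


def fetch_tags():
    """Hypothetical code under test; a set has no guaranteed iteration order."""
    return list({"db", "api", "ui"})


def test_tags_ignore_order():
    tags = fetch_tags()
    # Fragile: assert tags == ["api", "db", "ui"]
    # Robust: compare sorted copies, or Counters if duplicates matter.
    assert sorted(tags) == ["api", "db", "ui"]
    assert Counter(tags) == Counter(["ui", "api", "db"])
```

If the order is actually part of the contract, the better fix is in the code under test: sort the result explicitly so the test can assert on it.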

Intermittent External Dependencies: The Unreliable Third Party

Outside services and APIs that occasionally fail can make otherwise good tests flaky. Even with perfect test code, an unreliable external dependency leads to random failures. That's why it often helps to mock external dependencies during testing - it lets you control their behavior and get consistent results. This approach leads to more stable tests that aren't affected by third-party issues.
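As a rough illustration of mocking, the sketch below replaces a third-party client with a stand-in built by `unittest.mock.create_autospec`. `WeatherClient` and `describe_weather` are hypothetical names invented for this example.

```python
from unittest import mock


class WeatherClient:
    """Hypothetical client for a third-party API."""

    def current_temperature(self, city: str) -> float:
        raise ConnectionError("real network call; unavailable in tests")


def describe_weather(client: WeatherClient, city: str) -> str:
    temperature = client.current_temperature(city)
    return "warm" if temperature >= 20 else "cold"


def test_describe_weather_with_mocked_client():
    # create_autospec builds a stand-in that has the same methods and
    # signatures as WeatherClient but never touches the network.
    client = mock.create_autospec(WeatherClient, instance=True)
    client.current_temperature.return_value = 25.0

    assert describe_weather(client, "Paris") == "warm"
    client.current_temperature.assert_called_once_with("Paris")
```

The trade-off is that a mock only verifies your assumptions about the dependency. A smaller set of separate integration tests against the real service is still useful for catching contract changes.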

Understanding these common causes helps teams take steps to prevent flaky tests before they become problems. This proactive approach saves time and builds confidence in the testing process, ultimately leading to better software quality.

Building Your Flaky Test Detection Strategy

Finding and managing flaky tests requires more than just noticing occasional test failures. Much like a detective uncovering clues, you need a methodical approach to spot, verify, and keep track of tests that behave unpredictably. Without a clear strategy, these rogue tests can slowly erode confidence in your entire test suite.

Implementing a Multi-Pronged Approach

Successfully detecting flaky tests requires combining several key techniques:

- Automated Analysis Tools: You can pinpoint inconsistent tests by using tools that scan test execution history for failure patterns. For example, Datadog Test Visibility automatically flags erratic tests in CI/CD pipelines by analyzing their behavior over time. These tools give you concrete data to investigate further.

- Strategic Test Running Patterns: Run tests in varied orders and environments to expose issues with test dependencies. Even tests that usually pass should be rerun regularly to catch hidden instability. Google, for instance, found some level of flakiness in about 16% of its tests and relies on reruns to identify and manage them.

- Effective Monitoring Systems: Track test results consistently, including basic metrics like pass/fail rates and how long tests take to run. Watching these numbers over time helps spot emerging problems before they become major headaches.
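The core of history-based detection can be sketched in a few lines: a test that both passed and failed on the same commit is a flakiness candidate, because the code did not change between runs. The tuple format and the `find_flaky_tests` name are assumptions made for this example, not the export format of any specific tool.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return tests that both passed and failed on the same commit.

    `runs` is an iterable of (test_name, commit_sha, passed) tuples, a
    hypothetical export format for CI history.
    """
    outcomes = defaultdict(set)
    for test_name, commit_sha, passed in runs:
        outcomes[(test_name, commit_sha)].add(passed)
    # Mixed outcomes on identical code suggest flakiness, not a regression.
    return sorted({name for (name, _), seen in outcomes.items() if len(seen) == 2})


history = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", False),
    ("test_checkout", "abc123", False),
    ("test_checkout", "abc123", False),
    ("test_search", "abc123", True),
]
print(find_flaky_tests(history))  # ['test_login']
```

Here `test_checkout` is not reported: it failed consistently on the same commit, which points to a genuine bug instead of a flaky test.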

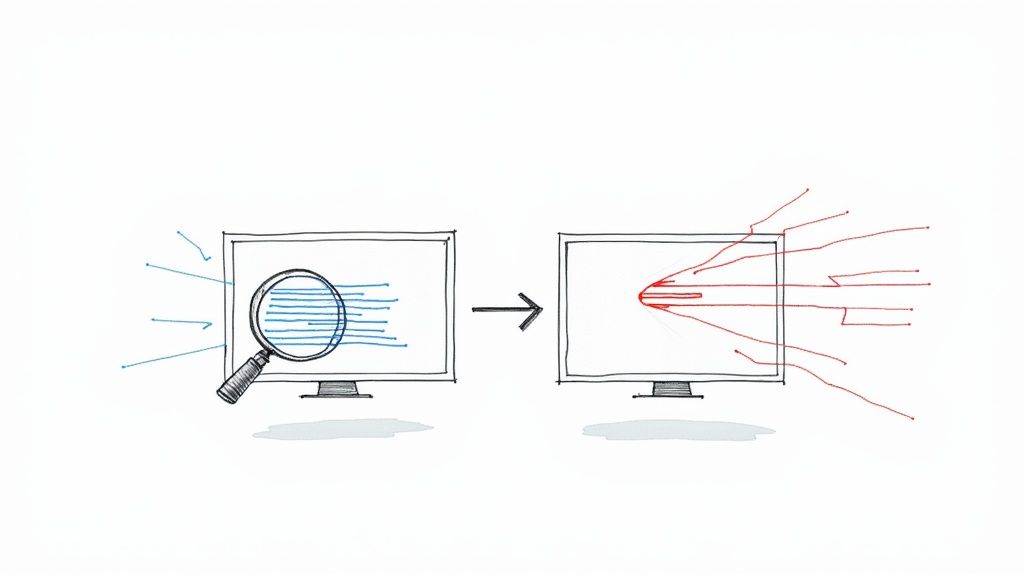

Differentiating Genuine Failures from Flaky Behavior

One of the trickiest parts of managing flaky tests is telling the difference between real bugs and temperamental tests. You don't want to waste time chasing false alarms. A simple but effective approach is to rerun a failed test several times: if it fails every time, you likely have a real bug. If it passes on some runs and fails on others, you're probably dealing with a flaky test.
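The rerun heuristic might look like the following sketch. `classify_failure` is a hypothetical helper, and the two sample tests are contrived so that their behavior is predictable.

```python
def classify_failure(test_fn, reruns=5):
    """Rerun a failed test and label the result.

    Returns "consistent failure" if every rerun fails, otherwise "flaky".
    """
    passes = 0
    for _ in range(reruns):
        try:
            test_fn()
            passes += 1
        except AssertionError:
            pass
    return "consistent failure" if passes == 0 else "flaky"


def always_fails():
    assert 1 + 1 == 3


calls = {"count": 0}


def fails_every_other_call():
    # Contrived stand-in for an intermittent failure.
    calls["count"] += 1
    assert calls["count"] % 2 == 0


print(classify_failure(always_fails))            # consistent failure
print(classify_failure(fails_every_other_call))  # flaky
```

A test that fails rarely can still fail on every one of a handful of reruns, so treat "consistent failure" as strong evidence of a real bug, not as proof.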

Documentation and Tracking

Once you identify flaky tests, you need a clear system to document and track them. This helps teams understand how widespread the problem is, decide which tests to fix first, and measure progress. Your documentation should cover what the test does, how it misbehaves, potential causes, and notes about ongoing fixes. Test management tools or even basic spreadsheets can help organize this information consistently.

Building a solid detection strategy takes work upfront but saves countless hours down the road. When teams can quickly spot and address flaky tests, they spend less time dealing with unreliable results and more time improving their code. By putting these approaches into practice, you'll build more trust in your test suite and ship better software.

Measuring the True Cost of Flaky Tests

Now that we understand what causes flaky tests, we need to look at their real impact on development teams. While the frustration they cause is obvious, flaky tests create concrete costs that affect productivity, quality, and financial results. Teams need to recognize that fixing flaky tests isn't just about making testing better - it's about using resources wisely and shipping better software.

Quantifying the Impact on Productivity

The most obvious cost comes from developers spending precious time investigating test failures that aren't real issues. Consider a test suite where just 1% of tests are flaky. That small percentage becomes a big problem when you're running thousands of tests multiple times per day. Teams can waste several hours each week chasing down these phantom bugs instead of building features or fixing actual problems. This directly slows down development and pushes back release dates.
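A back-of-the-envelope calculation shows why a small share of flaky tests hurts so much. The figures below are assumptions chosen for illustration (2,000 tests, 1% of them flaky, each flaky test failing spuriously 2% of the time, failures independent of each other); the formula is the standard probability of at least one event, 1 - (1 - p)^n.

```python
def chance_of_spurious_failure(flaky_tests: int, failure_rate: float) -> float:
    """Probability that at least one flaky test fails in a single suite run,
    assuming each flaky test fails independently with `failure_rate`."""
    return 1 - (1 - failure_rate) ** flaky_tests


# Assumed figures: 2,000 tests, 1% of them flaky, each failing 2% of the time.
flaky_tests = 2000 // 100
per_run = chance_of_spurious_failure(flaky_tests, 0.02)
print(f"{per_run:.0%} of suite runs hit at least one spurious failure")  # 33%
```

Under these assumptions roughly one suite run in three goes red for no real reason, even though each individual flaky test passes 98% of the time.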

The Ripple Effect on Product Quality

When tests become unreliable, developers start losing faith in the entire testing process. They begin ignoring test failures, assuming they're just more flaky results - and that's when real bugs slip through into production. The constant cycle of investigating and rerunning tests also wears people down over time. Developer burnout sets in, morale drops, and product quality suffers as a result.

Measuring and Communicating the Cost

To get buy-in for fixing flaky tests, you need more than complaints - you need data that shows their true impact. Track specific metrics like how often tests fail intermittently, how much time gets spent investigating false alarms, and how these issues affect your release schedule. These numbers tell the real story.

Practical Measurement Strategies

- Time Tracking: Have developers log time spent dealing with flaky tests, whether in a simple shared spreadsheet or your project management tool

- Flaky Test Dashboards: Create visual dashboards showing trends in flakiness rates, failure counts, and which tests are causing problems. Datadog Test Visibility can help automate this.

- Cost Calculation: Convert the time spent into actual dollar amounts based on developer salaries. This gives stakeholders a clear picture of the financial impact.
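The cost calculation itself is simple multiplication. Every number in this sketch is a placeholder (team size, hours lost, hourly rate, working weeks); substitute the values from your own time tracking.

```python
def flaky_test_cost(developers, hours_per_week, hourly_rate, weeks=1):
    """Cost of time lost to flaky tests over `weeks` working weeks."""
    return developers * hours_per_week * hourly_rate * weeks


# Assumed figures for illustration only.
weekly = flaky_test_cost(developers=10, hours_per_week=2, hourly_rate=75)
yearly = flaky_test_cost(developers=10, hours_per_week=2, hourly_rate=75, weeks=48)
print(f"weekly: ${weekly:,}  yearly: ${yearly:,}")  # weekly: $1,500  yearly: $72,000
```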

Prioritizing Fixes Based on Business Impact

Some flaky tests matter more than others. A flaky test in your checkout flow needs immediate attention, while one in a rarely-used feature can wait. By focusing first on tests that affect core business functions, you'll get the most value from your fix efforts.

This focused approach helps teams use their resources effectively and get the best results from stabilizing their tests. When you understand what flaky tests really cost and tackle them strategically, you can make your development process more efficient, improve your software quality, and deliver better business outcomes.

Implementing Battle-Tested Solutions

Creating reliable test suites is essential for successful software development. Once you grasp what flaky tests are, their impact on your team, and how to spot them, you need practical solutions to reduce their disruption. This requires both fixing current unstable tests and putting systems in place to prevent new ones. With the right approach, you can make your tests more dependable, boost your team's confidence, and speed up development.

Quick Fixes for Existing Flaky Tests

When dealing with current flaky tests, start with immediate solutions that can provide quick stability. While these may be temporary fixes, they help restore reliability as you investigate deeper issues.

- Increase Timeouts: Tests sometimes fail randomly due to small performance variations. Adding longer timeouts gives tests more time to finish, especially for network requests and async operations. Just be careful not to set them too high, which could hide real performance problems.

- Retry Mechanisms: Adding retry logic helps handle temporary failures. If a test fails once, trying it again a few times can help it pass if the issue was temporary. This works well for tests that depend on external systems.

- Isolate Tests: For tests that fail based on run order, proper isolation is key. Reset the test environment between runs and avoid shared state that could affect later tests. Each test should work independently as if it were running alone.
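Of the quick fixes above, retry logic is the easiest to show in code. The decorator below is a minimal sketch, not the API of any test framework: `retry` and `fetch_status` are hypothetical names, and the simulated dependency fails twice before succeeding.

```python
import functools
import time


def retry(times=3, delay=0.0, exceptions=(AssertionError,)):
    """Rerun the decorated function up to `times` attempts in total."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            for attempt in range(1, times + 1):
                try:
                    return fn(*args, **kwargs)
                except exceptions:
                    if attempt == times:
                        raise  # out of attempts: surface the real failure
                    time.sleep(delay * attempt)  # linear backoff
        return wrapper
    return decorator


attempts = {"count": 0}


@retry(times=3, exceptions=(ConnectionError,))
def fetch_status():
    # Simulated dependency that fails twice, then succeeds.
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary outage")
    return "ok"
```

Limiting the retried exceptions to the ones you expect to be transient matters: retrying on every exception would also paper over genuine regressions. It also helps to log each retry so the underlying flakiness stays visible.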

Long-Term Architectural Solutions for Preventing Test Flakiness

Fixing the root causes requires careful planning. Good architectural solutions help prevent future instability and create more reliable test suites.

- Stable Test Environments: Inconsistent environments often cause flaky tests. Build reliable infrastructure and automation to ensure identical setups across development, testing, and production. For example, Docker helps create consistent environments.

- Mock External Dependencies: Using external services makes tests vulnerable to network problems and outages. Mocking these dependencies during testing gives you more control and predictability. You can also replace a remote database with a local or in-memory substitute to avoid network issues.

- Improved Test Design: Often the test code itself causes flakiness. Review and rewrite tests that show random behavior. This may mean removing hidden dependencies, handling async code better, or making tests more realistic.

- Robust Continuous Integration: CI pipelines help catch flaky tests early. Set up your CI to run tests in different environments and orders to find dependencies and inconsistencies. Datadog provides tools to analyze test history and find flaky tests in CI/CD pipelines. Google faces flakiness in about 16% of their tests and uses advanced CI systems to manage this.

Building a Culture of Test Stability

Creating a culture focused on test stability is crucial. Give developers the tools and time to fix flaky tests quickly, and recognize the importance of reliable testing.

- Dedicated Time for Fixes: Set aside specific time for developers to fix flaky tests rather than treating it as optional. This shows test stability matters.

- Clear Ownership: Give specific developers responsibility for tests to ensure quick fixes. When developers own their tests' stability, they care more about fixing issues.

- Tracking and Reporting: Create a system to track and report flaky tests so teams can monitor progress, spot patterns, and measure how well fixes work.

Using these approaches, from quick solutions to long-term changes, will make your tests more reliable and improve development. Flaky tests waste time and risk code quality. By tackling flakiness head-on, your team can work more efficiently, trust the test suite more, and deliver better software.
